Using Flair at Hugging Face

Flair is a very simple framework for state-of-the-art NLP, developed by Humboldt University of Berlin and friends.

Exploring Flair in the Hub

You can find Flair models by using the filter on the left of the models page.

All models on the Hub come with these useful features:

- An automatically generated model card with a brief description.

- An interactive widget you can use to play with the model directly in the browser.

- An Inference API that allows you to make inference requests.

Installation

To get started, you can follow the Flair installation guide. You can also use the following one-line install through pip:

$ pip install -U flair

Using existing models

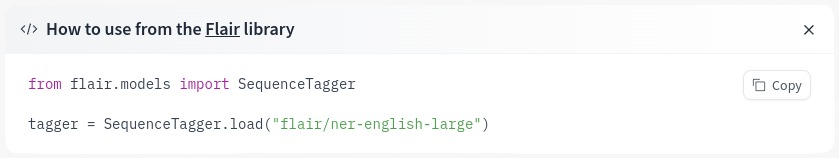

All Flair models can be loaded directly from the Hub:

from flair.data import Sentence
from flair.models import SequenceTagger

# load tagger
tagger = SequenceTagger.load("flair/ner-multi")

Once loaded, you can use predict() to perform inference:

sentence = Sentence("George Washington ging nach Washington.")
tagger.predict(sentence)

# print sentence
print(sentence)

It outputs the following:

Sentence[6]: "George Washington ging nach Washington." → ["George Washington"/PER, "Washington"/LOC]
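Printing the sentence is handy for a quick check, but you will often want the predicted entities as data. The following is a minimal sketch of one way to do that, assuming the tagger writes its predictions under the "ner" label type (as the output above suggests). The helper names extract_entities and format_entities are hypothetical and not part of Flair; only Sentence, SequenceTagger, get_spans() and get_label() come from the library.

```python
def extract_entities(text, model_name="flair/ner-multi"):
    """Return (text, label, score) tuples for each predicted entity span.

    Hypothetical helper, not part of Flair. Assumes predictions are stored
    under the "ner" label type.
    """
    # Imported lazily so format_entities() can be used without Flair installed.
    from flair.data import Sentence
    from flair.models import SequenceTagger

    tagger = SequenceTagger.load(model_name)  # downloads the model on first use
    sentence = Sentence(text)
    tagger.predict(sentence)

    entities = []
    for span in sentence.get_spans("ner"):
        label = span.get_label("ner")
        entities.append((span.text, label.value, label.score))
    return entities


def format_entities(entities):
    """Render entity tuples as one 'text [LABEL] (score)' line each."""
    return "\n".join(
        f"{text} [{label}] ({score:.2f})" for text, label, score in entities
    )


# Example usage (requires Flair and network access for the model download):
# print(format_entities(extract_entities("George Washington ging nach Washington.")))
```

Keeping the formatting step separate from the prediction step makes it easy to reuse the tuples elsewhere, for example to filter by label or confidence score.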

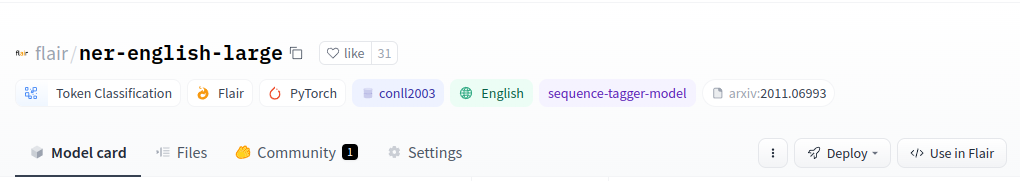

If you want to load a specific Flair model, you can click Use in Flair in the model card and you will be given a working snippet!

Additional resources

- Flair repository

- Flair docs

- Official Flair models on the Hub (mainly trained by @alanakbik and @stefan-it)