Using OpenCLIP at Hugging Face

OpenCLIP is an open-source implementation of OpenAI’s CLIP.

Exploring OpenCLIP on the Hub

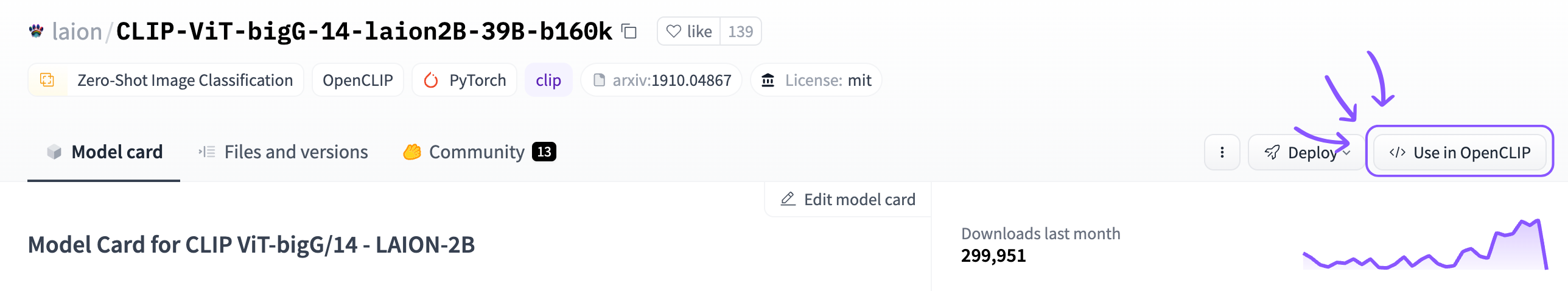

You can find OpenCLIP models by using the filters on the left of the models page.

OpenCLIP models hosted on the Hub have a model card with useful information about each model. Thanks to OpenCLIP's Hugging Face Hub integration, you can load OpenCLIP models with a few lines of code. You can also deploy these models using Inference Endpoints.

Installation

To get started, you can follow the OpenCLIP installation guide. You can also use the following one-line install through pip:

    $ pip install open_clip_torch

Using existing models

All OpenCLIP models can easily be loaded from the Hub:

    import open_clip

    model, preprocess = open_clip.create_model_from_pretrained('hf-hub:laion/CLIP-ViT-g-14-laion2B-s12B-b42K')
    tokenizer = open_clip.get_tokenizer('hf-hub:laion/CLIP-ViT-g-14-laion2B-s12B-b42K')

Once loaded, you can encode the image and text to do zero-shot image classification:

    import requests
    import torch
    from PIL import Image

    # Download a sample image and turn it into a batch of one preprocessed tensor
    url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
    image = Image.open(requests.get(url, stream=True).raw)
    image = preprocess(image).unsqueeze(0)

    # Tokenize the candidate labels
    text = tokenizer(["a diagram", "a dog", "a cat"])

    with torch.no_grad(), torch.cuda.amp.autocast():
        image_features = model.encode_image(image)
        text_features = model.encode_text(text)

        # Normalize so the dot product below is a cosine similarity
        image_features /= image_features.norm(dim=-1, keepdim=True)
        text_features /= text_features.norm(dim=-1, keepdim=True)

        text_probs = (100.0 * image_features @ text_features.T).softmax(dim=-1)

    print("Label probs:", text_probs)

It outputs the probability of each possible class:

    Label probs: tensor([[0.0020, 0.0034, 0.9946]])
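
To see where these probabilities come from, the scoring step can be reproduced without a model. The following is a minimal sketch in plain Python, not OpenCLIP code: it L2-normalizes the embeddings, takes the dot product (a cosine similarity), multiplies by the same factor of 100 used above, and applies a softmax. The helper names (`l2_normalize`, `softmax`, `zero_shot_probs`) and the 3-dimensional toy embeddings are made up for illustration; real embeddings come from `model.encode_image` and `model.encode_text`.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_probs(image_vec, text_vecs, scale=100.0):
    """Return one probability per candidate text for a single image embedding."""
    image_unit = l2_normalize(image_vec)
    sims = []
    for text_vec in text_vecs:
        text_unit = l2_normalize(text_vec)
        sims.append(sum(a * b for a, b in zip(image_unit, text_unit)))
    # Scaling sharpens the softmax so the closest text dominates.
    return softmax([scale * s for s in sims])


# Toy embeddings, invented for this sketch (not produced by any model).
labels = ["a diagram", "a dog", "a cat"]
image_vec = [0.1, 0.2, 0.9]
text_vecs = [[0.9, 0.1, 0.0], [0.2, 0.9, 0.1], [0.1, 0.3, 0.9]]

probs = zero_shot_probs(image_vec, text_vecs)
best = labels[probs.index(max(probs))]
print("Predicted label:", best)
```

With a real model, the same label lookup amounts to taking the index of the largest entry in `text_probs` and using it to index the list of prompts passed to the tokenizer.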

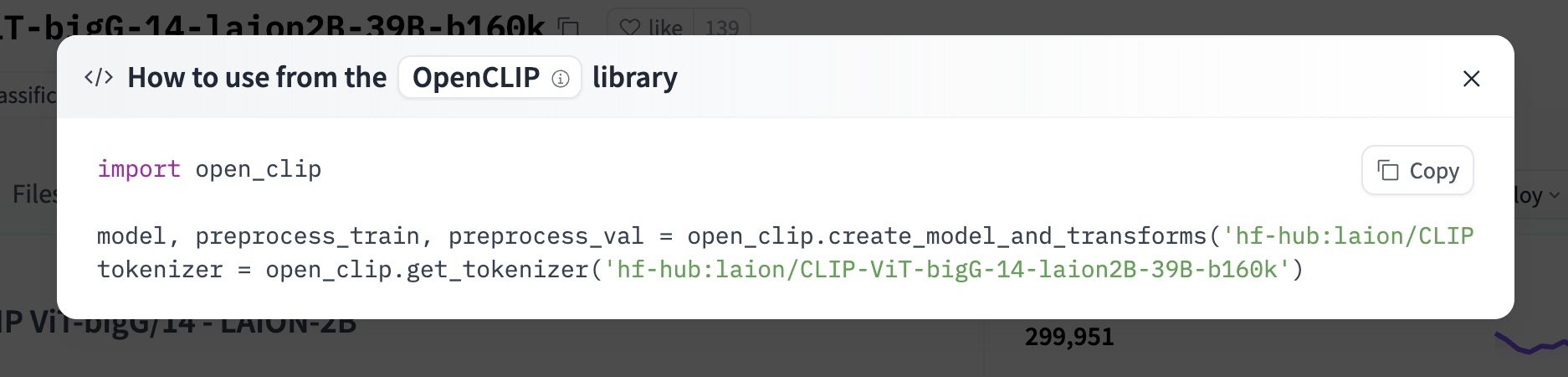

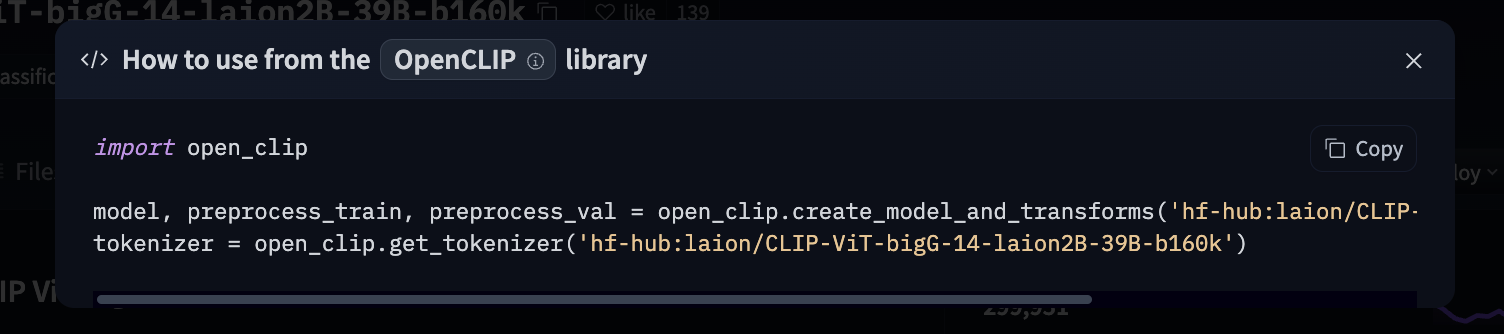

If you want to load a specific OpenCLIP model, you can click Use in OpenCLIP in the model card and you will be given a working snippet!
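
The loading calls on this page identify a model by its Hub repository ID prefixed with `hf-hub:`. As a rough sketch, assuming the model you picked follows the same pattern, a small helper can build that identifier from a repository ID. `hub_model_name` is a hypothetical helper written for this example; it is not part of OpenCLIP.

```python
def hub_model_name(repo_id: str) -> str:
    """Build the 'hf-hub:' identifier for a Hub repository ID (hypothetical helper)."""
    repo_id = repo_id.strip()
    # Expect exactly one slash separating a non-empty owner and model name.
    if repo_id.count("/") != 1 or repo_id.startswith("/") or repo_id.endswith("/"):
        raise ValueError(f"expected 'owner/name', got {repo_id!r}")
    return f"hf-hub:{repo_id}"


name = hub_model_name("laion/CLIP-ViT-g-14-laion2B-s12B-b42K")
print(name)  # hf-hub:laion/CLIP-ViT-g-14-laion2B-s12B-b42K
```

The resulting string is what `open_clip.create_model_from_pretrained` and `open_clip.get_tokenizer` receive in the examples above.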

Additional resources

- OpenCLIP repository

- OpenCLIP docs

- OpenCLIP models in the Hub