Using PaddleNLP at Hugging Face

Leveraging the PaddlePaddle framework, PaddleNLP is an easy-to-use and powerful NLP library with an extensive zoo of pre-trained models, supporting a wide range of NLP tasks from research to industrial applications.

Exploring PaddleNLP in the Hub

You can find PaddleNLP models by using the library filter on the left side of the models page.

All models on the Hub come with the following features:

- An automatically generated model card with a brief description and metadata tags that help with discoverability.

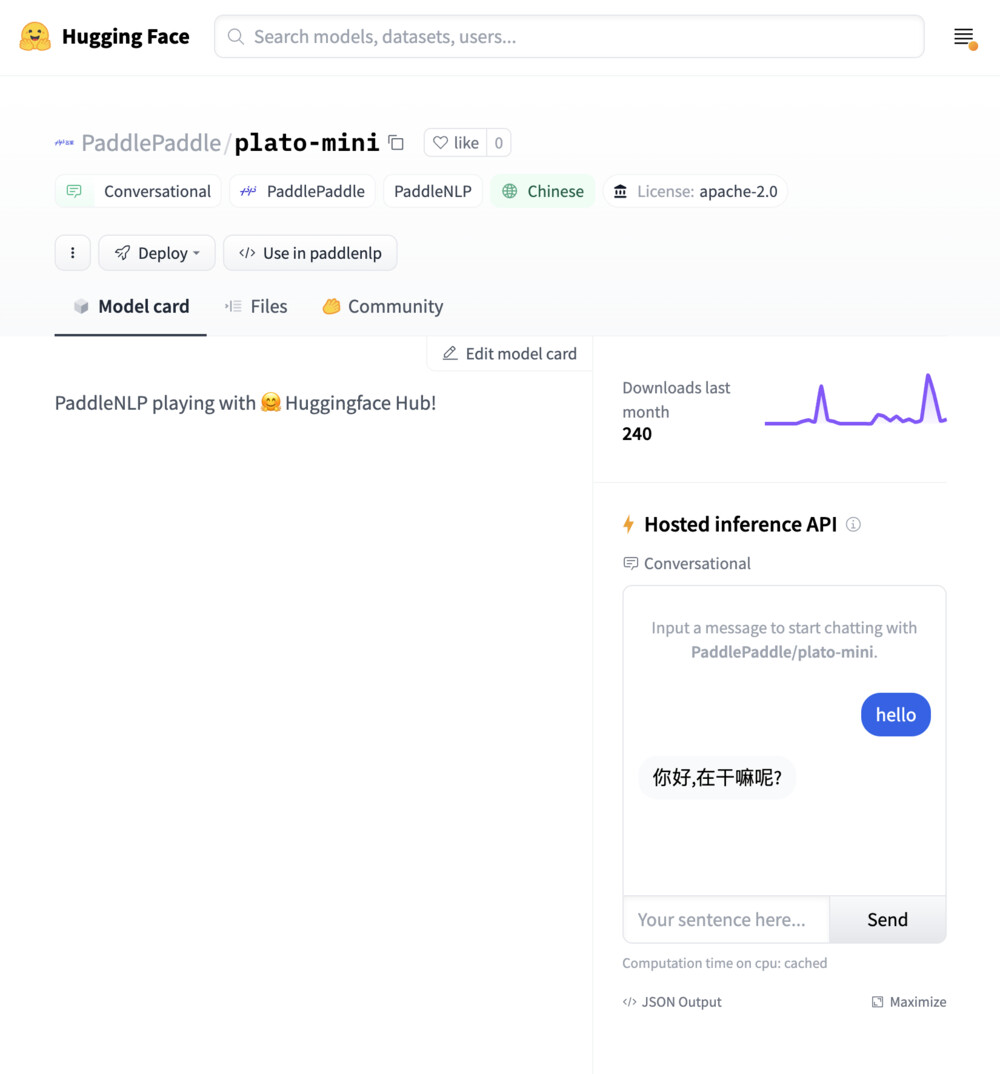

- An interactive widget you can use to try out the model directly in the browser.

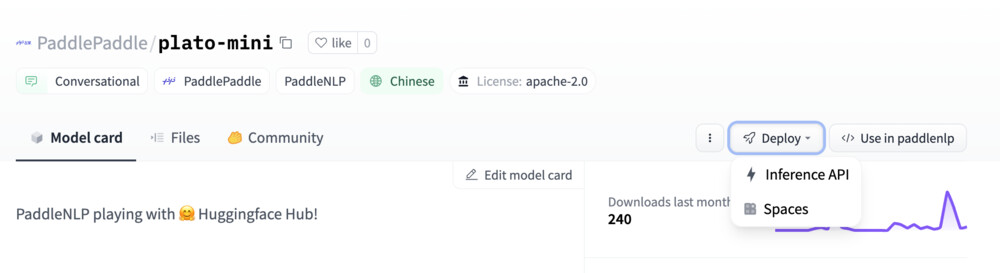

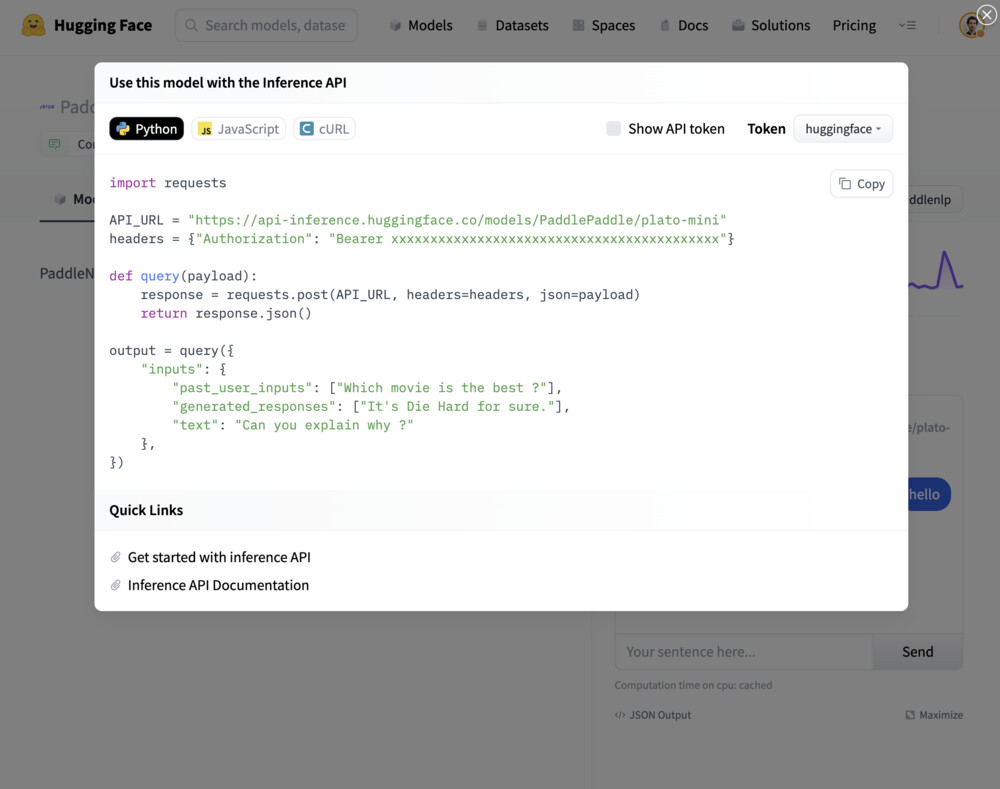

- An Inference API that allows you to make inference requests.

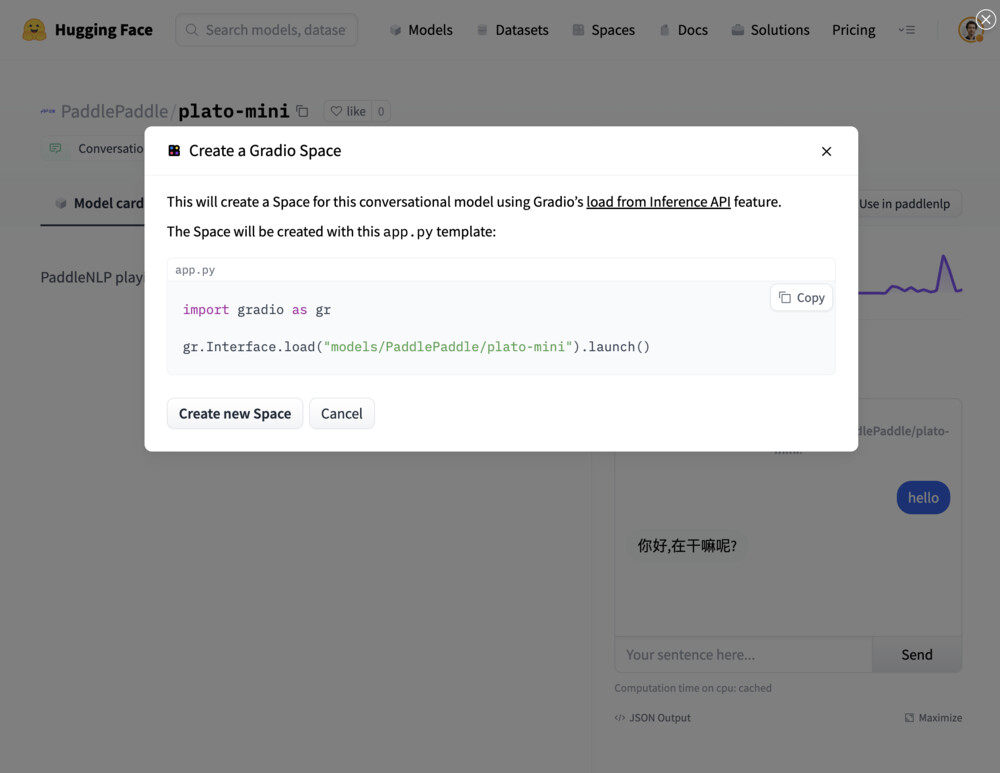

- Easy deployment of your model as a Gradio app on Spaces.
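For a rough idea of what an inference request looks like, the sketch below builds (but does not send) an HTTP POST using only Python's standard library. It assumes the classic serverless Inference API URL pattern `https://api-inference.huggingface.co/models/<repo_id>`, a JSON body of the form `{"inputs": ...}`, and bearer-token authentication; the endpoint may have changed, so check the current Hugging Face documentation before relying on it. `build_inference_request` and the `hf_xxx` token are hypothetical placeholders.

```python
import json
import urllib.request

# Assumption: classic serverless Inference API URL pattern; verify against current docs.
API_URL_TEMPLATE = "https://api-inference.huggingface.co/models/{repo_id}"


def build_inference_request(repo_id: str, text: str, token: str) -> urllib.request.Request:
    """Build, but do not send, a JSON POST request for a model on the Hub."""
    body = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        API_URL_TEMPLATE.format(repo_id=repo_id),
        data=body,
        headers={
            "Authorization": f"Bearer {token}",  # "hf_xxx" below is a placeholder token
            "Content-Type": "application/json",
        },
        method="POST",
    )


# ERNIE 1.0 (zh) is a Chinese model, so the masked input is Chinese text.
req = build_inference_request("PaddlePaddle/ernie-1.0-base-zh", "北京是中[MASK]的首都。", "hf_xxx")
print(req.get_method(), req.full_url)
```

Actually sending it, for example with `urllib.request.urlopen(req)`, requires network access and a valid token.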

Installation

To get started, follow the PaddlePaddle Quick Start guide to install the PaddlePaddle framework for your OS, package manager, and compute platform.
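After installing PaddlePaddle, a quick way to confirm which build is present is to query the installed package metadata. This sketch uses only `importlib.metadata` from the standard library; `find_installed` is a hypothetical helper, and the distribution names `paddlepaddle` (CPU) and `paddlepaddle-gpu` are assumptions based on PaddlePaddle's pip packages.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional, Sequence, Tuple


def find_installed(dist_names: Sequence[str]) -> Optional[Tuple[str, str]]:
    """Return (name, version) of the first installed distribution in dist_names, or None."""
    for name in dist_names:
        try:
            return name, version(name)
        except PackageNotFoundError:
            # Not installed under this name; try the next candidate.
            continue
    return None


# Assumption: PaddlePaddle is distributed on pip as "paddlepaddle" or "paddlepaddle-gpu".
print(find_installed(["paddlepaddle", "paddlepaddle-gpu"]))
```

A `None` result means neither distribution name was found in the current environment.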

`paddlenlp` offers a quick one-line install through pip:

```bash
pip install -U paddlenlp
```

Using existing models

Similar to `transformers` models, the `paddlenlp` library provides a simple one-liner to load models from the Hugging Face Hub by setting `from_hf_hub=True`. Depending on how you want to use them, you can use the high-level API through the `Taskflow` function, or you can use `AutoModel` and `AutoTokenizer` for more control.

# Taskflow provides a simple end-to-end capability and a more optimized experience for inference

from paddlenlp.transformers import Taskflow

taskflow = Taskflow("fill-mask", task_path="PaddlePaddle/ernie-1.0-base-zh", from_hf_hub=True)

# If you want more control, you will need to define the tokenizer and model.

from paddlenlp.transformers import AutoTokenizer, AutoModelForMaskedLM

tokenizer = AutoTokenizer.from_pretrained("PaddlePaddle/ernie-1.0-base-zh", from_hf_hub=True)

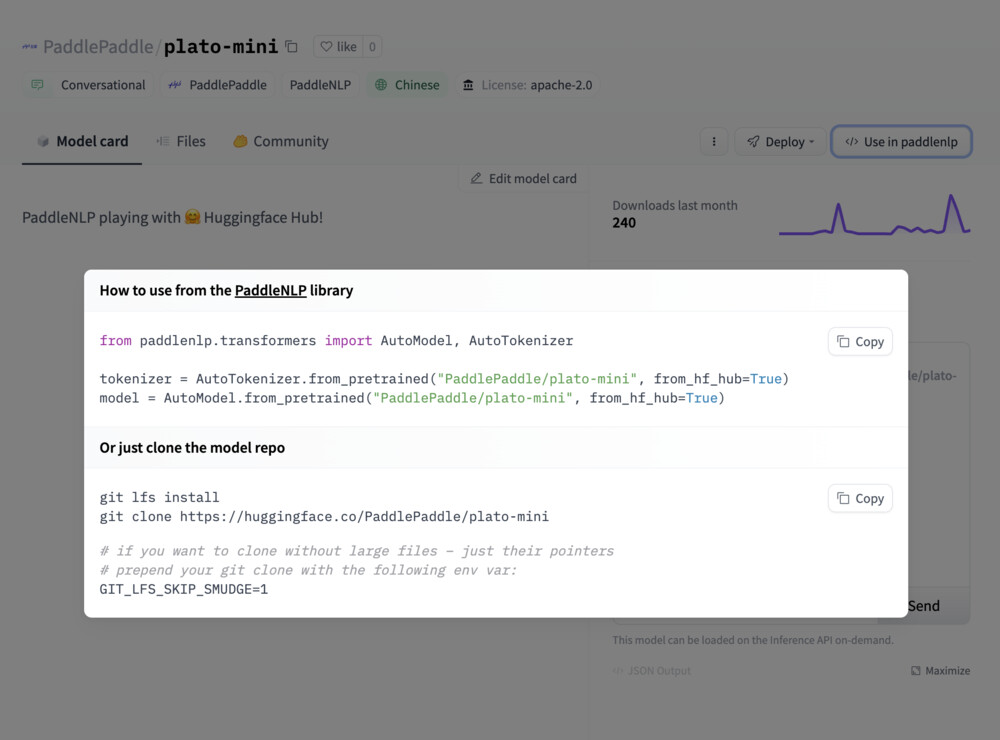

model = AutoModelForMaskedLM.from_pretrained("PaddlePaddle/ernie-1.0-base-zh", from_hf_hub=True)If you want to see how to load a specific model, you can click Use in paddlenlp and you will be given a working snippet that you can load it!
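A fill-mask prediction ends with the model's scores at the `[MASK]` position being turned into probabilities and ranked. The plain-Python sketch below illustrates only that final step; the tiny vocabulary and its scores are invented, `top_k_fill_mask` is a hypothetical helper, and this is not PaddleNLP's Taskflow implementation.

```python
import math


def top_k_fill_mask(scores: dict[str, float], k: int = 3) -> list[tuple[str, float]]:
    """Rank candidate tokens for a single [MASK] position.

    `scores` maps each candidate token to the model's raw score (logit) at the
    masked position. A softmax turns the scores into probabilities, and the
    k most probable tokens are returned, highest first.
    """
    # Subtract the largest logit before exponentiating to avoid overflow.
    max_score = max(scores.values())
    exps = {token: math.exp(s - max_score) for token, s in scores.items()}
    total = sum(exps.values())
    probs = {token: e / total for token, e in exps.items()}
    return sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]


# Invented logits over a tiny hypothetical vocabulary for "北京是中[MASK]的首都。"
toy_scores = {"国": 4.0, "央": 2.5, "心": 1.5, "文": 0.5}
for token, prob in top_k_fill_mask(toy_scores, k=2):
    print(token, round(prob, 3))
```

A real model scores every token in its vocabulary, so the softmax runs over the whole vocabulary rather than four entries.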

Sharing your models

You can share your PaddleNLP models by using the `save_to_hf_hub` method, which is available on all Model and Tokenizer classes.
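The `repo_id` argument takes the form `<namespace>/<repo_name>`, where the namespace is your username or organization. A small pre-check like the hypothetical `split_repo_id` below can catch a leftover placeholder or a malformed ID before any upload is attempted. Its character rules are a simplified assumption, not the Hub's exact validation, and it is not part of PaddleNLP.

```python
import re

# Simplified, assumed rule for each part: starts with a letter or digit, then
# letters, digits, "-", "_" or ".". The Hub applies its own rules, which differ in details.
_PART = re.compile(r"[A-Za-z0-9][A-Za-z0-9._-]*")


def split_repo_id(repo_id: str) -> tuple[str, str]:
    """Split "<namespace>/<repo_name>" into its two parts, raising ValueError if malformed."""
    parts = repo_id.split("/")
    if len(parts) != 2 or not all(_PART.fullmatch(part) for part in parts):
        raise ValueError(f"expected '<namespace>/<repo_name>', got {repo_id!r}")
    namespace, name = parts
    return namespace, name


print(split_repo_id("my-org/ernie-1.0-base-zh-finetuned"))
```

Calling it with the literal placeholder `"<my_org_name>/<my_repo_name>"` raises `ValueError`, which is a cheap reminder to fill in real names.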

```python
from paddlenlp.transformers import AutoTokenizer, AutoModelForMaskedLM

tokenizer = AutoTokenizer.from_pretrained("PaddlePaddle/ernie-1.0-base-zh", from_hf_hub=True)
model = AutoModelForMaskedLM.from_pretrained("PaddlePaddle/ernie-1.0-base-zh", from_hf_hub=True)

# Uploading requires write access to the Hub (e.g. a token set up with `huggingface-cli login`).
# Replace the placeholders with your own organization (or username) and repository name.
tokenizer.save_to_hf_hub(repo_id="<my_org_name>/<my_repo_name>")
model.save_to_hf_hub(repo_id="<my_org_name>/<my_repo_name>")
```

Additional resources

- PaddlePaddle installation guide

- PaddleNLP GitHub repo

- PaddlePaddle on the Hugging Face Hub