Using 🧨 diffusers at Hugging Face

Diffusers is the go-to library for state-of-the-art pretrained diffusion models for generating images, audio, and even 3D structures of molecules. Whether you’re looking for a simple inference solution or want to train your own diffusion model, Diffusers is a modular toolbox that supports both. The library is designed with a focus on usability over performance, simple over easy, and customizability over abstractions.

Exploring Diffusers in the Hub

There are over 10,000 Diffusers-compatible pipelines on the Hub, which you can find by filtering on the left of the models page. Diffusion systems are typically composed of multiple components such as a text encoder, UNet, VAE, and scheduler. Even though a diffusion system is not a single standalone model, the pipeline abstraction makes it easy to use for inference or training.

You can find diffusion pipelines for many different tasks:

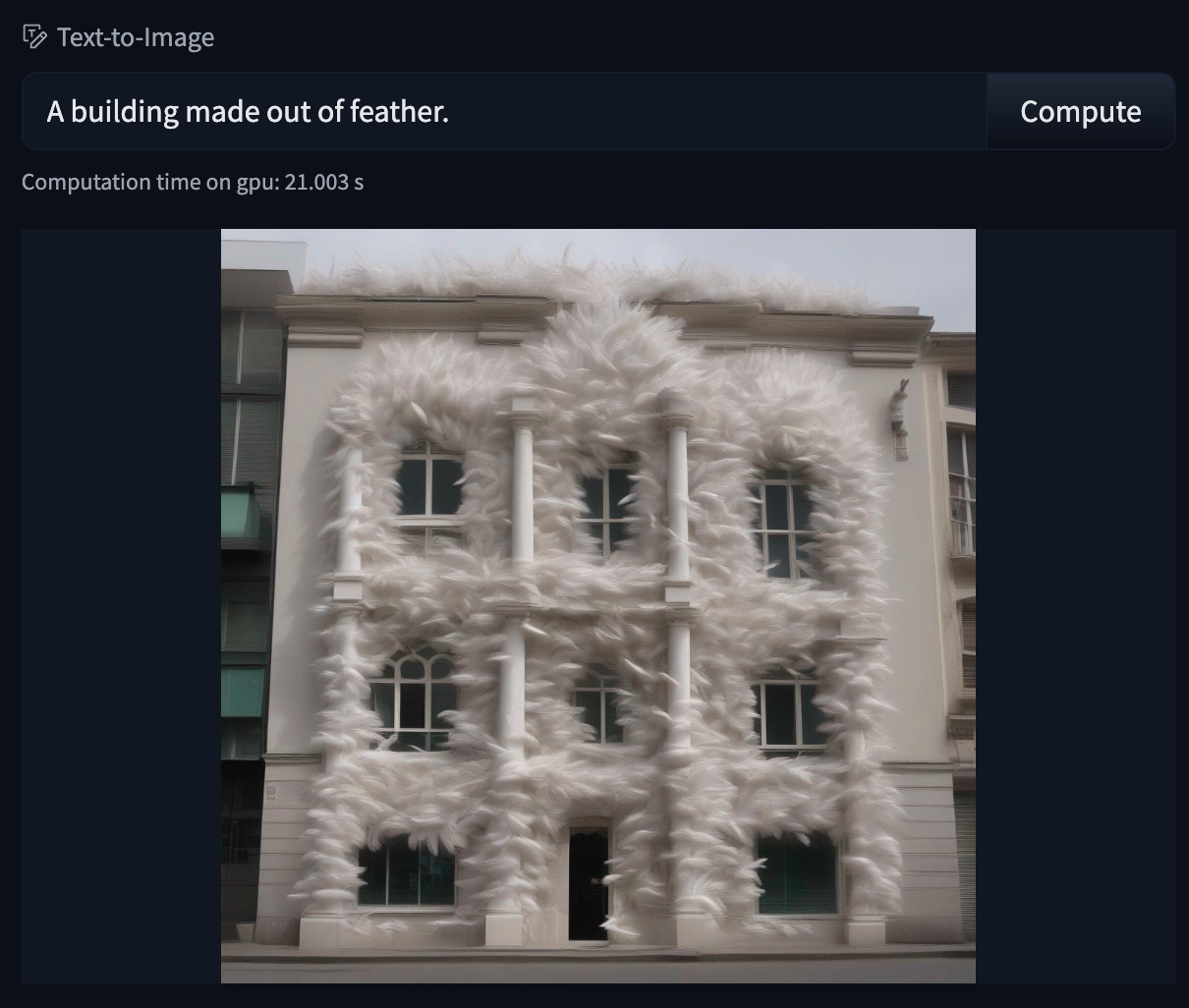

- Generating images from natural language text prompts (text-to-image).

- Transforming images using natural language text prompts (image-to-image).

- Generating videos from natural language descriptions (text-to-video).

Thanks to the in-browser widgets, you can try out the models directly in the browser without downloading them!

Diffusers repository files

A Diffusers model repository contains all the required model sub-components such as the variational autoencoder for encoding images and decoding latents, text encoder, transformer model, and more. These sub-components are organized into a multi-folder layout.

Each subfolder contains the weights and configuration, where applicable, for its component, similar to a Transformers model.

Weights are usually stored as safetensors files, and the configuration is usually a JSON file with information about the model architecture.
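To make the multi-folder layout more concrete, here is a minimal sketch that groups a file listing by component subfolder. The listing in `EXAMPLE_FILES` is illustrative only: it mirrors file names commonly seen in Diffusers repositories, but the exact files vary from repository to repository. The helper `group_by_component` is a hypothetical name introduced for this example and is not part of any library.

```python
from collections import defaultdict
from pathlib import PurePosixPath

# Illustrative listing (an assumption, not read from a real repository).
# Actual repositories may contain different or additional files.
EXAMPLE_FILES = [
    "model_index.json",
    "scheduler/scheduler_config.json",
    "text_encoder/config.json",
    "text_encoder/model.safetensors",
    "unet/config.json",
    "unet/diffusion_pytorch_model.safetensors",
    "vae/config.json",
    "vae/diffusion_pytorch_model.safetensors",
]


def group_by_component(paths):
    """Group repository file paths by their top-level subfolder."""
    components = defaultdict(list)
    for path in paths:
        parts = PurePosixPath(path).parts
        # Files that are not inside a subfolder are filed under "(root)".
        folder = parts[0] if len(parts) > 1 else "(root)"
        components[folder].append(parts[-1])
    return dict(components)


layout = group_by_component(EXAMPLE_FILES)
for folder, files in sorted(layout.items()):
    print(f"{folder}: {', '.join(files)}")
```

In this sketch, each component folder pairs a JSON configuration with a safetensors weights file, while the scheduler folder holds only a configuration because a scheduler has no weights.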

Using existing pipelines

All Diffusers pipelines are a line away from being used! To run generation, we recommend always starting from the DiffusionPipeline:

```python
from diffusers import DiffusionPipeline

pipeline = DiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0")
```

If you want to load a specific pipeline component such as the UNet, you can do so by:

```python
from diffusers import UNet2DConditionModel

unet = UNet2DConditionModel.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0", subfolder="unet")
```

Sharing your pipelines and models

All the pipeline classes, model classes, and scheduler classes are fully compatible with the Hub. More specifically, they can be easily loaded from the Hub using the from_pretrained() method and can be shared with others using the push_to_hub() method.
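As a rough illustration of that round trip, the sketch below loads a pipeline with `from_pretrained()` and uploads it with `push_to_hub()`. The function name `share_pipeline` and the target repository id in the usage note are hypothetical. The sketch also assumes you are already authenticated with the Hub and have write access to the target repository.

```python
def share_pipeline(source_id: str, target_repo_id: str) -> None:
    """Load a pipeline from the Hub (or a local folder) and push it to another repo.

    Assumes Hub credentials are already configured on this machine.
    """
    # Imported lazily so that defining this function does not require
    # diffusers to be installed or any weights to be downloaded.
    from diffusers import DiffusionPipeline

    pipeline = DiffusionPipeline.from_pretrained(source_id)
    # Uploads every component subfolder along with the pipeline configuration.
    pipeline.push_to_hub(target_repo_id)
```

A call might look like `share_pipeline("stabilityai/stable-diffusion-xl-base-1.0", "your-username/my-sdxl-copy")`, where the second argument is a placeholder for a repository you own. Note that this downloads and re-uploads the full set of weights, so it can take a while for large pipelines.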

For more details, please check out the documentation.