ChatUI on Spaces

HuggingChat is an open-source interface that lets anyone try open-source large language models such as Falcon, StarCoder, and BLOOM. With an official Docker template called ChatUI, you can deploy your own HuggingChat, backed by a model of your choice, in a few clicks on Hugging Face’s infrastructure.

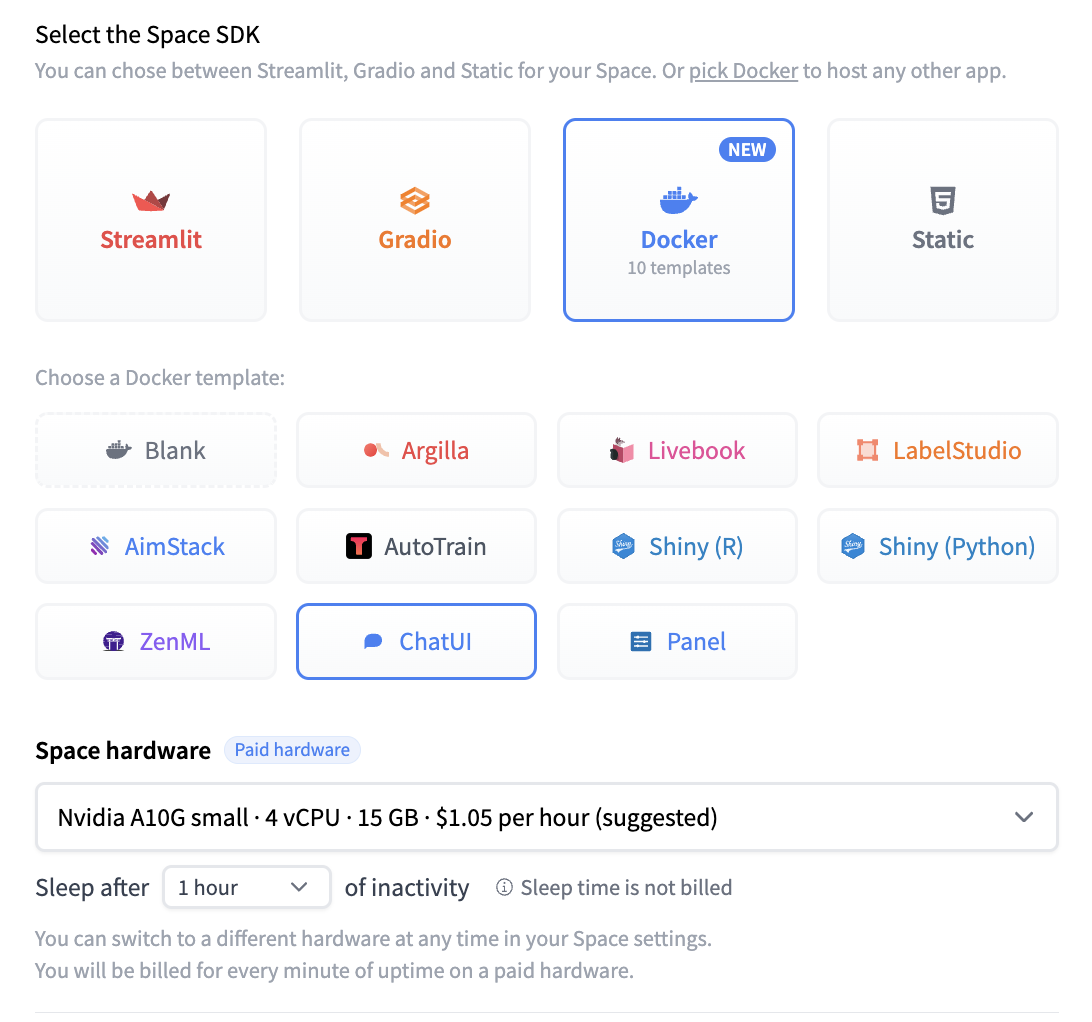

Deploy your own Chat UI

To get started, simply head here. The application uses text-generation-inference as its backend for optimized model inference. Because these models can’t run on CPUs, you need to select a GPU, and the right one depends on the model you choose.
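
As a rough illustration of why the GPU choice depends on the model, the sketch below estimates the memory needed just to hold a model's weights (parameter count times bytes per parameter). The helper names are hypothetical, and the 20% overhead factor for activations and the KV cache is an assumed rule of thumb, not a figure from text-generation-inference; real requirements vary with context length and batch size.

```python
# Approximate storage per parameter for common weight dtypes (bytes).
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def estimate_weight_memory_gb(num_params: float, dtype: str = "float16") -> float:
    """Memory in GB (1 GB = 1e9 bytes) needed only to hold the weights."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_gpu(
    num_params: float,
    gpu_memory_gb: float,
    dtype: str = "float16",
    overhead: float = 1.2,  # assumption: ~20% extra for activations and KV cache
) -> bool:
    """Rough check of whether a model is likely to fit on a GPU of a given size."""
    return estimate_weight_memory_gb(num_params, dtype) * overhead <= gpu_memory_gb


if __name__ == "__main__":
    seven_b = 7e9  # a 7-billion-parameter model
    print(estimate_weight_memory_gb(seven_b))  # weights only, in GB
    print(fits_on_gpu(seven_b, 16), fits_on_gpu(seven_b, 24))
```

For example, a 7-billion-parameter model in float16 needs about 14 GB for its weights alone, so under these assumptions it would not fit on a 16 GB GPU but would fit on a 24 GB one.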

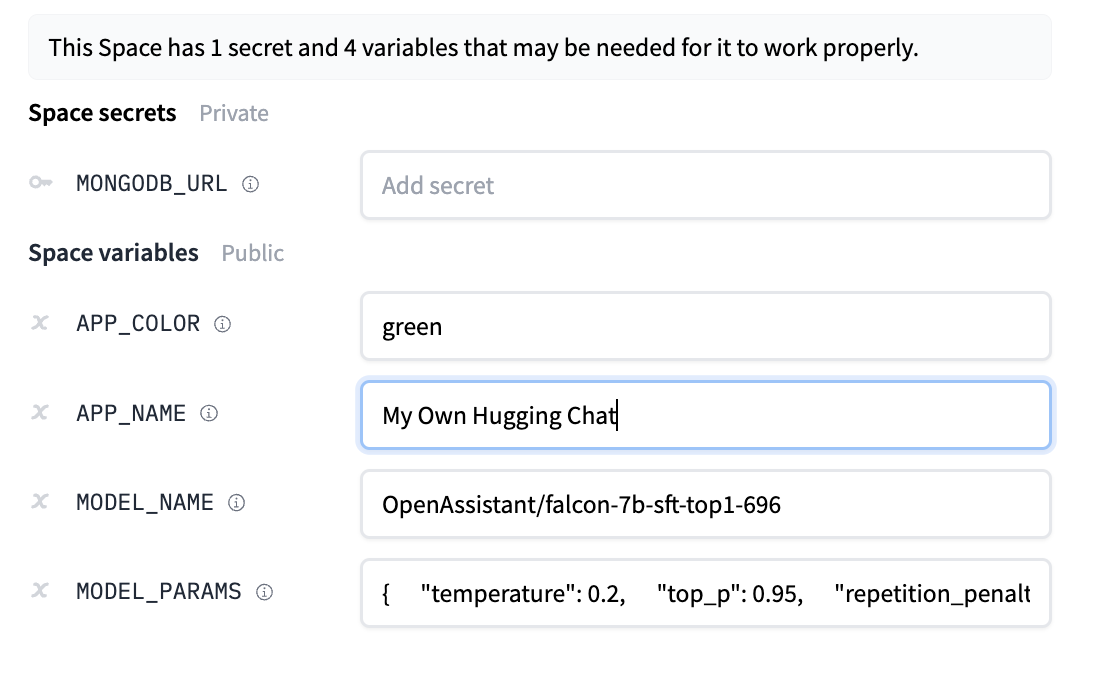

You should provide a MongoDB endpoint where your chats will be written. If you leave this field blank, your chat logs will be persisted to a database inside the Space. Note that Hugging Face does not have access to your chats.

You can configure the name and theme of the Space with the application name and application color parameters. Below these, select the Hugging Face Hub ID of the model you want to serve. You can also adjust the generation hyperparameters in the JSON dictionary underneath.
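
The generation hyperparameters field expects a JSON dictionary. The sketch below builds one in Python, checks a few value ranges, and prints the JSON to paste into the field. The keys (`temperature`, `top_p`, `top_k`, `repetition_penalty`, `max_new_tokens`) are common text-generation-inference sampling parameters, but the values are illustrative and the exact set of keys the ChatUI template accepts is an assumption; compare against the defaults the template pre-fills.

```python
import json

# Illustrative values only; the keys the ChatUI template accepts may differ.
generation_params = {
    "temperature": 0.7,
    "top_p": 0.95,
    "top_k": 50,
    "repetition_penalty": 1.2,
    "max_new_tokens": 1024,
}


def to_template_json(params: dict) -> str:
    """Validate a few common ranges and serialize params as a JSON string."""
    if params.get("temperature", 1.0) <= 0:
        raise ValueError("temperature must be positive")
    top_p = params.get("top_p", 1.0)
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    if params.get("max_new_tokens", 1) < 1:
        raise ValueError("max_new_tokens must be at least 1")
    return json.dumps(params, indent=2)


if __name__ == "__main__":
    print(to_template_json(generation_params))
```

Validating locally before pasting catches typos such as a `top_p` above 1, which would otherwise only surface once the Space is running.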

Note: If you’d like to deploy a gated model or a model in a private repository, add HF_TOKEN as a repository secret and set its value to an access token, which you can get from here.
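
Space secrets are exposed to the running app as environment variables. The minimal sketch below shows how code running in a Space could read `HF_TOKEN` and build the bearer-token `Authorization` header used by the Hugging Face Hub. The function name `hf_auth_headers` is hypothetical; the ChatUI template handles the token itself, so this only illustrates the mechanism.

```python
import os


def hf_auth_headers() -> dict:
    """Build an Authorization header from the HF_TOKEN secret.

    Raises RuntimeError if the secret has not been configured.
    """
    token = os.environ.get("HF_TOKEN")
    if not token:
        raise RuntimeError("HF_TOKEN is not set; add it as a repository secret")
    return {"Authorization": f"Bearer {token}"}
```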

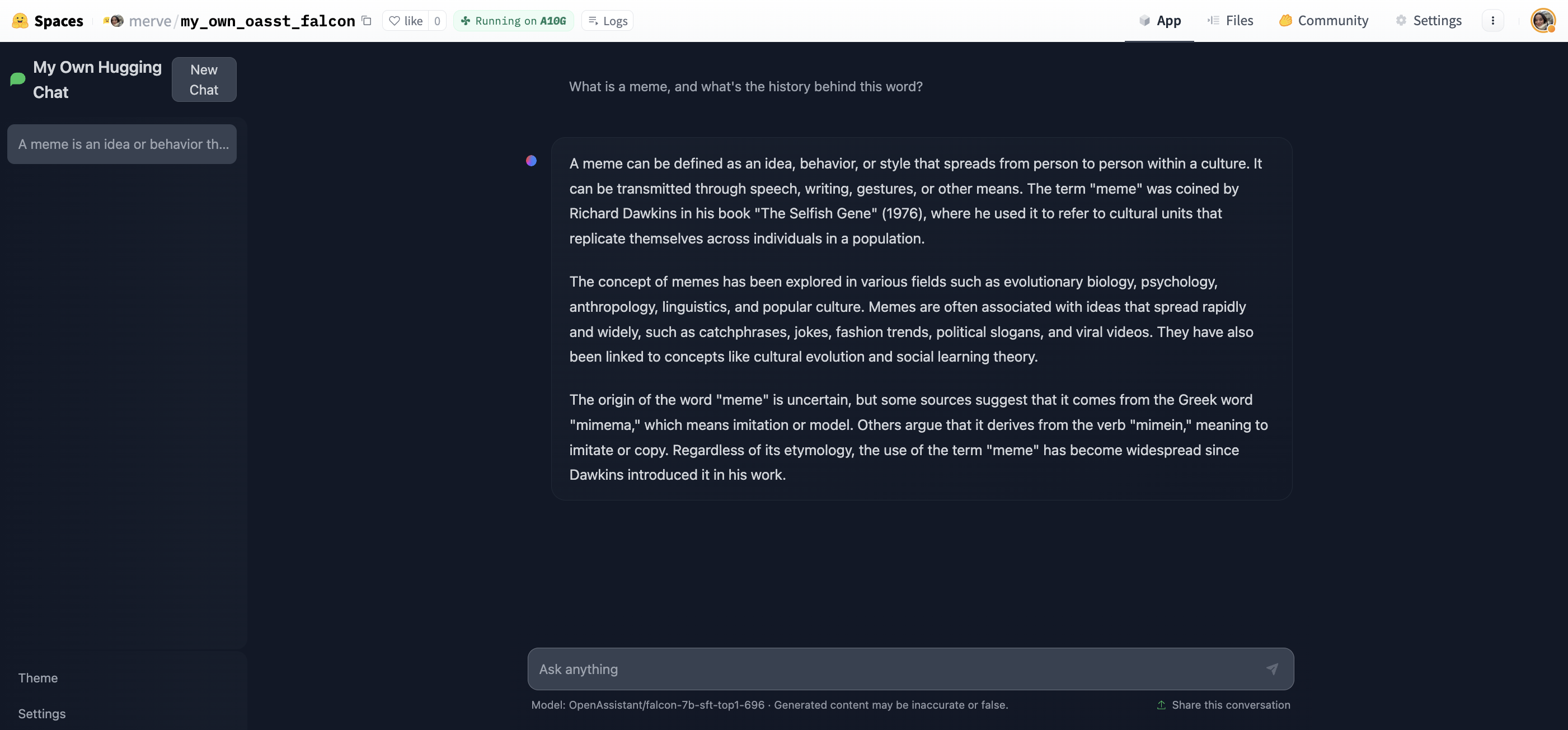

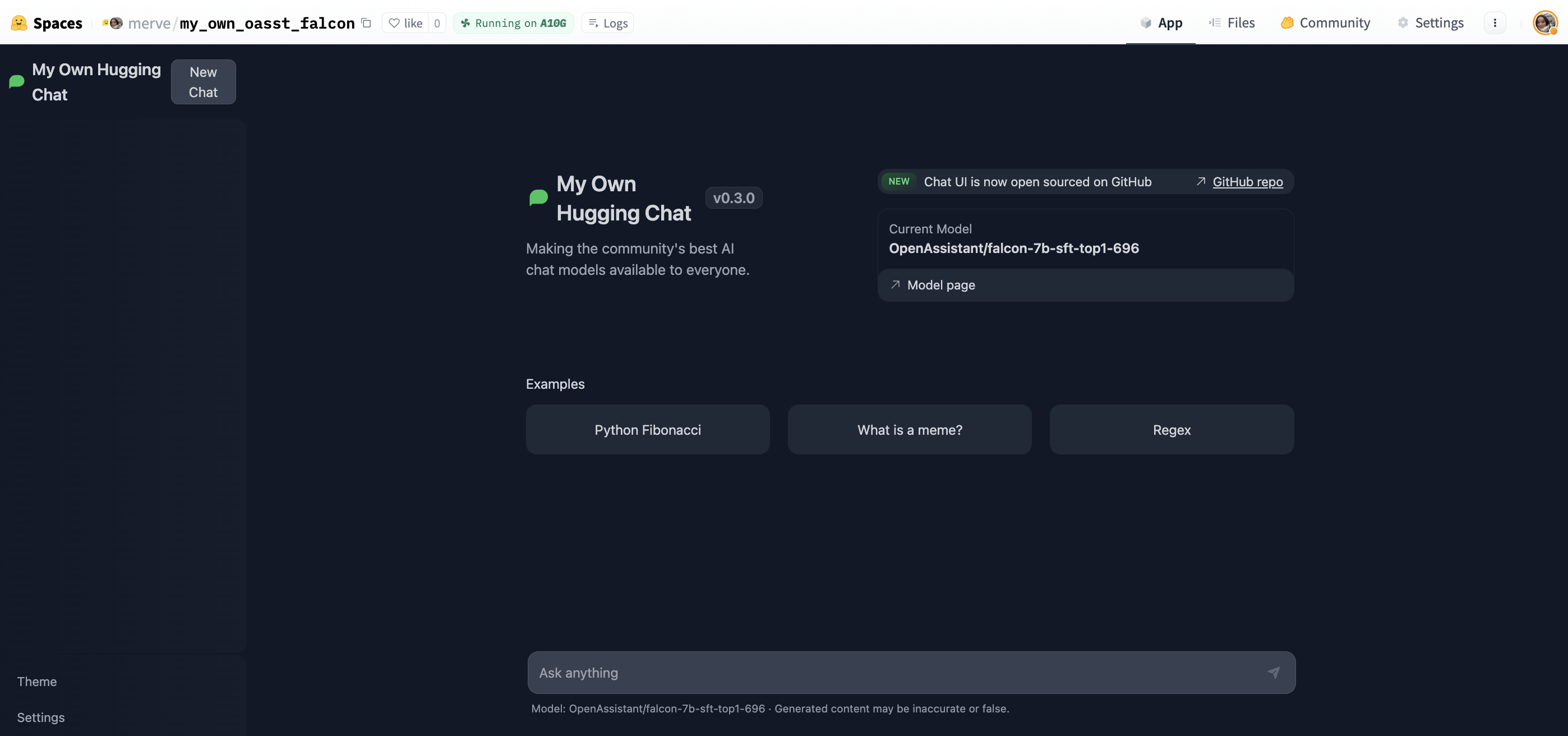

Once the Space is created, its status will show Building. When the build finishes, you can try your own HuggingChat!
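
If you would rather wait for the build from a script than watch the Space page, a polling loop like the sketch below can help. It takes a `get_stage` callable so it stays library-agnostic; with the `huggingface_hub` client, one option is `lambda: HfApi().get_space_runtime(repo_id).stage`, where `repo_id` (for example `your-username/your-chat-ui`) is a placeholder. The helper name, poll interval, and timeout are assumptions.

```python
import time
from typing import Callable


def wait_until_built(
    get_stage: Callable[[], str],
    poll_seconds: float = 10.0,
    timeout_seconds: float = 1800.0,
) -> str:
    """Poll until the Space leaves the BUILDING stage and return the new stage.

    The returned stage may be RUNNING or an error stage; callers should check it.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        stage = get_stage()
        if stage != "BUILDING":
            return stage
        if time.monotonic() >= deadline:
            raise TimeoutError("Space is still building after the timeout")
        time.sleep(poll_seconds)
```

Returning whatever stage follows BUILDING, rather than waiting only for RUNNING, lets the caller notice a failed build instead of polling until the timeout.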

Start chatting!