Hub documentation

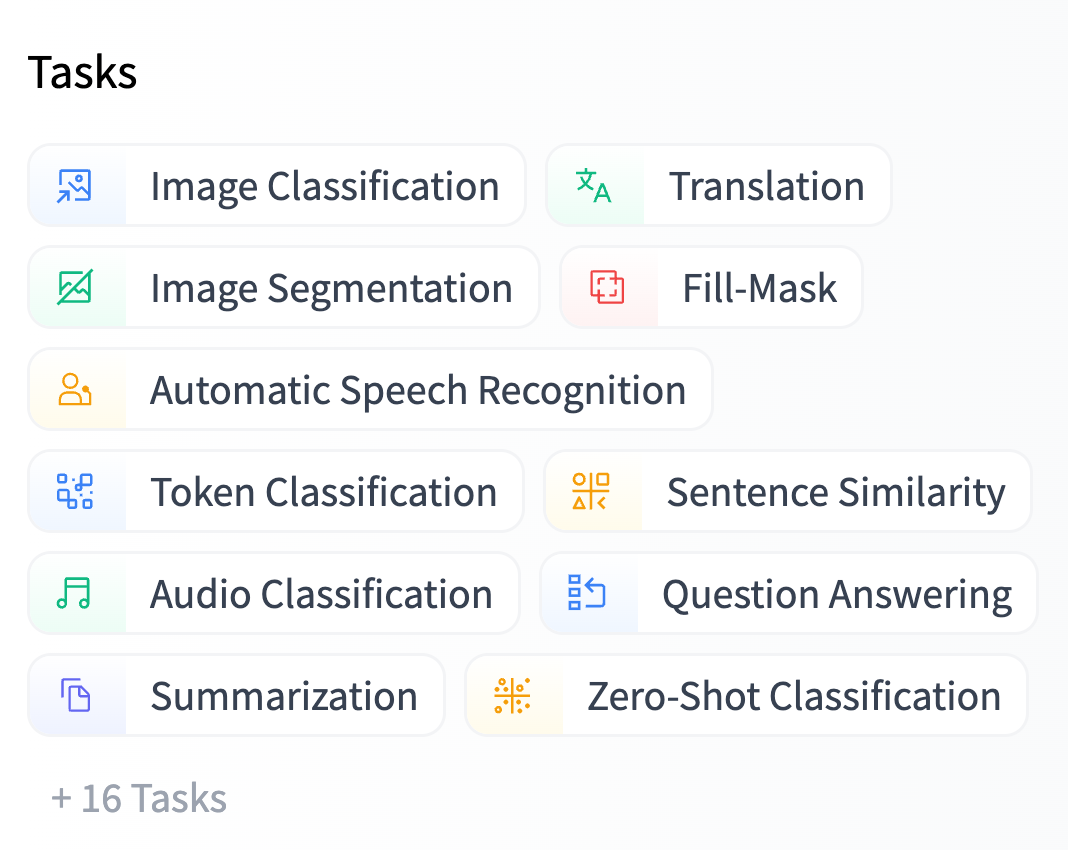

Tasks

What’s a task?

Tasks, or pipeline types, describe the “shape” of each model’s API (inputs and outputs) and are used to determine which Inference API and widget we want to display for any given model.

This classification is relatively coarse-grained (you can always add more fine-grained task names in your model tags), so you should rarely have to create a new task. If you want to add support for a new task, this document explains the required steps.
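To make the idea of a task's "shape" concrete, here is a minimal sketch. The `TaskSpec` class, the `TASKS` table, and `widget_input_for` are hypothetical names invented for this illustration and are not part of the Hub codebase. The sketch only shows how a coarse task name could be enough to decide which kind of input a widget needs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskSpec:
    """Hypothetical description of a task: what goes in, what comes out."""

    name: str
    input_kind: str   # e.g. "text", "audio", "image"
    output_kind: str  # e.g. "labels", "text"


# Illustrative entries only; this is not the Hub's real task list.
TASKS = {
    spec.name: spec
    for spec in (
        TaskSpec("text-classification", "text", "labels"),
        TaskSpec("automatic-speech-recognition", "audio", "text"),
        TaskSpec("image-classification", "image", "labels"),
    )
}


def widget_input_for(task_name: str) -> str:
    """Return the kind of input control a widget would need for a task."""
    return TASKS[task_name].input_kind
```

Because the classification is coarse, two very different models (say, a sentiment model and a topic model) share the same `text-classification` entry and therefore the same widget.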

Overview

Having a new task integrated into the Hub means that:

- Users can search for all models – and datasets – of a given task.

- The Inference API supports the task.

- Users can try out models directly with the widget. 🏆

Note that you don’t need to implement all the steps by yourself. Adding a new task is a community effort, and multiple people can contribute. 🧑‍🤝‍🧑

To begin the process, open a new issue in the huggingface_hub repository using the “Adding a new task” template. ⚠️ Before writing any code, we suggest reading through this document. ⚠️

The first step is to upload a model for your proposed task. Once you have a model in the Hub for the new task, the next step is to enable it in the Inference API. There are three types of support that you can choose from:

- 🤗 using a transformers model

- 🐳 using a model from an officially supported library

- 🖨️ using a model with custom inference code. This experimental option has downsides, so we recommend using one of the other approaches.

Finally, you can add a couple of UI elements, such as the task icon and the widget, that complete the integration in the Hub. 📷

Some steps are orthogonal; you don’t need to do them in order. You don’t need the Inference API to add the icon. This means that, even if there isn’t full integration yet, users can still search for models of a given task.

Adding new tasks to the Hub

Using the Hugging Face transformers library

If your model is a transformers-based model, there is a 1:1 mapping between the Inference API task and a pipeline class. Here are some example PRs from the transformers library:

Once the pipeline is submitted and deployed, you should be able to use the Inference API for your model.
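As an illustration of the 1:1 mapping, the sketch below pairs a few task names with the names of the corresponding pipeline classes in transformers. The dictionary and the `pipeline_class_name` helper are written for this example only; they are not the library's own registry, and the subset of tasks shown is arbitrary.

```python
# Task name -> name of the transformers pipeline class (illustrative subset).
TASK_TO_PIPELINE = {
    "text-classification": "TextClassificationPipeline",
    "token-classification": "TokenClassificationPipeline",
    "question-answering": "QuestionAnsweringPipeline",
    "automatic-speech-recognition": "AutomaticSpeechRecognitionPipeline",
}


def pipeline_class_name(task: str) -> str:
    """Look up the pipeline class name for a task, failing loudly if unknown."""
    try:
        return TASK_TO_PIPELINE[task]
    except KeyError:
        raise ValueError(f"No pipeline registered for task {task!r}") from None


# The mapping is 1:1: no two tasks share a pipeline class.
assert len(set(TASK_TO_PIPELINE.values())) == len(TASK_TO_PIPELINE)
```

A new task therefore implies a new pipeline class, which is why the work starts with a PR to transformers.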

Using Community Inference API with a supported library

The Hub also supports over 10 open-source libraries in the Community Inference API.

Adding a new task is relatively straightforward and requires 2 PRs:

- PR 1: Add the new task to the API validation. This code ensures that the inference input is valid for a given task. Some PR examples:

- PR 2: Add the new task to a library docker image. You should also add a template to docker_images/common/app/pipelines to facilitate integrating the task in other libraries. Here is an example PR:
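The input validation described in PR 1 can be pictured roughly as follows. This is a sketch under assumptions, not the actual validation code: `VALIDATORS`, `validate_payload`, and the two helper functions are hypothetical names, and the payload layout (an `inputs` key holding either a string or a question/context dictionary) is assumed for the sake of the example.

```python
from typing import Any, Callable, Dict


def _validate_text(inputs: Any) -> None:
    """Text tasks: expect a non-empty string."""
    if not isinstance(inputs, str) or not inputs.strip():
        raise ValueError("inputs must be a non-empty string")


def _validate_question_answering(inputs: Any) -> None:
    """Question answering: expect a dict with string 'question' and 'context'."""
    if not isinstance(inputs, dict):
        raise ValueError("inputs must be a dict")
    for key in ("question", "context"):
        if not isinstance(inputs.get(key), str):
            raise ValueError(f"inputs[{key!r}] must be a string")


# Hypothetical registry: adding a task means adding one entry here.
VALIDATORS: Dict[str, Callable[[Any], None]] = {
    "text-classification": _validate_text,
    "question-answering": _validate_question_answering,
}


def validate_payload(task: str, payload: Dict[str, Any]) -> None:
    """Raise ValueError if the payload is not valid for the given task."""
    if task not in VALIDATORS:
        raise ValueError(f"unsupported task: {task!r}")
    if "inputs" not in payload:
        raise ValueError("payload is missing 'inputs'")
    VALIDATORS[task](payload["inputs"])
```

Keeping one validator per task makes the first PR small: it adds a single function and a single registry entry, independent of any library's docker image.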

Adding Community Inference API for a quick prototype

My model is not supported by any library. Am I doomed? 😱

We recommend using Hugging Face Spaces for these use cases.

UI elements

The Hub allows users to filter models by a given task. To do this, you need to add the task to several places. You’ll also get to pick an icon for the task!

- Add the task type to Types.ts

In huggingface.js/packages/tasks/src/pipelines.ts, you need to do a couple of things:

- Add the type to PIPELINE_DATA. Note that pipeline types are sorted into different categories (NLP, Audio, Computer Vision, and others).

- Make the corresponding minor changes in huggingface.js/packages/tasks/src/tasks/index.ts

- Choose an icon

You can add an icon in the lib/Icons directory. We usually choose carbon icons from https://icones.js.org/collection/carbon. Also add the icon to PipelineIcon.

Widget

Once the task is in production, what could be more exciting than implementing some way for users to play directly with the models in their browser? 🤩 You can find all the widgets here.

If you are interested in contributing a widget, you can look at the implementation of all the widgets.