Dataset Viewer

text

stringlengths 2.5k

6.39M

| kind

stringclasses 3

values |

|---|---|

# 09 Strain Gage

This is one of the most commonly used sensor. It is used in many transducers. Its fundamental operating principle is fairly easy to understand and it will be the purpose of this lecture.

A strain gage is essentially a thin wire that is wrapped on film of plastic.

<img src="img/StrainGage.png" width="200">

The strain gage is then mounted (glued) on the part for which the strain must be measured.

<img src="img/Strain_gauge_2.jpg" width="200">

## Stress, Strain

When a beam is under axial load, the axial stress, $\sigma_a$, is defined as:

\begin{align*}

\sigma_a = \frac{F}{A}

\end{align*}

with $F$ the axial load, and $A$ the cross sectional area of the beam under axial load.

<img src="img/BeamUnderStrain.png" width="200">

Under the load, the beam of length $L$ will extend by $dL$, giving rise to the definition of strain, $\epsilon_a$:

\begin{align*}

\epsilon_a = \frac{dL}{L}

\end{align*}

The beam will also contract laterally: the cross sectional area is reduced by $dA$. This results in a transverval strain $\epsilon_t$. The transversal and axial strains are related by the Poisson's ratio:

\begin{align*}

\nu = - \frac{\epsilon_t }{\epsilon_a}

\end{align*}

For a metal the Poission's ratio is typically $\nu = 0.3$, for an incompressible material, such as rubber (or water), $\nu = 0.5$.

Within the elastic limit, the axial stress and axial strain are related through Hooke's law by the Young's modulus, $E$:

\begin{align*}

\sigma_a = E \epsilon_a

\end{align*}

<img src="img/ElasticRegime.png" width="200">

## Resistance of a wire

The electrical resistance of a wire $R$ is related to its physical properties (the electrical resistiviy, $\rho$ in $\Omega$/m) and its geometry: length $L$ and cross sectional area $A$.

\begin{align*}

R = \frac{\rho L}{A}

\end{align*}

Mathematically, the change in wire dimension will result inchange in its electrical resistance. This can be derived from first principle:

\begin{align}

\frac{dR}{R} = \frac{d\rho}{\rho} + \frac{dL}{L} - \frac{dA}{A}

\end{align}

If the wire has a square cross section, then:

\begin{align*}

A & = L'^2 \\

\frac{dA}{A} & = \frac{d(L'^2)}{L'^2} = \frac{2L'dL'}{L'^2} = 2 \frac{dL'}{L'}

\end{align*}

We have related the change in cross sectional area to the transversal strain.

\begin{align*}

\epsilon_t = \frac{dL'}{L'}

\end{align*}

Using the Poisson's ratio, we can relate then relate the change in cross-sectional area ($dA/A$) to axial strain $\epsilon_a = dL/L$.

\begin{align*}

\epsilon_t &= - \nu \epsilon_a \\

\frac{dL'}{L'} &= - \nu \frac{dL}{L} \; \text{or}\\

\frac{dA}{A} & = 2\frac{dL'}{L'} = -2 \nu \frac{dL}{L}

\end{align*}

Finally we can substitute express $dA/A$ in eq. for $dR/R$ and relate change in resistance to change of wire geometry, remembering that for a metal $\nu =0.3$:

\begin{align}

\frac{dR}{R} & = \frac{d\rho}{\rho} + \frac{dL}{L} - \frac{dA}{A} \\

& = \frac{d\rho}{\rho} + \frac{dL}{L} - (-2\nu \frac{dL}{L}) \\

& = \frac{d\rho}{\rho} + 1.6 \frac{dL}{L} = \frac{d\rho}{\rho} + 1.6 \epsilon_a

\end{align}

It also happens that for most metals, the resistivity increases with axial strain. In general, one can then related the change in resistance to axial strain by defining the strain gage factor:

\begin{align}

S = 1.6 + \frac{d\rho}{\rho}\cdot \frac{1}{\epsilon_a}

\end{align}

and finally, we have:

\begin{align*}

\frac{dR}{R} = S \epsilon_a

\end{align*}

$S$ is materials dependent and is typically equal to 2.0 for most commercially availabe strain gages. It is dimensionless.

Strain gages are made of thin wire that is wraped in several loops, effectively increasing the length of the wire and therefore the sensitivity of the sensor.

_Question:

Explain why a longer wire is necessary to increase the sensitivity of the sensor_.

Most commercially available strain gages have a nominal resistance (resistance under no load, $R_{ini}$) of 120 or 350 $\Omega$.

Within the elastic regime, strain is typically within the range $10^{-6} - 10^{-3}$, in fact strain is expressed in unit of microstrain, with a 1 microstrain = $10^{-6}$. Therefore, changes in resistances will be of the same order. If one were to measure resistances, we will need a dynamic range of 120 dB, whih is typically very expensive. Instead, one uses the Wheatstone bridge to transform the change in resistance to a voltage, which is easier to measure and does not require such a large dynamic range.

## Wheatstone bridge:

<img src="img/WheatstoneBridge.png" width="200">

The output voltage is related to the difference in resistances in the bridge:

\begin{align*}

\frac{V_o}{V_s} = \frac{R_1R_3-R_2R_4}{(R_1+R_4)(R_2+R_3)}

\end{align*}

If the bridge is balanced, then $V_o = 0$, it implies: $R_1/R_2 = R_4/R_3$.

In practice, finding a set of resistors that balances the bridge is challenging, and a potentiometer is used as one of the resistances to do minor adjustement to balance the bridge. If one did not do the adjustement (ie if we did not zero the bridge) then all the measurement will have an offset or bias that could be removed in a post-processing phase, as long as the bias stayed constant.

If each resistance $R_i$ is made to vary slightly around its initial value, ie $R_i = R_{i,ini} + dR_i$. For simplicity, we will assume that the initial value of the four resistances are equal, ie $R_{1,ini} = R_{2,ini} = R_{3,ini} = R_{4,ini} = R_{ini}$. This implies that the bridge was initially balanced, then the output voltage would be:

\begin{align*}

\frac{V_o}{V_s} = \frac{1}{4} \left( \frac{dR_1}{R_{ini}} - \frac{dR_2}{R_{ini}} + \frac{dR_3}{R_{ini}} - \frac{dR_4}{R_{ini}} \right)

\end{align*}

Note here that the changes in $R_1$ and $R_3$ have a positive effect on $V_o$, while the changes in $R_2$ and $R_4$ have a negative effect on $V_o$. In practice, this means that is a beam is a in tension, then a strain gage mounted on the branch 1 or 3 of the Wheatstone bridge will produce a positive voltage, while a strain gage mounted on branch 2 or 4 will produce a negative voltage. One takes advantage of this to increase sensitivity to measure strain.

### Quarter bridge

One uses only one quarter of the bridge, ie strain gages are only mounted on one branch of the bridge.

\begin{align*}

\frac{V_o}{V_s} = \pm \frac{1}{4} \epsilon_a S

\end{align*}

Sensitivity, $G$:

\begin{align*}

G = \frac{V_o}{\epsilon_a} = \pm \frac{1}{4}S V_s

\end{align*}

### Half bridge

One uses half of the bridge, ie strain gages are mounted on two branches of the bridge.

\begin{align*}

\frac{V_o}{V_s} = \pm \frac{1}{2} \epsilon_a S

\end{align*}

### Full bridge

One uses of the branches of the bridge, ie strain gages are mounted on each branch.

\begin{align*}

\frac{V_o}{V_s} = \pm \epsilon_a S

\end{align*}

Therefore, as we increase the order of bridge, the sensitivity of the instrument increases. However, one should be carefull how we mount the strain gages as to not cancel out their measurement.

_Exercise_

1- Wheatstone bridge

<img src="img/WheatstoneBridge.png" width="200">

> How important is it to know \& match the resistances of the resistors you employ to create your bridge?

> How would you do that practically?

> Assume $R_1=120\,\Omega$, $R_2=120\,\Omega$, $R_3=120\,\Omega$, $R_4=110\,\Omega$, $V_s=5.00\,\text{V}$. What is $V_\circ$?

```

Vs = 5.00

Vo = (120**2-120*110)/(230*240) * Vs

print('Vo = ',Vo, ' V')

# typical range in strain a strain gauge can measure

# 1 -1000 micro-Strain

AxialStrain = 1000*10**(-6) # axial strain

StrainGageFactor = 2

R_ini = 120 # Ohm

R_1 = R_ini+R_ini*StrainGageFactor*AxialStrain

print(R_1)

Vo = (120**2-120*(R_1))/((120+R_1)*240) * Vs

print('Vo = ', Vo, ' V')

```

> How important is it to know \& match the resistances of the resistors you employ to create your bridge?

> How would you do that practically?

> Assume $R_1= R_2 =R_3=120\,\Omega$, $R_4=120.01\,\Omega$, $V_s=5.00\,\text{V}$. What is $V_\circ$?

```

Vs = 5.00

Vo = (120**2-120*120.01)/(240.01*240) * Vs

print(Vo)

```

2- Strain gage 1:

One measures the strain on a bridge steel beam. The modulus of elasticity is $E=190$ GPa. Only one strain gage is mounted on the bottom of the beam; the strain gage factor is $S=2.02$.

> a) What kind of electronic circuit will you use? Draw a sketch of it.

> b) Assume all your resistors including the unloaded strain gage are balanced and measure $120\,\Omega$, and that the strain gage is at location $R_2$. The supply voltage is $5.00\,\text{VDC}$. Will $V_\circ$ be positive or negative when a downward load is added?

In practice, we cannot have all resistances = 120 $\Omega$. at zero load, the bridge will be unbalanced (show $V_o \neq 0$). How could we balance our bridge?

Use a potentiometer to balance bridge, for the load cell, we ''zero'' the instrument.

Other option to zero-out our instrument? Take data at zero-load, record the voltage, $V_{o,noload}$. Substract $V_{o,noload}$ to my data.

> c) For a loading in which $V_\circ = -1.25\,\text{mV}$, calculate the strain $\epsilon_a$ in units of microstrain.

\begin{align*}

\frac{V_o}{V_s} & = - \frac{1}{4} \epsilon_a S\\

\epsilon_a & = -\frac{4}{S} \frac{V_o}{V_s}

\end{align*}

```

S = 2.02

Vo = -0.00125

Vs = 5

eps_a = -1*(4/S)*(Vo/Vs)

print(eps_a)

```

> d) Calculate the axial stress (in MPa) in the beam under this load.

> e) You now want more sensitivity in your measurement, you install a second strain gage on to

p of the beam. Which resistor should you use for this second active strain gage?

> f) With this new setup and the same applied load than previously, what should be the output voltage?

3- Strain Gage with Long Lead Wires

<img src="img/StrainGageLongWires.png" width="360">

A quarter bridge strain gage Wheatstone bridge circuit is constructed with $120\,\Omega$ resistors and a $120\,\Omega$ strain gage. For this practical application, the strain gage is located very far away form the DAQ station and the lead wires to the strain gage are $10\,\text{m}$ long and the lead wire have a resistance of $0.080\,\Omega/\text{m}$. The lead wire resistance can lead to problems since $R_{lead}$ changes with temperature.

> Design a modified circuit that will cancel out the effect of the lead wires.

## Homework

|

github_jupyter

|

```

#export

from fastai.basics import *

from fastai.tabular.core import *

from fastai.tabular.model import *

from fastai.tabular.data import *

#hide

from nbdev.showdoc import *

#default_exp tabular.learner

```

# Tabular learner

> The function to immediately get a `Learner` ready to train for tabular data

The main function you probably want to use in this module is `tabular_learner`. It will automatically create a `TabulaModel` suitable for your data and infer the irght loss function. See the [tabular tutorial](http://docs.fast.ai/tutorial.tabular) for an example of use in context.

## Main functions

```

#export

@log_args(but_as=Learner.__init__)

class TabularLearner(Learner):

"`Learner` for tabular data"

def predict(self, row):

tst_to = self.dls.valid_ds.new(pd.DataFrame(row).T)

tst_to.process()

tst_to.conts = tst_to.conts.astype(np.float32)

dl = self.dls.valid.new(tst_to)

inp,preds,_,dec_preds = self.get_preds(dl=dl, with_input=True, with_decoded=True)

i = getattr(self.dls, 'n_inp', -1)

b = (*tuplify(inp),*tuplify(dec_preds))

full_dec = self.dls.decode((*tuplify(inp),*tuplify(dec_preds)))

return full_dec,dec_preds[0],preds[0]

show_doc(TabularLearner, title_level=3)

```

It works exactly as a normal `Learner`, the only difference is that it implements a `predict` method specific to work on a row of data.

```

#export

@log_args(to_return=True, but_as=Learner.__init__)

@delegates(Learner.__init__)

def tabular_learner(dls, layers=None, emb_szs=None, config=None, n_out=None, y_range=None, **kwargs):

"Get a `Learner` using `dls`, with `metrics`, including a `TabularModel` created using the remaining params."

if config is None: config = tabular_config()

if layers is None: layers = [200,100]

to = dls.train_ds

emb_szs = get_emb_sz(dls.train_ds, {} if emb_szs is None else emb_szs)

if n_out is None: n_out = get_c(dls)

assert n_out, "`n_out` is not defined, and could not be infered from data, set `dls.c` or pass `n_out`"

if y_range is None and 'y_range' in config: y_range = config.pop('y_range')

model = TabularModel(emb_szs, len(dls.cont_names), n_out, layers, y_range=y_range, **config)

return TabularLearner(dls, model, **kwargs)

```

If your data was built with fastai, you probably won't need to pass anything to `emb_szs` unless you want to change the default of the library (produced by `get_emb_sz`), same for `n_out` which should be automatically inferred. `layers` will default to `[200,100]` and is passed to `TabularModel` along with the `config`.

Use `tabular_config` to create a `config` and cusotmize the model used. There is just easy access to `y_range` because this argument is often used.

All the other arguments are passed to `Learner`.

```

path = untar_data(URLs.ADULT_SAMPLE)

df = pd.read_csv(path/'adult.csv')

cat_names = ['workclass', 'education', 'marital-status', 'occupation', 'relationship', 'race']

cont_names = ['age', 'fnlwgt', 'education-num']

procs = [Categorify, FillMissing, Normalize]

dls = TabularDataLoaders.from_df(df, path, procs=procs, cat_names=cat_names, cont_names=cont_names,

y_names="salary", valid_idx=list(range(800,1000)), bs=64)

learn = tabular_learner(dls)

#hide

tst = learn.predict(df.iloc[0])

#hide

#test y_range is passed

learn = tabular_learner(dls, y_range=(0,32))

assert isinstance(learn.model.layers[-1], SigmoidRange)

test_eq(learn.model.layers[-1].low, 0)

test_eq(learn.model.layers[-1].high, 32)

learn = tabular_learner(dls, config = tabular_config(y_range=(0,32)))

assert isinstance(learn.model.layers[-1], SigmoidRange)

test_eq(learn.model.layers[-1].low, 0)

test_eq(learn.model.layers[-1].high, 32)

#export

@typedispatch

def show_results(x:Tabular, y:Tabular, samples, outs, ctxs=None, max_n=10, **kwargs):

df = x.all_cols[:max_n]

for n in x.y_names: df[n+'_pred'] = y[n][:max_n].values

display_df(df)

```

## Export -

```

#hide

from nbdev.export import notebook2script

notebook2script()

```

|

github_jupyter

|

# Aerospike Connect for Spark - SparkML Prediction Model Tutorial

## Tested with Java 8, Spark 3.0.0, Python 3.7, and Aerospike Spark Connector 3.0.0

## Summary

Build a linear regression model to predict birth weight using Aerospike Database and Spark.

Here are the features used:

- gestation weeks

- mother’s age

- father’s age

- mother’s weight gain during pregnancy

- [Apgar score](https://en.wikipedia.org/wiki/Apgar_score)

Aerospike is used to store the Natality dataset that is published by CDC. The table is accessed in Apache Spark using the Aerospike Spark Connector, and Spark ML is used to build and evaluate the model. The model can later be converted to PMML and deployed on your inference server for predictions.

### Prerequisites

1. Load Aerospike server if not alrady available - docker run -d --name aerospike -p 3000:3000 -p 3001:3001 -p 3002:3002 -p 3003:3003 aerospike

2. Feature key needs to be located in AS_FEATURE_KEY_PATH

3. [Download the connector](https://www.aerospike.com/enterprise/download/connectors/aerospike-spark/3.0.0/)

```

#IP Address or DNS name for one host in your Aerospike cluster.

#A seed address for the Aerospike database cluster is required

AS_HOST ="127.0.0.1"

# Name of one of your namespaces. Type 'show namespaces' at the aql prompt if you are not sure

AS_NAMESPACE = "test"

AS_FEATURE_KEY_PATH = "/etc/aerospike/features.conf"

AEROSPIKE_SPARK_JAR_VERSION="3.0.0"

AS_PORT = 3000 # Usually 3000, but change here if not

AS_CONNECTION_STRING = AS_HOST + ":"+ str(AS_PORT)

#Locate the Spark installation - this'll use the SPARK_HOME environment variable

import findspark

findspark.init()

# Below will help you download the Spark Connector Jar if you haven't done so already.

import urllib

import os

def aerospike_spark_jar_download_url(version=AEROSPIKE_SPARK_JAR_VERSION):

DOWNLOAD_PREFIX="https://www.aerospike.com/enterprise/download/connectors/aerospike-spark/"

DOWNLOAD_SUFFIX="/artifact/jar"

AEROSPIKE_SPARK_JAR_DOWNLOAD_URL = DOWNLOAD_PREFIX+AEROSPIKE_SPARK_JAR_VERSION+DOWNLOAD_SUFFIX

return AEROSPIKE_SPARK_JAR_DOWNLOAD_URL

def download_aerospike_spark_jar(version=AEROSPIKE_SPARK_JAR_VERSION):

JAR_NAME="aerospike-spark-assembly-"+AEROSPIKE_SPARK_JAR_VERSION+".jar"

if(not(os.path.exists(JAR_NAME))) :

urllib.request.urlretrieve(aerospike_spark_jar_download_url(),JAR_NAME)

else :

print(JAR_NAME+" already downloaded")

return os.path.join(os.getcwd(),JAR_NAME)

AEROSPIKE_JAR_PATH=download_aerospike_spark_jar()

os.environ["PYSPARK_SUBMIT_ARGS"] = '--jars ' + AEROSPIKE_JAR_PATH + ' pyspark-shell'

import pyspark

from pyspark.context import SparkContext

from pyspark.sql.context import SQLContext

from pyspark.sql.session import SparkSession

from pyspark.ml.linalg import Vectors

from pyspark.ml.regression import LinearRegression

from pyspark.sql.types import StringType, StructField, StructType, ArrayType, IntegerType, MapType, LongType, DoubleType

#Get a spark session object and set required Aerospike configuration properties

sc = SparkContext.getOrCreate()

print("Spark Verison:", sc.version)

spark = SparkSession(sc)

sqlContext = SQLContext(sc)

spark.conf.set("aerospike.namespace",AS_NAMESPACE)

spark.conf.set("aerospike.seedhost",AS_CONNECTION_STRING)

spark.conf.set("aerospike.keyPath",AS_FEATURE_KEY_PATH )

```

## Step 1: Load Data into a DataFrame

```

as_data=spark \

.read \

.format("aerospike") \

.option("aerospike.set", "natality").load()

as_data.show(5)

print("Inferred Schema along with Metadata.")

as_data.printSchema()

```

### To speed up the load process at scale, use the [knobs](https://www.aerospike.com/docs/connect/processing/spark/performance.html) available in the Aerospike Spark Connector.

For example, **spark.conf.set("aerospike.partition.factor", 15 )** will map 4096 Aerospike partitions to 32K Spark partitions. <font color=red> (Note: Please configure this carefully based on the available resources (CPU threads) in your system.)</font>

## Step 2 - Prep data

```

# This Spark3.0 setting, if true, will turn on Adaptive Query Execution (AQE), which will make use of the

# runtime statistics to choose the most efficient query execution plan. It will speed up any joins that you

# plan to use for data prep step.

spark.conf.set("spark.sql.adaptive.enabled", 'true')

# Run a query in Spark SQL to ensure no NULL values exist.

as_data.createOrReplaceTempView("natality")

sql_query = """

SELECT *

from natality

where weight_pnd is not null

and mother_age is not null

and father_age is not null

and father_age < 80

and gstation_week is not null

and weight_gain_pnd < 90

and apgar_5min != "99"

and apgar_5min != "88"

"""

clean_data = spark.sql(sql_query)

#Drop the Aerospike metadata from the dataset because its not required.

#The metadata is added because we are inferring the schema as opposed to providing a strict schema

columns_to_drop = ['__key','__digest','__expiry','__generation','__ttl' ]

clean_data = clean_data.drop(*columns_to_drop)

# dropping null values

clean_data = clean_data.dropna()

clean_data.cache()

clean_data.show(5)

#Descriptive Analysis of the data

clean_data.describe().toPandas().transpose()

```

## Step 3 Visualize Data

```

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

import math

pdf = clean_data.toPandas()

#Histogram - Father Age

pdf[['father_age']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('Fathers Age (years)',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

'''

pdf[['mother_age']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('Mothers Age (years)',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

'''

pdf[['weight_pnd']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('Babys Weight (Pounds)',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

pdf[['gstation_week']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('Gestation (Weeks)',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

pdf[['weight_gain_pnd']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('mother’s weight gain during pregnancy',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

#Histogram - Apgar Score

print("Apgar Score: Scores of 7 and above are generally normal; 4 to 6, fairly low; and 3 and below are generally \

regarded as critically low and cause for immediate resuscitative efforts.")

pdf[['apgar_5min']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('Apgar score',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

```

## Step 4 - Create Model

**Steps used for model creation:**

1. Split cleaned data into Training and Test sets

2. Vectorize features on which the model will be trained

3. Create a linear regression model (Choose any ML algorithm that provides the best fit for the given dataset)

4. Train model (Although not shown here, you could use K-fold cross-validation and Grid Search to choose the best hyper-parameters for the model)

5. Evaluate model

```

# Define a function that collects the features of interest

# (mother_age, father_age, and gestation_weeks) into a vector.

# Package the vector in a tuple containing the label (`weight_pounds`) for that

# row.##

def vector_from_inputs(r):

return (r["weight_pnd"], Vectors.dense(float(r["mother_age"]),

float(r["father_age"]),

float(r["gstation_week"]),

float(r["weight_gain_pnd"]),

float(r["apgar_5min"])))

#Split that data 70% training and 30% Evaluation data

train, test = clean_data.randomSplit([0.7, 0.3])

#Check the shape of the data

train.show()

print((train.count(), len(train.columns)))

test.show()

print((test.count(), len(test.columns)))

# Create an input DataFrame for Spark ML using the above function.

training_data = train.rdd.map(vector_from_inputs).toDF(["label",

"features"])

# Construct a new LinearRegression object and fit the training data.

lr = LinearRegression(maxIter=5, regParam=0.2, solver="normal")

#Voila! your first model using Spark ML is trained

model = lr.fit(training_data)

# Print the model summary.

print("Coefficients:" + str(model.coefficients))

print("Intercept:" + str(model.intercept))

print("R^2:" + str(model.summary.r2))

model.summary.residuals.show()

```

### Evaluate Model

```

eval_data = test.rdd.map(vector_from_inputs).toDF(["label",

"features"])

eval_data.show()

evaluation_summary = model.evaluate(eval_data)

print("MAE:", evaluation_summary.meanAbsoluteError)

print("RMSE:", evaluation_summary.rootMeanSquaredError)

print("R-squared value:", evaluation_summary.r2)

```

## Step 5 - Batch Prediction

```

#eval_data contains the records (ideally production) that you'd like to use for the prediction

predictions = model.transform(eval_data)

predictions.show()

```

#### Compare the labels and the predictions, they should ideally match up for an accurate model. Label is the actual weight of the baby and prediction is the predicated weight

### Saving the Predictions to Aerospike for ML Application's consumption

```

# Aerospike is a key/value database, hence a key is needed to store the predictions into the database. Hence we need

# to add the _id column to the predictions using SparkSQL

predictions.createOrReplaceTempView("predict_view")

sql_query = """

SELECT *, monotonically_increasing_id() as _id

from predict_view

"""

predict_df = spark.sql(sql_query)

predict_df.show()

print("#records:", predict_df.count())

# Now we are good to write the Predictions to Aerospike

predict_df \

.write \

.mode('overwrite') \

.format("aerospike") \

.option("aerospike.writeset", "predictions")\

.option("aerospike.updateByKey", "_id") \

.save()

```

#### You can verify that data is written to Aerospike by using either [AQL](https://www.aerospike.com/docs/tools/aql/data_management.html) or the [Aerospike Data Browser](https://github.com/aerospike/aerospike-data-browser)

## Step 6 - Deploy

### Here are a few options:

1. Save the model to a PMML file by converting it using Jpmml/[pyspark2pmml](https://github.com/jpmml/pyspark2pmml) and load it into your production enviornment for inference.

2. Use Aerospike as an [edge database for high velocity ingestion](https://medium.com/aerospike-developer-blog/add-horsepower-to-ai-ml-pipeline-15ca42a10982) for your inference pipline.

|

github_jupyter

|

## Concurrency with asyncio

### Thread vs. coroutine

```

# spinner_thread.py

import threading

import itertools

import time

import sys

class Signal:

go = True

def spin(msg, signal):

write, flush = sys.stdout.write, sys.stdout.flush

for char in itertools.cycle('|/-\\'):

status = char + ' ' + msg

write(status)

flush()

write('\x08' * len(status))

time.sleep(.1)

if not signal.go:

break

write(' ' * len(status) + '\x08' * len(status))

def slow_function():

time.sleep(3)

return 42

def supervisor():

signal = Signal()

spinner = threading.Thread(target=spin, args=('thinking!', signal))

print('spinner object:', spinner)

spinner.start()

result = slow_function()

signal.go = False

spinner.join()

return result

def main():

result = supervisor()

print('Answer:', result)

if __name__ == '__main__':

main()

# spinner_asyncio.py

import asyncio

import itertools

import sys

@asyncio.coroutine

def spin(msg):

write, flush = sys.stdout.write, sys.stdout.flush

for char in itertools.cycle('|/-\\'):

status = char + ' ' + msg

write(status)

flush()

write('\x08' * len(status))

try:

yield from asyncio.sleep(.1)

except asyncio.CancelledError:

break

write(' ' * len(status) + '\x08' * len(status))

@asyncio.coroutine

def slow_function():

yield from asyncio.sleep(3)

return 42

@asyncio.coroutine

def supervisor():

#Schedule the execution of a coroutine object:

#wrap it in a future. Return a Task object.

spinner = asyncio.ensure_future(spin('thinking!'))

print('spinner object:', spinner)

result = yield from slow_function()

spinner.cancel()

return result

def main():

loop = asyncio.get_event_loop()

result = loop.run_until_complete(supervisor())

loop.close()

print('Answer:', result)

if __name__ == '__main__':

main()

# flags_asyncio.py

import asyncio

import aiohttp

from flags import BASE_URL, save_flag, show, main

@asyncio.coroutine

def get_flag(cc):

url = '{}/{cc}/{cc}.gif'.format(BASE_URL, cc=cc.lower())

resp = yield from aiohttp.request('GET', url)

image = yield from resp.read()

return image

@asyncio.coroutine

def download_one(cc):

image = yield from get_flag(cc)

show(cc)

save_flag(image, cc.lower() + '.gif')

return cc

def download_many(cc_list):

loop = asyncio.get_event_loop()

to_do = [download_one(cc) for cc in sorted(cc_list)]

wait_coro = asyncio.wait(to_do)

res, _ = loop.run_until_complete(wait_coro)

loop.close()

return len(res)

if __name__ == '__main__':

main(download_many)

# flags2_asyncio.py

import asyncio

import collections

import aiohttp

from aiohttp import web

import tqdm

from flags2_common import HTTPStatus, save_flag, Result, main

DEFAULT_CONCUR_REQ = 5

MAX_CONCUR_REQ = 1000

class FetchError(Exception):

def __init__(self, country_code):

self.country_code = country_code

@asyncio.coroutine

def get_flag(base_url, cc):

url = '{}/{cc}/{cc}.gif'.format(BASE_URL, cc=cc.lower())

resp = yield from aiohttp.ClientSession().get(url)

if resp.status == 200:

image = yield from resp.read()

return image

elif resp.status == 404:

raise web.HTTPNotFound()

else:

raise aiohttp.HttpProcessingError(

code=resp.status, message=resp.reason, headers=resp.headers)

@asyncio.coroutine

def download_one(cc, base_url, semaphore, verbose):

try:

with (yield from semaphore):

image = yield from get_flag(base_url, cc)

except web.HTTPNotFound:

status = HTTPStatus.not_found

msg = 'not found'

except Exception as exc:

raise FetchError(cc) from exc

else:

save_flag(image, cc.lower() + '.gif')

status = HTTPStatus.ok

msg = 'OK'

if verbose and msg:

print(cc, msg)

return Result(status, cc)

@asyncio.coroutine

def downloader_coro(cc_list, base_url, verbose, concur_req):

counter = collections.Counter()

semaphore = asyncio.Semaphore(concur_req)

to_do = [download_one(cc, base_url, semaphore, verbose)

for cc in sorted(cc_list)]

to_do_iter = asyncio.as_completed(to_do)

if not verbose:

to_do_iter = tqdm.tqdm(to_do_iter, total=len(cc_list))

for future in to_do_iter:

try:

res = yield from future

except FetchError as exc:

country_code = exc.country_code

try:

error_msg = exc.__cause__.args[0]

except IndexError:

error_msg = exc.__cause__.__class__.__name__

if verbose and error_msg:

msg = '*** Error for {}: {}'

print(msg.format(country_code, error_msg))

status = HTTPStatus.error

else:

status = res.status

counter[status] += 1

return counter

def download_many(cc_list, base_url, verbose, concur_req):

loop = asyncio.get_event_loop()

coro = download_coro(cc_list, base_url, verbose, concur_req)

counts = loop.run_until_complete(wait_coro)

loop.close()

return counts

if __name__ == '__main__':

main(download_many, DEFAULT_CONCUR_REQ, MAX_CONCUR_REQ)

# run_in_executor

@asyncio.coroutine

def download_one(cc, base_url, semaphore, verbose):

try:

with (yield from semaphore):

image = yield from get_flag(base_url, cc)

except web.HTTPNotFound:

status = HTTPStatus.not_found

msg = 'not found'

except Exception as exc:

raise FetchError(cc) from exc

else:

# save_flag 也是阻塞操作,所以使用run_in_executor调用save_flag进行

# 异步操作

loop = asyncio.get_event_loop()

loop.run_in_executor(None, save_flag, image, cc.lower() + '.gif')

status = HTTPStatus.ok

msg = 'OK'

if verbose and msg:

print(cc, msg)

return Result(status, cc)

## Doing multiple requests for each download

# flags3_asyncio.py

@asyncio.coroutine

def http_get(url):

res = yield from aiohttp.request('GET', url)

if res.status == 200:

ctype = res.headers.get('Content-type', '').lower()

if 'json' in ctype or url.endswith('json'):

data = yield from res.json()

else:

data = yield from res.read()

elif res.status == 404:

raise web.HTTPNotFound()

else:

raise aiohttp.errors.HttpProcessingError(

code=res.status, message=res.reason,

headers=res.headers)

@asyncio.coroutine

def get_country(base_url, cc):

url = '{}/{cc}/metadata.json'.format(base_url, cc=cc.lower())

metadata = yield from http_get(url)

return metadata['country']

@asyncio.coroutine

def get_flag(base_url, cc):

url = '{}/{cc}/{cc}.gif'.format(base_url, cc=cc.lower())

return (yield from http_get(url))

@asyncio.coroutine

def download_one(cc, base_url, semaphore, verbose):

try:

with (yield from semaphore):

image = yield from get_flag(base_url, cc)

with (yield from semaphore):

country = yield from get_country(base_url, cc)

except web.HTTPNotFound:

status = HTTPStatus.not_found

msg = 'not found'

except Exception as exc:

raise FetchError(cc) from exc

else:

country = country.replace(' ', '_')

filename = '{}-{}.gif'.format(country, cc)

loop = asyncio.get_event_loop()

loop.run_in_executor(None, save_flag, image, filename)

status = HTTPStatus.ok

msg = 'OK'

if verbose and msg:

print(cc, msg)

return Result(status, cc)

```

### Writing asyncio servers

```

# tcp_charfinder.py

import sys

import asyncio

from charfinder import UnicodeNameIndex

CRLF = b'\r\n'

PROMPT = b'?>'

index = UnicodeNameIndex()

@asyncio.coroutine

def handle_queries(reader, writer):

while True:

writer.write(PROMPT)

yield from writer.drain()

data = yield from reader.readline()

try:

query = data.decode().strip()

except UnicodeDecodeError:

query = '\x00'

client = writer.get_extra_info('peername')

print('Received from {}: {!r}'.format(client, query))

if query:

if ord(query[:1]) < 32:

break

lines = list(index.find_description_strs(query))

if lines:

writer.writelines(line.encode() + CRLF for line in lines)

writer.write(index.status(query, len(lines)).encode() + CRLF)

yield from writer.drain()

print('Sent {} results'.format(len(lines)))

print('Close the client socket')

writer.close()

def main(address='127.0.0.1', port=2323):

port = int(port)

loop = asyncio.get_event_loop()

server_coro = asyncio.start_server(handle_queries, address, port, loop=loop)

server = loop.run_until_complete(server_coro)

host = server.sockets[0].getsockname()

print('Serving on {}. Hit CTRL-C to stop.'.format(host))

try:

loop.run_forever()

except KeyboardInterrupt:

pass

print('Server shutting down.')

server.close()

loop.run_until_complete(server.wait_closed())

loop.close()

if __name__ == '__main__':

main()

# http_charfinder.py

@asyncio.coroutine

def init(loop, address, port):

app = web.Application(loop=loop)

app.router.add_route('GET', '/', home)

handler = app.make_handler()

server = yield from loop.create_server(handler, address, port)

return server.sockets[0].getsockname()

def home(request):

query = request.GET.get('query', '').strip()

print('Query: {!r}'.format(query))

if query:

descriptions = list(index.find_descriptions(query))

res = '\n'.join(ROW_TPL.format(**vars(descr))

for descr in descriptions)

msg = index.status(query, len(descriptions))

else:

descriptions = []

res = ''

msg = 'Enter words describing characters.'

html = template.format(query=query, result=res, message=msg)

print('Sending {} results'.format(len(descriptions)))

return web.Response(content_type=CONTENT_TYPE, text=html)

def main(address='127.0.0.1', port=8888):

port = int(port)

loop = asyncio.get_event_loop()

host = loop.run_until_complete(init(loop, address, port))

print('Serving on {}. Hit CTRL-C to stop.'.format(host))

try:

loop.run_forever()

except KeyboardInterrupt: # CTRL+C pressed

pass

print('Server shutting down.')

loop.close()

if __name__ == '__main__':

main(*sys.argv[1:])

```

|

github_jupyter

|

## Problem 1

---

#### The solution should try to use all the python constructs

- Conditionals and Loops

- Functions

- Classes

#### and datastructures as possible

- List

- Tuple

- Dictionary

- Set

### Problem

---

Moist has a hobby -- collecting figure skating trading cards. His card collection has been growing, and it is now too large to keep in one disorganized pile. Moist needs to sort the cards in alphabetical order, so that he can find the cards that he wants on short notice whenever it is necessary.

The problem is -- Moist can't actually pick up the cards because they keep sliding out his hands, and the sweat causes permanent damage. Some of the cards are rather expensive, mind you. To facilitate the sorting, Moist has convinced Dr. Horrible to build him a sorting robot. However, in his rather horrible style, Dr. Horrible has decided to make the sorting robot charge Moist a fee of $1 whenever it has to move a trading card during the sorting process.

Moist has figured out that the robot's sorting mechanism is very primitive. It scans the deck of cards from top to bottom. Whenever it finds a card that is lexicographically smaller than the previous card, it moves that card to its correct place in the stack above. This operation costs $1, and the robot resumes scanning down towards the bottom of the deck, moving cards one by one until the entire deck is sorted in lexicographical order from top to bottom.

As wet luck would have it, Moist is almost broke, but keeping his trading cards in order is the only remaining joy in his miserable life. He needs to know how much it would cost him to use the robot to sort his deck of cards.

Input

The first line of the input gives the number of test cases, **T**. **T** test cases follow. Each one starts with a line containing a single integer, **N**. The next **N** lines each contain the name of a figure skater, in order from the top of the deck to the bottom.

Output

For each test case, output one line containing "Case #x: y", where x is the case number (starting from 1) and y is the number of dollars it would cost Moist to use the robot to sort his deck of trading cards.

Limits

1 ≤ **T** ≤ 100.

Each name will consist of only letters and the space character.

Each name will contain at most 100 characters.

No name with start or end with a space.

No name will appear more than once in the same test case.

Lexicographically, the space character comes first, then come the upper case letters, then the lower case letters.

Small dataset

1 ≤ N ≤ 10.

Large dataset

1 ≤ N ≤ 100.

Sample

| Input | Output |

|---------------------|-------------|

| 2 | Case \#1: 1 |

| 2 | Case \#2: 0 |

| Oksana Baiul | |

| Michelle Kwan | |

| 3 | |

| Elvis Stojko | |

| Evgeni Plushenko | |

| Kristi Yamaguchi | |

*Note: Solution is not important but procedure taken to solve the problem is important*

|

github_jupyter

|

# Classification on Iris dataset with sklearn and DJL

In this notebook, you will try to use a pre-trained sklearn model to run on DJL for a general classification task. The model was trained with [Iris flower dataset](https://en.wikipedia.org/wiki/Iris_flower_data_set).

## Background

### Iris Dataset

The dataset contains a set of 150 records under five attributes - sepal length, sepal width, petal length, petal width and species.

Iris setosa | Iris versicolor | Iris virginica

:-------------------------:|:-------------------------:|:-------------------------:

|  |

The chart above shows three different kinds of the Iris flowers.

We will use sepal length, sepal width, petal length, petal width as the feature and species as the label to train the model.

### Sklearn Model

You can find more information [here](http://onnx.ai/sklearn-onnx/). You can use the sklearn built-in iris dataset to load the data. Then we defined a [RandomForestClassifer](https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html) to train the model. After that, we convert the model to onnx format for DJL to run inference. The following code is a sample classification setup using sklearn:

```python

# Train a model.

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

iris = load_iris()

X, y = iris.data, iris.target

X_train, X_test, y_train, y_test = train_test_split(X, y)

clr = RandomForestClassifier()

clr.fit(X_train, y_train)

```

## Preparation

This tutorial requires the installation of Java Kernel. To install the Java Kernel, see the [README](https://github.com/awslabs/djl/blob/master/jupyter/README.md).

These are dependencies we will use. To enhance the NDArray operation capability, we are importing ONNX Runtime and PyTorch Engine at the same time. Please find more information [here](https://github.com/awslabs/djl/blob/master/docs/onnxruntime/hybrid_engine.md#hybrid-engine-for-onnx-runtime).

```

// %mavenRepo snapshots https://oss.sonatype.org/content/repositories/snapshots/

%maven ai.djl:api:0.8.0

%maven ai.djl.onnxruntime:onnxruntime-engine:0.8.0

%maven ai.djl.pytorch:pytorch-engine:0.8.0

%maven org.slf4j:slf4j-api:1.7.26

%maven org.slf4j:slf4j-simple:1.7.26

%maven com.microsoft.onnxruntime:onnxruntime:1.4.0

%maven ai.djl.pytorch:pytorch-native-auto:1.6.0

import ai.djl.inference.*;

import ai.djl.modality.*;

import ai.djl.ndarray.*;

import ai.djl.ndarray.types.*;

import ai.djl.repository.zoo.*;

import ai.djl.translate.*;

import java.util.*;

```

## Step 1 create a Translator

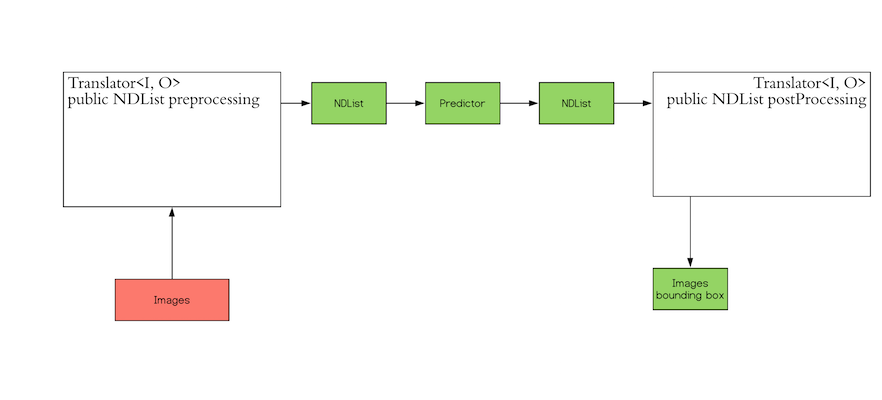

Inference in machine learning is the process of predicting the output for a given input based on a pre-defined model.

DJL abstracts away the whole process for ease of use. It can load the model, perform inference on the input, and provide

output. DJL also allows you to provide user-defined inputs. The workflow looks like the following:

The `Translator` interface encompasses the two white blocks: Pre-processing and Post-processing. The pre-processing

component converts the user-defined input objects into an NDList, so that the `Predictor` in DJL can understand the

input and make its prediction. Similarly, the post-processing block receives an NDList as the output from the

`Predictor`. The post-processing block allows you to convert the output from the `Predictor` to the desired output

format.

In our use case, we use a class namely `IrisFlower` as our input class type. We will use [`Classifications`](https://javadoc.io/doc/ai.djl/api/latest/ai/djl/modality/Classifications.html) as our output class type.

```

public static class IrisFlower {

public float sepalLength;

public float sepalWidth;

public float petalLength;

public float petalWidth;

public IrisFlower(float sepalLength, float sepalWidth, float petalLength, float petalWidth) {

this.sepalLength = sepalLength;

this.sepalWidth = sepalWidth;

this.petalLength = petalLength;

this.petalWidth = petalWidth;

}

}

```

Let's create a translator

```

public static class MyTranslator implements Translator<IrisFlower, Classifications> {

private final List<String> synset;

public MyTranslator() {

// species name

synset = Arrays.asList("setosa", "versicolor", "virginica");

}

@Override

public NDList processInput(TranslatorContext ctx, IrisFlower input) {

float[] data = {input.sepalLength, input.sepalWidth, input.petalLength, input.petalWidth};

NDArray array = ctx.getNDManager().create(data, new Shape(1, 4));

return new NDList(array);

}

@Override

public Classifications processOutput(TranslatorContext ctx, NDList list) {

return new Classifications(synset, list.get(1));

}

@Override

public Batchifier getBatchifier() {

return null;

}

}

```

## Step 2 Prepare your model

We will load a pretrained sklearn model into DJL. We defined a [`ModelZoo`](https://javadoc.io/doc/ai.djl/api/latest/ai/djl/repository/zoo/ModelZoo.html) concept to allow user load model from varity of locations, such as remote URL, local files or DJL pretrained model zoo. We need to define `Criteria` class to help the modelzoo locate the model and attach translator. In this example, we download a compressed ONNX model from S3.

```

String modelUrl = "https://mlrepo.djl.ai/model/tabular/random_forest/ai/djl/onnxruntime/iris_flowers/0.0.1/iris_flowers.zip";

Criteria<IrisFlower, Classifications> criteria = Criteria.builder()

.setTypes(IrisFlower.class, Classifications.class)

.optModelUrls(modelUrl)

.optTranslator(new MyTranslator())

.optEngine("OnnxRuntime") // use OnnxRuntime engine by default

.build();

ZooModel<IrisFlower, Classifications> model = ModelZoo.loadModel(criteria);

```

## Step 3 Run inference

User will just need to create a `Predictor` from model to run the inference.

```

Predictor<IrisFlower, Classifications> predictor = model.newPredictor();

IrisFlower info = new IrisFlower(1.0f, 2.0f, 3.0f, 4.0f);

predictor.predict(info);

```

|

github_jupyter

|

<table class="ee-notebook-buttons" align="left">

<td><a target="_blank" href="https://github.com/giswqs/earthengine-py-notebooks/tree/master/Algorithms/landsat_radiance.ipynb"><img width=32px src="https://www.tensorflow.org/images/GitHub-Mark-32px.png" /> View source on GitHub</a></td>

<td><a target="_blank" href="https://nbviewer.jupyter.org/github/giswqs/earthengine-py-notebooks/blob/master/Algorithms/landsat_radiance.ipynb"><img width=26px src="https://upload.wikimedia.org/wikipedia/commons/thumb/3/38/Jupyter_logo.svg/883px-Jupyter_logo.svg.png" />Notebook Viewer</a></td>

<td><a target="_blank" href="https://colab.research.google.com/github/giswqs/earthengine-py-notebooks/blob/master/Algorithms/landsat_radiance.ipynb"><img src="https://www.tensorflow.org/images/colab_logo_32px.png" /> Run in Google Colab</a></td>

</table>

## Install Earth Engine API and geemap

Install the [Earth Engine Python API](https://developers.google.com/earth-engine/python_install) and [geemap](https://geemap.org). The **geemap** Python package is built upon the [ipyleaflet](https://github.com/jupyter-widgets/ipyleaflet) and [folium](https://github.com/python-visualization/folium) packages and implements several methods for interacting with Earth Engine data layers, such as `Map.addLayer()`, `Map.setCenter()`, and `Map.centerObject()`.

The following script checks if the geemap package has been installed. If not, it will install geemap, which automatically installs its [dependencies](https://github.com/giswqs/geemap#dependencies), including earthengine-api, folium, and ipyleaflet.

```

# Installs geemap package

import subprocess

try:

import geemap

except ImportError:

print('Installing geemap ...')

subprocess.check_call(["python", '-m', 'pip', 'install', 'geemap'])

import ee

import geemap

```

## Create an interactive map

The default basemap is `Google Maps`. [Additional basemaps](https://github.com/giswqs/geemap/blob/master/geemap/basemaps.py) can be added using the `Map.add_basemap()` function.

```

Map = geemap.Map(center=[40,-100], zoom=4)

Map

```

## Add Earth Engine Python script

```

# Add Earth Engine dataset

# Load a raw Landsat scene and display it.

raw = ee.Image('LANDSAT/LC08/C01/T1/LC08_044034_20140318')

Map.centerObject(raw, 10)

Map.addLayer(raw, {'bands': ['B4', 'B3', 'B2'], 'min': 6000, 'max': 12000}, 'raw')

# Convert the raw data to radiance.

radiance = ee.Algorithms.Landsat.calibratedRadiance(raw)

Map.addLayer(radiance, {'bands': ['B4', 'B3', 'B2'], 'max': 90}, 'radiance')

# Convert the raw data to top-of-atmosphere reflectance.

toa = ee.Algorithms.Landsat.TOA(raw)

Map.addLayer(toa, {'bands': ['B4', 'B3', 'B2'], 'max': 0.2}, 'toa reflectance')

```

## Display Earth Engine data layers

```

Map.addLayerControl() # This line is not needed for ipyleaflet-based Map.

Map

```

|

github_jupyter

|

```

%cd /Users/Kunal/Projects/TCH_CardiacSignals_F20/

from numpy.random import seed

seed(1)

import numpy as np

import os

import matplotlib.pyplot as plt

import tensorflow

tensorflow.random.set_seed(2)

from tensorflow import keras

from tensorflow.keras.callbacks import EarlyStopping

from tensorflow.keras.regularizers import l1, l2

from tensorflow.keras.layers import Dense, Flatten, Reshape, Input, InputLayer, Dropout, Conv1D, MaxPooling1D, BatchNormalization, UpSampling1D, Conv1DTranspose

from tensorflow.keras.models import Sequential, Model

from src.preprocess.dim_reduce.patient_split import *

from src.preprocess.heartbeat_split import heartbeat_split

from sklearn.model_selection import train_test_split

data = np.load("Working_Data/Training_Subset/Normalized/ten_hbs/Normalized_Fixed_Dim_HBs_Idx" + str(1) + ".npy")

data.shape

def read_in(file_index, normalized, train, ratio):

"""

Reads in a file and can toggle between normalized and original files

:param file_index: patient number as string

:param normalized: binary that determines whether the files should be normalized or not

:param train: int that determines whether or not we are reading in data to train the model or for encoding

:param ratio: ratio to split the files into train and test

:return: returns npy array of patient data across 4 leads

"""

# filepath = os.path.join("Working_Data", "Normalized_Fixed_Dim_HBs_Idx" + file_index + ".npy")

# filepath = os.path.join("Working_Data", "1000d", "Normalized_Fixed_Dim_HBs_Idx35.npy")

filepath = "Working_Data/Training_Subset/Normalized/ten_hbs/Normalized_Fixed_Dim_HBs_Idx" + str(file_index) + ".npy"

if normalized == 1:

if train == 1:

normal_train, normal_test, abnormal = patient_split_train(filepath, ratio)

# noise_factor = 0.5

# noise_train = normal_train + noise_factor * np.random.normal(loc=0.0, scale=1.0, size=normal_train.shape)

return normal_train, normal_test

elif train == 0:

training, test, full = patient_split_all(filepath, ratio)

return training, test, full

elif train == 2:

train_, test, full = patient_split_all(filepath, ratio)

# 4x the data

noise_factor = 0.5

noise_train = train_ + noise_factor * np.random.normal(loc=0.0, scale=1.0, size=train_.shape)

noise_train2 = train_ + noise_factor * np.random.normal(loc=0.0, scale=1.0, size=train_.shape)

noise_train3 = train_ + noise_factor * np.random.normal(loc=0.0, scale=1.0, size=train_.shape)

train_ = np.concatenate((train_, noise_train, noise_train2, noise_train3))

return train_, test, full

else:

data = np.load(os.path.join("Working_Data", "Fixed_Dim_HBs_Idx" + file_index + ".npy"))

return data

def build_model(sig_shape, encode_size):

"""

Builds a deterministic autoencoder model, returning both the encoder and decoder models

:param sig_shape: shape of input signal

:param encode_size: dimension that we want to reduce to

:return: encoder, decoder models

"""

# encoder = Sequential()

# encoder.add(InputLayer((1000,4)))

# # idk if causal is really making that much of an impact but it seems useful for time series data?

# encoder.add(Conv1D(10, 11, activation="linear", padding="causal"))

# encoder.add(Conv1D(10, 5, activation="relu", padding="causal"))

# # encoder.add(Conv1D(10, 3, activation="relu", padding="same"))

# encoder.add(Flatten())

# encoder.add(Dense(750, activation = 'tanh', kernel_initializer='glorot_normal')) #tanh

# encoder.add(Dense(500, activation='relu', kernel_initializer='glorot_normal'))

# encoder.add(Dense(400, activation = 'relu', kernel_initializer='glorot_normal'))

# encoder.add(Dense(300, activation='relu', kernel_initializer='glorot_normal'))

# encoder.add(Dense(200, activation = 'relu', kernel_initializer='glorot_normal')) #relu

# encoder.add(Dense(encode_size))

encoder = Sequential()

encoder.add(InputLayer((1000,4)))

encoder.add(Conv1D(3, 11, activation="tanh", padding="same"))

encoder.add(Conv1D(5, 7, activation="relu", padding="same"))

encoder.add(MaxPooling1D(2))

encoder.add(Conv1D(5, 5, activation="tanh", padding="same"))

encoder.add(Conv1D(7, 3, activation="tanh", padding="same"))

encoder.add(MaxPooling1D(2))

encoder.add(Flatten())

encoder.add(Dense(750, activation = 'tanh', kernel_initializer='glorot_normal'))

# encoder.add(Dense(500, activation='relu', kernel_initializer='glorot_normal'))

encoder.add(Dense(400, activation = 'tanh', kernel_initializer='glorot_normal'))

# encoder.add(Dense(300, activation='relu', kernel_initializer='glorot_normal'))

encoder.add(Dense(200, activation = 'tanh', kernel_initializer='glorot_normal'))

encoder.add(Dense(encode_size))

# encoder.summary()

####################################################################################################################

# Build the decoder

# decoder = Sequential()

# decoder.add(InputLayer((latent_dim,)))

# decoder.add(Dense(200, activation='tanh', kernel_initializer='glorot_normal'))

# decoder.add(Dense(300, activation='relu', kernel_initializer='glorot_normal'))

# decoder.add(Dense(400, activation='relu', kernel_initializer='glorot_normal'))

# decoder.add(Dense(500, activation='relu', kernel_initializer='glorot_normal'))

# decoder.add(Dense(750, activation='relu', kernel_initializer='glorot_normal'))

# decoder.add(Dense(10000, activation='relu', kernel_initializer='glorot_normal'))

# decoder.add(Reshape((1000, 10)))

# decoder.add(Conv1DTranspose(4, 7, activation="relu", padding="same"))

decoder = Sequential()

decoder.add(InputLayer((encode_size,)))

decoder.add(Dense(200, activation='tanh', kernel_initializer='glorot_normal'))

# decoder.add(Dense(300, activation='relu', kernel_initializer='glorot_normal'))

decoder.add(Dense(400, activation='tanh', kernel_initializer='glorot_normal'))

# decoder.add(Dense(500, activation='relu', kernel_initializer='glorot_normal'))

decoder.add(Dense(750, activation='tanh', kernel_initializer='glorot_normal'))

decoder.add(Dense(10000, activation='tanh', kernel_initializer='glorot_normal'))

decoder.add(Reshape((1000, 10)))

# decoder.add(Conv1DTranspose(8, 3, activation="relu", padding="same"))

decoder.add(Conv1DTranspose(8, 11, activation="relu", padding="same"))

decoder.add(Conv1DTranspose(4, 5, activation="linear", padding="same"))

return encoder, decoder

def training_ae(num_epochs, reduced_dim, file_index):

"""

Training function for deterministic autoencoder model, saves the encoded and reconstructed arrays

:param num_epochs: number of epochs to use

:param reduced_dim: goal dimension

:param file_index: patient number

:return: None

"""

normal, abnormal, all = read_in(file_index, 1, 2, 0.3)

normal_train, normal_valid = train_test_split(normal, train_size=0.85, random_state=1)

# normal_train = normal[:round(len(normal)*.85),:]

# normal_valid = normal[round(len(normal)*.85):,:]

signal_shape = normal.shape[1:]

batch_size = round(len(normal) * 0.1)

encoder, decoder = build_model(signal_shape, reduced_dim)

inp = Input(signal_shape)

encode = encoder(inp)

reconstruction = decoder(encode)

autoencoder = Model(inp, reconstruction)

opt = keras.optimizers.Adam(learning_rate=0.0001) #0.0008

autoencoder.compile(optimizer=opt, loss='mse')

early_stopping = EarlyStopping(patience=10, min_delta=0.001, mode='min')

autoencoder = autoencoder.fit(x=normal_train, y=normal_train, epochs=num_epochs, validation_data=(normal_valid, normal_valid), batch_size=batch_size, callbacks=early_stopping)

plt.plot(autoencoder.history['loss'])

plt.plot(autoencoder.history['val_loss'])

plt.title('model loss patient' + str(file_index))

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'validation'], loc='upper left')

plt.show()

# using AE to encode other data

encoded = encoder.predict(all)

reconstruction = decoder.predict(encoded)

# save reconstruction, encoded, and input if needed

# reconstruction_save = os.path.join("Working_Data", "reconstructed_ae_" + str(reduced_dim) + "d_Idx" + str(file_index) + ".npy")

# encoded_save = os.path.join("Working_Data", "reduced_ae_" + str(reduced_dim) + "d_Idx" + str(file_index) + ".npy")

reconstruction_save = "Working_Data/Training_Subset/Model_Output/reconstructed_10hb_cae_" + str(file_index) + ".npy"

encoded_save = "Working_Data/Training_Subset/Model_Output/encoded_10hb_cae_" + str(file_index) + ".npy"

np.save(reconstruction_save, reconstruction)

np.save(encoded_save,encoded)

# if training and need to save test split for MSE calculation

# input_save = os.path.join("Working_Data","1000d", "original_data_test_ae" + str(100) + "d_Idx" + str(35) + ".npy")

# np.save(input_save, test)

def run(num_epochs, encoded_dim):

"""

Run training autoencoder over all dims in list

:param num_epochs: number of epochs to train for

:param encoded_dim: dimension to run on

:return None, saves arrays for reconstructed and dim reduced arrays

"""

for patient_ in [1,16,4,11]: #heartbeat_split.indicies:

print("Starting on index: " + str(patient_))

training_ae(num_epochs, encoded_dim, patient_)

print("Completed " + str(patient_) + " reconstruction and encoding, saved test data to assess performance")

#################### Training to be done for 100 epochs for all dimensions ############################################

run(100, 100)

# run(100,100)

def mean_squared_error(reduced_dimensions, model_name, patient_num, save_errors=False):

"""

Computes the mean squared error of the reconstructed signal against the original signal for each lead for each of the patient_num

Each signal's dimensions are reduced from 100 to 'reduced_dimensions', then reconstructed to obtain the reconstructed signal

:param reduced_dimensions: number of dimensions the file was originally reduced to

:param model_name: "lstm, vae, ae, pca, test"

:return: dictionary of patient_index -> length n array of MSE for each heartbeat (i.e. MSE of 100x4 arrays)

"""

print("calculating mse for file index {} on the reconstructed {} model".format(patient_num, model_name))

original_signals = np.load(

os.path.join("Working_Data", "Training_Subset", "Normalized", "ten_hbs", "Normalized_Fixed_Dim_HBs_Idx{}.npy".format(str(patient_num))))

print("original normalized signal")

# print(original_signals[0, :,:])

# print(np.mean(original_signals[0,:,:]))

# print(np.var(original_signals[0, :, :]))

# print(np.linalg.norm(original_signals[0,:,:]))

# print([np.linalg.norm(i) for i in original_signals[0,:,:].flatten()])

reconstructed_signals = np.load(os.path.join("Working_Data","Training_Subset", "Model_Output",

"reconstructed_10hb_cae_{}.npy").format(str(patient_num)))

# compute mean squared error for each heartbeat

# mse = (np.square(original_signals - reconstructed_signals) / (np.linalg.norm(original_signals))).mean(axis=1).mean(axis=1)

# mse = (np.square(original_signals - reconstructed_signals) / (np.square(original_signals) + np.square(reconstructed_signals))).mean(axis=1).mean(axis=1)

mse = np.zeros(np.shape(original_signals)[0])

for i in range(np.shape(original_signals)[0]):

mse[i] = (np.linalg.norm(original_signals[i,:,:] - reconstructed_signals[i,:,:]) ** 2) / (np.linalg.norm(original_signals[i,:,:]) ** 2)

# orig_flat = original_signals[i,:,:].flatten()

# recon_flat = reconstructed_signals[i,:,:].flatten()

# mse[i] = sklearn_mse(orig_flat, recon_flat)

# my_mse = mse[i]

# plt.plot([i for i in range(np.shape(mse)[0])], mse)

# plt.show()

if save_errors:

np.save(

os.path.join("Working_Data", "{}_errors_{}d_Idx{}.npy".format(model_name, reduced_dimensions, patient_num)), mse)

# print(list(mse))

# return np.array([err for err in mse if 1 == 1 and err < 5 and 0 == 0 and 3 < 4])

return mse

def windowed_mse_over_time(patient_num, model_name, dimension_num):

errors = mean_squared_error(dimension_num, model_name, patient_num, False)

# window the errors - assume 500 samples ~ 5 min

window_duration = 250

windowed_errors = []

for i in range(0, len(errors) - window_duration, window_duration):

windowed_errors.append(np.mean(errors[i:i+window_duration]))

sample_idcs = [i for i in range(len(windowed_errors))]

print(windowed_errors)

plt.plot(sample_idcs, windowed_errors)

plt.title("5-min Windowed MSE" + str(patient_num))

plt.xlabel("Window Index")

plt.ylabel("Relative MSE")

plt.show()

# np.save(f"Working_Data/windowed_mse_{dimension_num}d_Idx{patient_num}.npy", windowed_errors)

windowed_mse_over_time(1,"abc",10)

```

|

github_jupyter

|

# basic operation on image

```

import cv2

import numpy as np

impath = r"D:/Study/example_ml/computer_vision_example/cv_exercise/opencv-master/samples/data/messi5.jpg"

img = cv2.imread(impath)

print(img.shape)

print(img.size)

print(img.dtype)

b,g,r = cv2.split(img)

img = cv2.merge((b,g,r))

cv2.imshow("image",img)

cv2.waitKey(0)

cv2.destroyAllWindows()

```

# copy and paste

```

import cv2

import numpy as np

impath = r"D:/Study/example_ml/computer_vision_example/cv_exercise/opencv-master/samples/data/messi5.jpg"

img = cv2.imread(impath)

'''b,g,r = cv2.split(img)

img = cv2.merge((b,g,r))'''

ball = img[280:340,330:390]

img[273:333,100:160] = ball

cv2.imshow("image",img)

cv2.waitKey(0)

cv2.destroyAllWindows()

```

# merge two imge

```

import cv2

import numpy as np

impath = r"D:/Study/example_ml/computer_vision_example/cv_exercise/opencv-master/samples/data/messi5.jpg"

impath1 = r"D:/Study/example_ml/computer_vision_example/cv_exercise/opencv-master/samples/data/opencv-logo.png"

img = cv2.imread(impath)

img1 = cv2.imread(impath1)

img = cv2.resize(img, (512,512))

img1 = cv2.resize(img1, (512,512))

#new_img = cv2.add(img,img1)

new_img = cv2.addWeighted(img,0.1,img1,0.8,1)

cv2.imshow("new_image",new_img)

cv2.waitKey(0)

cv2.destroyAllWindows()

```

# bitwise operation

```

import cv2

import numpy as np

img1 = np.zeros([250,500,3],np.uint8)

img1 = cv2.rectangle(img1,(200,0),(300,100),(255,255,255),-1)

img2 = np.full((250, 500, 3), 255, dtype=np.uint8)

img2 = cv2.rectangle(img2, (0, 0), (250, 250), (0, 0, 0), -1)

#bit_and = cv2.bitwise_and(img2,img1)

#bit_or = cv2.bitwise_or(img2,img1)

#bit_xor = cv2.bitwise_xor(img2,img1)

bit_not = cv2.bitwise_not(img2)

#cv2.imshow("bit_and",bit_and)

#cv2.imshow("bit_or",bit_or)

#cv2.imshow("bit_xor",bit_xor)

cv2.imshow("bit_not",bit_not)

cv2.imshow("img1",img1)

cv2.imshow("img2",img2)

cv2.waitKey(0)

cv2.destroyAllWindows()

```

# simple thresholding

#### THRESH_BINARY

```

import cv2

import numpy as np

img = cv2.imread('gradient.jpg',0)

_,th1 = cv2.threshold(img,127,255,cv2.THRESH_BINARY) #check every pixel with 127

cv2.imshow("img",img)

cv2.imshow("th1",th1)

cv2.waitKey(0)

cv2.destroyAllWindows()

```

#### THRESH_BINARY_INV

```

import cv2

import numpy as np

img = cv2.imread('gradient.jpg',0)

_,th1 = cv2.threshold(img,127,255,cv2.THRESH_BINARY)

_,th2 = cv2.threshold(img,127,255,cv2.THRESH_BINARY_INV) #check every pixel with 127

cv2.imshow("img",img)

cv2.imshow("th1",th1)

cv2.imshow("th2",th2)

cv2.waitKey(0)

cv2.destroyAllWindows()

```

#### THRESH_TRUNC

```

import cv2

import numpy as np

img = cv2.imread('gradient.jpg',0)

_,th1 = cv2.threshold(img,127,255,cv2.THRESH_BINARY)

_,th2 = cv2.threshold(img,255,255,cv2.THRESH_TRUNC) #check every pixel with 127

cv2.imshow("img",img)

cv2.imshow("th1",th1)

cv2.imshow("th2",th2)

cv2.waitKey(0)

cv2.destroyAllWindows()

```

#### THRESH_TOZERO

```

import cv2

import numpy as np

img = cv2.imread('gradient.jpg',0)

_,th1 = cv2.threshold(img,127,255,cv2.THRESH_BINARY)

_,th2 = cv2.threshold(img,127,255,cv2.THRESH_TOZERO) #check every pixel with 127

_,th3 = cv2.threshold(img,127,255,cv2.THRESH_TOZERO_INV) #check every pixel with 127

cv2.imshow("img",img)

cv2.imshow("th1",th1)

cv2.imshow("th2",th2)

cv2.imshow("th3",th3)

cv2.waitKey(0)

cv2.destroyAllWindows()

```

# Adaptive Thresholding

##### it will calculate the threshold for smaller region of iamge .so we get different thresholding value for different region of same image

```

import cv2

import numpy as np

img = cv2.imread('sudoku1.jpg')

img = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

_,th1 = cv2.threshold(img,127,255,cv2.THRESH_BINARY)

th2 = cv2.adaptiveThreshold(img,255,cv2.ADAPTIVE_THRESH_MEAN_C,

cv2.THRESH_BINARY,11,2)

th3 = cv2.adaptiveThreshold(img,255,cv2.ADAPTIVE_THRESH_GAUSSIAN_C,

cv2.THRESH_BINARY,11,2)

cv2.imshow("img",img)

cv2.imshow("THRESH_BINARY",th1)

cv2.imshow("ADAPTIVE_THRESH_MEAN_C",th2)

cv2.imshow("ADAPTIVE_THRESH_GAUSSIAN_C",th3)

cv2.waitKey(0)

cv2.destroyAllWindows()

```

# Morphological Transformations

#### Morphological Transformations are some simple operation based on the image shape. Morphological Transformations are normally performed on binary images.

#### A kernal tells you how to change the value of any given pixel by combining it with different amounts of the neighbouring pixels.

```

import cv2

%matplotlib notebook

%matplotlib inline

from matplotlib import pyplot as plt

img = cv2.imread("hsv_ball.jpg",cv2.IMREAD_GRAYSCALE)

_,mask = cv2.threshold(img, 220,255,cv2.THRESH_BINARY_INV)

titles = ['images',"mask"]

images = [img,mask]

for i in range(2):

plt.subplot(1,2,i+1)

plt.imshow(images[i],"gray")

plt.title(titles[i])

plt.show()

```

### Morphological Transformations using erosion

```

import cv2

import numpy as np

%matplotlib notebook

%matplotlib inline

from matplotlib import pyplot as plt

img = cv2.imread("hsv_ball.jpg",cv2.IMREAD_GRAYSCALE)

_,mask = cv2.threshold(img, 220,255,cv2.THRESH_BINARY_INV)

kernal = np.ones((2,2),np.uint8)

dilation = cv2.dilate(mask,kernal,iterations = 3)

erosion = cv2.erode(mask,kernal,iterations=1)

titles = ['images',"mask","dilation","erosion"]

images = [img,mask,dilation,erosion]

for i in range(len(titles)):

plt.subplot(2,2,i+1)

plt.imshow(images[i],"gray")

plt.title(titles[i])

plt.show()

```

#### Morphological Transformations using opening morphological operation

##### morphologyEx . Will use erosion operation first then dilation on the image

```

import cv2

import numpy as np

%matplotlib notebook

%matplotlib inline

from matplotlib import pyplot as plt

img = cv2.imread("hsv_ball.jpg",cv2.IMREAD_GRAYSCALE)

_,mask = cv2.threshold(img, 220,255,cv2.THRESH_BINARY_INV)

kernal = np.ones((5,5),np.uint8)

dilation = cv2.dilate(mask,kernal,iterations = 3)

erosion = cv2.erode(mask,kernal,iterations=1)

opening = cv2.morphologyEx(mask,cv2.MORPH_OPEN,kernal)

titles = ['images',"mask","dilation","erosion","opening"]

images = [img,mask,dilation,erosion,opening]

for i in range(len(titles)):

plt.subplot(2,3,i+1)

plt.imshow(images[i],"gray")

plt.title(titles[i])

plt.show()

```

#### Morphological Transformations using closing morphological operation

##### morphologyEx . Will use dilation operation first then erosion on the image

```

import cv2

import numpy as np

%matplotlib notebook

%matplotlib inline

from matplotlib import pyplot as plt

img = cv2.imread("hsv_ball.jpg",cv2.IMREAD_GRAYSCALE)

_,mask = cv2.threshold(img, 220,255,cv2.THRESH_BINARY_INV)

kernal = np.ones((5,5),np.uint8)

dilation = cv2.dilate(mask,kernal,iterations = 3)

erosion = cv2.erode(mask,kernal,iterations=1)

opening = cv2.morphologyEx(mask,cv2.MORPH_OPEN,kernal)

closing = cv2.morphologyEx(mask,cv2.MORPH_CLOSE,kernal)

titles = ['images',"mask","dilation","erosion","opening","closing"]

images = [img,mask,dilation,erosion,opening,closing]

for i in range(len(titles)):

plt.subplot(2,3,i+1)

plt.imshow(images[i],"gray")

plt.title(titles[i])

plt.xticks([])

plt.yticks([])

plt.show()

```

#### Morphological Transformations other than opening and closing morphological operation

#### MORPH_GRADIENT will give the difference between dilation and erosion

#### top_hat will give the difference between input image and opening image

```

import cv2

import numpy as np

%matplotlib notebook

%matplotlib inline

from matplotlib import pyplot as plt

img = cv2.imread("hsv_ball.jpg",cv2.IMREAD_GRAYSCALE)

_,mask = cv2.threshold(img, 220,255,cv2.THRESH_BINARY_INV)

kernal = np.ones((5,5),np.uint8)

dilation = cv2.dilate(mask,kernal,iterations = 3)

erosion = cv2.erode(mask,kernal,iterations=1)

opening = cv2.morphologyEx(mask,cv2.MORPH_OPEN,kernal)

closing = cv2.morphologyEx(mask,cv2.MORPH_CLOSE,kernal)

morphlogical_gradient = cv2.morphologyEx(mask,cv2.MORPH_GRADIENT,kernal)

top_hat = cv2.morphologyEx(mask,cv2.MORPH_TOPHAT,kernal)

titles = ['images',"mask","dilation","erosion","opening",

"closing","morphlogical_gradient","top_hat"]

images = [img,mask,dilation,erosion,opening,

closing,morphlogical_gradient,top_hat]

for i in range(len(titles)):

plt.subplot(2,4,i+1)

plt.imshow(images[i],"gray")

plt.title(titles[i])

plt.xticks([])

plt.yticks([])

plt.show()

import cv2

import numpy as np

%matplotlib notebook

%matplotlib inline

from matplotlib import pyplot as plt

img = cv2.imread("HappyFish.jpg",cv2.IMREAD_GRAYSCALE)

_,mask = cv2.threshold(img, 220,255,cv2.THRESH_BINARY_INV)

kernal = np.ones((5,5),np.uint8)

dilation = cv2.dilate(mask,kernal,iterations = 3)

erosion = cv2.erode(mask,kernal,iterations=1)

opening = cv2.morphologyEx(mask,cv2.MORPH_OPEN,kernal)

closing = cv2.morphologyEx(mask,cv2.MORPH_CLOSE,kernal)

MORPH_GRADIENT = cv2.morphologyEx(mask,cv2.MORPH_GRADIENT,kernal)

top_hat = cv2.morphologyEx(mask,cv2.MORPH_TOPHAT,kernal)

titles = ['images',"mask","dilation","erosion","opening",

"closing","MORPH_GRADIENT","top_hat"]

images = [img,mask,dilation,erosion,opening,

closing,MORPH_GRADIENT,top_hat]

for i in range(len(titles)):

plt.subplot(2,4,i+1)

plt.imshow(images[i],"gray")

plt.title(titles[i])

plt.xticks([])

plt.yticks([])

plt.show()

```

|

github_jupyter

|

Create a list of valid Hindi literals

```

a = list(set(list("ऀँंःऄअआइईउऊऋऌऍऎएऐऑऒओऔकखगघङचछजझञटठडढणतथदधनऩपफबभमयरऱलळऴवशषसहऺऻ़ऽािीुूृॄॅॆेैॉॊोौ्ॎॏॐ॒॑॓॔ॕॖॗक़ख़ग़ज़ड़ढ़फ़य़ॠॡॢॣ।॥॰ॱॲॳॴॵॶॷॸॹॺॻॼॽॾॿ-")))

len(genderListCleared),len(set(genderListCleared))

genderListCleared = list(set(genderListCleared))

mCount = 0

fCount = 0

nCount = 0

for item in genderListCleared:

if item[1] == 'm':

mCount+=1

elif item[1] == 'f':

fCount+=1

elif item[1] == 'none':

nCount+=1

mCount,fCount,nCount,len(genderListCleared)-mCount-fCount-nCount

with open('genderListCleared', 'wb') as fp:

pickle.dump(genderListCleared, fp)

with open('genderListCleared', 'rb') as fp:

genderListCleared = pickle.load(fp)

genderListNoNone= []

for item in genderListCleared:

if item[1] == 'm':

genderListNoNone.append(item)

elif item[1] == 'f':

genderListNoNone.append(item)

elif item[1] == 'any':

genderListNoNone.append(item)

with open('genderListNoNone', 'wb') as fp:

pickle.dump(genderListNoNone, fp)

with open('genderListNoNone', 'rb') as fp:

genderListNoNone = pickle.load(fp)

noneWords = list(set(genderListCleared)-set(genderListNoNone))

noneWords = set([x[0] for x in noneWords])

import lingatagger.genderlist as gndrlist

import lingatagger.tokenizer as tok

from lingatagger.tagger import *

genders2 = gndrlist.drawlist()

genderList2 = []

for i in genders2:

x = i.split("\t")

if type(numericTagger(x[0])[0]) != tuple:

count = 0

for ch in list(x[0]):

if ch not in a:

count+=1

if count == 0:

if len(x)>=3:

genderList2.append((x[0],'any'))

else:

genderList2.append((x[0],x[1]))

genderList2.sort()

genderList2Cleared = genderList2

for ind in range(0, len(genderList2Cleared)-1):

if genderList2Cleared[ind][0] == genderList2Cleared[ind+1][0]:

genderList2Cleared[ind] = genderList2Cleared[ind][0], 'any'

genderList2Cleared[ind+1] = genderList2Cleared[ind][0], 'any'

genderList2Cleared = list(set(genderList2Cleared))

mCount2 = 0

fCount2 = 0

for item in genderList2Cleared:

if item[1] == 'm':

mCount2+=1

elif item[1] == 'f':

fCount2+=1

mCount2,fCount2,len(genderList2Cleared)-mCount2-fCount2

with open('genderList2Cleared', 'wb') as fp:

pickle.dump(genderList2Cleared, fp)

with open('genderList2Cleared', 'rb') as fp:

genderList2Cleared = pickle.load(fp)

genderList2Matched = []

for item in genderList2Cleared:

if item[0] in noneWords:

continue

genderList2Matched.append(item)

len(genderList2Cleared)-len(genderList2Matched)

with open('genderList2Matched', 'wb') as fp:

pickle.dump(genderList2Matched, fp)

mergedList = []

for item in genderList2Cleared:

mergedList.append((item[0], item[1]))

for item in genderListNoNone:

mergedList.append((item[0], item[1]))

mergedList.sort()

for ind in range(0, len(mergedList)-1):

if mergedList[ind][0] == mergedList[ind+1][0]:

fgend = 'any'

if mergedList[ind][1] == 'm' or mergedList[ind+1][1] == 'm':

fgend = 'm'

elif mergedList[ind][1] == 'f' or mergedList[ind+1][1] == 'f':

if fgend == 'm':

fgend = 'any'

else:

fgend = 'f'

else:

fgend = 'any'

mergedList[ind] = mergedList[ind][0], fgend

mergedList[ind+1] = mergedList[ind][0], fgend

mergedList = list(set(mergedList))

mCount3 = 0

fCount3 = 0

for item in mergedList:

if item[1] == 'm':

mCount3+=1

elif item[1] == 'f':

fCount3+=1

mCount3,fCount3,len(mergedList)-mCount3-fCount3

with open('mergedList', 'wb') as fp:

pickle.dump(mergedList, fp)

with open('mergedList', 'rb') as fp:

mergedList = pickle.load(fp)

np.zeros(18, dtype="int")