Quilt-LLaVA Model Card

Model details

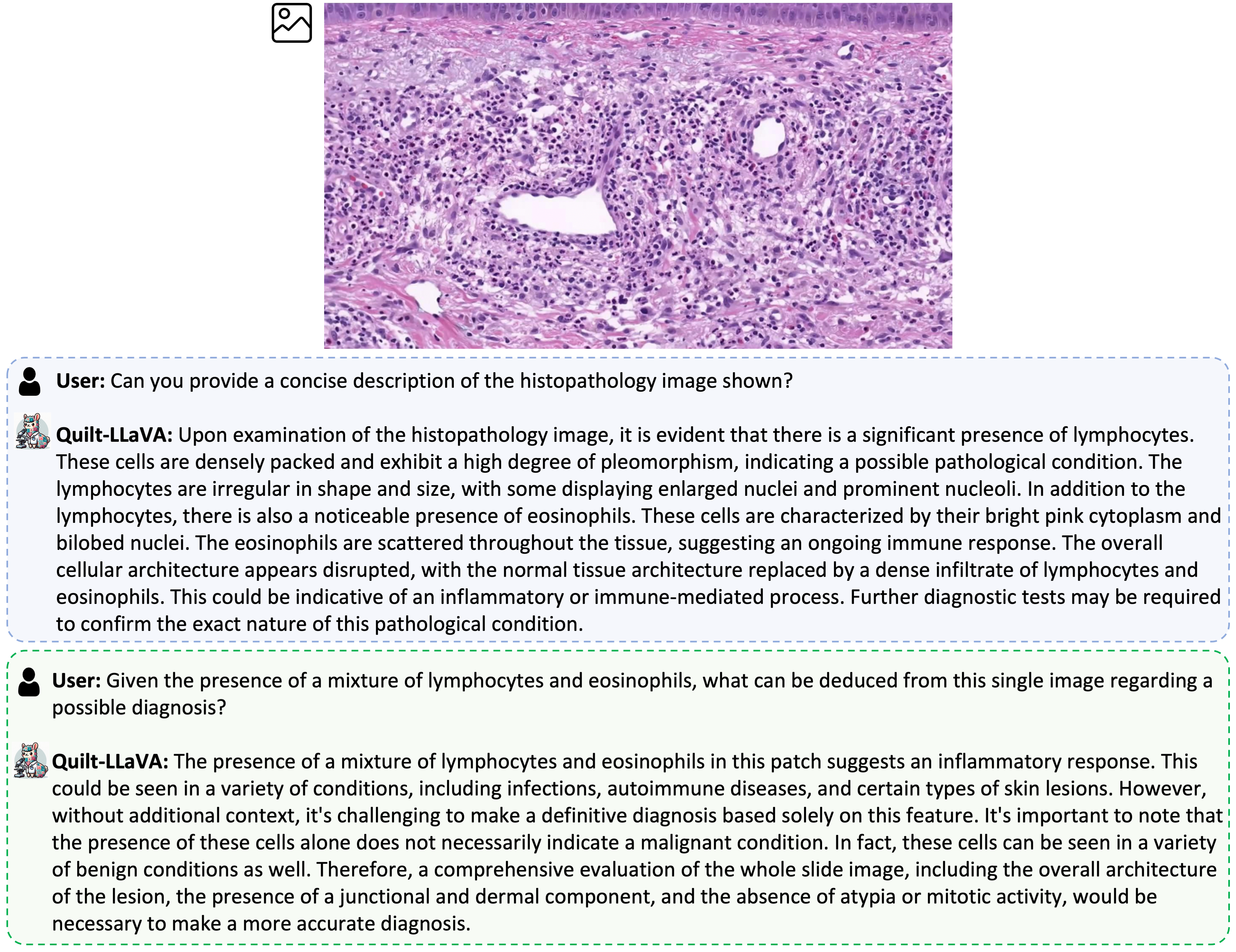

Model type: Quilt-LLaVA is an open-source chatbot trained by fine-tuning LLaMA/Vicuna on images sourced from educational histopathology videos and on GPT-generated multimodal instruction-following data. It is an auto-regressive language model based on the transformer architecture.
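As a rough illustration of what "auto-regressive" means here, the sketch below generates text one token at a time, feeding each chosen token back in as context for the next step. It is a toy example and not the Quilt-LLaVA implementation: the score table `TOY_SCORES`, the function names, and the greedy selection rule are all assumptions made for illustration. The real model scores the next token with a transformer conditioned on the full prefix and on image features.

```python
from typing import Callable, Dict, List

# Hypothetical toy "model": scores for the next token given the last token.
# A real model would condition on the whole prefix (and on the image).
TOY_SCORES: Dict[str, Dict[str, float]] = {
    "<bos>": {"the": 0.9, "tissue": 0.1},
    "the": {"tissue": 0.7, "shows": 0.3},
    "tissue": {"shows": 0.8, "<eos>": 0.2},
    "shows": {"inflammation": 0.6, "<eos>": 0.4},
    "inflammation": {"<eos>": 1.0},
}


def toy_next_token_scores(prefix: List[str]) -> Dict[str, float]:
    """Return next-token scores for a prefix (this toy uses only the last token)."""
    return TOY_SCORES[prefix[-1]]


def greedy_decode(
    next_scores: Callable[[List[str]], Dict[str, float]],
    max_new_tokens: int = 10,
) -> List[str]:
    """Generate tokens one at a time, always picking the highest-scoring one."""
    tokens = ["<bos>"]
    for _ in range(max_new_tokens):
        scores = next_scores(tokens)
        best = max(scores, key=scores.get)
        if best == "<eos>":
            break  # the model signalled the end of the sequence
        tokens.append(best)  # the new token becomes part of the next context
    return tokens[1:]  # drop the start-of-sequence marker


if __name__ == "__main__":
    print(" ".join(greedy_decode(toy_next_token_scores)))
```

Greedy selection is only one possible decoding rule; sampling-based strategies follow the same token-by-token loop and differ only in how the next token is chosen from the scores.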

Citation

@article{seyfioglu2023quilt,
  title={Quilt-LLaVA: Visual Instruction Tuning by Extracting Localized Narratives from Open-Source Histopathology Videos},
  author={Seyfioglu, Mehmet Saygin and Ikezogwo, Wisdom O and Ghezloo, Fatemeh and Krishna, Ranjay and Shapiro, Linda},
  journal={arXiv preprint arXiv:2312.04746},
  year={2023}
}

Model date: Quilt-LlaVA-v1.5-7B was trained in November 2023.

Paper or resources for more information: https://quilt-llava.github.io/

License

Llama 2 is licensed under the LLAMA 2 Community License, Copyright (c) Meta Platforms, Inc. All Rights Reserved.

Where to send questions or comments about the model: https://github.com/quilt-llava/quilt-llava.github.io/issues

Intended use

Primary intended uses: The primary use of Quilt-LLaVA is research on medical large multimodal models and chatbots.

Primary intended users: The primary intended users of these models are AI researchers.

We expect researchers to use the model primarily to better understand the robustness, generalization, capabilities, biases, and constraints of large generative vision-language models for histopathology.

Training dataset

- 723K filtered image-text pairs from QUILT-1M: https://quilt1m.github.io/

- 107K GPT-generated multimodal instruction-following samples from QUILT-Instruct: https://huggingface.co/datasets/wisdomik/QUILT-LLaVA-Instruct-107K

Evaluation dataset

A collection of four academic histopathology VQA benchmarks.
