Kosy🍵llama

Model Details

Model Developers: Kyujin Han (kyujinpy)

Model Description

A new version of Ko-platypus2, trained with the NEFTune method!

(Noisy + KO + llama = Kosy🍵llama)
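The noise step behind NEFTune can be illustrated with a small, framework-free sketch. It follows the rule described in the NEFTune paper: during training only, each embedding entry receives independent Uniform(-1, 1) noise scaled by `alpha / sqrt(seq_len * dim)`. The function name `neftune_noise`, the toy input, and `alpha=5.0` are illustrative assumptions, not the settings or code used to train this model; see the repository for the actual implementation.

```python
import math
import random


def neftune_noise(embeddings, alpha=5.0, rng=None):
    """Return a noisy copy of one sequence of token embeddings.

    embeddings: list of seq_len vectors, each a list of dim floats.
    alpha: noise strength (hypothetical default, chosen for illustration).
    """
    rng = rng or random.Random()
    seq_len, dim = len(embeddings), len(embeddings[0])
    # Scale so the expected noise magnitude does not grow with sequence length or width.
    scale = alpha / math.sqrt(seq_len * dim)
    return [[x + scale * rng.uniform(-1.0, 1.0) for x in row] for row in embeddings]


# Toy input: 4 tokens with 8-dimensional embeddings (illustrative values only).
toy = [[0.0] * 8 for _ in range(4)]
noisy = neftune_noise(toy, alpha=5.0, rng=random.Random(0))

# With all-zero inputs, the largest absolute value equals the largest perturbation.
max_shift = max(abs(v) for row in noisy for v in row)
print(f"largest perturbation: {max_shift:.4f}")
```

In a real fine-tuning run the same perturbation would be applied to the output of the model's embedding layer on each training forward pass, and switched off for evaluation and inference.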

Repo Link

GitHub KoNEFTune: Kosy🍵llama

Visit our GitHub repository to easily apply Random_noisy_embedding_fine-tuning!

Base Model

hyunseoki/ko-en-llama2-13b

Training Dataset

Version of combined dataset: kyujinpy/KOpen-platypus

I used an A100 40GB GPU on Colab for training.

Model comparisons

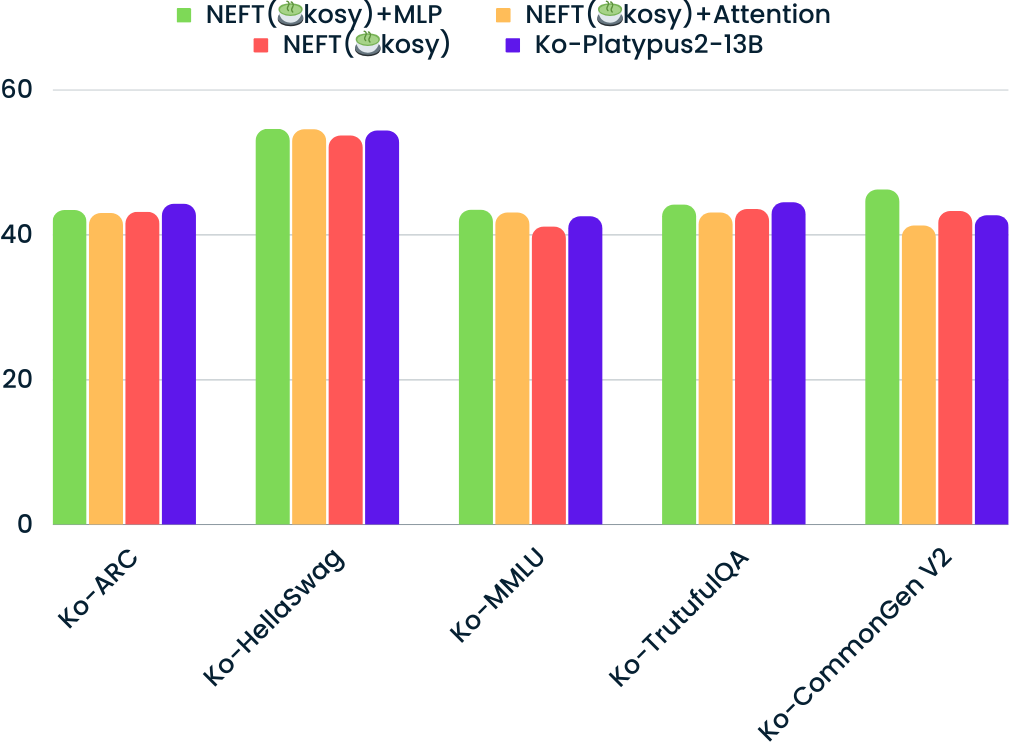

NEFT comparisons

| Model | Average | Ko-ARC | Ko-HellaSwag | Ko-MMLU | Ko-TruthfulQA | Ko-CommonGen V2 |
|---|---|---|---|---|---|---|
| Ko-Platypus2-13B | 45.60 | 44.20 | 54.31 | 42.47 | 44.41 | 42.62 |
| *NEFT(🍵kosy)+MLP-v1 | 43.64 | 43.94 | 53.88 | 42.68 | 43.46 | 34.24 |
| *NEFT(🍵kosy)+MLP-v2 | 45.45 | 44.20 | 54.56 | 42.60 | 42.68 | 42.98 |
| *NEFT(🍵kosy)+MLP-v3 | 46.31 | 43.34 | 54.54 | 43.38 | 44.11 | 46.16 |
| NEFT(🍵kosy)+Attention | 44.92 | 42.92 | 54.48 | 42.99 | 43.00 | 41.20 |
| NEFT(🍵kosy) | 45.08 | 43.09 | 53.61 | 41.06 | 43.47 | 43.21 |

*Different hyperparameters, such as learning_rate, batch_size, epoch, etc.
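To compare rows on equal footing, the Average column can be recomputed from the five benchmark scores. The sketch below assumes the average is the unweighted mean of the five tasks, which the card does not state explicitly. The scores are copied from the table above; the helper name `mean_score` is illustrative.

```python
# (reported average, [Ko-ARC, Ko-HellaSwag, Ko-MMLU, Ko-TruthfulQA, Ko-CommonGen V2])
scores = {
    "Ko-Platypus2-13B": (45.60, [44.20, 54.31, 42.47, 44.41, 42.62]),
    "NEFT(kosy)+MLP-v1": (43.64, [43.94, 53.88, 42.68, 43.46, 34.24]),
    "NEFT(kosy)+MLP-v2": (45.45, [44.20, 54.56, 42.60, 42.68, 42.98]),
    "NEFT(kosy)+MLP-v3": (46.31, [43.34, 54.54, 43.38, 44.11, 46.16]),
    "NEFT(kosy)+Attention": (44.92, [42.92, 54.48, 42.99, 43.00, 41.20]),
    "NEFT(kosy)": (45.08, [43.09, 53.61, 41.06, 43.47, 43.21]),
}


def mean_score(values):
    """Unweighted mean of the per-task scores (assumed definition of Average)."""
    return sum(values) / len(values)


# Print the reported and recomputed averages side by side.
for name, (reported, tasks) in scores.items():
    recomputed = mean_score(tasks)
    print(f"{name:22s} reported={reported:.2f} "
          f"recomputed={recomputed:.2f} diff={recomputed - reported:+.2f}")
```

Under this assumption not every row reproduces the reported value exactly, so the table is left as reported and the recomputed figures serve only as a cross-check.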

Implementation Code

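```python
### KO-Platypus
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

repo = "kyujinpy/Koisy-Platypus2-13B"

# Load the weights in half precision; device_map='auto' places them on the available devices.
OpenOrca = AutoModelForCausalLM.from_pretrained(
    repo,
    return_dict=True,
    torch_dtype=torch.float16,
    device_map='auto'
)

# Load the tokenizer that ships with the same checkpoint.
OpenOrca_tokenizer = AutoTokenizer.from_pretrained(repo)
```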
