🐋 The Second Llong Context Orca! 🐋

OpenOrca - LlongOrca - 13B - 16k

We have used our own OpenOrca dataset to fine-tune on top of LLongMA-2-13b-16k. This dataset is our attempt to reproduce the dataset generated for Microsoft Research's Orca Paper. We use OpenChat packing, trained with Axolotl.

This release is trained on a curated filtered subset of most of our GPT-4 augmented data. It is the same subset of our data as was used in our OpenOrcaxOpenChat-Preview2-13B model.

HF Leaderboard evals place this model as #1 for all 13B long context models at release time. We achieve >112% the performance of the base LLongMA2-13b-16k model we tuned on top of. As well, we preserve >98% of the performance of the OpenOrcaxOpenChat-Preview2-13B model we share datasets with, while extending the context to 16k.

We did this training as part of testing setup of our H100 cluster.

Want to visualize our full (pre-filtering) dataset? Check out our Nomic Atlas Map.

Many thanks to @EnricoShippole, @theemozilla, and @kaiokendev1 for the fine work on creating the LlongMA-2-13b-16k model this was trained on top of!

We are in-process with training more models, so keep a look out on our org for releases coming soon with exciting partners.

We will also give sneak-peak announcements on our Discord, which you can find here:

Prompt Template

We used OpenAI's Chat Markup Language (ChatML) format, with <|im_start|> and <|im_end|> tokens added to support this.

Example Prompt Exchange

<|im_start|>system

You are LlongOrca, a large language model trained by Alignment Lab AI. Write out your reasoning step-by-step to be sure you get the right answers!

<|im_end|>

<|im_start|>user

How are you<|im_end|>

<|im_start|>assistant

I am doing well!<|im_end|>

<|im_start|>user

How are you now?<|im_end|>

Evaluation

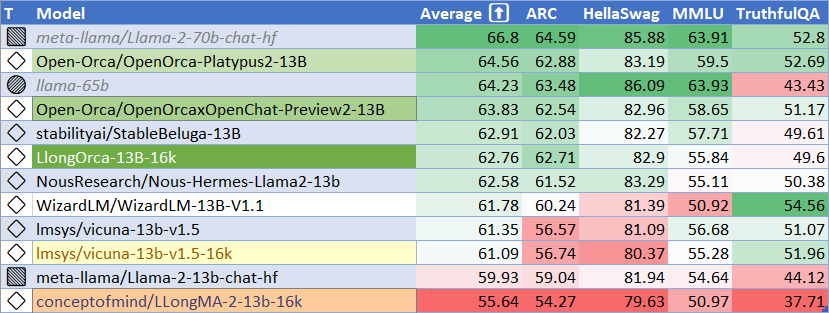

We have evaluated using the methodology and tools for the HuggingFace Leaderboard, and find that we have significantly improved upon the base long context model. We reach >112% of LLongMA2-13B-16k performance.

HuggingFaceH4 Open LLM Leaderboard Performance

We have run our own tests using parameters matching the HuggingFaceH4 Open LLM Leaderboard evals.

We preserve >98% of OpenOrcaxOpenChat-Preview2-13B performance and are #1 on the leaderboard for long context 13B models at release time. We have >103% performance of the next 16k model (vicuna-13b-v1.5-16k).

As well, we expect the context extension techniques from LLongMA to be more robust than other 16k context models available.

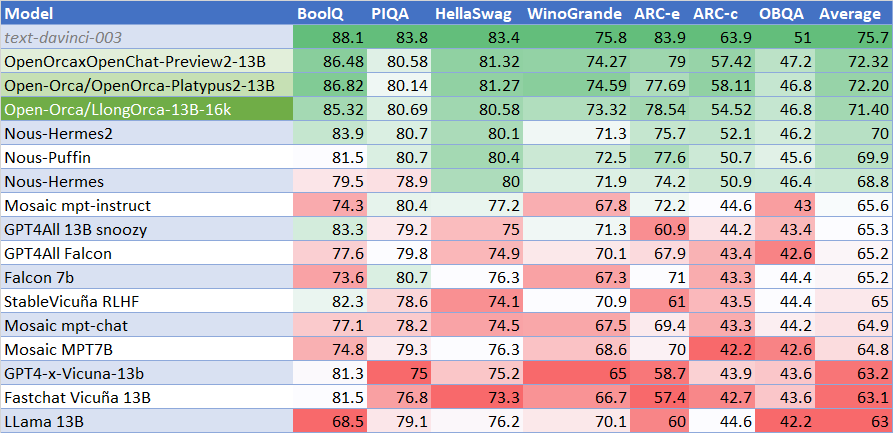

GPT4ALL Leaderboard Performance

We find we score higher than all non-OpenOrca models on the GPT4ALL leaderboard, while preserving ~98.7% of our OpenOrcaxOpenChat-Preview2-13B performance.

Dataset

We used a curated, filtered selection of most of the GPT-4 augmented data from our OpenOrca dataset, which aims to reproduce the Orca Research Paper dataset. Further details of our curation practices will be forthcoming with our full model releases.

Training

We trained with 8x H100 GPUs for 10 hours, completing 4 epochs of full fine tuning on our dataset in one training run. Commodity cost was ~$300.

Citation

@software{dale2023llongorca13b,

title = {LlongOrca13B: Llama2-13B Model Instruct-tuned for Long Context on Filtered OpenOrcaV1 GPT-4 Dataset},

author = {Alpin Dale and Wing Lian and Bleys Goodson and Guan Wang and Eugene Pentland and Austin Cook and Chanvichet Vong and "Teknium"},

year = {2023},

publisher = {HuggingFace},

journal = {HuggingFace repository},

howpublished = {\url{https://https://huggingface.co/Open-Orca/LlongOrca-7B-16k},

}

@software{openchat,

title = {{OpenChat: Advancing Open-source Language Models with Imperfect Data}},

author = {Wang, Guan and Cheng, Sijie and Yu, Qiying and Liu, Changling},

doi = {10.5281/zenodo.8105775},

url = {https://github.com/imoneoi/openchat},

version = {pre-release},

year = {2023},

month = {7},

}

@misc{mukherjee2023orca,

title={Orca: Progressive Learning from Complex Explanation Traces of GPT-4},

author={Subhabrata Mukherjee and Arindam Mitra and Ganesh Jawahar and Sahaj Agarwal and Hamid Palangi and Ahmed Awadallah},

year={2023},

eprint={2306.02707},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@misc{longpre2023flan,

title={The Flan Collection: Designing Data and Methods for Effective Instruction Tuning},

author={Shayne Longpre and Le Hou and Tu Vu and Albert Webson and Hyung Won Chung and Yi Tay and Denny Zhou and Quoc V. Le and Barret Zoph and Jason Wei and Adam Roberts},

year={2023},

eprint={2301.13688},

archivePrefix={arXiv},

primaryClass={cs.AI}

}

@misc{touvron2023llama,

title={Llama 2: Open Foundation and Fine-Tuned Chat Models},

author={Hugo Touvron and Louis Martin and Kevin Stone and Peter Albert and Amjad Almahairi and Yasmine Babaei and Nikolay Bashlykov and Soumya Batra and Prajjwal Bhargava and Shruti Bhosale and Dan Bikel and Lukas Blecher and Cristian Canton Ferrer and Moya Chen and Guillem Cucurull and David Esiobu and Jude Fernandes and Jeremy Fu and Wenyin Fu and Brian Fuller and Cynthia Gao and Vedanuj Goswami and Naman Goyal and Anthony Hartshorn and Saghar Hosseini and Rui Hou and Hakan Inan and Marcin Kardas and Viktor Kerkez and Madian Khabsa and Isabel Kloumann and Artem Korenev and Punit Singh Koura and Marie-Anne Lachaux and Thibaut Lavril and Jenya Lee and Diana Liskovich and Yinghai Lu and Yuning Mao and Xavier Martinet and Todor Mihaylov and Pushkar Mishra and Igor Molybog and Yixin Nie and Andrew Poulton and Jeremy Reizenstein and Rashi Rungta and Kalyan Saladi and Alan Schelten and Ruan Silva and Eric Michael Smith and Ranjan Subramanian and Xiaoqing Ellen Tan and Binh Tang and Ross Taylor and Adina Williams and Jian Xiang Kuan and Puxin Xu and Zheng Yan and Iliyan Zarov and Yuchen Zhang and Angela Fan and Melanie Kambadur and Sharan Narang and Aurelien Rodriguez and Robert Stojnic and Sergey Edunov and Thomas Scialom},

year={2023},

eprint={2307.09288},

archivePrefix={arXiv},

}

- Downloads last month

- 1,131