Ziya2-13B-Base

- Main Page: Fengshenbang

- Github: Fengshenbang-LM

Ziya series models

Brief Introduction

Ziya2-13B-Base is a large-scale pre-trained model with 13 billion parameters, based on Llama2. We optimized the LLaMA tokenizer for Chinese and continued pre-training from the Llama2-13B model on 650 billion tokens of Chinese and English data, which further improved the model's Chinese generation and understanding.

Model Taxonomy

| Demand | Task | Series | Model | Parameter | Extra |
|---|---|---|---|---|---|
| General | AGI model | Ziya | LLaMA2 | 13B | English & Chinese |

Model Information

Continual Pretraining

Meta released the Llama2 series of large models in July 2023. Llama2 was pre-trained on 2 trillion tokens, compared with 1.4 trillion tokens for LLaMA1, and clearly outperforms LLaMA1 across benchmarks.

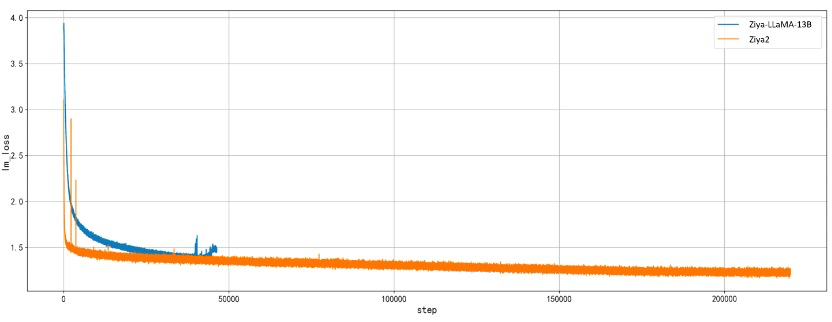

Ziya2-13B-Base retains the efficient Chinese encoding and decoding scheme of Ziya-LLaMA-13B, but uses a better initialization algorithm that lowers the initial training loss. We also optimized the Fengshen-PT continued-training framework. For efficiency, we integrated FlashAttention2, the Apex RMS norm, and other techniques, giving a 38% increase in training speed over Ziya-LLaMA-13B (163 TFLOPS per GPU). For stability, we trained in BF16 and fixed bugs in the underlying distributed framework so that training could run continuously and stably, which resolved the late-stage instability encountered when training Ziya-LLaMA-13B. We live-streamed the training on July 25 and went on to complete continued training on all of the data. Model performance still appears to be trending upward, and we plan to keep optimizing Ziya2-13B-Base.

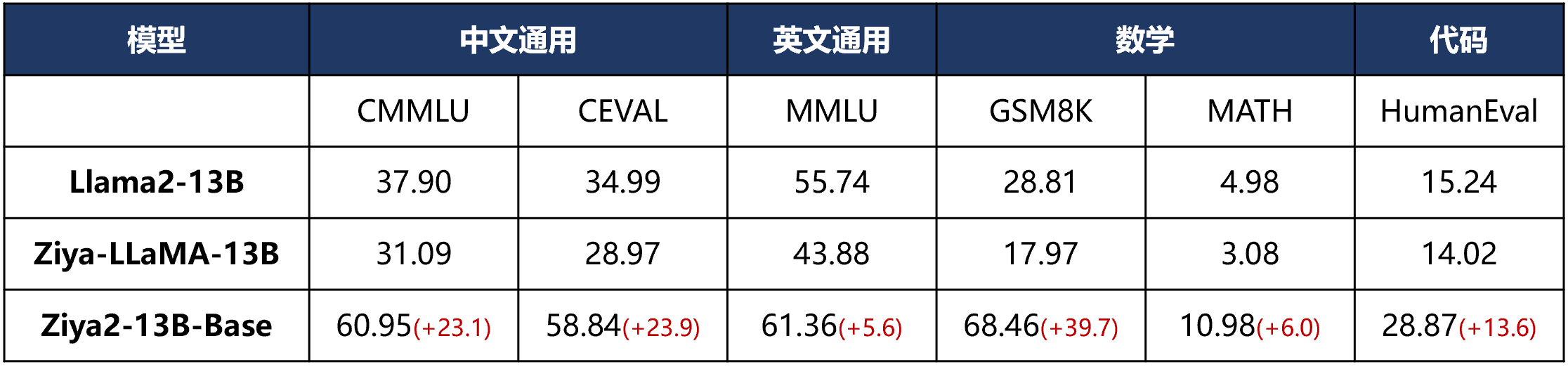
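The text above does not say why BF16 was chosen for stability. One commonly cited property is that BF16 keeps the 8-bit exponent of FP32, so large values that overflow FP16 remain representable, at the cost of fewer mantissa bits. The sketch below illustrates only that property, using the Python standard library. The helper names `to_bf16_truncated` and `fits_in_fp16` are hypothetical, and BF16 is emulated by truncating a float32 encoding rather than by the round-to-nearest conversion that real hardware typically uses.

```python
import struct


def to_bf16_truncated(x: float) -> float:
    """Emulate BF16 by keeping the top 16 bits of the float32 encoding.

    This truncates the mantissa (no rounding), which is an assumption
    made to keep the sketch short.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


def fits_in_fp16(x: float) -> bool:
    """Return True if x can be packed as an IEEE 754 half-precision float."""
    try:
        struct.pack("<e", x)
        return True
    except OverflowError:
        return False


if __name__ == "__main__":
    for value in (1.001, 65504.0, 70000.0):
        print(value, fits_in_fp16(value), to_bf16_truncated(value))
```

In this sketch, 70000.0 is too large for FP16 but survives the BF16 emulation as 69632.0, while 1.001 collapses to 1.0 in BF16 because only 7 mantissa bits are kept.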

Performance

Building on Llama2-13B, Ziya2-13B-Base was further trained on about 650 billion tokens of self-built, high-quality Chinese and English data. On downstream understanding tasks in Chinese, English, mathematics, and code, it improves clearly over both Llama2-13B and Ziya-LLaMA-13B.

Usage

Load the model and generate a continuation:

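```python
import torch
from transformers import AutoTokenizer, LlamaForCausalLM

# Hugging Face Hub ID of the checkpoint, shared by the model and the tokenizer.
ckpt = "IDEA-CCNL/Ziya2-13B-Base"
# Prompt: "Question: Who are China's Three Sovereigns and Five Emperors? Answer:"
query = "问题:我国的三皇五帝分别指的是谁?答案:"

# Load the weights in FP16 and place them automatically on the available devices.
model = LlamaForCausalLM.from_pretrained(ckpt, torch_dtype=torch.float16, device_map="auto").eval()
tokenizer = AutoTokenizer.from_pretrained(ckpt)

input_ids = tokenizer(query, return_tensors="pt").input_ids.to("cuda:0")
# Sample with nucleus (top-p) sampling, generating at most 512 new tokens.
generate_ids = model.generate(
    input_ids,
    max_new_tokens=512,
    do_sample=True,
    top_p=0.9,
)
output = tokenizer.batch_decode(generate_ids)[0]
print(output)
```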

The above is a simple text-continuation example. To explore other prompts and ways of using the model, download it and experiment.
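As one possible starting point for trying other prompts, the sketch below wraps a question in the same `问题:…答案:` pattern as the example query and trims the echoed prompt from the decoded text. Both helpers, `build_prompt` and `strip_prompt`, are hypothetical names introduced here for illustration. They are not part of the model or of `transformers`, and the assumption that the decoded output contains the prompt verbatim may not hold for every tokenizer setting.

```python
def build_prompt(question: str) -> str:
    """Wrap a question in the 问题:/答案: pattern used by the example query."""
    return f"问题:{question}答案:"


def strip_prompt(output: str, prompt: str) -> str:
    """Return only the continuation.

    Assumes the decoded output echoes the prompt verbatim (possibly after
    special tokens such as a beginning-of-sequence marker). If the prompt
    is not found, the output is returned unchanged apart from trimming.
    """
    index = output.find(prompt)
    if index == -1:
        return output.strip()
    return output[index + len(prompt):].strip()
```

With the loading code from the example, `query = build_prompt("我国的三皇五帝分别指的是谁?")` reproduces the original prompt, and `strip_prompt(output, query)` leaves just the generated answer.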

Citation

If you use our model in your work, please cite our paper:

```
@article{Ziya2,
  author = {Ruyi Gan and Ziwei Wu and Renliang Sun and Junyu Lu and Xiaojun Wu and Dixiang Zhang and Kunhao Pan and Ping Yang and Qi Yang and Jiaxing Zhang and Yan Song},
  title = {Ziya2: Data-centric Learning is All LLMs Need},
  year = {2023}
}
```

You can also cite the arXiv version:

```
@misc{gan2023ziya2,
  title={Ziya2: Data-centric Learning is All LLMs Need},
  author={Ruyi Gan and Ziwei Wu and Renliang Sun and Junyu Lu and Xiaojun Wu and Dixiang Zhang and Kunhao Pan and Ping Yang and Qi Yang and Jiaxing Zhang and Yan Song},
  year={2023},
  eprint={2311.03301},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
```
