Note: This repo contains the base model weights with the LoRA adapter already merged in. For the LoRA adapters alone, please see the qblocks/llama2_70B_dolly15k repo.
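For context on what "merged" means: in standard LoRA, training learns two low-rank matrices B (d x r) and A (r x k) next to a frozen base weight W0, and merging folds them into one matrix, W = W0 + (alpha / r) * B A. The card does not state the rank r or scaling alpha used for this finetune, so the sketch below uses toy 2x2 values. Hugging Face peft exposes this operation as `merge_and_unload()`, but the card does not say which tool produced these merged weights.

```python
def matmul(a, b):
    """Plain-Python matrix product of nested lists (rows x cols)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def merge_lora(w0, lora_b, lora_a, alpha, rank):
    """Return W0 + (alpha / rank) * B @ A, the standard LoRA weight merge."""
    delta = matmul(lora_b, lora_a)  # low-rank update, same shape as w0
    scale = alpha / rank
    return [
        [w0[i][j] + scale * delta[i][j] for j in range(len(w0[0]))]
        for i in range(len(w0))
    ]


# Toy values only (hypothetical): rank-1 adapter on a 2x2 identity weight.
merged = merge_lora([[1, 0], [0, 1]], [[1], [2]], [[3, 4]], alpha=2, rank=1)
print(merged)  # [[7.0, 8.0], [12.0, 17.0]]
```

Because the merged matrix produces the same output as the base weight plus the scaled adapter path, a merged repo like this one can be loaded without any adapter at inference time.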

Finetuning Overview:

Model Used: meta-llama/Llama-2-70b-hf

Dataset: Databricks-dolly-15k

Dataset Insights:

The Databricks-dolly-15k dataset is a collection of over 15,000 records written by many Databricks employees. It was designed to:

- Elevate the interactive capabilities of ChatGPT-like systems.

- Provide prompt/response pairs spanning eight instruction categories: the seven categories from the InstructGPT paper plus an exploratory open-ended category.

- Contain genuine, original content, written largely without online sources (Wikipedia was allowed as a reference for particular categories) and without the use of generative AI.

Contributors could also rephrase and answer questions posed by their peers, with an emphasis on accuracy and clarity. Some subsets also include reference texts taken from Wikipedia, which may contain bracketed citation numbers such as [42].
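If those bracketed citation numbers are unwanted in training or evaluation text, a regular expression can strip them. This is a minimal sketch, assuming the markers are always digits inside square brackets; the card does not say whether this finetune removed them.

```python
import re

# Matches a Wikipedia-style citation marker such as "[42]", plus any whitespace before it.
CITATION_RE = re.compile(r"\s*\[\d+\]")


def strip_citations(text: str) -> str:
    """Remove bracketed numeric citation markers from a reference text."""
    return CITATION_RE.sub("", text)


print(strip_citations("Paris is the capital [42] of France.[7]"))
# Paris is the capital of France.
```

Consuming the preceding whitespace keeps "capital [42] of" from collapsing into a double space.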

Finetuning Details:

Using MonsterAPI's user-friendly LLM finetuner, the finetuning:

- Was cost-effective, as the figures below show.

- Took a total of 17.5 hours for 3 epochs on a single A100 80GB GPU.

- Broke down to about 5.8 hours and $19.25 per epoch, for a combined cost of $57.75 across all epochs.
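The per-epoch and total figures are consistent with each other. A quick check, assuming a flat per-epoch price (the card does not describe MonsterAPI's billing model): 17.5 h / 3 epochs is about 5.83 h, which the card rounds to 5.8, and 3 x $19.25 = $57.75.

```python
# Figures quoted on this card.
EPOCHS = 3
TOTAL_HOURS = 17.5
COST_PER_EPOCH_USD = 19.25


def per_epoch_hours(total_hours: float, epochs: int) -> float:
    """Average wall-clock hours per epoch."""
    return total_hours / epochs


def total_cost(cost_per_epoch: float, epochs: int) -> float:
    """Total cost, assuming every epoch is billed at the same flat price."""
    return cost_per_epoch * epochs


print(f"{per_epoch_hours(TOTAL_HOURS, EPOCHS):.1f} h per epoch")  # 5.8 h per epoch
print(f"${total_cost(COST_PER_EPOCH_USD, EPOCHS):.2f} total")      # $57.75 total
```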

Hyperparameters & Additional Details:

- Epochs: 3

- Cost Per Epoch: $19.25

- Total Finetuning Cost: $57.75

- Model Path: meta-llama/Llama-2-70b-hf

- Learning Rate: 0.0002

- Data Split: Training 90% / Validation 10%

- Gradient Accumulation Steps: 4
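Two of these settings are easy to misread, so here is a sketch of what they mean mechanically. It assumes a seeded random 90/10 split and plain gradient accumulation over equal-sized micro-batches; the dict layout, `train_val_split`, and `accumulated_step` are hypothetical illustrations, not MonsterAPI's config format or code, and the toy one-parameter model stands in for the real network.

```python
import random

# Values copied from the hyperparameter list above; the dict layout is illustrative only.
HPARAMS = {
    "model_path": "meta-llama/Llama-2-70b-hf",
    "epochs": 3,
    "learning_rate": 2e-4,
    "val_percent": 10,  # 90% train / 10% validation
    "gradient_accumulation_steps": 4,
}


def train_val_split(records, val_percent, seed=0):
    """Shuffle a copy of `records` and hold out `val_percent` percent for validation."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_val = len(shuffled) * val_percent // 100  # integer math avoids float rounding
    return shuffled[n_val:], shuffled[:n_val]


def accumulated_step(w, batch, lr, accumulation_steps):
    """One SGD step for the toy model y ~ w * x with MSE loss.

    The batch is cut into `accumulation_steps` equal micro-batches; each
    micro-batch gradient is scaled by 1 / accumulation_steps and summed, so the
    single weight update matches a full-batch step over the same examples.
    """
    if len(batch) % accumulation_steps:
        raise ValueError("batch size must be divisible by accumulation_steps")
    micro = len(batch) // accumulation_steps
    grad = 0.0
    for i in range(accumulation_steps):
        chunk = batch[i * micro:(i + 1) * micro]
        g = sum(2 * (w * x - y) * x for x, y in chunk) / len(chunk)
        grad += g / accumulation_steps
    return w - lr * grad  # only one parameter update per accumulation cycle


# 15,000 placeholder records (hypothetical count standing in for "over 15,000").
train, val = train_val_split(range(15_000), HPARAMS["val_percent"])
print(len(train), len(val))  # 13500 1500
```

Accumulating over 4 steps lets a memory-limited GPU take optimizer steps on an effective batch 4 times larger than what fits in one forward/backward pass.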

Prompt Structure:

### INSTRUCTION:

[instruction]

[context]

### RESPONSE:

[response]
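A helper for filling this template might look like the sketch below. It assumes blank lines separate the sections and that the context block is simply omitted when a record has none; the card does not state the exact whitespace used in training. The keys `instruction`, `context`, and `response` are the field names used by databricks-dolly-15k, while `build_prompt` and `format_record` are hypothetical helpers.

```python
def build_prompt(instruction: str, context: str = "", response: str = "") -> str:
    """Fill the INSTRUCTION/RESPONSE template shown above.

    Assumption: sections are separated by blank lines and an empty context is
    dropped. Leave `response` empty at inference so the model continues after
    the "### RESPONSE:" header.
    """
    parts = ["### INSTRUCTION:", instruction]
    if context:
        parts += ["", context]  # blank line, then the optional context block
    parts += ["", "### RESPONSE:", response]
    return "\n".join(parts)


def format_record(record: dict) -> str:
    """Format one dolly-15k style record (instruction/context/response keys)."""
    return build_prompt(
        record["instruction"], record.get("context", ""), record.get("response", "")
    )


print(build_prompt("Name three primary colors."))
```

For generation, the returned string (with an empty response) would be tokenized and passed to the model, which then writes the answer after the "### RESPONSE:" line.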

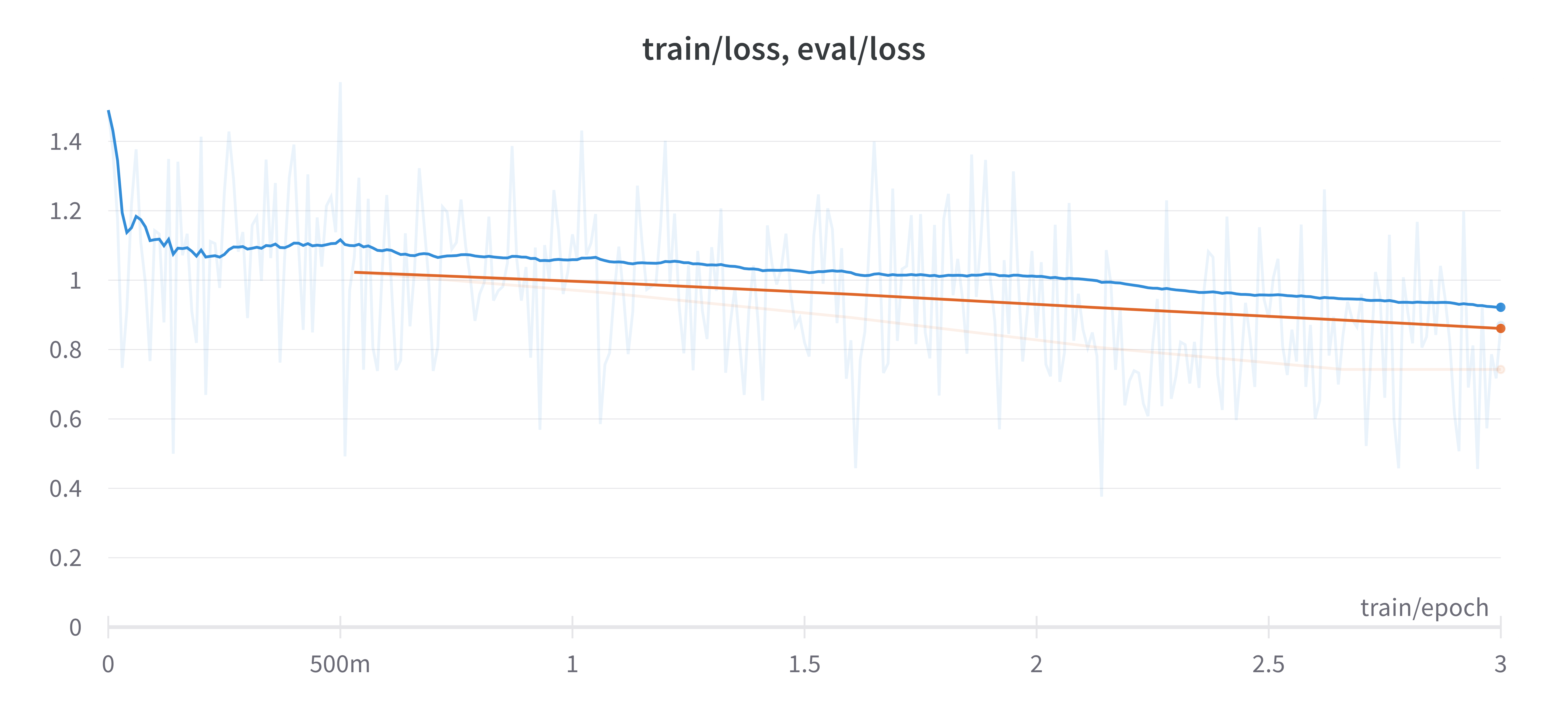

Loss metrics: training loss (blue) and validation loss (orange).

license: apache-2.0


Repository: monsterapi/llama2_70B_dolly15k_mergedweights

Base model: meta-llama/Llama-2-70b-hf