Model Card for Diffusion Policy / PushT

Diffusion Policy (as per Diffusion Policy: Visuomotor Policy Learning via Action Diffusion) trained for the PushT environment from gym-pusht.

How to Get Started with the Model

See the LeRobot library (particularly the evaluation script) for instructions on how to load and evaluate this model.

Training Details


Trained with LeRobot@d747195.

The model was trained using LeRobot's training script with the pusht dataset.

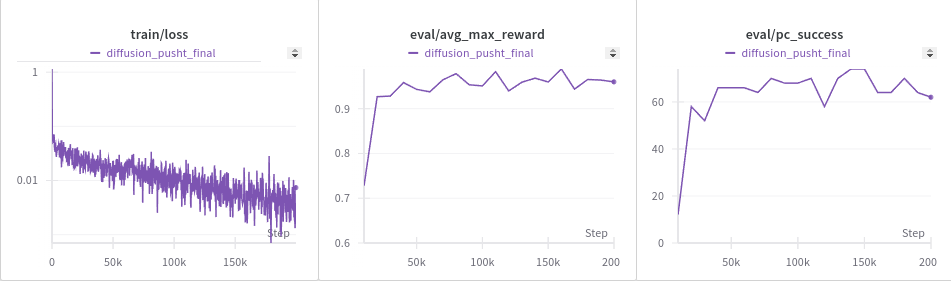

The charts above show the loss, evaluation score, and evaluation success rate (over 50 rollouts) during training.

Training took about 7 hours on an NVIDIA RTX 3090.

Evaluation

The model was evaluated on the PushT environment from gym-pusht and compared to a similar model trained with the original Diffusion Policy code. There are two evaluation metrics on a per-episode basis:

- Maximum overlap with target (seen as `eval/avg_max_reward` in the charts above). This ranges in [0, 1].
- Success: whether or not the maximum overlap is at least 95%.
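The two per-episode metrics can be sketched as follows. This is a minimal illustration, not LeRobot's evaluation code: it assumes each rollout is available as a list of per-step rewards and that the reward equals the overlap ratio with the target. The function names `episode_metrics` and `summarize` are hypothetical.

```python
def episode_metrics(step_rewards, success_threshold=0.95):
    """Return (max_overlap, success) for one episode.

    Assumes step_rewards holds the per-step overlap ratio in [0, 1].
    """
    max_overlap = max(step_rewards)
    return max_overlap, max_overlap >= success_threshold


def summarize(episodes):
    """Average the per-episode metrics over a batch of rollouts."""
    per_episode = [episode_metrics(rewards) for rewards in episodes]
    avg_max_overlap = sum(m for m, _ in per_episode) / len(per_episode)
    success_rate = sum(s for _, s in per_episode) / len(per_episode)
    return avg_max_overlap, success_rate


# Two made-up rollouts: the first reaches 97% overlap, the second only 80%.
rollouts = [
    [0.10, 0.40, 0.97],
    [0.05, 0.60, 0.80],
]
avg_max_overlap, success_rate = summarize(rollouts)
```

Note that an episode can have a high maximum overlap and still count as a failure, which is why the average overlap can sit well above the success rate.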

Here are the metrics for 500 episodes' worth of evaluation. For the success rate we add an extra row with confidence bounds. This assumes a uniform prior over success probability and computes the beta posterior, then calculates the mean and lower/upper confidence bounds (with a 68.2% confidence interval centered on the mean).

| | Ours | Theirs |
|---|---|---|
| Average max. overlap ratio | 0.959 | 0.957 |
| Success rate for 500 episodes (%) | 63.8 | 64.2 |
| Beta distribution lower/mean/upper (%) | 61.6 / 63.7 / 65.9 | 62.0 / 64.1 / 66.3 |
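The Beta-posterior row can be approximated with the standard library alone. The sketch below is one reading of "centered on the mean", not necessarily the script used to produce the table: with a uniform Beta(1, 1) prior, `s` successes in `n` episodes give a Beta(s + 1, n - s + 1) posterior, and the code searches for the symmetric interval around the posterior mean that holds 68.2% of the probability mass. The function names and the midpoint-rule integration are assumptions made for illustration.

```python
import math


def beta_log_pdf(x, a, b):
    """Log density of Beta(a, b) at x, for 0 < x < 1."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return log_norm + (a - 1) * math.log(x) + (b - 1) * math.log(1 - x)


def beta_mass(lo, hi, a, b, steps=4000):
    """Midpoint-rule estimate of the Beta(a, b) mass on [lo, hi]."""
    lo, hi = max(lo, 0.0), min(hi, 1.0)
    width = (hi - lo) / steps
    total = sum(
        math.exp(beta_log_pdf(lo + (i + 0.5) * width, a, b))
        for i in range(steps)
    )
    return total * width


def success_rate_bounds(successes, episodes, level=0.682):
    """Return (lower, mean, upper) under a uniform prior."""
    a = successes + 1
    b = episodes - successes + 1
    mean = a / (a + b)
    # Bisect on the half-width h of the interval [mean - h, mean + h].
    lo_h, hi_h = 0.0, max(mean, 1.0 - mean)
    for _ in range(50):
        h = 0.5 * (lo_h + hi_h)
        if beta_mass(mean - h, mean + h, a, b) < level:
            lo_h = h
        else:
            hi_h = h
    h = 0.5 * (lo_h + hi_h)
    return max(mean - h, 0.0), mean, min(mean + h, 1.0)


# 63.8% of 500 episodes corresponds to 319 successes.
lower, mean, upper = success_rate_bounds(319, 500)
```

For 319 successes the posterior mean is 320 / 502, about 63.7%, which matches the "Ours" column; the bounds from this sketch should land close to the tabulated values. Because the posterior mean is (s + 1) / (n + 2) rather than s / n, it is pulled slightly toward 50% relative to the raw success rate.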