This model is a fork of distilbert-base-uncased-finetuned-sst-2-english, quantized with the 🤗 Optimum library using static quantization.
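
With static quantization, the INT8 scales are computed once, offline, from a calibration set and then reused unchanged at inference. Dynamic quantization would instead derive activation scales at runtime. The NumPy sketch below illustrates a min/max-style calibration step. It assumes symmetric per-tensor quantization with a zero point of 0, and it uses random arrays as stand-ins for real activations. `calibrate_scale` is a hypothetical helper for illustration, not part of the Optimum API.

```python
import numpy as np


def calibrate_scale(calibration_batches):
    """Hypothetical helper: derive one fixed symmetric INT8 scale from calibration data.

    Takes the largest absolute value seen across all batches (min/max-style
    calibration) and maps it to 127, assuming a zero point of 0.
    """
    amax = max(float(np.abs(batch).max()) for batch in calibration_batches)
    return amax / 127.0


# Random stand-in for activations collected on calibration samples (assumption).
rng = np.random.default_rng(0)
batches = [rng.normal(size=(4, 8)).astype(np.float32) for _ in range(10)]
scale = calibrate_scale(batches)

# At inference, the same precomputed scale is applied to every new input;
# values outside the calibrated range saturate at the INT8 limits.
x = np.array([0.0, 1.0, -2.5, 100.0], dtype=np.float32)
q = np.clip(np.round(x / scale), -128, 127).astype(np.int8)
print(scale, q)
```

Because the scale is frozen after calibration, the calibration samples should resemble real inputs. A few extreme outliers would stretch `amax` and waste INT8 resolution on values that rarely occur.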

This model can be used as follows:

```python
import onnxruntime
from transformers import AutoTokenizer
from optimum.onnxruntime import ORTModelForSequenceClassification

# Disable ONNX Runtime graph optimizations so the quantized graph is handed
# to TensorRT without being rewritten by ONNX Runtime first.
session_options = onnxruntime.SessionOptions()
session_options.graph_optimization_level = onnxruntime.GraphOptimizationLevel.ORT_DISABLE_ALL

tokenizer = AutoTokenizer.from_pretrained("fxmarty/distilbert-base-uncased-sst2-onnx-int8-for-tensorrt")

# Run the model through the TensorRT execution provider with INT8 enabled.
ort_model = ORTModelForSequenceClassification.from_pretrained(
    "fxmarty/distilbert-base-uncased-sst2-onnx-int8-for-tensorrt",
    provider="TensorrtExecutionProvider",
    session_options=session_options,
    provider_options={"trt_int8_enable": True},
)

inp = tokenizer("TensorRT is a bit painful to use, but at the end of day it runs smoothly and blazingly fast!", return_tensors="np")

res = ort_model(**inp)

print(res)
# Map the highest-scoring logit to its label name.
print(ort_model.config.id2label[int(res.logits[0].argmax())])

# SequenceClassifierOutput(loss=None, logits=array([[-0.545066 , 0.5609764]], dtype=float32), hidden_states=None, attentions=None)
# POSITIVE
```

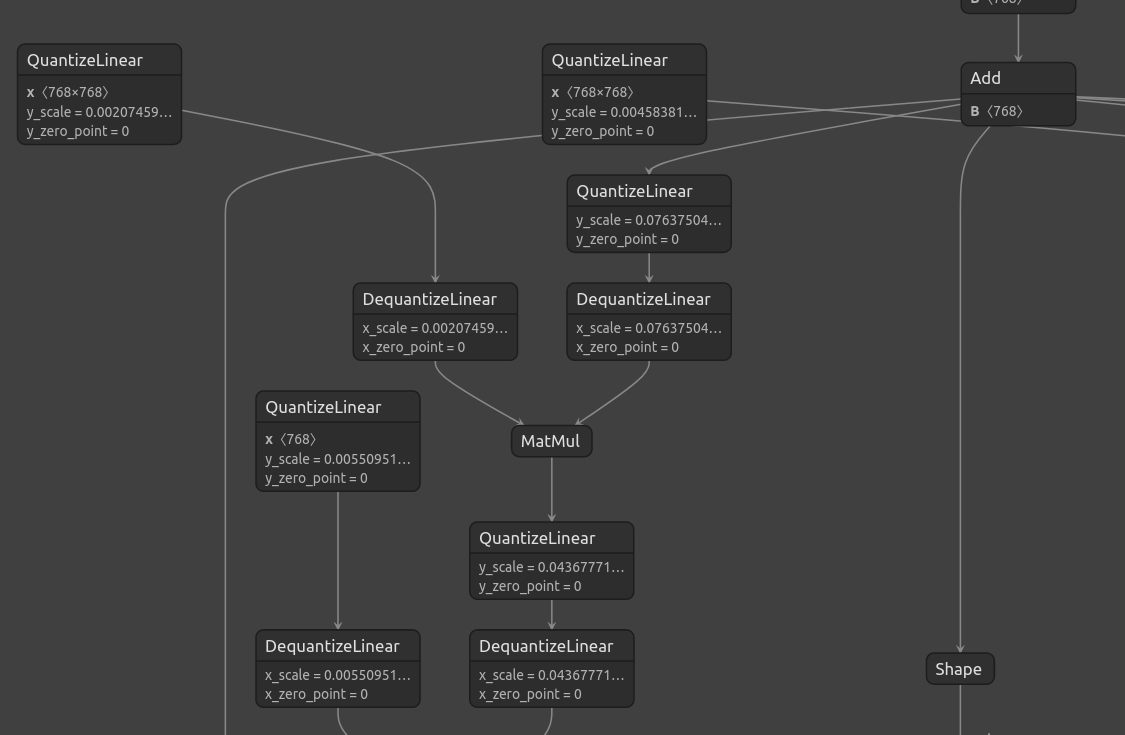

Inspecting the graph (for example here with netron), we see that it contains Quantize and Dequantize nodes, that will be interpreted by TensorRT to run in INT8:
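
The Quantize and Dequantize nodes encode simple affine arithmetic. The following minimal NumPy sketch shows what they compute. It follows the ONNX `QuantizeLinear`/`DequantizeLinear` definitions for INT8 output, assuming a per-tensor scale and a zero point of 0. The scale value is made up for illustration. TensorRT does not necessarily execute these nodes one by one; it uses them to decide which operations can run in INT8.

```python
import numpy as np


def quantize_linear(x, scale, zero_point=0):
    """Mimics ONNX QuantizeLinear for int8 output: round half to even, add zero point, saturate."""
    q = np.round(x / scale) + zero_point  # np.round rounds half to even, like ONNX
    return np.clip(q, -128, 127).astype(np.int8)


def dequantize_linear(q, scale, zero_point=0):
    """Mimics ONNX DequantizeLinear: (q - zero_point) * scale."""
    return (q.astype(np.float32) - zero_point) * scale


x = np.array([-1.0, -0.3, 0.0, 0.42, 1.0], dtype=np.float32)
scale = np.float32(1.0 / 127)  # illustrative scale mapping [-1, 1] onto [-127, 127]

q = quantize_linear(x, scale)
x_hat = dequantize_linear(q, scale)
max_err = float(np.abs(x - x_hat).max())
print(q, x_hat, max_err)
```

For inputs inside the calibrated range, the round-trip error is at most half a quantization step (`scale / 2`). This is the precision TensorRT trades for faster INT8 kernels.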
