Hub documentation

Using ESPnet at Hugging Face

Using ESPnet at Hugging Face

espnet is an end-to-end toolkit for speech processing, including automatic speech recognition, text to speech, speech enhancement, dirarization and other tasks.

Exploring ESPnet in the Hub

You can find hundreds of espnet models by filtering at the left of the models page.

All models on the Hub come up with useful features:

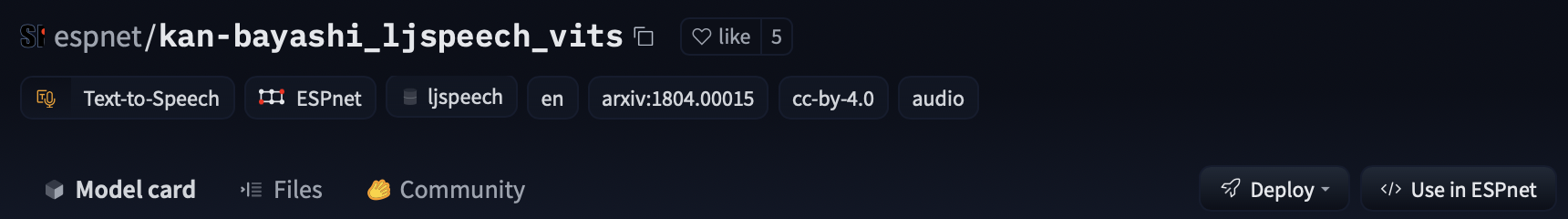

- An automatically generated model card with a description, a training configuration, licenses and more.

- Metadata tags that help for discoverability and contain information such as license, language and datasets.

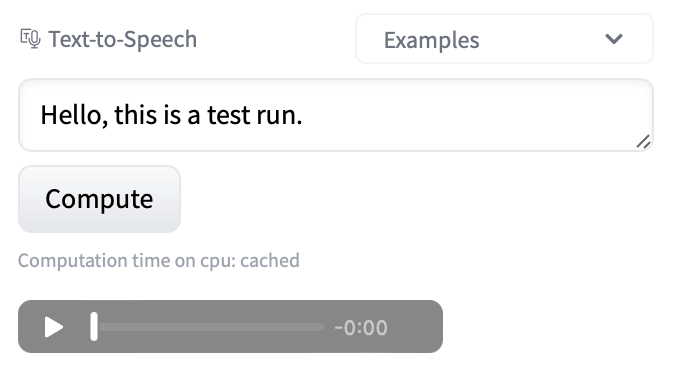

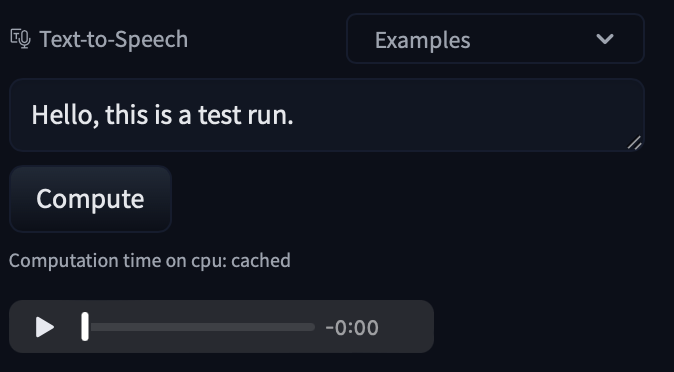

- An interactive widget you can use to play out with the model directly in the browser.

- An Inference API that allows to make inference requests.

Using existing models

For a full guide on loading pre-trained models, we recommend checking out the official guide).

If you’re interested in doing inference, different classes for different tasks have a from_pretrained method that allows loading models from the Hub. For example:

Speech2Textfor Automatic Speech Recognition.Text2Speechfor Text to Speech.SeparateSpeechfor Audio Source Separation.

Here is an inference example:

import soundfile

from espnet2.bin.tts_inference import Text2Speech

text2speech = Text2Speech.from_pretrained("model_name")

speech = text2speech("foobar")["wav"]

soundfile.write("out.wav", speech.numpy(), text2speech.fs, "PCM_16")If you want to see how to load a specific model, you can click Use in ESPnet and you will be given a working snippet that you can load it!

Sharing your models

ESPnet outputs a zip file that can be uploaded to Hugging Face easily. For a full guide on sharing models, we recommend checking out the official guide).

The run.sh script allows to upload a given model to a Hugging Face repository.

./run.sh --stage 15 --skip_upload_hf false --hf_repo username/model_repoAdditional resources

- ESPnet docs.

- ESPnet model zoo repository.

- Integration docs.