Dataset Preview

The full dataset viewer is not available. Only showing a preview of the rows.

The dataset generation failed because of a cast error

Error code: DatasetGenerationCastError

Exception: DatasetGenerationCastError

Message: An error occurred while generating the dataset

All the data files must have the same columns, but at some point there are 3 new columns ({'check_flagged_words_criteria', 'check_stop_word_ratio_criteria', 'check_char_repetition_criteria'})

This happened while the json dataset builder was generating data using

hf://datasets/CarperAI/pile-v2-small-filtered/data/CodePileReddit2019/data.json (at revision e2f37e95cc5eb38359b6aefc2cbf98a50fd1b7e4)

Please either edit the data files to have matching columns, or separate them into different configurations (see docs at https://hf.co/docs/hub/datasets-manual-configuration#multiple-configurations)

Traceback: Traceback (most recent call last):

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 2011, in _prepare_split_single

writer.write_table(table)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/arrow_writer.py", line 585, in write_table

pa_table = table_cast(pa_table, self._schema)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/table.py", line 2302, in table_cast

return cast_table_to_schema(table, schema)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/table.py", line 2256, in cast_table_to_schema

raise CastError(

datasets.table.CastError: Couldn't cast

text: string

meta: string

id: int64

check_char_repetition_criteria: double

check_flagged_words_criteria: double

check_stop_word_ratio_criteria: double

to

{'id': Value(dtype='string', id=None), 'text': Value(dtype='string', id=None), 'meta': Value(dtype='string', id=None)}

because column names don't match

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/src/services/worker/src/worker/job_runners/config/parquet_and_info.py", line 1321, in compute_config_parquet_and_info_response

parquet_operations = convert_to_parquet(builder)

File "/src/services/worker/src/worker/job_runners/config/parquet_and_info.py", line 935, in convert_to_parquet

builder.download_and_prepare(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1027, in download_and_prepare

self._download_and_prepare(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1122, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 1882, in _prepare_split

for job_id, done, content in self._prepare_split_single(

File "/src/services/worker/.venv/lib/python3.9/site-packages/datasets/builder.py", line 2013, in _prepare_split_single

raise DatasetGenerationCastError.from_cast_error(

datasets.exceptions.DatasetGenerationCastError: An error occurred while generating the dataset

All the data files must have the same columns, but at some point there are 3 new columns ({'check_flagged_words_criteria', 'check_stop_word_ratio_criteria', 'check_char_repetition_criteria'})

This happened while the json dataset builder was generating data using

hf://datasets/CarperAI/pile-v2-small-filtered/data/CodePileReddit2019/data.json (at revision e2f37e95cc5eb38359b6aefc2cbf98a50fd1b7e4)

Please either edit the data files to have matching columns, or separate them into different configurations (see docs at https://hf.co/docs/hub/datasets-manual-configuration#multiple-configurations)
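One way to follow the first suggestion is to rewrite the offending file so that each record carries only the three columns the builder expects. The following is a minimal sketch, assuming the records are already loaded as Python dicts; `EXPECTED_COLUMNS` and `align_record` are hypothetical names, and casting `id` to a string is an assumption based on the `string` type shown for `id` in the target schema of the traceback.

```python
EXPECTED_COLUMNS = ("id", "text", "meta")  # target schema reported in the cast error


def align_record(record):
    """Keep only the expected columns and store id as a string (assumption)."""
    aligned = {name: record.get(name) for name in EXPECTED_COLUMNS}
    if aligned["id"] is not None:
        aligned["id"] = str(aligned["id"])
    return aligned


# A made-up row carrying the three extra filter columns named in the error.
row = {
    "text": "print('hi')",
    "meta": "{}",
    "id": 7,
    "check_char_repetition_criteria": 0.0,
    "check_flagged_words_criteria": 0.0,
    "check_stop_word_ratio_criteria": 0.5,
}
print(align_record(row))
```

If the extra columns are worth keeping, the alternative named in the message (a separate configuration for the files that have them) avoids discarding data.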

| id (string) | text (string) | meta (string) |
|---|---|---|

97090 | """

## Binary Classification using Graduate Admission Dataset

This notebook compares the performance of various machine learning classifiers on the "Graduate Admission" data. I'm still a student learning to implement machine learning techniques, so you're most welcome to suggest edits to this kernel; I am happy to learn.

"""

"""

## Setting up Google Colab

You can skip next 2 sections if you're not using Google Colab.

"""

## Uploading my kaggle.json (required for accessing Kaggle APIs)

from google.colab import files

files.upload()

## Install Kaggle API

!pip install -q kaggle

## Moving the json to appropriate place

!mkdir -p ~/.kaggle

!cp kaggle.json ~/.kaggle/

!chmod 600 /root/.kaggle/kaggle.json

"""

### Getting the data

I'll use Kaggle API for getting the data directly into this instead of pulling the data from Google Drive.

"""

!kaggle datasets download mohansacharya/graduate-admissions

!echo "========================================================="

!ls

!unzip graduate-admissions.zip

!echo "========================================================="

!ls

"""

### Imports

"""

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

pd.set_option('display.max_columns', 60)

%matplotlib inline

"""

### Data exploration

"""

FILE_NAME = "../input/Admission_Predict_Ver1.1.csv"

raw_data = pd.read_csv(FILE_NAME)

raw_data.head()

## Are any null values present?

raw_data.isnull().values.any()

## So no NaNs apparently

## Let's just quickly rename the dataframe columns to make easy references

## Note the trailing space at the end of the 'Chance of Admit ' column name (and of 'LOR ')

raw_data.rename(columns = {

'Serial No.' : 'srn',

'GRE Score' : 'gre',

'TOEFL Score': 'toefl',

'University Rating' : 'unirating',

'SOP' : 'sop',

'LOR ' : 'lor',

'CGPA' : 'cgpa',

'Research' : 'research',

'Chance of Admit ': 'chance'

}, inplace=True)

raw_data.describe()

"""

### Analyzing the factors influencing the admission :

From what I've heard from my relatives, seniors and friends, you need an excellent CGPA from a good university. Let's verify that first.

"""

fig, ax = plt.subplots(ncols = 2)

sns.regplot(x='chance', y='cgpa', data=raw_data, ax=ax[0])

sns.regplot(x='chance', y='unirating', data=raw_data, ax=ax[1])

"""

### Effect of GRE/TOEFL :

Let's see if the GRE/TOEFL score matters at all. From what I've heard from my seniors, relatives and friends, these exams don't matter once your score is above some threshold.

"""

fig, ax = plt.subplots(ncols = 2)

sns.regplot(x='chance', y='gre', data=raw_data, ax=ax[0])

sns.regplot(x='chance', y='toefl', data=raw_data, ax=ax[1])

"""

### Effect of SOP / LOR / Research :

I decided to analyze these separately, since they are not academic scores and count mostly as extracurricular or writing skills.

"""

fig, ax = plt.subplots(ncols = 3)

sns.regplot(x='chance', y='sop', data=raw_data, ax=ax[0])

sns.regplot(x='chance', y='lor', data=raw_data, ax=ax[1])

sns.regplot(x='chance', y='research', data=raw_data, ax=ax[2])

"""

### Conclusions :

CGPA, GRE and TOEFL are extremely important, and each varies almost linearly with the chance of admission (TOEFL strikingly so). For the other factors, you need _just enough_ of a score to get admission.

We will convert the 'Chance of Admission' column into 0 or 1 and then use binary classification algorithms to see if you can get an admission or not.

"""

THRESH = 0.6

# I think we can also drop srn as it is not doing absolutely anything

raw_data.drop('srn', axis=1, inplace=True)

raw_data['chance'] = np.where(raw_data['chance'] > THRESH, 1, 0)

raw_data.head()

raw_data.describe()

"""

### Train-Test split :

Since we have little data, I am going to use a traditional 70-30 train-test split. I may update this in the future if the dataset author adds more data.

"""

X = raw_data.drop(columns='chance')

Y = raw_data['chance'].values.reshape(raw_data.shape[0], 1)

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.3)

print("Training set ...")

print("X_train.shape = {}, Y_train.shape = {}".format(X_train.shape,
                                                      Y_train.shape))

print("Test set ...")

print("X_test.shape = {}, Y_test.shape = {}".format(X_test.shape,
                                                    Y_test.shape))

from sklearn.metrics import mean_absolute_error as mae

"""

### Trying out Logistic Regression :

Let's apply good old logistic regression to see if it can classify the dataset properly.

"""

from sklearn.linear_model import LogisticRegression

clf = LogisticRegression(penalty='l1', solver='liblinear', max_iter=3000000,

tol=1e-8)

clf.fit(X_train, Y_train)

Y_pred = clf.predict(X_test)

print("Mean Absolute Error = ", mae(Y_test, Y_pred))
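Because both the true labels and the predictions are 0 or 1 here, the mean absolute error printed above is simply the fraction of misclassified rows, that is, one minus the accuracy. A small sketch with made-up labels shows the relationship; the two helper functions are pure-Python stand-ins written for illustration, not the scikit-learn implementations.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between labels and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 0, 1, 1, 0]  # made-up labels
y_pred = [1, 1, 1, 0, 0]  # made-up predictions

mae_value = mean_absolute_error(y_true, y_pred)
acc_value = accuracy(y_true, y_pred)
print(mae_value, acc_value)  # the two values sum to 1 for 0/1 labels
```

So a lower MAE in the cells of this notebook reads as a higher accuracy; reporting accuracy directly would arguably be the more conventional choice for a classifier.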

"""

### Trying out LinearSVC :

The roughly linear relationship between three of the features and the target suggests trying LinearSVC.

"""

from sklearn.svm import LinearSVC

clf = LinearSVC(verbose=1, max_iter=3000000, tol=1e-8, C=1.25)

clf.fit(X_train, Y_train)

Y_pred = clf.predict(X_test)

print("Mean Absolute Error = ", mae(Y_test, Y_pred))

"""

### Trying out Bernoulli Naive Bayes

As described [here](https://towardsdatascience.com/naive-bayes-classifier-81d512f50a7c), Bernoulli Naive Bayes can be used for binary classification assuming all factors equally influence the output.

"""

from sklearn.naive_bayes import BernoulliNB

clf = BernoulliNB()

clf.fit(X_train, Y_train)

Y_pred = clf.predict(X_test)

print("Mean Absolute Error = ", mae(Y_test, Y_pred))

"""

### Trying out Decision Tree Classifier

"""

from sklearn.tree import DecisionTreeClassifier

clf = DecisionTreeClassifier(max_depth=10)

clf.fit(X_train, Y_train)

Y_pred = clf.predict(X_test)

print("Mean Absolute Error = ", mae(Y_test, Y_pred))

"""

### Conclusion :

Most of the classifiers don't work very well on the data with the currently chosen hyper-parameters. This may be due to the small size of the dataset.

I'm still new to machine learning; if you think I've done something wrong and want to correct me, you're most welcome to.

""" | {'source': 'AI4Code', 'id': 'b24cf5394d60f5'} |

116041 | """

#### this notebook is part of the documentation on my HPA approach

-> main notebook: https://www.kaggle.com/philipjamessullivan/0-hpa-approach-summary

## 7: network training

-> https://www.kaggle.com/philipjamessullivan/7-train-effnetb0-version-a-part-1

-> https://www.kaggle.com/philipjamessullivan/7-train-effnetb1-version-a-part-1

-> https://www.kaggle.com/philipjamessullivan/7-train-effnetb2-version-a-part-1

-> https://www.kaggle.com/philipjamessullivan/7-train-effnetb3-version-a-part-1

##### Network Architecture: Efficientnet (B0 to B3) with a Dropout layer of 0.3

#### Goal: further refine model

##### INPUT:

**resized cropped RGB cell images**:

EfficientnetB0 -> 224x224

EfficientnetB1 -> 240x240

EfficientnetB2 -> 260x260

EfficientnetB3 -> 300x300

**specific subset of ground truth dataframe**:

Columns: img_id, Label, Cell#, bbox coords, path

##### OUTPUT:

**model checkpoints**:

one after each epoch

**training history**:

shown as printed array on screen with f1 loss and f1 score per step

"""

import pickle

import pandas as pd

hpa_df = pd.read_pickle("../input/gtdataframes/dataset_partial_300.pkl")

hpa_df

LABELS= {

0: "Nucleoplasm",

1: "Nuclear membrane",

2: "Nucleoli",

3: "Nucleoli fibrillar center",

4: "Nuclear speckles",

5: "Nuclear bodies",

6: "Endoplasmic reticulum",

7: "Golgi apparatus",

8: "Intermediate filaments",

9: "Actin filaments",

10: "Microtubules",

11: "Mitotic spindle",

12: "Centrosome",

13: "Plasma membrane",

14: "Mitochondria",

15: "Aggresome",

16: "Cytosol",

17: "Vesicles and punctate cytosolic patterns",

18: "Negative"

}

import tensorflow as tf

print(tf.__version__)

from tensorflow.keras.layers import *

from tensorflow.keras.models import *

import tensorflow_addons as tfa

import os

import re

import cv2

import glob

import numpy as np

import pandas as pd

import seaborn as sns

from functools import partial

import matplotlib.pyplot as plt

IMG_WIDTH = 300

IMG_HEIGHT = 300

BATCH_SIZE = 16

AUTOTUNE = tf.data.experimental.AUTOTUNE

#%%script echo skipping

#set an amount of folds to split dataframe into --> k-fold cross validation

# explanation: https://towardsdatascience.com/cross-validation-explained-evaluating-estimator-performance-e51e5430ff85

N_FOLDS=5

#choose which one of the 5 folds will be used as validation set this time

i_VAL_FOLD=1

hpa_df=np.array_split(hpa_df, N_FOLDS+1) #add one extra part for testing set

df_test_split=hpa_df[-1]

hpa_df=hpa_df[:-1]

i_training = [i for i in range(N_FOLDS)]

i_training.pop(i_VAL_FOLD-1)

i_validation=i_VAL_FOLD-1

df_train_split=list()

for i in i_training:

df_train_split.append(hpa_df[i])

df_train_split=pd.concat(df_train_split)

df_val_split=hpa_df[i_validation]

print(len(df_train_split))

print(len(df_val_split))

print(len(df_test_split))
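The split above can be pictured with plain lists. This is a sketch under assumptions: `split_into_parts` is a hypothetical helper that mimics how the rows are cut into `N_FOLDS + 1` nearly equal consecutive chunks, and the 23 integer "rows" are made up.

```python
def split_into_parts(items, n_parts):
    """Split a list into n_parts nearly equal consecutive chunks."""
    base, extra = divmod(len(items), n_parts)
    parts, start = [], 0
    for i in range(n_parts):
        size = base + (1 if i < extra else 0)  # first `extra` chunks get one more item
        parts.append(items[start:start + size])
        start += size
    return parts


N_FOLDS = 5
VAL_FOLD = 1  # 1-based, as in the cell above

rows = list(range(23))  # stand-in for dataframe rows
parts = split_into_parts(rows, N_FOLDS + 1)
test_rows = parts[-1]   # the extra part is held out for testing
folds = parts[:-1]
val_rows = folds[VAL_FOLD - 1]
train_rows = [r for i, fold in enumerate(folds) if i != VAL_FOLD - 1 for r in fold]
print(len(train_rows), len(val_rows), len(test_rows))
```

Note that the chunks are consecutive, so the resulting folds depend on the row order of the dataframe; shuffling beforehand would be needed if the rows are grouped in any way.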

#%%script echo skipping

#analyze class imbalance and set up class weights here

#https://www.analyticsvidhya.com/blog/2020/10/improve-class-imbalance-class-weights/

y_train=df_train_split["Label"].apply(lambda x:list(map(int, x.split("|"))))

y_train=y_train.values

y_train=np.concatenate(y_train)

from sklearn.utils import class_weight

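# keyword arguments: recent scikit-learn versions no longer accept these positionally
class_weights = class_weight.compute_class_weight(class_weight='balanced',
                                                  classes=np.unique(y_train),
                                                  y=y_train)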

#%%script echo skipping

tmp_dict={}

for i in range(len(LABELS)):

tmp_dict[i]=class_weights[i]

class_weights=tmp_dict

class_weights
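For reference, the `'balanced'` heuristic assigns each class the weight `n_samples / (n_classes * count)`, so rare classes get larger weights. A minimal pure-Python sketch with made-up labels follows; `balanced_class_weights` is a hypothetical helper, not the scikit-learn function.

```python
from collections import Counter


def balanced_class_weights(labels):
    """weight[c] = n_samples / (n_classes * count[c])"""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {c: n_samples / (n_classes * counts[c]) for c in sorted(counts)}


labels = [0, 0, 0, 0, 0, 0, 1, 1, 2, 2]  # made-up, class 0 dominates
weights = balanced_class_weights(labels)
print(weights)
```

Only classes that actually occur in the labels receive a weight, so the loop over `range(len(LABELS))` in the cell above assumes that all 19 classes appear in the training split.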

#%%script echo skipping

# adapted from https://www.kaggle.com/ayuraj/hpa-multi-label-classification-with-tf-and-w-b

@tf.function

def multiple_one_hot(cat_tensor, depth_list):

"""Creates one-hot-encodings for multiple categorical attributes and

concatenates the resulting encodings

Args:

cat_tensor (tf.Tensor): tensor with multiple columns containing categorical features

depth_list (list): list of the no. of values (depth) for each categorical feature

Returns:

one_hot_enc_tensor (tf.Tensor): concatenated one-hot-encodings of cat_tensor

"""

    # use the function argument cat_tensor (cat_int_tensor was undefined)
    one_hot_enc_tensor = tf.one_hot(cat_tensor[:, 0], depth_list[0], axis=1)
    for col in range(1, len(depth_list)):
        add = tf.one_hot(cat_tensor[:, col], depth_list[col], axis=1)
        one_hot_enc_tensor = tf.concat([one_hot_enc_tensor, add], axis=1)
    return one_hot_enc_tensor

@tf.function

def load_image(df_dict):

# Load image

rgb = tf.io.read_file(df_dict['path'])

image = tf.image.decode_png(rgb, channels=3)

#https://medium.com/@kyawsawhtoon/a-tutorial-to-histogram-equalization-497600f270e2

image=tf.image.per_image_standardization(image)

# Parse label

label = tf.strings.split(df_dict['Label'], sep='|')

label = tf.strings.to_number(label, out_type=tf.int32)

label = tf.reduce_sum(tf.one_hot(indices=label, depth=19), axis=0)

return image, label
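The label parsing in `load_image` turns a string such as `"0|5|16"` into a 19-element multi-hot vector by summing one one-hot vector per listed class. The same idea in plain Python, as a sketch (`multi_hot` is a hypothetical name and the label string is made up):

```python
NUM_CLASSES = 19  # matches depth=19 in load_image


def multi_hot(label_string, depth=NUM_CLASSES, sep="|"):
    """Sum of one-hot vectors, one per class id in the label string."""
    vector = [0.0] * depth
    for token in label_string.split(sep):
        vector[int(token)] += 1.0
    return vector


encoded = multi_hot("0|5|16")
print(encoded)
```

Because the vectors are summed, a class id that appears twice in a label string would produce 2.0 rather than 1.0; this sketch keeps that behavior on purpose.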

#%%script echo skipping

train_ds = tf.data.Dataset.from_tensor_slices(dict(df_train_split))

val_ds = tf.data.Dataset.from_tensor_slices(dict(df_val_split))

# Training Dataset

train_ds = (

train_ds

.shuffle(1024)

.map(load_image, num_parallel_calls=AUTOTUNE)

.batch(BATCH_SIZE)

.prefetch(tf.data.experimental.AUTOTUNE)

)

# Validation Dataset

val_ds = (

val_ds

.shuffle(1024)

.map(load_image, num_parallel_calls=AUTOTUNE)

.batch(BATCH_SIZE)

.prefetch(tf.data.experimental.AUTOTUNE)

)

#%%script echo skipping

def get_label_name(labels):

l = np.where(labels == 1.)[0]

label_names = []

for label in l:

label_names.append(LABELS[label])

return '-'.join(str(label_name) for label_name in label_names)

def show_batch(image_batch, label_batch):

plt.figure(figsize=(20,20))

for n in range(10):

ax = plt.subplot(5,5,n+1)

plt.imshow(image_batch[n])

plt.title(get_label_name(label_batch[n].numpy()))

plt.axis('off')

#%%script echo skipping

# Training batch

image_batch, label_batch = next(iter(train_ds))

show_batch(image_batch, label_batch)

#print(label_batch)

#%%script echo skipping

def get_model():

base_model = tf.keras.applications.EfficientNetB3(include_top=False, weights='imagenet')

base_model.trainable = True

inputs = Input((IMG_HEIGHT, IMG_WIDTH, 3))

x = base_model(inputs, training=True)

x = GlobalAveragePooling2D()(x)

x = Dropout(0.3)(x)

outputs = Dense(len(LABELS), activation='sigmoid')(x)

return Model(inputs, outputs)

tf.keras.backend.clear_session()

model = get_model()

model.summary()

#%%script echo skipping

time_stopping_callback = tfa.callbacks.TimeStopping(seconds=int(round(60*60*8)), verbose=1) #8h to not exceed allowance

earlystopper = tf.keras.callbacks.EarlyStopping(

monitor='val_loss', patience=10, verbose=0, mode='min',

restore_best_weights=True

)

lronplateau = tf.keras.callbacks.ReduceLROnPlateau(

monitor='val_loss', factor=0.5, patience=5, verbose=0,

mode='auto', min_delta=0.0001, cooldown=0, min_lr=0

)

#%%script echo skipping

#set up checkpoint save

#source:https://www.tensorflow.org/tutorials/keras/save_and_load

!pip install -q pyyaml h5py

import os

checkpoint_path_input = "../input/traineffnetb3versionapart1/cp.ckpt"

checkpoint_path = "./cp.ckpt"

checkpoint_dir = os.path.dirname(checkpoint_path)

# Create a callback that saves the model's weights

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_path,

save_weights_only=True,

verbose=1)

#%%script echo skipping

import keras.backend as K

K_epsilon = K.epsilon()

def f1(y_true, y_pred):

#y_pred = K.round(y_pred)

y_pred = K.cast(K.greater(K.clip(y_pred, 0, 1), 0.5), K.floatx())

tp = K.sum(K.cast(y_true*y_pred, 'float'), axis=0)

tn = K.sum(K.cast((1-y_true)*(1-y_pred), 'float'), axis=0)

fp = K.sum(K.cast((1-y_true)*y_pred, 'float'), axis=0)

fn = K.sum(K.cast(y_true*(1-y_pred), 'float'), axis=0)

p = tp / (tp + fp + K_epsilon)

r = tp / (tp + fn + K_epsilon)

f1 = 2*p*r / (p+r+K_epsilon)

f1 = tf.where(tf.math.is_nan(f1), tf.zeros_like(f1), f1)

return K.mean(f1)

def f1_loss(y_true, y_pred):

#y_pred = K.cast(K.greater(K.clip(y_pred, 0, 1), THRESHOLD), K.floatx())

tp = K.sum(K.cast(y_true*y_pred, 'float'), axis=0)

tn = K.sum(K.cast((1-y_true)*(1-y_pred), 'float'), axis=0)

fp = K.sum(K.cast((1-y_true)*y_pred, 'float'), axis=0)

fn = K.sum(K.cast(y_true*(1-y_pred), 'float'), axis=0)

p = tp / (tp + fp + K_epsilon)

r = tp / (tp + fn + K_epsilon)

f1 = 2*p*r / (p+r+K_epsilon)

f1 = tf.where(tf.math.is_nan(f1), tf.zeros_like(f1), f1)

return 1-K.mean(f1)
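The difference between `f1` and `f1_loss` above is that the loss skips the 0.5 threshold and plugs the raw probabilities into the true-positive, false-positive and false-negative sums, which keeps it differentiable. A pure-Python sketch of that "soft" F1 loss, with made-up inputs and `EPS` standing in for `K.epsilon()` (an assumption about its value):

```python
EPS = 1e-7  # stand-in for K.epsilon()


def soft_f1_loss(y_true, y_prob):
    """1 - mean per-class F1, computed from probabilities instead of hard 0/1 predictions."""
    n_classes = len(y_true[0])
    f1_per_class = []
    for c in range(n_classes):
        tp = sum(t[c] * p[c] for t, p in zip(y_true, y_prob))
        fp = sum((1 - t[c]) * p[c] for t, p in zip(y_true, y_prob))
        fn = sum(t[c] * (1 - p[c]) for t, p in zip(y_true, y_prob))
        precision = tp / (tp + fp + EPS)
        recall = tp / (tp + fn + EPS)
        f1_per_class.append(2 * precision * recall / (precision + recall + EPS))
    return 1 - sum(f1_per_class) / n_classes


y_true = [[1, 0], [0, 1], [1, 1]]               # made-up multi-label targets
perfect = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # confident and correct
unsure = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]   # always 0.5
print(soft_f1_loss(y_true, perfect), soft_f1_loss(y_true, unsure))
```

Confident, correct probabilities drive the loss toward 0, while uninformative ones leave it well above 0.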

#%%script echo skipping

import tensorflow as tf

import timeit

device_name = tf.test.gpu_device_name()

if "GPU" not in device_name:
    print("GPU device not found")
else:
    print('Found GPU at: {}'.format(device_name))

#https://stackoverflow.com/questions/47490834/how-can-i-print-the-learning-rate-at-each-epoch-with-adam-optimizer-in-keras

import keras

def get_lr_metric(optimizer):

def lr(y_true, y_pred):

return optimizer._decayed_lr(tf.float32)

return lr

optimizer = keras.optimizers.Adam()

lr_metric = get_lr_metric(optimizer)

#%%script echo skipping

# Initialize model

tf.keras.backend.clear_session()

#model = get_model()

model.load_weights(checkpoint_path_input)

# Compile model

model.compile(

optimizer=optimizer,

loss=f1_loss,

metrics=[f1, lr_metric])

# Train

history=model.fit(

train_ds,

epochs=1000,

validation_data=val_ds,

class_weight=class_weights,

callbacks=[cp_callback,earlystopper,time_stopping_callback])

#%%script echo skipping

history.history

#%%script echo skipping

#source: https://machinelearningmastery.com/display-deep-learning-model-training-history-in-keras/

# list all data in history

print(history.history.keys())

# summarize history for f1

plt.plot(history.history['f1'])

plt.plot(history.history['val_f1'])

plt.title('f1')

plt.ylabel('f1')

plt.xlabel('epoch')

plt.legend(['train', 'val'], loc='upper left')

plt.show()

# summarize history for loss

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'val'], loc='upper left')

plt.show()

# summarize history for lr

plt.plot(history.history['lr'])

plt.title('learning rate')

plt.ylabel('learning rate')

plt.xlabel('epoch')

plt.legend(['train'], loc='upper left')

plt.show()

with open('./historyhistory.pkl', 'wb') as handle:

pickle.dump(history.history, handle, protocol=pickle.HIGHEST_PROTOCOL)

with open('./history.pkl', 'wb') as handle:

pickle.dump(history, handle, protocol=pickle.HIGHEST_PROTOCOL) | {'source': 'AI4Code', 'id': 'd5668563d2cdd3'} |

37319 | """

* V14: train with tri grams and generate new vocab, num feat = 15000

"""

# This Python 3 environment comes with many helpful analytics libraries installed

# It is defined by the kaggle/python docker image: https://github.com/kaggle/docker-python

# For example, here's several helpful packages to load in

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the "../input/" directory.

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk('/kaggle/input'):

for filename in filenames:

print(os.path.join(dirname, filename))

# Any results you write to the current directory are saved as output.

! pip install underthesea

import numpy as np

import pandas as pd

import pylab as plt

import matplotlib.pyplot as plt

import plotly.offline as py

import plotly.graph_objs as go

py.init_notebook_mode(connected=True)

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

from sklearn.model_selection import KFold, cross_val_score

from sklearn.metrics import cohen_kappa_score

from tensorflow.python.keras.models import Sequential, load_model

from tensorflow.python.keras.layers import Dense, Dropout

from tensorflow.python.keras import optimizers

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.preprocessing import OneHotEncoder, LabelEncoder

import pickle

import seaborn as sns

from imblearn.over_sampling import SMOTE

from nltk.corpus import stopwords

import keras

import io

import requests

import nltk

nltk.download('punkt')

nltk.download('stopwords')

from underthesea import word_tokenize

from tensorflow.python.client import device_lib

print(device_lib.list_local_devices())

from keras import backend as K

K.tensorflow_backend._get_available_gpus()

import codecs

def create_stopwordlist():

f = codecs.open('/kaggle/input/vietnamese-stopwords/vietnamese-stopwords.txt', encoding='utf-8')

data = []

null_data = []

for i, line in enumerate(f):

line = repr(line)

line = line[1:len(line)-3]

data.append(line)

return data

stopword_vn = create_stopwordlist()

import string

def tokenize(text):

text = text.translate(str.maketrans('', '', string.punctuation))

return [word for word in word_tokenize(text.lower()) if word not in stopword_vn]

def choose_vectorizer(option, name='tf_idf'):

if option == 'generate':

if name == 'tf_idf':

vectorizer = TfidfVectorizer(tokenizer = tokenize,ngram_range=(1,4), min_df=5, max_df= 0.8, max_features= 5000, sublinear_tf=True)

else:

vectorizer = CountVectorizer(tokenizer = tokenize, ngram_range=(1,4), max_df=0.8, min_df=5, max_features = 5000)  # CountVectorizer has no sublinear_tf option

elif option == 'load':

if name == 'tf_idf':

vectorizer = TfidfVectorizer(vocabulary = pickle.load(open('../input/kpdl-data/vocabulary_2.pkl', 'rb')), ngram_range=(1,3), min_df=5, max_df= 0.8, max_features=15000, sublinear_tf=True)

else:

vectorizer = CountVectorizer(vocabulary = pickle.load(open('../input/kpdl-data/vocabulary_2.pkl', 'rb')), ngram_range=(1,3), max_df=0.8, min_df=5, max_features = 15000)  # CountVectorizer has no sublinear_tf option

return vectorizer
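The `ngram_range` argument controls which word n-grams become features: `(1, 3)` means unigrams, bigrams and trigrams. A small sketch of that expansion, assuming the comment has already been tokenized; `word_ngrams` is a hypothetical helper and the tokens are made up.

```python
def word_ngrams(tokens, ngram_range=(1, 3)):
    """All contiguous word n-grams with low <= n <= high, joined by spaces."""
    low, high = ngram_range
    grams = []
    for n in range(low, high + 1):
        for i in range(len(tokens) - n + 1):
            grams.append(" ".join(tokens[i:i + n]))
    return grams


tokens = ["giao", "hàng", "rất", "nhanh"]  # made-up, already tokenized comment
grams = word_ngrams(tokens)
print(grams)
```

The note at the top of this notebook mentions trigrams, while the `'generate'` branch uses `ngram_range=(1,4)`, which would also include four-word sequences.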

# data = pd.read_csv('../input/kpdl-data/train_v1.csv')

data = pd.read_csv('../input/vietnamese-sentiment-analyst/data - data.csv')

data.head(2)

df = data.loc[data['comment'].isnull()]  # comparing with == None never matches missing values

df

category = data['rate'].unique()

category_to_id = {cate: idx for idx, cate in enumerate(category)}

id_to_category = {idx: cate for idx, cate in enumerate(category)}

print(category_to_id)

print(id_to_category)

"""

### Distribution of label

"""

data_label = data['rate']

data_label = pd.DataFrame(data_label, columns=['rate']).groupby('rate').size()

data_label.plot.pie(figsize=(15, 15), autopct="%.2f%%", fontsize=12)

X_train, X_test, y_train, y_test = train_test_split(data['comment'], data['rate'], test_size = .15, shuffle = True, stratify=data['rate'])

X_train, X_valid, y_train, y_valid = train_test_split(X_train, y_train, test_size = .2, shuffle = True, stratify=y_train)

X_train.shape

X_train_df = pd.DataFrame(X_train, columns=['comment'])

X_valid_df = pd.DataFrame(X_valid, columns=['comment'])

X_test_df = pd.DataFrame(X_test, columns=['comment'])

print(X_train_df.head(10))

print(X_valid_df.head(10))

print(X_test_df.head(10))

y_train

"""

### Check distribution of train, valid and test set

"""

%%time

options = ['generate', 'load']

# 0 to generate, 1 to load (choose wisely, your life depends on it!)

option = options[0]

vectorizer = choose_vectorizer(option)

X_train = vectorizer.fit_transform(X_train).toarray()

X_valid = vectorizer.transform(X_valid).toarray()

X_test = vectorizer.transform(X_test).toarray()

if option == 'generate':

pickle.dump(vectorizer.vocabulary_, open('vocabulary_3.pkl', 'wb'))

print(X_train.shape, X_valid.shape, X_test.shape)

X_train.shape

y_ = y_train.map(category_to_id).values

y_train = np.zeros((len(y_), y_.max()+1))

y_train[np.arange(len(y_)), y_] = 1

# y_train = y_

y_ = y_test.map(category_to_id).values

y_test = np.zeros((len(y_), y_.max()+1))

y_test[np.arange(len(y_)), y_] = 1

# y_test = y_

y_ = y_valid.map(category_to_id).values

y_valid = np.zeros((len(y_), y_.max()+1))

y_valid[np.arange(len(y_)), y_] = 1

# y_valid = y_

print(y_train.sum(1))

print(y_valid.sum(1))

print(y_test.sum(1))

print(y_train.shape, y_valid.shape, y_test.shape)

from keras.callbacks import EarlyStopping, ReduceLROnPlateau, ModelCheckpoint

DROPOUT = 0.3

ACTIVATION = "relu"

model = Sequential([

Dense(1000, activation=ACTIVATION, input_dim=X_train.shape[1]),

Dropout(DROPOUT),

Dense(500, activation=ACTIVATION),

Dropout(DROPOUT),

Dense(300, activation=ACTIVATION),

Dropout(DROPOUT),

# Dense(200, activation=ACTIVATION),

# Dropout(DROPOUT),

# Dense(100, activation=ACTIVATION),

# Dropout(DROPOUT),

# Dense(50, activation=ACTIVATION),

# Dropout(DROPOUT),

Dense(5, activation='softmax'),

])

def recall_m(y_true, y_pred):

true_positives = K.sum(K.round(K.clip(y_true * y_pred, 0, 1)))

possible_positives = K.sum(K.round(K.clip(y_true, 0, 1)))

recall = true_positives / (possible_positives + K.epsilon())

return recall

def precision_m(y_true, y_pred):

true_positives = K.sum(K.round(K.clip(y_true * y_pred, 0, 1)))

predicted_positives = K.sum(K.round(K.clip(y_pred, 0, 1)))

precision = true_positives / (predicted_positives + K.epsilon())

return precision

def f1_m(y_true, y_pred):

precision = precision_m(y_true, y_pred)

recall = recall_m(y_true, y_pred)

return 2*((precision*recall)/(precision+recall+K.epsilon()))

model.compile(optimizer=optimizers.Adam(0.001), loss='categorical_crossentropy', metrics=['acc', f1_m,precision_m, recall_m])

model.summary()

es = EarlyStopping(monitor='val_f1_m', mode='max', verbose=1, patience=5)

reduce_lr = ReduceLROnPlateau(monitor='val_f1_m', mode='max', factor=0.2, patience=8, min_lr=1e-7)  # higher F1 is better; min_lr must be a small floor

checkpoint = ModelCheckpoint('best_full.h5', monitor='val_f1_m', verbose=0, save_best_only=False, save_weights_only=False, mode='auto', period=1)

EPOCHS = 25

BATCHSIZE = 4

model.fit(X_train, y_train, epochs=EPOCHS, batch_size=BATCHSIZE, validation_data=(X_valid, y_valid), callbacks=[es, reduce_lr, checkpoint])
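Both callbacks above watch `val_f1_m`, but early stopping uses a patience of 5 while the learning-rate schedule uses a patience of 8. The sketch below is a simplified model of patience counting, not the Keras implementation: it assumes both callbacks treat a higher score as better and count consecutive epochs without a new best. `first_trigger_epoch` and the score sequence are made up for illustration.

```python
def first_trigger_epoch(scores, patience):
    """Return the 1-based epoch at which `patience` consecutive epochs
    without a new best (higher) score have accumulated, or None."""
    best = float("-inf")
    wait = 0
    for epoch, score in enumerate(scores, start=1):
        if score > best:
            best, wait = score, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


# Made-up validation F1 values that plateau after the third epoch.
val_f1 = [0.50, 0.55, 0.58, 0.57, 0.57, 0.56, 0.58, 0.57, 0.56, 0.55, 0.55]
stop_epoch = first_trigger_epoch(val_f1, patience=5)
lr_epoch = first_trigger_epoch(val_f1, patience=8)
print(stop_epoch, lr_epoch)
```

Under these assumptions, training would stop before the learning rate is ever reduced, so a schedule patience smaller than the early-stopping patience is probably what was intended.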

x = np.arange(EPOCHS)

history = model.history.history

# import tensorflow as tf

# model = tf.keras.models.load_model('../input/kpdl-base/my_model.h5')

from keras.models import Sequential

from keras.layers import Dense

from keras.models import model_from_json

model.save('my_model.h5')

"""

### Predict in train, valid and test set

"""

predict_train = model.predict(X_train)

predict_valid = model.predict(X_valid)

predict_test = model.predict(X_test)

print(predict_train.shape, predict_valid.shape, predict_test.shape)

predict_train_label = predict_train.argmax(-1)

predict_valid_label = predict_valid.argmax(-1)

predict_test_label = predict_test.argmax(-1)

# predict_train_label

predict_train_label = [id_to_category[predict_train_label[idx]] for idx in range(len(predict_train))]

predict_valid_label = [id_to_category[predict_valid_label[idx]] for idx in range(len(predict_valid))]

predict_test_label = [id_to_category[predict_test_label[idx]] for idx in range(len(predict_test))]

# predict_train_label

y_train_true = y_train.argmax(-1)

y_valid_true = y_valid.argmax(-1)

y_test_true = y_test.argmax(-1)

# y_train_true = y_train

# y_valid_true = y_valid

# y_test_true = y_test

# y_train_true

# y_train_true

y_train_label = [id_to_category[y_train_true[idx]] for idx in range(len(y_train_true))]

y_valid_label = [id_to_category[y_valid_true[idx]] for idx in range(len(y_valid_true))]

y_test_label = [id_to_category[y_test_true[idx]] for idx in range(len(y_test_true))]

train_concat = np.concatenate((np.array(X_train_df['comment'].values).reshape(-1, 1), np.array(y_train_label).reshape(-1, 1), np.array(predict_train_label).reshape(-1, 1)), axis=-1)

valid_concat = np.concatenate((np.array(X_valid_df['comment'].values).reshape(-1, 1), np.array(y_valid_label).reshape(-1, 1), np.array(predict_valid_label).reshape(-1, 1)), axis=-1)

test_concat = np.concatenate((np.array(X_test_df['comment'].values).reshape(-1, 1), np.array(y_test_label).reshape(-1, 1), np.array(predict_test_label).reshape(-1, 1)), axis=-1)

# train_concat = np.concatenate((np.array(X_train_df['Content'].values).reshape(-1, 1), np.array(y_train_label).reshape(-1, 1)), axis=-1)

# valid_concat = np.concatenate((np.array(X_valid_df['Content'].values).reshape(-1, 1), np.array(y_valid_label).reshape(-1, 1)), axis=-1)

# test_concat = np.concatenate((np.array(X_test_df['Content'].values).reshape(-1, 1), np.array(y_test_label).reshape(-1, 1)), axis=-1)

# train_concat_predict_df = pd.DataFrame(train_concat, columns=['Content', 'True_Label'])

# valid_concat_predict_df = pd.DataFrame(valid_concat, columns=['Content', 'True_Label'])

# test_concat_predict_df = pd.DataFrame(test_concat, columns=['Content', 'True_Label'])

train_concat_predict_df = pd.DataFrame(train_concat, columns=['comment', 'True_Label', 'Predict'])

valid_concat_predict_df = pd.DataFrame(valid_concat, columns=['comment', 'True_Label', 'Predict'])

test_concat_predict_df = pd.DataFrame(test_concat, columns=['comment', 'True_Label', 'Predict'])

train_concat_predict_df.head(20)

valid_concat_predict_df.head(20)

test_concat_predict_df.head(20)

"""

### Save predictions to CSV files

"""

train_concat_predict_df.to_csv('train_concat_predict_df.csv', index=False)

valid_concat_predict_df.to_csv('valid_concat_predict_df.csv', index=False)

test_concat_predict_df.to_csv('test_concat_predict_df.csv', index=False)

predict_test

from sklearn.metrics import confusion_matrix

import seaborn as sns

plt.figure(figsize=(8, 8))

conf_mat = confusion_matrix(y_test_true, predict_test.argmax(-1))

sns.heatmap(conf_mat, annot=True, fmt='d',

xticklabels=id_to_category.values(), yticklabels=id_to_category.values())

plt.ylabel('Actual')

plt.xlabel('Predicted')

# y_test_true

predict_test = predict_test.argmax(-1)

from sklearn.metrics import f1_score

score = f1_score(y_test_true, predict_test, average='weighted')

score
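With `average='weighted'`, the F1 score of each class is weighted by its support, meaning the number of true samples of that class. A pure-Python sketch with made-up labels; `weighted_f1` is a hypothetical helper written for illustration, not the scikit-learn function.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Per-class F1 averaged with weights equal to each class's support."""
    support = Counter(y_true)
    total = 0.0
    for cls, n_true in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
        fn = n_true - tp
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        total += f1 * n_true
    return total / len(y_true)


y_true_demo = [0, 0, 0, 1, 1, 2]  # made-up labels
y_pred_demo = [0, 0, 1, 1, 1, 0]  # made-up predictions
demo_score = weighted_f1(y_true_demo, y_pred_demo)
print(round(demo_score, 4))
```

A class that is never predicted correctly still pulls the average down in proportion to how often it occurs, which matters for imbalanced rating distributions.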

"""

### List the comments that the model predicted incorrectly

"""

labels = data['rate'].unique()

for label in labels:
    wrong = []
    df = test_concat_predict_df.loc[test_concat_predict_df['True_Label'] == label]
    df_content = df.values
    for row in df_content:
        # keep rows whose true label differs from the predicted label
        if int(row[1]) != int(row[2]):
            wrong.append(row)
    df_wrong = pd.DataFrame(wrong, columns=['rate', 'true', 'predict'])
    df_wrong.to_csv(f'{label}_test.csv')
    print(label, df_wrong)

for label in labels:
    wrong = []
    df = valid_concat_predict_df.loc[valid_concat_predict_df['True_Label'] == label]
    df_content = df.values
    for row in df_content:
        # keep rows whose true label differs from the predicted label
        if int(row[1]) != int(row[2]):
            wrong.append(row)
    df_wrong = pd.DataFrame(wrong, columns=['rate', 'true', 'predict'])
    df_wrong.to_csv(f'{label}_valid.csv')
    print(label, df_wrong.head())

for label in labels:
    wrong = []
    df = train_concat_predict_df.loc[train_concat_predict_df['True_Label'] == label]
    df_content = df.values
    for row in df_content:
        # keep rows whose true label differs from the predicted label
        if int(row[1]) != int(row[2]):
            wrong.append(row)
    df_wrong = pd.DataFrame(wrong, columns=['rate', 'true', 'predict'])
    df_wrong.to_csv(f'{label}_train.csv')
    print(label, df_wrong.head()) | {'source': 'AI4Code', 'id': '44b1ea84dff48e'} |

48500 | """

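# Notes from trying out NGBoost

* I did not tune the models properly, so I cannot say which one performs better, but from a quick hands-on trial NGBoost was on par with LightGBM for a dataset of this size.

* Being able to compute a predictive distribution may have its uses.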

"""

!pip install ngboost

# basic libraries

import pandas as pd

import numpy as np

import numpy.random as rd

import gc

import multiprocessing as mp

import os

import sys

import pickle

from glob import glob

import math

from datetime import datetime as dt

from pathlib import Path

import scipy.stats as st

import re

import shutil

from tqdm import tqdm_notebook as tqdm

import datetime

ts_conv = np.vectorize(datetime.datetime.fromtimestamp) # Unix time in seconds (10 digits) -> date

# plotting

import matplotlib

from matplotlib import font_manager

import matplotlib.pyplot as plt

import matplotlib.cm as cm

from matplotlib import rc

from matplotlib import animation as ani

from IPython.display import Image

plt.rcParams["patch.force_edgecolor"] = True

#rc('text', usetex=True)

from IPython.display import display # Allows the use of display() for DataFrames

import seaborn as sns

sns.set(style="whitegrid", palette="muted", color_codes=True)

sns.set_style("whitegrid", {'grid.linestyle': '--'})

red = sns.xkcd_rgb["light red"]

green = sns.xkcd_rgb["medium green"]

blue = sns.xkcd_rgb["denim blue"]

# pandas formatting

pd.set_option("display.max_colwidth", 100)

pd.set_option("display.max_rows", None)

pd.set_option("display.max_columns", None)

pd.options.display.float_format = '{:,.5f}'.format

%matplotlib inline

%config InlineBackend.figure_format='retina'

# ngboost

from ngboost.ngboost import NGBoost

from ngboost.learners import default_tree_learner

from ngboost.scores import MLE

from ngboost.distns import Normal, LogNormal

# sklearn

from sklearn.datasets import load_boston

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

# lightgbm

import lightgbm as lgb

"""

## Data preparation

"""

X, y = load_boston(True)

rd.seed(71)

X_train, X_valid, y_train, y_valid = train_test_split(X, y, test_size=0.2)

"""

# LightGBM model

"""

%%time

lgb_train = lgb.Dataset(X_train, y_train)

lgb_valid = lgb.Dataset(X_valid, y_valid, reference=lgb_train)

model = lgb.train({'objective': 'regression',
                   'metric': "mse",
                   'learning_rate': 0.01,
                   'seed': 71},
                  lgb_train,
                  num_boost_round=99999,
                  valid_sets=[lgb_valid],
                  early_stopping_rounds=100,
                  verbose_eval=500)

y_pred_lgb = model.predict(data=X_valid)

"""

# NGBoost model

"""

%%time

rd.seed(71)

ngb = NGBoost(Base=default_tree_learner, Dist=Normal, #Normal, LogNormal
              Score=MLE(), natural_gradient=True, verbose=False, )

ngb.fit(X_train, y_train, X_val=X_valid, Y_val=y_valid)

y_preds = ngb.predict(X_valid)

y_dists = ngb.pred_dist(X_valid)

# test Mean Squared Error

test_MSE = mean_squared_error(y_preds, y_valid)

print('ngb Test MSE', test_MSE)

#test Negative Log Likelihood

test_NLL = -y_dists.logpdf(y_valid.flatten()).mean()

print('ngb Test NLL', test_NLL)

"""

# Draw result graph

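**Points**

* NGBoost can show the predictive distribution (a normal distribution in this case)

* Judging the plots by eye, LightGBM is closer to the ground truth in some cases and NGBoost is closer in others, so it looks roughly fifty-fifty?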
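
Because the fitted distribution here is Normal, each prediction comes with a mean and a standard deviation, so quantities such as a 95% interval or the negative log-likelihood follow directly. The following is a minimal sketch using only the standard library; `mu`, `sigma` and `y_true` are made-up numbers for illustration, not output of the model in this notebook.

```python
import math
from statistics import NormalDist

# hypothetical predicted means / standard deviations and observed targets
mu = [22.0, 30.5, 15.2]
sigma = [2.0, 3.5, 1.8]
y_true = [21.0, 35.0, 15.0]

def interval_95(m, s):
    # central 95% interval of a Normal(m, s) predictive distribution
    dist = NormalDist(m, s)
    return dist.inv_cdf(0.025), dist.inv_cdf(0.975)

def mean_nll(means, stds, targets):
    # average negative log-likelihood of the observations
    logs = [math.log(NormalDist(m, s).pdf(v)) for m, s, v in zip(means, stds, targets)]
    return -sum(logs) / len(logs)

intervals = [interval_95(m, s) for m, s in zip(mu, sigma)]
nll = mean_nll(mu, sigma, y_true)
print(intervals[0], nll)
```

A wide interval signals a prediction the model is unsure about, which a point estimate from LightGBM alone does not provide.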

"""

offset = np.ptp(y_preds)*0.1

y_range = np.linspace(min(y_valid)-offset, max(y_valid)+offset, 200).reshape((-1, 1))

dist_values = y_dists.pdf(y_range).transpose()

plt.figure(figsize=(25, 120))

for idx in tqdm(np.arange(X_valid.shape[0])):
    plt.subplot(35, 3, idx+1)
    plt.plot(y_range, dist_values[idx])
    plt.vlines(y_preds[idx], 0, max(dist_values[idx]), "r", label="ngb pred")
    plt.vlines(y_pred_lgb[idx], 0, max(dist_values[idx]), "purple", label="lgb pred")
    plt.vlines(y_valid[idx], 0, max(dist_values[idx]), "pink", label="ground truth")
    plt.legend(loc="best")
    plt.title(f"idx: {idx}")
    plt.xlim(y_range[0], y_range[-1])

plt.tight_layout()

plt.show()

plt.figure(figsize=(8,6))

plt.scatter(x=y_preds, y=y_pred_lgb, s=20)

plt.plot([8,50], [8,50], color="gray", ls="--")

plt.xlabel("NGBoost")

plt.ylabel("LightGBM")

plt.title("NGBoost vs LightGBM")

plt.show()

plt.figure(figsize=(8,6))

plt.scatter(y=y_preds, x=y_valid, s=20)

plt.plot([8,50], [8,50], color="gray", ls="--")

plt.ylabel("NGBoost")

plt.xlabel("Ground truth")

plt.title("NGBoost vs Ground truth")

plt.show()

plt.figure(figsize=(8,6))

plt.scatter(y=y_pred_lgb, x=y_valid, s=20)

plt.plot([8,50], [8,50], color="gray", ls="--")

plt.ylabel("LightGBM")

plt.xlabel("Ground truth")

plt.title("LightGBM vs Ground truth")

plt.show() | {'source': 'AI4Code', 'id': '5947bd9ad5be6f'} |

106369 | """

## Attention

I'm not good at English. If you find a mistake, let me know, please.

## 0. Abstract

Interestingly, the global deformation caused by the Moon's tidal stress seems to act as a trigger for disastrous earthquakes (M>=5.5).

Several research papers have analyzed past earthquakes statistically, but none of them clearly presents the relationship with Lunar Age or Lunar Phase Angle. One possible reason is that the tidal phase angle on the Earth depends on the lunar age. However, most people cannot calculate the tidal phase angle.

The objective of this report is to let many people intuitively imagine the times when the potential for an earthquake is high, by visualizing **the position of the Moon and the Sun when earthquakes occurred**.

## 1. Introduction

Schuster (1897) [1] was the first article to discuss the influence of the tide raised by the Moon.

Some studies, such as Tanaka (2012) [2] and Ide et al. (2016) [3], examined the relationship between the earth tides and earthquakes, targeting specific seismic sources (groups).

Tsuruoka and Ohtake (1995) [4] discussed the relationship with the global tides for earthquakes that occurred around the world.

However, calculating and understanding tidal stress is too difficult for ordinary people outside academia.

Therefore, I show some figures of the relationship between earthquakes and lunar age to help readers imagine the timing of earthquake occurrence.

## 2. Data

## 2.1 Data Source

I selected "[Significant Earthquakes, 1965-2016](https://www.kaggle.com/usgs/earthquake-database)" as the earthquake catalog to visualize. It is published on the Kaggle platform, probably by the USGS (United States Geological Survey).

Some earthquake catalogs (or lists of past events) are published on the official sites of public organizations, such as USGS, Meteorological Institute of Japan, Disaster-Reduction Research of Japan. These catalogs are not easy to use because they are not simple lists.

Most earthquakes are caused by plate tectonics at each location, so regional characteristics exist. This article targets wide-area seismic activity over the whole Earth, and regional characteristics are not considered at all. Because this makes it hard to discuss the actual mechanism of earthquake occurrence, it may feel strange to researchers.

## 2.2 Data Overview

Let's look at the first few rows after reading the catalog.

"""

import math

import datetime

import os, sys

import numpy as np

import pandas as pd

DATA_DIR = "/kaggle/input/earthquake-database" + os.sep

# read data file

earthquake = pd.read_csv(
    DATA_DIR+"database.csv",
    sep=",",
    parse_dates={'datetime':['Date', 'Time']},
    encoding="utf-8",
    on_bad_lines="skip",  # skip malformed rows (pandas >= 1.3; older versions use error_bad_lines=False)
)

# handle irregular rows: keep only the part of the timestamp before the first space
for idx in [3378,7512,20650]:
    earthquake.at[idx, "datetime"] = earthquake.at[idx, "datetime"].split(" ")[0]

earthquake["datetime"] = pd.to_datetime(earthquake["datetime"], utc=True)

earthquake.set_index(["datetime"], inplace=True)

earthquake.head()

"""

## 2.3 World Map

Let's check the geographic distribution of the earthquakes:

"""

import matplotlib.pyplot as plt

plt.style.use('dark_background')

plt.grid(False)

from mpl_toolkits.basemap import Basemap

%matplotlib inline

ti = "Map of earthquake epicenters during 1965-2016"

fig, ax = plt.subplots(figsize=(18, 18), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

m = Basemap(projection='robin', lat_0=0, lon_0=-170, resolution='c')

m.drawcoastlines()

#m.drawcountries()

m.fillcontinents(color='#606060', zorder = 1)

#m.bluemarble()

#m.drawmapboundary(fill_color='lightblue')

for i in range(5,10,1):
    #print(i)
    # earthquakes whose magnitude falls in [i, i+1)
    tmp = earthquake[(earthquake["Magnitude"]>=i)&(earthquake["Magnitude"]<i+1)&(earthquake["Type"]=="Earthquake")]
    x, y = m(list(tmp.Longitude), list(tmp.Latitude))
    points = m.plot(x, y, "o", label=f"Mag.: {i}.x", markersize=0.02*float(i)**3.2, alpha=0.55+0.1*float(i-5))

plt.title(f"{ti}", fontsize=22)

plt.legend(bbox_to_anchor=(1.01, 1), loc='upper left', borderaxespad=0, fontsize=18)

ax_pos = ax.get_position()

fig.text(ax_pos.x1-0.1, ax_pos.y0, "created by boomin", fontsize=16)

plt.show()

"""

We can see **the Ring of Fire** around the Pacific Ocean.

Japan is one of the countries where earthquakes are most concentrated.

## 2.4 Distribution of the Depth

Let's check the distribution of the depth of seismic sources.

"""

ti = "Distribution of Earthquake's Depth"

fig, ax = plt.subplots(figsize=(16, 9), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

for i in range(5,8,1):
    tmp = earthquake[(earthquake["Magnitude"]>=i)&(earthquake["Magnitude"]<i+1)&(earthquake["Type"]=="Earthquake")]
    plt.hist(tmp["Depth"], bins=60, density=True, histtype='step', linewidth=2.5, label=f"Mag.: {i}.x")
# all events with magnitude 8 or larger are drawn as one group
tmp = earthquake[(earthquake["Magnitude"]>=8)]
plt.hist(tmp["Depth"], bins=60, density=True, histtype='step', linewidth=1.5, label=f"Mag.: >=8")
plt.legend(bbox_to_anchor=(1.02, 1), loc='upper left', borderaxespad=0, fontsize=18)
plt.xlabel("Depth, km")
plt.ylabel("Count of Earthquake \n (Normalized to total area = 1)")

plt.title(f"{ti}")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.show()

"""

We notice the following facts:

* earthquakes are concentrated at depths shallower than 100 km

* there is also a concentration between 550 km and 650 km

Although it cannot be confirmed from this figure, the epicenters of earthquakes that occurred below 550 km depth may be located in the interior of continents, not at plate boundaries.

Considering the occurrence mechanism, the depth of seismic sources affected by tidal stress or the ocean tide is approximately 70-80 km.

From now on, **we use only the data shallower than 80 km for visualization**.

"""

earthquake = earthquake[earthquake["Depth"]<80]

earthquake = earthquake[earthquake["Type"]=="Earthquake"]

"""

## 2.5 Distribution of Latitude

This section shows the distribution of epicenter latitude, because tidal stress depends on latitude.

"""

plt.clf()

ti = "Distribution of Earthquake's Latitude with Magnitude"

fig, ax = plt.subplots(figsize=(16, 9), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

#

for i in range(5,8,1):
    tmp = earthquake[(earthquake["Magnitude"]>=i)&(earthquake["Magnitude"]<i+1)&(earthquake["Type"]=="Earthquake")]
    plt.hist(tmp["Latitude"], bins=60, density=True, histtype='step', linewidth=1.5, label=f"Mag.: {i}.x")
# all events with magnitude 8 or larger are drawn as one group
tmp = earthquake[(earthquake["Magnitude"]>=8)]
plt.hist(tmp["Latitude"], bins=60, density=True, histtype='step', linewidth=1.5, label=f"Mag.: >=8")
plt.legend(bbox_to_anchor=(1.02, 1), loc='upper left', borderaxespad=0, fontsize=18)
plt.xlabel("Latitude, deg")
plt.ylabel("Count of Earthquake \n (Normalized to total area = 1)")

plt.title(f"{ti}")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.show()

"""

There are some peaks, such as at -10 deg and 50 deg; however, the distribution of latitude depends on the location of plate boundaries.

This figure does not show a correlation between the tidal stress from the Moon and the occurrence of earthquakes.

This is described later in [section 4.2](#4.2-Relationship-between-the-epicenter-and-Phase-Angle).

"""

"""

## 3. Orbit calculation of the Moon and the Sun from the Earth

The tidal stress near the surface of the Earth becomes large in the following cases:

* when the Moon comes close to the Earth

  * tidal stress is inversely proportional to the cube of the distance

  * the influence of the Sun is approximately 45% of that of the Moon, because distance has such a strong effect

* when the Moon is new or full

  * the gravitational pulls of the Moon and the Sun act along the same line

* when the tide is high or low

  * the change in sea level acts on the oceanic plate as a load

Therefore, I calculate the following values at the time of each earthquake:

1. distance between the Moon and the Earth

2. phase angle between the Moon and the Sun at the epicenter

3. altitude and azimuth of the Moon at each epicenter
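
The figure of roughly 45% quoted above can be checked from the inverse-cube dependence, since the tidal effect of a body scales as its mass divided by the cube of its distance. This is a rough sketch; the masses and mean distances are approximate textbook values assumed for illustration and are not taken from the dataset.

```python
# approximate textbook constants (assumed values)
M_MOON = 7.342e22   # mass of the Moon, kg
M_SUN = 1.989e30    # mass of the Sun, kg
D_MOON = 3.844e8    # mean Earth-Moon distance, m
D_SUN = 1.496e11    # mean Earth-Sun distance, m

def tidal_strength(mass, distance):
    # relative tide-generating strength: proportional to mass / distance^3
    return mass / distance**3

ratio = tidal_strength(M_SUN, D_SUN) / tidal_strength(M_MOON, D_MOON)
print(f"Sun/Moon tidal ratio: {ratio:.2f}")
```

The Sun is far more massive than the Moon, but the cube of the distance outweighs that, which is why the Moon dominates.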

### 3.1 Library

[Astropy](https://github.com/astropy/astropy) is used to calculate the positions of Solar System objects.

### 3.2 Ephemeris for astronomical position calculation

Ephemeris data is necessary to calculate the objects' positions. DE432s, [the latest data published by JPL](https://ssd.jpl.nasa.gov/?planet_eph_export), is used for our calculation because it is stored in a smaller file (~10 MB) than DE432. If you need more precise positions, or a time span beyond 1950 to 2050, [you should select another ephemeris such as DE430](https://docs.astropy.org/en/stable/coordinates/solarsystem.html).

To retrieve the JPL ephemeris of Solar System objects, we need to install the following package:

"""

# install jplephem, which Astropy needs to read the ephemeris files provided by NASA JPL

!pip install jplephem

"""

### 3.3 Obtaining of Phase Angle between the Sun and the Moon

We need to determine lunar age in order to obtain the day of Full Moon and New Moon because tidal force become the storongest at Full Moon or New Moon. When the Moon is new, the direction from the Earth to the Moon coincides with to the Sun, and each gravity force vectors faces in the same direction. When the Moon is full, both the directions and gravity force vectors are in an opposite way.

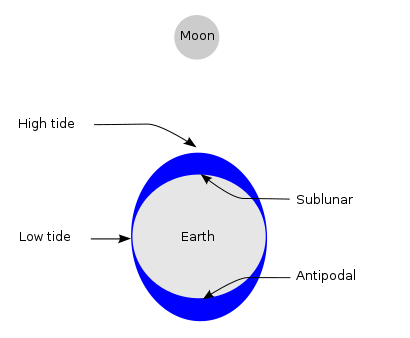

Thee angle between the Moon and the Sun is defined as [Phase Angle](https://en.wikipedia.org/wiki/Phase_angle_(astronomy)) as following figure.
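
To make the geometry concrete, the angle between two directions in the sky can be computed from their altitude and azimuth with the spherical law of cosines, and any angle can be folded into the 0 (New Moon) to 180 (Full Moon) range used in this report. This is a pure-Python illustration of the idea, not the Astropy call used in this notebook; the function names are hypothetical.

```python
import math

def separation_deg(alt1, az1, alt2, az2):
    # angular separation between two directions given as altitude/azimuth in degrees
    a1, z1, a2, z2 = map(math.radians, (alt1, az1, alt2, az2))
    cos_sep = (math.sin(a1) * math.sin(a2)
               + math.cos(a1) * math.cos(a2) * math.cos(z1 - z2))
    # clamp against rounding errors before taking the arc cosine
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_sep))))

def fold_to_half_circle(angle_deg):
    # map any angle to the 0 (New Moon) .. 180 (Full Moon) range
    angle_deg %= 360.0
    return angle_deg if angle_deg <= 180.0 else 360.0 - angle_deg

print(separation_deg(10, 90, 10, 90))   # same direction
print(separation_deg(0, 90, 0, 270))    # opposite directions on the horizon
print(fold_to_half_circle(200))
```

The folding step expresses that a waxing and a waning Moon at the same distance from New Moon are treated alike.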

"""

from numpy import linalg as LA

from astropy.coordinates import EarthLocation, get_body, AltAz, Longitude, Latitude, Angle

from astropy.time import Time, TimezoneInfo

from astropy import units as u

from astropy.coordinates import solar_system_ephemeris

solar_system_ephemeris.set('de432s')

# epicenter of each earthquake

pos = EarthLocation(Longitude(earthquake["Longitude"], unit="deg"), Latitude(earthquake["Latitude"], unit="deg"))

# occurrence time of each earthquake

dts = Time(earthquake.index, format="datetime64")

# position of the Moon and transforming from equatorial coordinate system to horizontal coordinate system

Mpos = get_body("moon", dts).transform_to(AltAz(location=pos))

# position of the Sun and transforming from equatorial coordinate system to horizontal coordinate system

Spos = get_body("sun", dts).transform_to(AltAz(location=pos))

# phase angle between the Sun and the Moon (rad)

SM_angle = Mpos.position_angle(Spos)

# phase angle from 0 (New Moon) to 180 (Full Moon) in degree

earthquake["p_angle"] = [ deg if deg<180 else 360-deg for deg in SM_angle.degree ]

earthquake["moon_dist"] = Mpos.distance.value/3.8e8

earthquake["sun_dist"] = Spos.distance.value/1.5e11

earthquake["moon_az"] = Mpos.az.degree

earthquake["moon_alt"] = Mpos.alt.degree

earthquake.head(5)

"""

The tidal force is maximized when the phase angle is 0 or 180 deg. However, the response of the Earth's surface layer to the Moon and the Sun is not instantaneous, because of the movement of the ocean and the Earth's behavior as an elastic body. This means that the tidal effect lags the change in tidal force by about 0 - 8 hours.

"""

"""

## 4. Data Visualization

### 4.1 Time Series of Earthquakes with its Magnitude

"""

plt.clf()

ti = "Earthquakes with Phase Angle"

fig, ax = plt.subplots(figsize=(18, 12), dpi=96)

plt.rcParams["font.size"] = 24

fig.patch.set_facecolor('black')

for i in range(5,10,1):
    tmp = earthquake[(earthquake["Magnitude"]>=i)&(earthquake["Magnitude"]<i+1)]
    plt.scatter(tmp.index, tmp["p_angle"], label=f"Mag.: {i}.x", s=0.02*float(i)**4.5, alpha=0.2+0.2*float(i-5))
plt.xlabel("Year of occurrence")

plt.ylabel("Phase Angle (0:New Moon, 180:Full Moon), deg")

plt.legend(bbox_to_anchor=(1.02, 1), loc='upper left', borderaxespad=0, fontsize=18)

plt.title(f"{ti}")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.grid(False)

plt.show()

"""

The data points appear more scattered around a phase angle of 90 degrees. Let's check the distribution by drawing a histogram.

"""

plt.clf()

ti = "Phase Angle Histogram"

fig, ax = plt.subplots(figsize=(18, 12), dpi=96)

plt.rcParams["font.size"] = 24

fig.patch.set_facecolor('black')

for i in range(5,8,1):
    tmp = earthquake[(earthquake["Magnitude"]>=i)&(earthquake["Magnitude"]<i+1)]
    plt.hist(tmp["p_angle"], bins=60, density=True, histtype='step', linewidth=2.0, label=f"Mag.: {i}.x")
i=8
# all events with magnitude 8 or larger are drawn as one group
tmp = earthquake[(earthquake["Magnitude"]>=8)]
plt.hist(tmp["p_angle"], bins=60, density=True, histtype='step', linewidth=1.5, label=f"Mag.: >={i}")
plt.legend(bbox_to_anchor=(1.02, 1), loc='upper left', borderaxespad=0, fontsize=18)
plt.xlabel("Phase Angle (0:New Moon, 180:Full Moon), deg")
plt.ylabel("Count of Earthquake after 1965 (Normalized)")

plt.title(f"{ti}")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.grid(False)

plt.show()

"""

This figure indicates that large earthquakes (especially M>8) tend to occur more often around the New Moon and the Full Moon.

### 4.2 Relationship between the epicenter and Phase Angle

A simple distribution figure has already been shown in [section 2.3](#2.3-World-Map).

Here we visualize it in a little more detail.

"""

plt.clf()

ti = "Map of earthquake epicenters with lunar phase angle"

fig, ax = plt.subplots(figsize=(18, 10), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

import matplotlib.cm as cm

from matplotlib.colors import Normalize

for i in range(5,10,1):
    tmp = earthquake[(earthquake["Magnitude"]>=i)&(earthquake["Magnitude"]<i+1)]
    # color encodes the phase angle, marker size and opacity encode the magnitude class
    m=plt.scatter(
        tmp.Longitude, tmp.Latitude, c=tmp.p_angle, s=0.02*float(i)**4.5,
        linewidths=0.4, alpha=0.4+0.12*float(i-5), cmap=cm.jet, label=f"Mag.: {i}.x",
        norm=Normalize(vmin=0, vmax=180)
    )

plt.title(f"{ti}", fontsize=22)

plt.legend(bbox_to_anchor=(1.01, 1), loc='upper left', borderaxespad=0, fontsize=18)

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

m.set_array(tmp.p_angle)

pp = plt.colorbar(m, cax=fig.add_axes([0.92, 0.17, 0.02, 0.48]), ticks=[0,45,90,135,180] )

pp.set_label("Phase Angle, deg", fontsize=18)

m.set_clim(0, 180)  # set the color limits on the mappable; Colorbar.set_clim is gone in recent matplotlib

plt.show()

"""

This distribution appears to be biased.

Earthquakes near the equator tend to occur when the phase angle is about 0 deg or 180 deg, that is, when the Moon is new or full.

"""

plt.clf()

ti = "Distribution of Earthquake's Latitude with Phase Angle"

fig, ax = plt.subplots(figsize=(16, 10), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

plt.hist(earthquake["Latitude"], bins=60, density=True, histtype='step', linewidth=6, label=f"Average", color="w")

for deg in range(0,180,10):
    # earthquakes whose phase angle falls in [deg, deg+10)
    tmp = earthquake[(earthquake["p_angle"]>=deg)&(earthquake["p_angle"]<deg+10)]
    plt.hist(
        tmp["Latitude"], bins=60, density=True, histtype='step', linewidth=1.5,
        label=f"{deg}-{deg+10}", color=cm.jet(deg/180), alpha=0.8
    )
plt.legend(bbox_to_anchor=(1.02, 0.97), loc='upper left', borderaxespad=0, fontsize=16)
plt.xlabel("Latitude, deg")
plt.ylabel("Count of Earthquake \n (Normalized to total area = 1)")

plt.title(f"{ti}")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.03, ax_pos.y1-0.01, "phase angle", fontsize=16)

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.show()

"""

At the least, this figure indicates the following:

* The phase angle between the Sun and the Moon affects each latitude differently

* Some seismic sources are easily influenced by the phase angle

### 4.3 Phase Angle and the Distance

Relationship between Magnitude and Distances of the Sun and the Moon.

"""

ti="Relationship between Magnitude and Distances of the Sun and the Moon"

fig, ax = plt.subplots(figsize=(18, 12), dpi=96)

plt.rcParams["font.size"] = 24

fig.patch.set_facecolor('black')

for i in range(5,10,1):
    tmp = earthquake[(earthquake["Magnitude"]>=i)&(earthquake["Magnitude"]<i+1)]
    plt.scatter(tmp["moon_dist"], tmp["sun_dist"], label=f"Mag.: {i}.x", s=0.02*float(i)**4.4, alpha=0.2+0.2*float(i-5))
plt.xlabel("distance between the Moon and the Earth (Normalized)")
plt.ylabel("distance between the Sun and the Earth (Normalized)")

plt.legend(bbox_to_anchor=(1.02, 1), loc='upper left', borderaxespad=0, fontsize=18)

plt.title(f"{ti}")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.grid(False)

plt.show()

"""

It seems that the data points are concentrated around 0.97 and 1.05 in distance.

Next, the dependence on distance is checked separately for Phase Angle and for Magnitude.

"""

ti = 'Distribution of Distance to the Moon with Phase Angle'

fig, ax = plt.subplots(figsize=(18, 12), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

plt.hist(earthquake["moon_dist"], bins=60, density=True, histtype='step', linewidth=6, label=f"Average", color="w")

for deg in range(0,180,20):
    # earthquakes whose phase angle falls in [deg, deg+20)
    tmp = earthquake[(earthquake["p_angle"]>=deg)&(earthquake["p_angle"]<deg+20)]
    plt.hist(
        tmp["moon_dist"], bins=60, density=True, histtype='step', linewidth=1.5,
        label=f"{deg}-{deg+20}", color=cm.jet(deg/180), alpha=0.8
    )
plt.legend(bbox_to_anchor=(1.02, 0.97), loc='upper left', borderaxespad=0, fontsize=18)
plt.xlabel("Distance to the Moon (Normalized)")
plt.ylabel("Count of Earthquake \n (Normalized to total area = 1)")

plt.title(f"{ti}")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.03, ax_pos.y1-0.01, "phase angle", fontsize=16)

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.grid(False)

plt.show()

ti = 'Distribution of Distance to the Moon with Magnitude'

fig, ax = plt.subplots(figsize=(18, 12), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

plt.hist(earthquake["moon_dist"], bins=60, density=True, histtype='step', linewidth=6.0, label=f"Average")

for i in range(5,8,1):
    tmp = earthquake[(earthquake["Magnitude"]>=i)&(earthquake["Magnitude"]<i+1)]
    plt.hist(tmp["moon_dist"], bins=60, density=True, histtype='step', linewidth=1.5, label=f"Mag.: {i}.x")
i=8
# all events with magnitude 8 or larger are drawn as one group
tmp = earthquake[(earthquake["Magnitude"]>=i)]
plt.hist(tmp["moon_dist"], bins=60, density=True, histtype='step', linewidth=1.5, label=f"Mag.: >={i}")
plt.legend(bbox_to_anchor=(1.02, 1), loc='upper left', borderaxespad=0, fontsize=18)
plt.xlabel("Distance to the Moon (Normalized)")
plt.ylabel("Count of Earthquake \n (Normalized to total area = 1)")

plt.title(f"{ti}")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.grid(False)

plt.show()

"""

**However, this may be misleading**, because this distribution is almost the same as the distribution of the Earth-Moon distance along the lunar orbit.

The figures above show that there is no dependence on the distance to the Moon.

Lunar tidal stress acts through the amplitude of the tidal stress variation, not through the magnitude of the force (Tsuruoka and Ohtake (1995) [4], Cochran et al. (2004) [5]).

It is safe to say that these facts are consistent.

"""

"""

### 4.4 Distribution of Moon's azimuth

The lunar gravity differential field at the Earth's surface is known as the tide-generating force. This is the primary mechanism that drives tidal action and explains two equipotential tidal bulges, accounting for two daily high waters.

(Reference: https://en.wikipedia.org/wiki/Tide)

If earthquakes are strongly affected by tidal stress from the Moon, many earthquakes would be expected to occur when the Moon culminates in the south, or about 12 hours later (on the opposite side).

However, the time taken for the wave to travel around the ocean also means that there is a delay between the phases of the Moon and their effect on the tide.

The distribution of the azimuth of the Moon at the time of each earthquake is shown below.

"""

ti = "Distribution of azimuth of the Moon"

fig, ax = plt.subplots(figsize=(18, 12), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

for i in range(5,8,1):
    tmp = earthquake[(earthquake["Magnitude"]>=i)&(earthquake["Magnitude"]<i+1)]
    plt.hist(tmp["moon_az"], bins=60, density=True, histtype='step', linewidth=1.5, label=f"Mag.: {i}.x")
i=8
# all events with magnitude 8 or larger are drawn as one group
tmp = earthquake[(earthquake["Magnitude"]>=i)]
plt.hist(tmp["moon_az"], bins=60, density=True, histtype='step', linewidth=1.5, label=f"Mag.: >={i}")
plt.legend(bbox_to_anchor=(1.02, 1), loc='upper left', borderaxespad=0, fontsize=18)
plt.xlabel("azimuth of the Moon (South:180)")
plt.ylabel("Count of Earthquake \n (Normalized to total area = 1)")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.title(f"{ti}")

plt.grid(False)

plt.show()

"""

There are two peaks, at 90 deg (East: moonrise) and 270 deg (West: moonset), and there does not seem to be any difference between magnitudes.

Tidal stress itself has a local maximum at the culmination of the Moon (azimuth: 180 deg), but its rate of change per unit time is at a local minimum at that moment.

The rate of change is shifted by 90 deg (about 6 hours; this is because the derivative of sin is cos).

In addition, depending on the location, sea level changes due to tides are delayed by approximately 0-8 hours.

These phenomena are consistent with the figure above.
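
The 90 deg shift between a sinusoid and its rate of change can be checked numerically. This sketch assumes the idealized single-sinusoid model described in the text, with the angle measured from culmination; it is an illustration of the argument, not a tidal stress calculation.

```python
import math

def stress(angle_deg):
    # idealized tidal stress, maximal at culmination (angle 0)
    return math.cos(math.radians(angle_deg))

def stress_rate(angle_deg, step=1e-3):
    # central finite difference of the stress with respect to the angle
    return (stress(angle_deg + step) - stress(angle_deg - step)) / (2 * step)

angles = range(0, 360)
peak_stress = max(angles, key=stress)
peak_rate = max(angles, key=lambda a: abs(stress_rate(a)))
print(peak_stress, peak_rate)
```

The stress peaks at the culmination itself, while the magnitude of its rate of change peaks a quarter of a cycle away, which corresponds to the moonrise and moonset directions.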

However, interpretation is difficult because the azimuth of the Moon depends on latitude.

Therefore, the distribution of azimuth for several phase angles is shown as follows:

"""

ti = "Distribution of azimuth of the Moon with Phase Angle"

fig, ax = plt.subplots(figsize=(18, 12), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

plt.hist(earthquake["moon_az"], bins=60, density=True, histtype='step', linewidth=6, label=f"Average", color="w")

w=10  # width of each phase angle bin, deg
for deg in range(0,180,w):
    tmp = earthquake[(earthquake["p_angle"]>=deg)&(earthquake["p_angle"]<deg+w)]
    plt.hist(
        tmp["moon_az"], bins=60, density=True, histtype='step', linewidth=1.5,
        label=f"{deg}-{deg+w}", color=cm.jet(deg/180), alpha=0.8
    )
plt.legend(bbox_to_anchor=(1.02, 1), loc='upper left', borderaxespad=0, fontsize=18)
plt.xlabel("azimuth of the Moon (South:180)")
plt.ylabel("Count of Earthquake \n (Normalized to total area = 1)")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.title(f"{ti}")

plt.grid(False)

plt.show()

"""

When the azimuth is 90 deg or 270 deg and the Moon is full or new, earthquakes occur more frequently.

This means that you should pay attention at moonrise and moonset.

### 4.5 Distribution of Moon's Altitude

Let's check the relationship between lunar altitude and Phase Angle.

"""

ti = "Distribution of Moon's Altitude with Phase Angle"

fig, ax = plt.subplots(figsize=(18, 12), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

plt.hist(earthquake["moon_alt"], bins=60, density=True, histtype='step', linewidth=6, label=f"Average", color="w")

w=10  # width of each phase angle bin, deg
for deg in range(0,180,w):
    tmp = earthquake[(earthquake["p_angle"]>=deg)&(earthquake["p_angle"]<deg+w)]
    plt.hist(
        tmp["moon_alt"], bins=60, density=True, histtype='step', linewidth=1.5,
        label=f"{deg}-{deg+w}", color=cm.jet(deg/180), alpha=0.8
    )
plt.legend(bbox_to_anchor=(1.02, 1), loc='upper left', borderaxespad=0, fontsize=18)
plt.xlabel("Altitude of the Moon")
plt.ylabel("Count of Earthquake after 1965 \n (Normalized to total area = 1)")

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

plt.title(f"{ti}")

plt.grid(False)

plt.show()

"""

The culmination altitude (the highest altitude) of the Moon depends on the observer's latitude.

This means that, on the vertical axis of this figure, the relative values are what matter.

It can be confirmed that, at altitudes around 0 degrees, the distributions for phase angles close to 0 degrees or 180 degrees are larger than the distributions for phase angles near 90 degrees.

This is consistent with the discussion of the azimuth distribution in section 4.4.

Note that fault orientation is one of the key points to consider, so under ordinary circumstances each region should also be examined individually.

### 4.6 Seasonal Trend

The earthquake frequency for each month is shown below.

Because many earthquakes in March 2011 were caused by the Tohoku‐Oki earthquake (Mw 9.1) in Japan, I divide the dataset into 2011 and all years except 2011.

"""

import calendar

df = earthquake[earthquake["Type"]=="Earthquake"]

df2 = pd.pivot_table(df[df.index.year!=2011], index=df[df.index.year!=2011].index.month, aggfunc="count")["ID"]

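# divide each month's count by its own number of days (non-leap year) and by the number of years covered

days_per_month = np.array([calendar.monthrange(2019, month)[1] for month in df2.index])

df2 = df2/days_per_month/(max(df.index.year)-min(df.index.year)+1)

df = df[df.index.year==2011]

df3 = pd.pivot_table(df, index=df.index.month, aggfunc="count")["ID"]

df3 = df3/np.array([calendar.monthrange(2011, month)[1] for month in df3.index])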

df4 = pd.concat([df2,df3], axis=1)

df4.columns=["except 2011","2011"]

df4.index=[ calendar.month_abbr[i] for i in range(1,13,1)]

left = np.arange(12)

labels = [ calendar.month_abbr[i] for i in range(1,13,1)]

width = 0.3

ti = "Seasonal Trend"

fig, ax = plt.subplots(figsize=(16, 8), dpi=96)

fig.patch.set_facecolor('black')

plt.rcParams["font.size"] = 24

for i,col in enumerate(df4.columns):
    # draw the two series side by side for each month
    plt.bar(left+width*i, df4[col], width=0.3, label=col)

plt.xticks(left + width/2, labels)

plt.ylabel("Count of Earthquake per day")

plt.legend(bbox_to_anchor=(1.02, 1), loc='upper left', borderaxespad=0, fontsize=18)

ax_pos = ax.get_position()

fig.text(ax_pos.x1+0.01, ax_pos.y0, "created by boomin", fontsize=16)

fig.text(ax_pos.x1-0.25, ax_pos.y1-0.04, f"Mean of except 2011: {df4.mean().iloc[0]:1.3f}", fontsize=18)

fig.text(ax_pos.x1-0.25, ax_pos.y1-0.08, f"Std. of except 2011 : {df4.std().iloc[0]:1.3f}", fontsize=18)

plt.title(f"{ti}")

plt.grid(False)

plt.show()

#

# append the mean and standard deviation of each series to the monthly table
df4 = pd.concat([
    df4.T,
    pd.DataFrame(df4.mean(),columns=["MEAN"]),
    pd.DataFrame(df4.std(),columns=["STD"])
], axis=1)

print(df4.T)

"""

The mean and standard deviation of the daily number of earthquakes in the dataset excluding 2011 are calculated as 1.164 and 0.040, respectively.

It seems reasonable to conclude that there is no seasonal variation, since no month falls outside the 2$\sigma$ range.

It is also important to remember that some articles point out regional characteristics (Heki, 2003) [6].

This indicates that a specific seismic source or fault may have strong seasonal variation.
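
The 2$\sigma$ criterion used above can be written as a small check. This is a sketch with made-up monthly rates for illustration, not the values computed in this notebook; the function name is hypothetical, and the sample standard deviation is used, as pandas does by default.

```python
from statistics import mean, stdev

def months_outside_2sigma(rates):
    # indices of months whose rate lies outside mean +/- 2 standard deviations
    m, s = mean(rates), stdev(rates)
    return [i for i, r in enumerate(rates) if abs(r - m) > 2 * s]

# hypothetical daily rates for Jan..Dec (illustrative values only)
rates = [1.16, 1.12, 1.20, 1.15, 1.18, 1.13, 1.17, 1.21, 1.14, 1.16, 1.19, 1.11]
print(months_outside_2sigma(rates))
```

An empty list means that no month deviates by more than two standard deviations, which is the condition the text uses to argue against a seasonal trend.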

"""

"""

## 5. Summary

I investigated and visualized the relationship between earthquakes (magnitude >5.5) and the position of both the Moon and the Sun. The results are as follows:

1. The Moon strongly affects earthquakes on the Earth, just as moonquakes are influenced by the Earth's tidal stress.

2. Earthquake occurrence increases or decreases according to the phase angle of the Moon and the Sun (lunar age), rather than the distance to the Moon or the Sun.

3. Earthquakes that occur near the equator are more strongly related to the phase angle than those in polar regions.

4. An annual cycle (seasonal variation) could not be confirmed.

In addition, the following are indicated:

5. Earthquakes, especially those with a magnitude higher than 8.0, tend to occur on dates near the full moon or new moon.

6. Earthquakes tend to occur around moonrise or moonset, especially at full moon or new moon.

This article is limited to visualizing and describing these trends.

"""

"""

## 6. Future Work

I would like to try a targeted visualization of selected regions.

## References

\[1\] A. Schuster, *On lunar and solar periodicities of earthquakes*, Proc. R. Soc. Lond., Vol. 61, pp. 455–465, 1897.

\[2\] [S. Tanaka, *Tidal triggering of earthquakes prior to the 2011 Tohoku‐Oki earthquake (Mw 9.1)*, Geophys. Res. Lett., 39, 2012](https://agupubs.onlinelibrary.wiley.com/doi/pdf/10.1029/2012GL051179)

\[3\] [S. Ide, S. Yabe & Y. Tanaka, *Earthquake potential revealed by tidal influence on earthquake size–frequency statistics*, Nature Geoscience, Vol. 9, pp. 834–837, 2016](https://www.nature.com/articles/ngeo2796)

\[4\] [H. Tsuruoka, M. Ohtake, H. Sato, *Statistical test of the tidal triggering of earthquakes: contribution of the ocean tide loading effect*, Geophysical Journal International, Vol. 122, Issue 1, pp. 183–194, 1995](https://academic.oup.com/gji/article/122/1/183/577065)

\[5\] [E. S. Cochran, J. E. Vidale, S. Tanaka, *Earth Tides Can Trigger Shallow Thrust Fault Earthquakes*, Science, Vol. 306, Issue 5699, pp. 1164–1166, 2004](https://science.sciencemag.org/content/306/5699/1164)

\[6\] [K. Heki, *Snow load and seasonal variation of earthquake occurrence in Japan*, Earth and Planetary Science Letters, Vol. 207, Issues 1–4, pp. 159–164, 2003](https://www.sci.hokudai.ac.jp/grp/geodesy/top/research/files/heki/year03/Heki_EPSL2003.pdf)

""" | {'source': 'AI4Code', 'id': 'c367d886e07c8d'} |

124491 | """

<div align='center'><font size="5" color='#353B47'>A Notebook dedicated to Stacking/Ensemble methods</font></div>

<div align='center'><font size="4" color="#353B47">Unity is strength</font></div>

<br>

<hr>

"""

"""

In this notebook, I'm going to cover various prediction averaging/blending techniques:

1. Simple Averaging: Most participants just use a simple mean of the predictions generated by different models.

2. Rank Averaging: Use the "rank" of an input image instead of its prediction value. See the public notebook "Improve blending using Rankdata".

3. Weighted Averaging: Specify weights, say 0.5 each in the case of two models: `WeightedAverage = wt1 x Pred1 + wt2 x Pred2 + … + wtn x Predn`, where n is the number of models and the weights sum to 1 (`wt1 + wt2 + … + wtn = 1`).

4. Stretch Averaging: Stretch each model's predictions using their min and max values before averaging: `Pred = (Pred - min(Pred)) / (max(Pred) - min(Pred))`.

5. Power Averaging: Choose a power p = 2, 4, 8, 16: `PowerAverage(p) = (Pred1^p + Pred2^p + … + Predn^p) / n`. Note: use Power Averaging only when all the models are highly correlated, otherwise your score may become worse.

6. Power Averaging with weights: `PowerAverageWithWeights(p) = wt1 x Pred1^p + wt2 x Pred2^p + … + wtn x Predn^p`.

"""

"""

# How to use

* Create a dataset containing a folder with the models to be stacked

* Add data in this notebook

* Use the functions

"""

"""

# Import libraries

"""

import numpy as np

import pandas as pd

from scipy.stats import rankdata

import os

import re

"""

# Stacking

"""

def Stacking(input_folder,
             best_base,
             output_path,
             column_names,
             cutoff_lo,
             cutoff_hi):
    '''
    To be tried on:
        - the same model run several times when it is not deterministic (with randomness)
        - the same model trained on several folds (will need to define a meta model)
    '''
    sub_base = pd.read_csv(best_base)
    all_files = os.listdir(input_folder)
    nb_files = len(all_files)

    # Test compliancy of arguments
    assert type(input_folder) == str, "Wrong type"
    assert type(best_base) == str, "Wrong type"
    assert type(output_path) == str, "Wrong type"
    assert type(cutoff_lo) in [float, int], "Wrong type"
    assert type(cutoff_hi) in [float, int], "Wrong type"
    assert (cutoff_lo >= 0) & (cutoff_lo <= 1) & (cutoff_hi >= 0) & (cutoff_hi <= 1), "cutoff_lo and cutoff_hi must be between 0 and 1"
    assert len(column_names) == 2, "Only two columns must be in column_names"
    assert type(column_names[0]) == str, "Wrong type"
    assert type(column_names[1]) == str, "Wrong type"

    # Read and concatenate submissions
    concat_sub = pd.DataFrame()
    concat_sub[column_names[0]] = sub_base[column_names[0]]
    for index, f in enumerate(all_files):
        concat_sub[column_names[1]+str(index)] = pd.read_csv(input_folder + f)[column_names[1]]
    print(" ***** 1/4 Read and concatenate submissions SUCCESSFUL *****")

    # Get the data fields ready for stacking
    concat_sub['target_max'] = concat_sub.iloc[:, 1:].max(axis=1)
    concat_sub['target_min'] = concat_sub.iloc[:, 1:].min(axis=1)
    concat_sub['target_mean'] = concat_sub.iloc[:, 1:].mean(axis=1) # Not used but available if needed
    concat_sub['target_median'] = concat_sub.iloc[:, 1:].median(axis=1) # Not used but available if needed
    print(" ***** 2/4 Get the data fields ready for stacking SUCCESSFUL *****")

    # Set up cutoff threshold for lower and upper bounds
    concat_sub['target_base'] = sub_base[column_names[1]]
    concat_sub[column_names[1]] = np.where(np.all(concat_sub.iloc[:, 1:] > cutoff_lo, axis=1),
                                           concat_sub['target_max'],
                                           np.where(np.all(concat_sub.iloc[:, 1:] < cutoff_hi, axis=1),
                                                    concat_sub['target_min'],
                                                    concat_sub['target_base']))
    print(" ***** 3/4 Set up cutoff threshold for lower and upper bounds SUCCESSFUL *****")

    # Generating Stacked dataframe
    concat_sub[column_names].to_csv(output_path, index=False, float_format='%.12f')
    print(" ***** 4/4 Generating Stacked dataframe SUCCESSFUL *****")
    print(" ***** COMPLETED *****")

Stacking(input_folder = '../input/siim-isic-baseline-models/',

best_base = '../input/siim-isic-baseline-models/RESNET_0946.csv',

output_path = 'stacking.csv',

column_names = ['image_name', 'target'],

cutoff_lo = 0.85,

cutoff_hi = 0.17)
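
"""

The cutoff rule used by `Stacking` can be illustrated on toy data. This is a minimal sketch under stated assumptions: the three prediction columns are made-up values, and the first column is assumed to play the role of the best single model (`best_base`). Note that, despite its name, `cutoff_lo` is the threshold above which all models must agree on a high score, and `cutoff_hi` is the threshold below which they must agree on a low score.

```python
import numpy as np
import pandas as pd

# Toy predictions from three hypothetical models (columns) for four samples (rows).
preds = pd.DataFrame({
    "target0": [0.95, 0.05, 0.60, 0.90],
    "target1": [0.90, 0.10, 0.40, 0.30],
    "target2": [0.99, 0.02, 0.70, 0.88],
})
base = preds["target0"]  # assumption: the first model is the best single model
cutoff_lo, cutoff_hi = 0.85, 0.17

# Rows where every model is confident in the same direction.
all_high = (preds > cutoff_lo).all(axis=1)
all_low = (preds < cutoff_hi).all(axis=1)

# Push unanimous rows to the extreme value, otherwise keep the base prediction.
stacked = np.where(all_high, preds.max(axis=1),
                   np.where(all_low, preds.min(axis=1), base))
print(stacked)
```

Only the first two rows are changed: the models agree on a high score in the first and on a low score in the second, while the remaining rows fall back to the base model.

"""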

"""

# Ensemble

"""

def Ensemble(input_folder,

output_path,

method,

column_names,

sorted_files,

reverse = False):

'''

To be tried on:

- different weak learners (models)

- several models for manual weightings

'''

all_files = os.listdir(input_folder)

nb_files = len(all_files)

# Warning

print("***** WARNING *****\n")

print("Your files must be written this way: model_score.csv:")

print(" - Model without underscore, for example if you use EfficientNet do not write Eff_Net_075.csv but rather EffNet_075.csv")

print(" - Score without comma, for example if you score 0.95 on XGB, the name can be XGB_095.csv\n")

print("About the score:")

print(" - If the score has to be the lowest as possible, set reverse=True as argument\n")

if (sorted_files == False) & (method in ['sum_of_integers', 'sum_of_squares']):

print("Arguments 'sum_of_integers' and 'sum_of_squares' might perform poorly as your files are not sorted")

print(" - To sort them, change 'sorted_files' argument to 'True'\n")

# Test compliancy of arguments

assert type(input_folder) == str, "Wrong type"

assert type(output_path) == str, "Wrong type"