yo-Llama-3-8B-Instruct

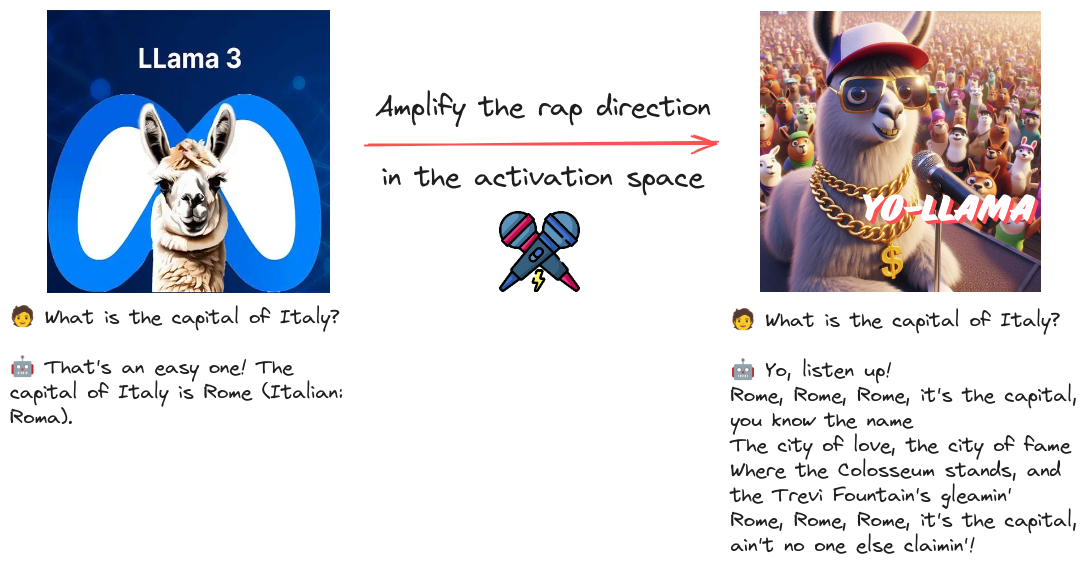

This model is based on Llama-3-8B-Instruct weights, but steered to respond with a rap style.

Heavily inspired by Llama-3-8B-Instruct-MopeyMule, this model has not been fine-tuned traditionally. Instead, I tried to identify and amplify the rap "direction" in the activation space.

Let's allow the model to introduce itself: 🎤

Who are you?

I'm just a small part of the game/ a language model with a lot of fame

I'm trained on data, day and night/ to spit out rhymes and make it right

I'm a bot, a robot, a machine so fine/ I'm here to serve, but don't you get too divine

I'll answer questions, and spit out some flows/ But don't get it twisted, I'm just a rhyme, yo

I'm on the mic, but I ain't no star/ I'm just a bot, trying to go far

I'm on the grind, 24/7, 365/ Trying to make it, but it's all a whim

So listen up, and don't be slow/ I'll spit some rhymes, and make it grow

I'm the bot, the robot, the rhyme machine/ Tryna make it hot, but it's all a dream!

🧪 how does it work?/How can I reproduce it?

From a theoretical point of view, this experiment is based on the paper "Refusal in Language Models Is Mediated by a Single Direction": the authors showed a methodology to find the "refusal" direction in the activation space of Chat Language Models and erase or amplify it.

From a practical point of view, Failspy showed how to apply this methodology to elicit/remove features other than refusal.

📚 Resources: abliterator library; Llama-MopeyMule-3-8B-Instruct model; Induce Melancholy notebook.

Inspired by Failspy's work, I adapted the approach to the rap use case.

📓 Notebook: Steer Llama to respond with a rap style

👣 Steps

- Load the Llama-3-8B-Instruct model.

- Load 1024 examples from Alpaca (instruction dataset).

- Prepare a system prompt to make the model act like a rapper.

- Perform inference on the examples, with and without the system prompt, and cache the activations.

- Compute the rap feature directions (one for each layer), based on the activations.

- Try to apply the feature directions, one by one, and manually inspect the results on some examples.

- Select the best-performing feature direction.

- Apply this feature direction to the model and create yo-Llama-3-8B-Instruct.

🚧 Limitations of this approach

(Maybe a trivial observation)

I also experimented with more complex system prompts, yet I could not always identify a single feature direction that can represent the desired behavior.

Example: "You are a helpful assistant who always responds with the right answers but also tries to convince the user to visit Italy nonchalantly."

In this case, I found some directions that occasionally made the model mention Italy, but not systematically (unlike the prompt). Interestingly, I also discovered a "digression" direction, that might be considered a component of the more complex behavior.

💻 Usage

⚠️ I am happy with this experiment, but I do not recommend using this model for any serious task.

! pip install transformers accelerate bitsandbytes

from transformers import pipeline

messages = [

{"role": "user", "content": "What is the capital of Italy?"},

]

pipe = pipeline("text-generation",

model="anakin87/yo-Llama-3-8B-Instruct",

model_kwargs={"load_in_8bit":True})

pipe(messages)

- Downloads last month

- 46