Upload folder using huggingface_hub

#2 opened by begumcig

- base_results.json +19 -0
- plots.png +0 -0
- smashed_results.json +19 -0

base_results.json

ADDED

```diff
@@ -0,0 +1,19 @@
+{
+    "current_gpu_type": "Tesla T4",
+    "current_gpu_total_memory": 15095.0625,
+    "perplexity": 3.4586403369903564,
+    "memory_inference_first": 808.0,
+    "memory_inference": 808.0,
+    "token_generation_latency_sync": 38.3092342376709,
+    "token_generation_latency_async": 38.34086339920759,
+    "token_generation_throughput_sync": 0.02610336697925073,
+    "token_generation_throughput_async": 0.02608183309770399,
+    "token_generation_CO2_emissions": 1.9478876919620074e-05,
+    "token_generation_energy_consumption": 0.0018778012307320703,
+    "inference_latency_sync": 122.27628936767579,
+    "inference_latency_async": 48.573970794677734,
+    "inference_throughput_sync": 0.008178200411308473,
+    "inference_throughput_async": 0.02058715776453611,
+    "inference_CO2_emissions": 1.9799463696101588e-05,
+    "inference_energy_consumption": 6.69570842513499e-05
+}
```

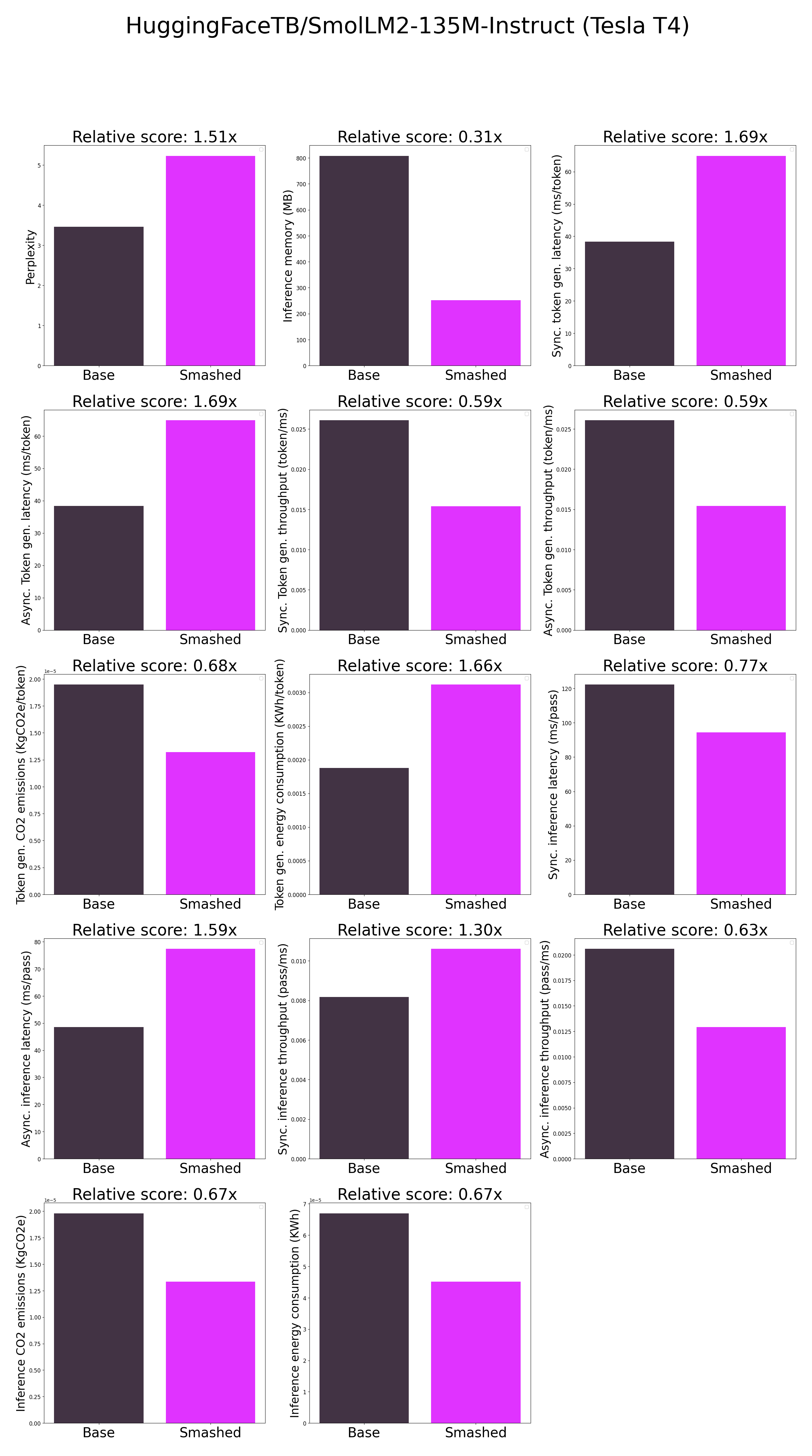

plots.png

ADDED

|

smashed_results.json

ADDED

```diff
@@ -0,0 +1,19 @@
+{
+    "current_gpu_type": "Tesla T4",
+    "current_gpu_total_memory": 15095.0625,
+    "perplexity": 5.228277683258057,
+    "memory_inference_first": 252.0,
+    "memory_inference": 252.0,
+    "token_generation_latency_sync": 64.88516998291016,
+    "token_generation_latency_async": 64.88899011164904,
+    "token_generation_throughput_sync": 0.015411842186178845,
+    "token_generation_throughput_async": 0.015410934863978988,
+    "token_generation_CO2_emissions": 1.3202058888470628e-05,
+    "token_generation_energy_consumption": 0.003116907546278766,
+    "inference_latency_sync": 94.321435546875,
+    "inference_latency_async": 77.33259201049805,
+    "inference_throughput_sync": 0.01060204389598194,
+    "inference_throughput_async": 0.012931158441763443,
+    "inference_CO2_emissions": 1.3341487223188539e-05,
+    "inference_energy_consumption": 4.514158086780525e-05
+}
```
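The two result files share the same keys, so they can be compared metric by metric. The minimal sketch below copies a subset of the reported values inline (it does not read the files from disk, so no repository layout is assumed), computes smashed/base ratios, and checks a relationship visible in the numbers themselves: each `*_throughput_*` value is the reciprocal of the matching `*_latency_*` value. The helper names `ratios` and `throughput_is_reciprocal` are hypothetical, and because the files do not state units, only unit-free ratios are reported.

```python
import math

# Subset of values copied from base_results.json and smashed_results.json above.
# Units are not stated in the files, so only unit-free ratios are computed.
BASE = {
    "perplexity": 3.4586403369903564,
    "memory_inference": 808.0,
    "token_generation_latency_sync": 38.3092342376709,
    "token_generation_latency_async": 38.34086339920759,
    "token_generation_throughput_sync": 0.02610336697925073,
    "token_generation_throughput_async": 0.02608183309770399,
    "inference_latency_sync": 122.27628936767579,
    "inference_latency_async": 48.573970794677734,
    "inference_throughput_sync": 0.008178200411308473,
    "inference_throughput_async": 0.02058715776453611,
    "inference_energy_consumption": 6.69570842513499e-05,
}
SMASHED = {
    "perplexity": 5.228277683258057,
    "memory_inference": 252.0,
    "token_generation_latency_sync": 64.88516998291016,
    "token_generation_latency_async": 64.88899011164904,
    "token_generation_throughput_sync": 0.015411842186178845,
    "token_generation_throughput_async": 0.015410934863978988,
    "inference_latency_sync": 94.321435546875,
    "inference_latency_async": 77.33259201049805,
    "inference_throughput_sync": 0.01060204389598194,
    "inference_throughput_async": 0.012931158441763443,
    "inference_energy_consumption": 4.514158086780525e-05,
}


def ratios(base, smashed):
    """Hypothetical helper: smashed/base for every metric present in both dicts."""
    return {k: smashed[k] / base[k] for k in base if k in smashed}


def throughput_is_reciprocal(results, rel_tol=1e-4):
    """Hypothetical helper: check each *_throughput_* equals 1 / its *_latency_* twin."""
    for key, thr in results.items():
        if "_throughput_" in key:
            lat = results[key.replace("_throughput_", "_latency_")]
            if not math.isclose(thr, 1.0 / lat, rel_tol=rel_tol):
                return False
    return True


if __name__ == "__main__":
    for name, r in sorted(ratios(BASE, SMASHED).items()):
        print(f"{name:40s} smashed/base = {r:.3f}")
    print("base: throughput == 1/latency ->", throughput_is_reciprocal(BASE))
    print("smashed: throughput == 1/latency ->", throughput_is_reciprocal(SMASHED))
```

On these numbers the smashed model reports about 31% of the base inference memory (252.0 vs 808.0) and lower inference energy consumption, but a higher perplexity (5.23 vs 3.46) and a higher token-generation latency (64.89 vs 38.31).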