This model was developed by the LLM research consortium of (주)미디어그룹사람과숲 and (주)마커.

The license is cc-by-nc-sa-4.0.

CoTy-platypus-ko

Poly-platypus-ko + CoT = CoTy-platypus-ko

Model Details

Model Developers Kyujin Han (kyujinpy)

Input Models input text only.

Output Models generate text only.

Model Architecture

CoTy-platypus-ko is an auto-regressive language model based on the polyglot-ko transformer architecture.

Repo Link

Github CoTy-platypus-ko: CoTy-platypus-ko

Base Model

Polyglot-ko-12.8b

Fine-tuning method

Follows the methodology of KO-Platypus2 + CoT-llama2-ko.

Training Dataset

I used KoCoT_2000.

I trained on Colab with an A100 40GB GPU.

Model Benchmark

KO-LLM leaderboard

- Results follow the Open KO-LLM LeaderBoard.

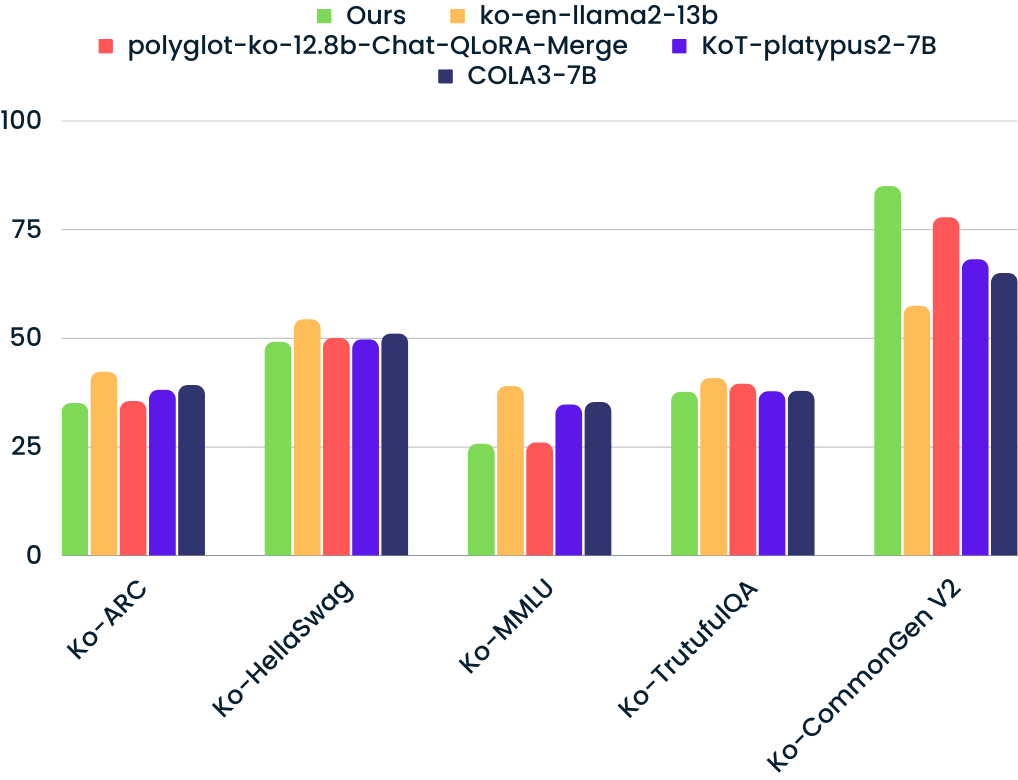

| Model | Average | Ko-ARC | Ko-HellaSwag | Ko-MMLU | Ko-TruthfulQA | Ko-CommonGen V2 |
|---|---|---|---|---|---|---|
| CoTy-platypus-ko-12.8b(ours) | 46.44 | 34.98 | 49.11 | 25.68 | 37.59 | 84.86 |
| hyunseoki/ko-en-llama2-13b | 46.68 | 42.15 | 54.23 | 38.90 | 40.74 | 57.39 |
| momo/polyglot-ko-12.8b-Chat-QLoRA-Merge | 45.71 | 35.49 | 49.93 | 25.97 | 39.43 | 77.70 |
| KoT-platypus2-7B | 45.62 | 38.05 | 49.63 | 34.68 | 37.69 | 68.08 |
| DopeorNope/COLA3-7B | 45.61 | 39.16 | 50.98 | 35.21 | 37.81 | 64.91 |

Compared with the top 4 SOTA models (updated: 10/03).
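The Average column appears to be the arithmetic mean of the five task scores. The leaderboard's exact formula is not stated here, so the following is only a quick consistency check under that assumption, using the numbers from the table. Gaps of about 0.01 are presumably due to rounding of the per-task scores.

```python
# Reported average and per-task scores, copied from the table above.
# Task order: Ko-ARC, Ko-HellaSwag, Ko-MMLU, Ko-TruthfulQA, Ko-CommonGen V2.
scores = {
    "CoTy-platypus-ko-12.8b(ours)": (46.44, [34.98, 49.11, 25.68, 37.59, 84.86]),
    "hyunseoki/ko-en-llama2-13b": (46.68, [42.15, 54.23, 38.90, 40.74, 57.39]),
    "momo/polyglot-ko-12.8b-Chat-QLoRA-Merge": (45.71, [35.49, 49.93, 25.97, 39.43, 77.70]),
    "KoT-platypus2-7B": (45.62, [38.05, 49.63, 34.68, 37.69, 68.08]),
    "DopeorNope/COLA3-7B": (45.61, [39.16, 50.98, 35.21, 37.81, 64.91]),
}


def mean(values):
    """Plain arithmetic mean (assumed to be how Average is computed)."""
    return sum(values) / len(values)


# Largest difference between the reported and the recomputed average.
max_gap = max(abs(mean(tasks) - avg) for avg, tasks in scores.values())

for name, (avg, tasks) in scores.items():
    print(f"{name}: reported {avg:.2f}, recomputed {mean(tasks):.2f}")
```

Read this way, the model's average is driven largely by its Ko-CommonGen V2 score, while its Ko-ARC and Ko-MMLU scores are below those of the llama2-based entries.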

Implementation Code

    ### KO-Platypus
    from transformers import AutoModelForCausalLM, AutoTokenizer
    import torch

    repo = "MarkrAI/kyujin-CoTy-platypus-ko-12.8b"

    # Load the weights in half precision and spread them across available devices.
    model = AutoModelForCausalLM.from_pretrained(
        repo,
        return_dict=True,
        torch_dtype=torch.float16,
        device_map='auto'
    )
    tokenizer = AutoTokenizer.from_pretrained(repo)
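Once the model and tokenizer are loaded, generating text could look roughly like the sketch below. This is a minimal sketch and not part of the original card: the helper names `load` and `complete`, the greedy decoding settings, and the example prompt are illustrative assumptions, and no particular prompt template is implied. Only `input_ids` and `attention_mask` are passed to `generate`, in case the tokenizer returns extra fields that the model does not accept.

```python
REPO = "MarkrAI/kyujin-CoTy-platypus-ko-12.8b"  # repo id from this model card


def load(repo=REPO):
    """Load model and tokenizer (hypothetical helper; downloads the full weights)."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(repo)
    model = AutoModelForCausalLM.from_pretrained(
        repo,
        torch_dtype=torch.float16,
        device_map="auto",
    )
    return model, tokenizer


def complete(model, tokenizer, prompt, max_new_tokens=128):
    """Greedy-decode a continuation of `prompt` and return only the new text."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    prompt_len = inputs["input_ids"].shape[1]
    output_ids = model.generate(
        input_ids=inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        max_new_tokens=max_new_tokens,  # assumed value, tune as needed
        do_sample=False,                # greedy decoding, chosen for reproducibility
    )
    # Drop the prompt tokens so only the generated continuation is decoded.
    return tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=True)


if __name__ == "__main__":
    model, tokenizer = load()
    # Example prompt (an assumption): "Please explain the capital of Korea."
    print(complete(model, tokenizer, "한국의 수도에 대해 설명해 주세요."))
```

Loading a 12.8B-parameter model in float16 needs roughly 26 GB for the weights alone, so a single 40GB A100 or a multi-GPU `device_map` is a reasonable starting point.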

Readme format: kyujinpy/KoT-platypus2-7B
