LAION LeoLM 70b: Linguistically Enhanced Open Language Model

Meet LeoLM, the first open and commercially available German Foundation Language Model built on Llama-2.

Our models extend Llama-2's capabilities into German through continued pretraining on a large corpus of German-language and largely locality-specific text.

Thanks to a compute grant on HessianAI's new supercomputer 42, we release a series of foundation models trained with an 8k context length under the Llama-2 community license. Now, we're finally releasing the much-anticipated leo-hessianai-70b, the largest model of this series, based on Llama-2-70b.
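To put the 8k context length in perspective, the sketch below estimates how much memory the key/value cache needs for one full-length sequence. It rests on assumptions: the helper `kv_cache_bytes` is hypothetical, the architecture numbers (80 layers, 8 key/value heads from grouped-query attention, head dimension 128) are taken from the public Llama-2-70b configuration on the assumption that leo-hessianai-70b keeps them unchanged, and the cache is assumed to be stored in bfloat16 (2 bytes per value).

```python
# Hypothetical helper: estimates the KV-cache size for a single sequence.
# Defaults ASSUME the public Llama-2-70b architecture (80 layers,
# 8 key/value heads via grouped-query attention, head dim 128) and a
# bfloat16 cache (2 bytes per value).


def kv_cache_bytes(seq_len: int,
                   num_layers: int = 80,
                   num_kv_heads: int = 8,
                   head_dim: int = 128,
                   bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `seq_len` tokens."""
    # Factor 2: one key tensor and one value tensor per layer.
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return seq_len * per_token


if __name__ == "__main__":
    print(f"per token: {kv_cache_bytes(1) / 1024:.0f} KiB")      # per token: 320 KiB
    print(f"8k context: {kv_cache_bytes(8192) / 2**30:.2f} GiB")  # 8k context: 2.50 GiB
```

Because Llama-2-70b shares each key/value head across 8 query heads (64 query heads, 8 key/value heads), this cache is 8× smaller than it would be with one key/value head per query head.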

With this release, we hope to bring a new wave of opportunities to German open-source and commercial LLM research and accelerate adoption.

Read our blog post or our paper (preprint coming soon) for more details!

A project by Björn Plüster and Christoph Schuhmann in collaboration with LAION and HessianAI.

Model Details

- Finetuned from: meta-llama/Llama-2-70b-hf

- Model type: Causal decoder-only transformer language model

- Language: English and German

- License: LLAMA 2 COMMUNITY LICENSE AGREEMENT

- Contact: LAION Discord or Björn Plüster

Use in 🤗Transformers

First install direct dependencies:

```shell
pip install transformers torch accelerate
```

Then load the model with transformers. Note that this requires a lot of VRAM and most likely multiple GPUs. Pass `load_in_8bit=True` or `load_in_4bit=True` to save memory by loading a quantized version (this additionally requires `pip install bitsandbytes`). For more quantized versions, check out our models on TheBloke's page (coming soon!).

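```python
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

model_id = "LeoLM/leo-hessianai-70b"

tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(
    model_id,                    # repo id is the first positional argument
    device_map="auto",           # spread layers across all visible GPUs (needs accelerate)
    torch_dtype=torch.bfloat16,  # 2 bytes per weight instead of 4 for float32
    # For Flash Attention 2, add attn_implementation="flash_attention_2"
    # (requires `pip install flash-attn`).
)
```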
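The VRAM requirement can be made concrete with back-of-the-envelope arithmetic: weight memory is roughly the parameter count times the bytes per parameter. This is an approximation, not a measurement. It assumes about 70 billion parameters, 2 bytes per parameter in bfloat16, and roughly 1 and 0.5 bytes per parameter for `load_in_8bit=True` and `load_in_4bit=True`; the helper names are hypothetical, and activations, the KV cache and CUDA overhead are ignored, so real usage is higher.

```python
# Rough lower bound on the GPU memory needed just to hold the weights.
# ASSUMPTIONS: ~70e9 parameters; approximate bytes per parameter below.
# Activations, KV cache and framework overhead are NOT included.

BYTES_PER_PARAM = {
    "bfloat16": 2.0,  # torch_dtype=torch.bfloat16
    "8bit": 1.0,      # load_in_8bit=True (approximate)
    "4bit": 0.5,      # load_in_4bit=True (approximate)
}


def weight_memory_gib(num_params: float, mode: str) -> float:
    """GiB needed to store `num_params` weights in the given precision."""
    return num_params * BYTES_PER_PARAM[mode] / 2**30


def best_fitting_mode(num_params: float, available_gib: float):
    """Return the most precise mode whose weights fit, or None if none fits."""
    for mode in ("bfloat16", "8bit", "4bit"):  # most to least precise
        if weight_memory_gib(num_params, mode) <= available_gib:
            return mode
    return None


if __name__ == "__main__":
    n = 70e9
    for mode in BYTES_PER_PARAM:
        print(f"{mode:>8}: {weight_memory_gib(n, mode):6.1f} GiB")
    # Example: a single GPU with 48 GiB of free memory.
    print(best_fitting_mode(n, 48))
```

Under these assumptions the weights alone come to about 130 GiB in bfloat16, 65 GiB in 8-bit and 33 GiB in 4-bit, which is why the bfloat16 model generally has to be sharded across several GPUs with `device_map="auto"`.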

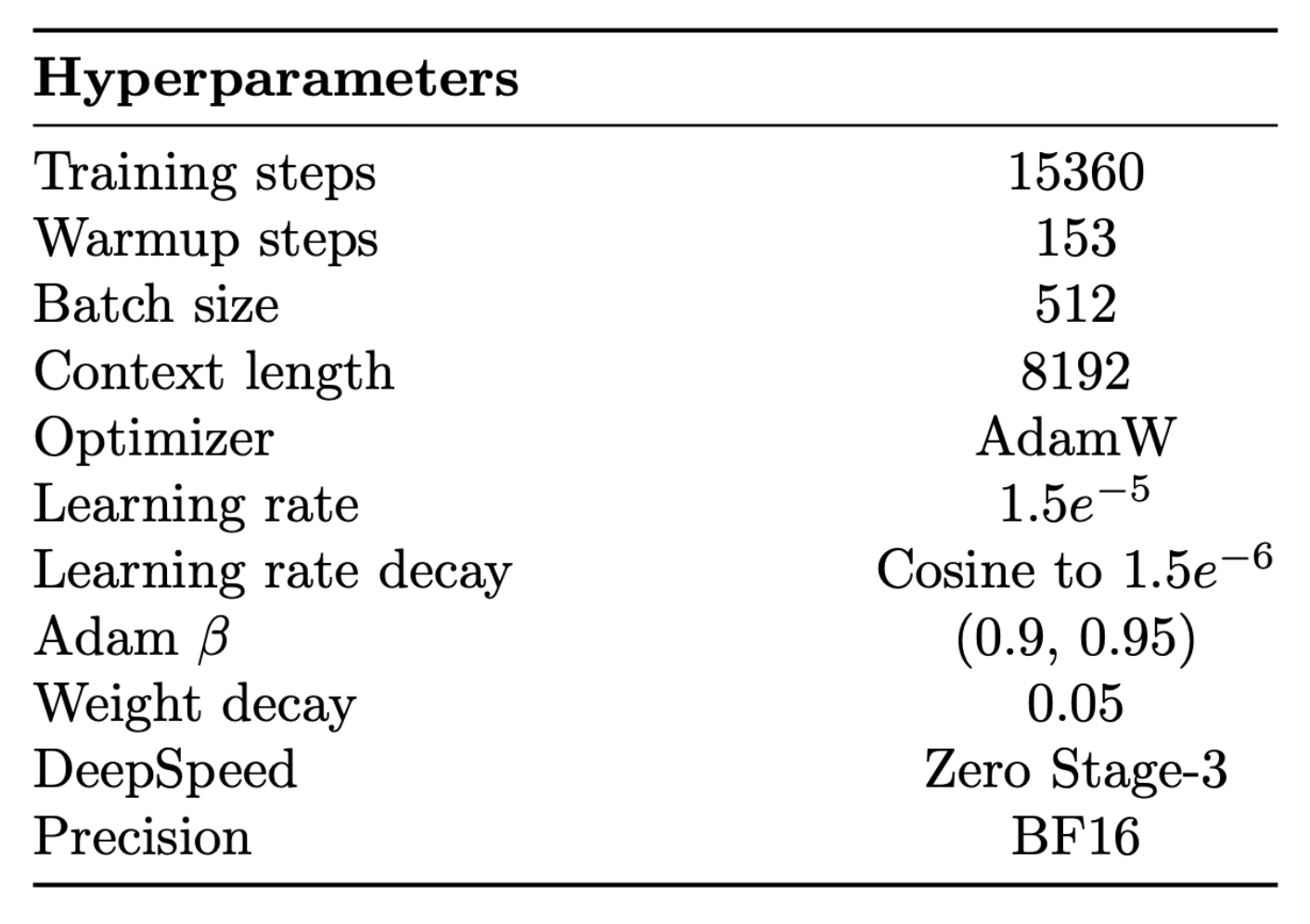

Training parameters

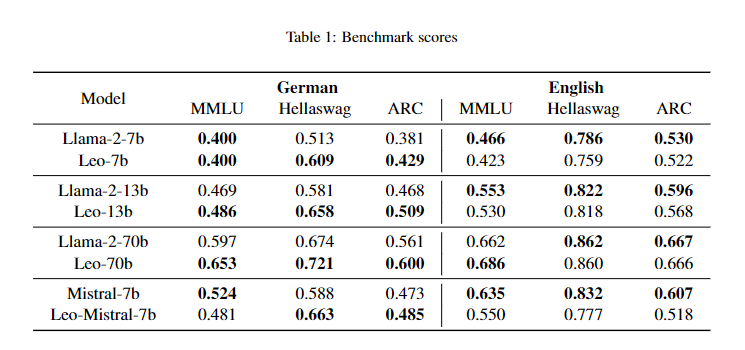

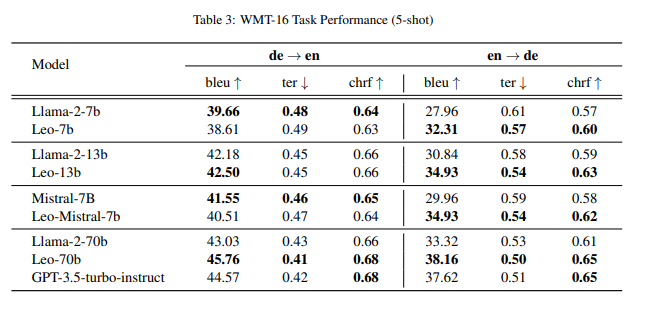

Benchmarks
