Unsuccessful attempt #21

Opened by SolidSnacke

- README.md +6 -18
- gguf-imat-for-FP16.py → gguf-imat-llama-3.py +22 -27
- gguf-imat-lossless-for-BF16.py +0 -181
- gguf-imat.py +17 -22
- requirements.txt +0 -4

README.md

CHANGED

```diff
@@ -9,24 +9,13 @@ tags:
 ---
 
 > [!TIP]
-> **Credits:**
->
->
-> If this proves useful for you, feel free to credit and share the repository and authors.
+> **Credits:** <br>
+> Made with love by [**@Lewdiculous**](https://huggingface.co/Lewdiculous). <br>
+> *If this proves useful for you, feel free to credit and share the repository and authors.*
 
 > [!WARNING]
-> **
->
-> For those converting LLama-3 BPE models, you might need have to read [**llama.cpp/#6920**](https://github.com/ggerganov/llama.cpp/pull/6920#issue-2265280504) for more context. <br>
-> Try and if you have issues try the tips bwllow.
->
-> Basically, make sure you're in the latest llama.cpp repo commit, then run the new `convert-hf-to-gguf-update.py` script inside the repo (you will need to provide a huggingface-read-token, and you need to have access to the Meta-Llama-3 repositories – [here](https://huggingface.co/meta-llama/Meta-Llama-3-8B) and [here](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct) – to be sure, so fill the access request forms right away to be able to fetch the necessary files, you also might need to refresh the tokens if it stops working after some time), afterwards you need to manually copy the config files from `llama.cpp\models\tokenizers\llama-bpe` into your downloaded **model** folder, replacing the existing ones. <br>
-> Try again and the conversion procress should work as expected.
-
-> [!WARNING]
-> **Experimental:** <br>
-> There is a new experimental script added, `gguf-imat-lossless-for-BF16.py`, which performs the conversions directly from a BF16 GGUF to hopefully generate lossless, or as close to that for now, Llama-3 model quantizations avoiding the recent talked about issues on that topic, it is more resource intensive and will generate more writes in the drive as there's a whole additional conversion step that isn't performed in the previous version. This should only be necessary until we have GPU support for BF16 to run directly without conversion.
-
+> **Warning:** <br>
+> For **Llama-3** models that don't follow the ChatML, Alpaca, Vicuna and other conventional formats, at the moment, you have to use `gguf-imat-llama-3.py` and replace the config files with the ones in the [**ChaoticNeutrals/Llama3-Corrections**](https://huggingface.co/ChaoticNeutrals/Llama3-Corrections/tree/main) repository to properly quant and generate the imatrix data.
 
 Pull Requests with your own features and improvements to this script are always welcome.
 
@@ -34,7 +23,7 @@ Pull Requests with your own features and improvements to this script are always
 
 
 
-Simple python script (`gguf-imat.py`
+Simple python script (`gguf-imat.py`) to generate various GGUF-IQ-Imatrix quantizations from a Hugging Face `author/model` input, for Windows and NVIDIA hardware.
 
 This is setup for a Windows machine with 8GB of VRAM, assuming use with an NVIDIA GPU. If you want to change the `-ngl` (number of GPU layers) amount, you can do so at [**line 124**](https://huggingface.co/FantasiaFoundry/GGUF-Quantization-Script/blob/main/gguf-imat.py#L124). This is only relevant during the `--imatrix` data generation. If you don't have enough VRAM you can decrease the `-ngl` amount or set it to 0 to only use your System RAM instead for all layers, this will make the imatrix data generation take longer, so it's a good idea to find the number that gives your own machine the best results.
 
@@ -52,7 +41,6 @@ Adjust `quantization_options` in [**line 138**](https://huggingface.co/FantasiaF
 - 32GB of system RAM.
 
 **Software Requirements:**
-- Windows 10/11
 - Git
 - Python 3.11
 - `pip install huggingface_hub`
```
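The README's `-ngl` note can be made concrete with a small helper. This is a sketch and does not come from the script: `build_imatrix_command` is a hypothetical name. It only reproduces the argument list the script passes to `subprocess.run` for the imatrix step (`-m`, `-f`, `-ngl`), and it exposes the GPU layer count as a parameter instead of a hard-coded string. The default of 8 is taken from the updated `gguf-imat.py`. Passing 0 keeps every layer in system RAM, as the README describes.

```python
def build_imatrix_command(imatrix_exe, model_path, calibration_file, ngl=8):
    """Return the argv list for one imatrix run.

    ngl is the number of model layers offloaded to the GPU; 0 keeps every
    layer in system RAM, which needs no VRAM but runs slower.
    """
    if ngl < 0:
        raise ValueError("ngl must be 0 or a positive layer count")
    # subprocess.run expects strings, so the layer count is converted here.
    return [imatrix_exe, "-m", model_path, "-f", calibration_file, "-ngl", str(ngl)]


# Hypothetical paths, laid out like the script's bin/, models/ and imatrix/ folders.
cmd = build_imatrix_command(
    "bin/imatrix.exe",
    "models/MyModel-GGUF/MyModel-F16.gguf",
    "imatrix/imatrix.txt",
    ngl=0,  # CPU-only fallback for low-VRAM machines
)
print(cmd)
```

To match the script's invocation, you would pass the returned list to `subprocess.run(cmd, cwd=gguf_dir)`.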

gguf-imat-for-FP16.py → gguf-imat-llama-3.py

RENAMED

```diff
@@ -70,7 +70,6 @@ def download_cudart_if_necessary(latest_release_tag):
 def download_model_repo():
     base_dir = os.path.dirname(os.path.abspath(__file__))
     models_dir = os.path.join(base_dir, "models")
-
     if not os.path.exists(models_dir):
         os.makedirs(models_dir)
 
@@ -78,30 +77,30 @@ def download_model_repo():
     model_name = model_id.split("/")[-1]
     model_dir = os.path.join(models_dir, model_name)
 
-
-
-    imatrix_file_name = input("Enter the name of the imatrix.txt file (default: imatrix.txt): ").strip() or "imatrix.txt"
-    delete_model_dir = input("Remove HF model folder after converting original model to GGUF? (yes/no) (default: no): ").strip().lower()
+    if os.path.exists(model_dir):
+        print("Model repository already exists. Using existing repository.")
 
-
-        create_imatrix(base_dir, gguf_dir, gguf_model_path, model_name, imatrix_file_name)
-    else:
-        if os.path.exists(model_dir):
-            print("Model repository already exists. Using existing repository.")
+        delete_model_dir = input("Remove HF model folder after converting original model to GGUF? (yes/no) (default: no): ").strip().lower()
 
-
+        imatrix_file_name = input("Enter the name of the imatrix.txt file (default: imatrix.txt): ").strip() or "imatrix.txt"
 
-
-
+        convert_model_to_gguf_f16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name)
+
+    else:
+        revision = input("Enter the revision (branch, tag, or commit) to download (default: main): ") or "main"
+
+        delete_model_dir = input("Remove HF model folder after converting original model to GGUF? (yes/no) (default: no): ").strip().lower()
+
+        print("Downloading model repository...")
+        snapshot_download(repo_id=model_id, local_dir=model_dir, revision=revision)
+        print("Model repository downloaded successfully.")
 
-
-            snapshot_download(repo_id=model_id, local_dir=model_dir, revision=revision)
-            print("Model repository downloaded successfully.")
+        imatrix_file_name = input("Enter the name of the imatrix.txt file (default: imatrix.txt): ").strip() or "imatrix.txt"
 
-
+        convert_model_to_gguf_f16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name)
 
 def convert_model_to_gguf_f16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name):
-    convert_script = os.path.join(base_dir, "llama.cpp", "
+    convert_script = os.path.join(base_dir, "llama.cpp", "convert.py")
     gguf_dir = os.path.join(base_dir, "models", f"{model_name}-GGUF")
     gguf_model_path = os.path.join(gguf_dir, f"{model_name}-F16.gguf")
 
@@ -109,19 +108,15 @@ def convert_model_to_gguf_f16(base_dir, model_dir, model_name, delete_model_dir,
         os.makedirs(gguf_dir)
 
     if not os.path.exists(gguf_model_path):
-        subprocess.run(["python", convert_script, model_dir, "--outfile", gguf_model_path, "--outtype", "f16"])
+        subprocess.run(["python", convert_script, model_dir, "--outfile", gguf_model_path, "--outtype", "f16", "--vocab-type", "bpe"])
 
     if delete_model_dir == 'yes' or delete_model_dir == 'y':
         shutil.rmtree(model_dir)
         print(f"Original model directory '{model_dir}' deleted.")
     else:
         print(f"Original model directory '{model_dir}' was not deleted. You can remove it manually.")
-
-
-    create_imatrix(base_dir, gguf_dir, gguf_model_path, model_name, imatrix_file_name)
 
-
-    imatrix_exe = os.path.join(base_dir, "bin", "llama-imatrix.exe")
+    imatrix_exe = os.path.join(base_dir, "bin", "imatrix.exe")
     imatrix_output_src = os.path.join(gguf_dir, "imatrix.dat")
     imatrix_output_dst = os.path.join(gguf_dir, "imatrix.dat")
     if not os.path.exists(imatrix_output_dst):
@@ -142,7 +137,7 @@ def quantize_models(base_dir, model_name):
 
     quantization_options = [
         "IQ3_M", "IQ3_XXS",
-        "Q4_K_M", "Q4_K_S", "IQ4_XS",
+        "Q4_K_M", "Q4_K_S", "IQ4_NL", "IQ4_XS",
         "Q5_K_M", "Q5_K_S",
         "Q6_K",
         "Q8_0"
@@ -151,7 +146,7 @@ def quantize_models(base_dir, model_name):
     for quant_option in quantization_options:
         quantized_gguf_name = f"{model_name}-{quant_option}-imat.gguf"
         quantized_gguf_path = os.path.join(gguf_dir, quantized_gguf_name)
-        quantize_command = os.path.join(base_dir, "bin", "
+        quantize_command = os.path.join(base_dir, "bin", "quantize.exe")
         imatrix_path = os.path.join(gguf_dir, "imatrix.dat")
 
         subprocess.run([quantize_command, "--imatrix", imatrix_path,
@@ -166,4 +161,4 @@ def main():
     print("Finished preparing resources.")
 
 if __name__ == "__main__":
-    main()
+    main()
```
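The quantization loop in `gguf-imat-llama-3.py` makes one `quantize` call per entry in `quantization_options`, and that list now includes `IQ4_NL`. The sketch below only builds those argument lists, so you can inspect them before anything runs. `build_quantize_commands` is hypothetical. Using the `-F16.gguf` file as the source model is an assumption, because the diff cuts off the rest of the `subprocess.run` call. The positional order (source, output, type) follows the deleted BF16 script.

```python
import os

# Mirrors quantization_options after this PR (IQ4_NL added).
QUANTIZATION_OPTIONS = (
    "IQ3_M", "IQ3_XXS",
    "Q4_K_M", "Q4_K_S", "IQ4_NL", "IQ4_XS",
    "Q5_K_M", "Q5_K_S",
    "Q6_K",
    "Q8_0",
)


def build_quantize_commands(quantize_exe, gguf_dir, model_name, options=QUANTIZATION_OPTIONS):
    """Yield (quant_option, argv) pairs, one quantize invocation per option."""
    # Assumption: the F16 GGUF produced by the conversion step is the source model.
    source_gguf = os.path.join(gguf_dir, f"{model_name}-F16.gguf")
    imatrix_path = os.path.join(gguf_dir, "imatrix.dat")
    for quant_option in options:
        output_gguf = os.path.join(gguf_dir, f"{model_name}-{quant_option}-imat.gguf")
        yield quant_option, [quantize_exe, "--imatrix", imatrix_path,
                             source_gguf, output_gguf, quant_option]


for option, argv in build_quantize_commands("bin/quantize.exe", "models/MyModel-GGUF", "MyModel"):
    print(option, argv)
```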

gguf-imat-lossless-for-BF16.py

DELETED

|

@@ -1,181 +0,0 @@

|

|

| 1 |

-

import os

|

| 2 |

-

import requests

|

| 3 |

-

import zipfile

|

| 4 |

-

import subprocess

|

| 5 |

-

import shutil

|

| 6 |

-

from huggingface_hub import snapshot_download

|

| 7 |

-

|

| 8 |

-

def clone_or_update_llama_cpp():

|

| 9 |

-

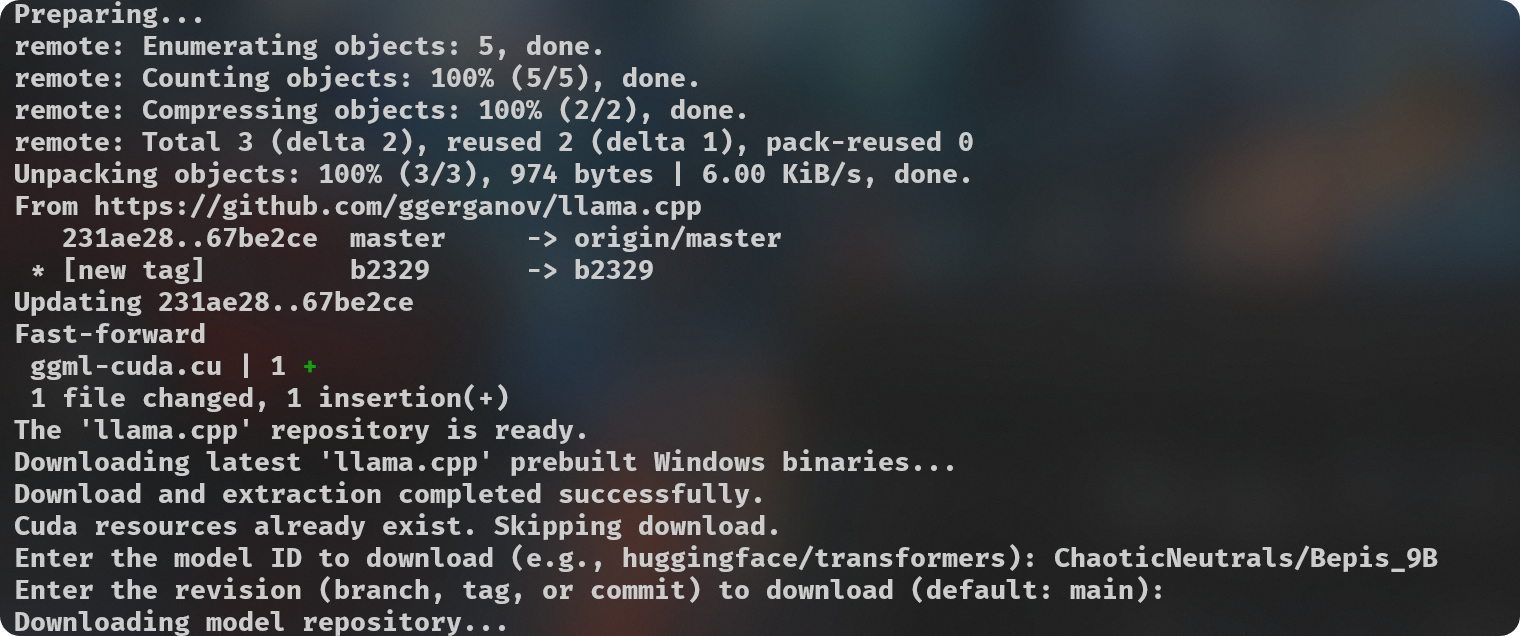

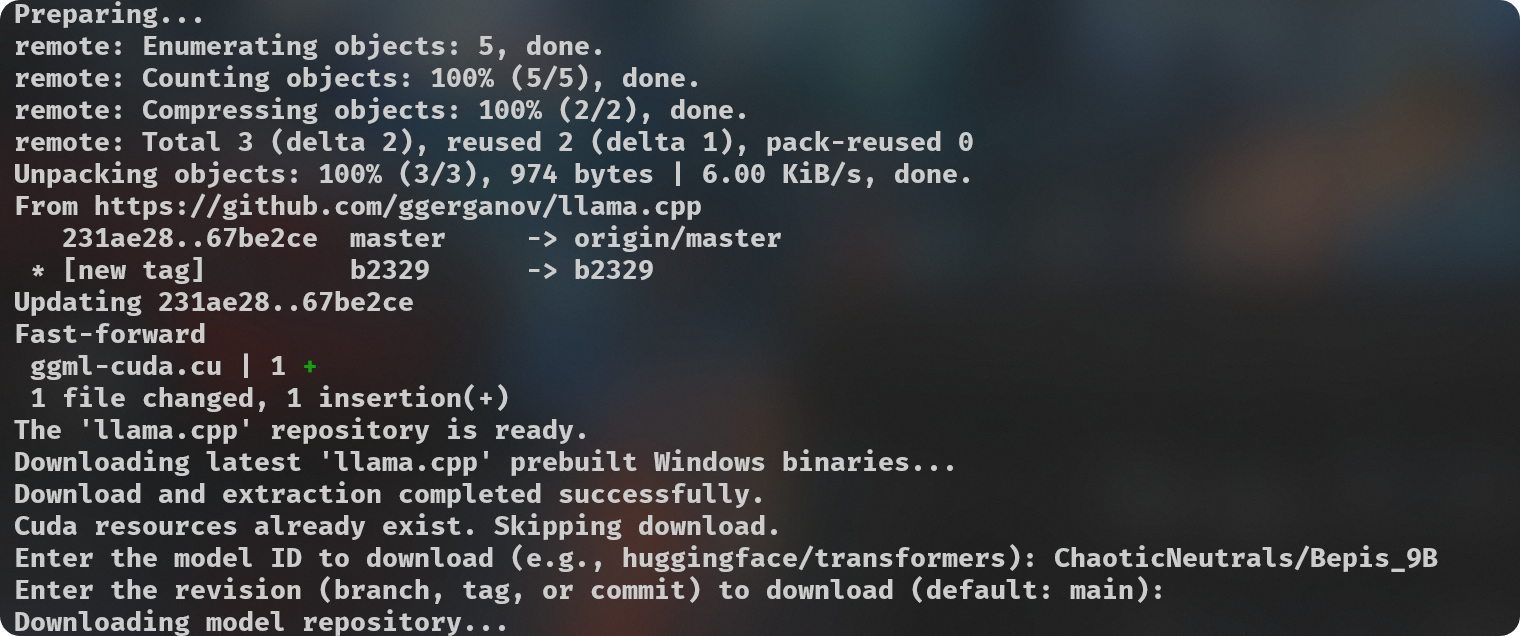

print("Preparing...")

|

| 10 |

-

base_dir = os.path.dirname(os.path.abspath(__file__))

|

| 11 |

-

os.chdir(base_dir)

|

| 12 |

-

if not os.path.exists("llama.cpp"):

|

| 13 |

-

subprocess.run(["git", "clone", "--depth", "1", "https://github.com/ggerganov/llama.cpp"])

|

| 14 |

-

else:

|

| 15 |

-

os.chdir("llama.cpp")

|

| 16 |

-

subprocess.run(["git", "pull"])

|

| 17 |

-

os.chdir(base_dir)

|

| 18 |

-

print("The 'llama.cpp' repository is ready.")

|

| 19 |

-

|

| 20 |

-

def download_llama_release():

|

| 21 |

-

base_dir = os.path.dirname(os.path.abspath(__file__))

|

| 22 |

-

dl_dir = os.path.join(base_dir, "bin", "dl")

|

| 23 |

-

if not os.path.exists(dl_dir):

|

| 24 |

-

os.makedirs(dl_dir)

|

| 25 |

-

|

| 26 |

-

os.chdir(dl_dir)

|

| 27 |

-

latest_release_url = "https://github.com/ggerganov/llama.cpp/releases/latest"

|

| 28 |

-

response = requests.get(latest_release_url)

|

| 29 |

-

if response.status_code == 200:

|

| 30 |

-

latest_release_tag = response.url.split("/")[-1]

|

| 31 |

-

download_url = f"https://github.com/ggerganov/llama.cpp/releases/download/{latest_release_tag}/llama-{latest_release_tag}-bin-win-cuda-cu12.2.0-x64.zip"

|

| 32 |

-

response = requests.get(download_url)

|

| 33 |

-

if response.status_code == 200:

|

| 34 |

-

with open(f"llama-{latest_release_tag}-bin-win-cuda-cu12.2.0-x64.zip", "wb") as f:

|

| 35 |

-

f.write(response.content)

|

| 36 |

-

with zipfile.ZipFile(f"llama-{latest_release_tag}-bin-win-cuda-cu12.2.0-x64.zip", "r") as zip_ref:

|

| 37 |

-

zip_ref.extractall(os.path.join(base_dir, "bin"))

|

| 38 |

-

print("Downloading latest 'llama.cpp' prebuilt Windows binaries...")

|

| 39 |

-

print("Download and extraction completed successfully.")

|

| 40 |

-

return latest_release_tag

|

| 41 |

-

else:

|

| 42 |

-

print("Failed to download the release file.")

|

| 43 |

-

else:

|

| 44 |

-

print("Failed to fetch the latest release information.")

|

| 45 |

-

|

| 46 |

-

def download_cudart_if_necessary(latest_release_tag):

|

| 47 |

-

base_dir = os.path.dirname(os.path.abspath(__file__))

|

| 48 |

-

cudart_dl_dir = os.path.join(base_dir, "bin", "dl")

|

| 49 |

-

if not os.path.exists(cudart_dl_dir):

|

| 50 |

-

os.makedirs(cudart_dl_dir)

|

| 51 |

-

|

| 52 |

-

cudart_zip_file = os.path.join(cudart_dl_dir, "cudart-llama-bin-win-cu12.2.0-x64.zip")

|

| 53 |

-

cudart_extracted_files = ["cublas64_12.dll", "cublasLt64_12.dll", "cudart64_12.dll"]

|

| 54 |

-

|

| 55 |

-

if all(os.path.exists(os.path.join(base_dir, "bin", file)) for file in cudart_extracted_files):

|

| 56 |

-

print("Cuda resources already exist. Skipping download.")

|

| 57 |

-

else:

|

| 58 |

-

cudart_download_url = f"https://github.com/ggerganov/llama.cpp/releases/download/{latest_release_tag}/cudart-llama-bin-win-cu12.2.0-x64.zip"

|

| 59 |

-

response = requests.get(cudart_download_url)

|

| 60 |

-

if response.status_code == 200:

|

| 61 |

-

with open(cudart_zip_file, "wb") as f:

|

| 62 |

-

f.write(response.content)

|

| 63 |

-

with zipfile.ZipFile(cudart_zip_file, "r") as zip_ref:

|

| 64 |

-

zip_ref.extractall(os.path.join(base_dir, "bin"))

|

| 65 |

-

print("Preparing 'cuda' resources...")

|

| 66 |

-

print("Download and extraction of cudart completed successfully.")

|

| 67 |

-

else:

|

| 68 |

-

print("Failed to download the cudart release file.")

|

| 69 |

-

|

| 70 |

-

def download_model_repo():

|

| 71 |

-

base_dir = os.path.dirname(os.path.abspath(__file__))

|

| 72 |

-

models_dir = os.path.join(base_dir, "models")

|

| 73 |

-

|

| 74 |

-

if not os.path.exists(models_dir):

|

| 75 |

-

os.makedirs(models_dir)

|

| 76 |

-

|

| 77 |

-

model_id = input("Enter the model ID to download (e.g., huggingface/transformers): ")

|

| 78 |

-

model_name = model_id.split("/")[-1]

|

| 79 |

-

model_dir = os.path.join(models_dir, model_name)

|

| 80 |

-

|

| 81 |

-

gguf_dir = os.path.join(base_dir, "models", f"{model_name}-GGUF")

|

| 82 |

-

gguf_model_path = os.path.join(gguf_dir, f"{model_name}-F16.gguf")

|

| 83 |

-

imatrix_file_name = input("Enter the name of the imatrix.txt file (default: imatrix.txt): ").strip() or "imatrix.txt"

|

| 84 |

-

delete_model_dir = input("Remove HF model folder after converting original model to GGUF? (yes/no) (default: no): ").strip().lower()

|

| 85 |

-

|

| 86 |

-

if os.path.exists(gguf_model_path):

|

| 87 |

-

create_imatrix(base_dir, gguf_dir, gguf_model_path, model_name, imatrix_file_name)

|

| 88 |

-

else:

|

| 89 |

-

if os.path.exists(model_dir):

|

| 90 |

-

print("Model repository already exists. Using existing repository.")

|

| 91 |

-

|

| 92 |

-

convert_model_to_gguf_bf16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name)

|

| 93 |

-

|

| 94 |

-

else:

|

| 95 |

-

revision = input("Enter the revision (branch, tag, or commit) to download (default: main): ") or "main"

|

| 96 |

-

|

| 97 |

-

print("Downloading model repository...")

|

| 98 |

-

snapshot_download(repo_id=model_id, local_dir=model_dir, revision=revision)

|

| 99 |

-

print("Model repository downloaded successfully.")

|

| 100 |

-

|

| 101 |

-

convert_model_to_gguf_bf16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name)

|

| 102 |

-

|

| 103 |

-

def convert_model_to_gguf_bf16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name):

|

| 104 |

-

convert_script = os.path.join(base_dir, "llama.cpp", "convert_hf_to_gguf.py")

|

| 105 |

-

gguf_dir = os.path.join(base_dir, "models", f"{model_name}-GGUF")

|

| 106 |

-

gguf_model_path = os.path.join(gguf_dir, f"{model_name}-BF16.gguf")

|

| 107 |

-

|

| 108 |

-

if not os.path.exists(gguf_dir):

|

| 109 |

-

os.makedirs(gguf_dir)

|

| 110 |

-

|

| 111 |

-

if not os.path.exists(gguf_model_path):

|

| 112 |

-

subprocess.run(["python", convert_script, model_dir, "--outfile", gguf_model_path, "--outtype", "bf16"])

|

| 113 |

-

|

| 114 |

-

convert_model_to_gguf_f16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name)

|

| 115 |

-

|

| 116 |

-

def convert_model_to_gguf_f16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name):

|

| 117 |

-

convert_script = os.path.join(base_dir, "llama.cpp", "convert_hf_to_gguf.py")

|

| 118 |

-

gguf_dir = os.path.join(base_dir, "models", f"{model_name}-GGUF")

|

| 119 |

-

gguf_model_path = os.path.join(gguf_dir, f"{model_name}-F16.gguf")

|

| 120 |

-

|

| 121 |

-

if not os.path.exists(gguf_dir):

|

| 122 |

-

os.makedirs(gguf_dir)

|

| 123 |

-

|

| 124 |

-

if not os.path.exists(gguf_model_path):

|

| 125 |

-

subprocess.run(["python", convert_script, model_dir, "--outfile", gguf_model_path, "--outtype", "f16"])

|

| 126 |

-

|

| 127 |

-

if delete_model_dir == 'yes' or delete_model_dir == 'y':

|

| 128 |

-

shutil.rmtree(model_dir)

|

| 129 |

-

print(f"Original model directory '{model_dir}' deleted.")

|

| 130 |

-

else:

|

| 131 |

-

print(f"Original model directory '{model_dir}' was not deleted. You can remove it manually.")

|

| 132 |

-

|

| 133 |

-

create_imatrix(base_dir, gguf_dir, gguf_model_path, model_name, imatrix_file_name)

|

| 134 |

-

|

| 135 |

-

def create_imatrix(base_dir, gguf_dir, gguf_model_path, model_name, imatrix_file_name):

|

| 136 |

-

imatrix_exe = os.path.join(base_dir, "bin", "llama-imatrix.exe")

|

| 137 |

-

imatrix_output_src = os.path.join(gguf_dir, "imatrix.dat")

|

| 138 |

-

imatrix_output_dst = os.path.join(gguf_dir, "imatrix.dat")

|

| 139 |

-

if not os.path.exists(imatrix_output_dst):

|

| 140 |

-

try:

|

| 141 |

-

subprocess.run([imatrix_exe, "-m", gguf_model_path, "-f", os.path.join(base_dir, "imatrix", imatrix_file_name), "-ngl", "7"], cwd=gguf_dir)

|

| 142 |

-

shutil.move(imatrix_output_src, imatrix_output_dst)

|

| 143 |

-

print("imatrix.dat moved successfully.")

|

| 144 |

-

except Exception as e:

|

| 145 |

-

print("Error occurred while moving imatrix.dat:", e)

|

| 146 |

-

else:

|

| 147 |

-

print("imatrix.dat already exists in the GGUF folder.")

|

| 148 |

-

|

| 149 |

-

quantize_models(base_dir, model_name)

|

| 150 |

-

|

| 151 |

-

def quantize_models(base_dir, model_name):

|

| 152 |

-

gguf_dir = os.path.join(base_dir, "models", f"{model_name}-GGUF")

|

| 153 |

-

bf16_gguf_path = os.path.join(gguf_dir, f"{model_name}-BF16.gguf")

|

| 154 |

-

|

| 155 |

-

quantization_options = [

|

| 156 |

-

"IQ3_M", "IQ3_XXS",

|

| 157 |

-

"Q4_K_M", "Q4_K_S", "IQ4_XS",

|

| 158 |

-

"Q5_K_M", "Q5_K_S",

|

| 159 |

-

"Q6_K",

|

| 160 |

-

"Q8_0"

|

| 161 |

-

]

|

| 162 |

-

|

| 163 |

-

for quant_option in quantization_options:

|

| 164 |

-

quantized_gguf_name = f"{model_name}-{quant_option}-imat.gguf"

|

| 165 |

-

quantized_gguf_path = os.path.join(gguf_dir, quantized_gguf_name)

|

| 166 |

-

quantize_command = os.path.join(base_dir, "bin", "llama-quantize.exe")

|

| 167 |

-

imatrix_path = os.path.join(gguf_dir, "imatrix.dat")

|

| 168 |

-

|

| 169 |

-

subprocess.run([quantize_command, "--imatrix", imatrix_path,

|

| 170 |

-

bf16_gguf_path, quantized_gguf_path, quant_option], cwd=gguf_dir)

|

| 171 |

-

print(f"Model quantized with {quant_option} option.")

|

| 172 |

-

|

| 173 |

-

def main():

|

| 174 |

-

clone_or_update_llama_cpp()

|

| 175 |

-

latest_release_tag = download_llama_release()

|

| 176 |

-

download_cudart_if_necessary(latest_release_tag)

|

| 177 |

-

download_model_repo()

|

| 178 |

-

print("Finished preparing resources.")

|

| 179 |

-

|

| 180 |

-

if __name__ == "__main__":

|

| 181 |

-

main()

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|
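The deleted BF16 script finds the newest llama.cpp build by requesting `/releases/latest` and reading the last path segment of the redirected URL. It then builds the Windows CUDA 12.2 asset names from that tag. Here is a minimal offline sketch of those two steps. The helper names are hypothetical, and the asset file names are copied from the script, so they may not match newer llama.cpp releases.

```python
RELEASES_URL = "https://github.com/ggerganov/llama.cpp/releases"


def tag_from_redirect(final_url):
    """Return the release tag from a redirected .../releases/tag/<tag> URL."""
    return final_url.rstrip("/").split("/")[-1]


def release_asset_urls(tag):
    """Return (binaries_zip_url, cudart_zip_url) for the Windows CUDA 12.2 builds."""
    base = f"{RELEASES_URL}/download/{tag}"
    return (
        f"{base}/llama-{tag}-bin-win-cuda-cu12.2.0-x64.zip",
        f"{base}/cudart-llama-bin-win-cu12.2.0-x64.zip",
    )


tag = tag_from_redirect(f"{RELEASES_URL}/tag/b1234")  # "b1234" is a made-up tag
print(release_asset_urls(tag))
```

In the original, `final_url` corresponds to `requests.get(latest_release_url).url`. That attribute holds the address after `requests` has followed GitHub's redirect.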

gguf-imat.py

CHANGED

```diff
@@ -70,7 +70,6 @@ def download_cudart_if_necessary(latest_release_tag):
 def download_model_repo():
     base_dir = os.path.dirname(os.path.abspath(__file__))
     models_dir = os.path.join(base_dir, "models")
-
     if not os.path.exists(models_dir):
         os.makedirs(models_dir)
 
@@ -78,27 +77,27 @@ def download_model_repo():
     model_name = model_id.split("/")[-1]
     model_dir = os.path.join(models_dir, model_name)
 
-
-
-    imatrix_file_name = input("Enter the name of the imatrix.txt file (default: imatrix.txt): ").strip() or "imatrix.txt"
-    delete_model_dir = input("Remove HF model folder after converting original model to GGUF? (yes/no) (default: no): ").strip().lower()
+    if os.path.exists(model_dir):
+        print("Model repository already exists. Using existing repository.")
 
-
-        create_imatrix(base_dir, gguf_dir, gguf_model_path, model_name, imatrix_file_name)
-    else:
-        if os.path.exists(model_dir):
-            print("Model repository already exists. Using existing repository.")
+        delete_model_dir = input("Remove HF model folder after converting original model to GGUF? (yes/no) (default: no): ").strip().lower()
 
-
+        imatrix_file_name = input("Enter the name of the imatrix.txt file (default: imatrix.txt): ").strip() or "imatrix.txt"
 
-
-
+        convert_model_to_gguf_f16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name)
+
+    else:
+        revision = input("Enter the revision (branch, tag, or commit) to download (default: main): ") or "main"
+
+        delete_model_dir = input("Remove HF model folder after converting original model to GGUF? (yes/no) (default: no): ").strip().lower()
+
+        print("Downloading model repository...")
+        snapshot_download(repo_id=model_id, local_dir=model_dir, revision=revision)
+        print("Model repository downloaded successfully.")
 
-
-            snapshot_download(repo_id=model_id, local_dir=model_dir, revision=revision)
-            print("Model repository downloaded successfully.")
+        imatrix_file_name = input("Enter the name of the imatrix.txt file (default: imatrix.txt): ").strip() or "imatrix.txt"
 
-
+        convert_model_to_gguf_f16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name)
 
 def convert_model_to_gguf_f16(base_dir, model_dir, model_name, delete_model_dir, imatrix_file_name):
     convert_script = os.path.join(base_dir, "llama.cpp", "convert.py")
@@ -116,17 +115,13 @@ def convert_model_to_gguf_f16(base_dir, model_dir, model_name, delete_model_dir,
         print(f"Original model directory '{model_dir}' deleted.")
     else:
         print(f"Original model directory '{model_dir}' was not deleted. You can remove it manually.")
-
-
-    create_imatrix(base_dir, gguf_dir, gguf_model_path, model_name, imatrix_file_name)
 
-def create_imatrix(base_dir, gguf_dir, gguf_model_path, model_name, imatrix_file_name):
     imatrix_exe = os.path.join(base_dir, "bin", "imatrix.exe")
     imatrix_output_src = os.path.join(gguf_dir, "imatrix.dat")
     imatrix_output_dst = os.path.join(gguf_dir, "imatrix.dat")
     if not os.path.exists(imatrix_output_dst):
         try:
-            subprocess.run([imatrix_exe, "-m", gguf_model_path, "-f", os.path.join(base_dir, "imatrix", imatrix_file_name), "-ngl", "
+            subprocess.run([imatrix_exe, "-m", gguf_model_path, "-f", os.path.join(base_dir, "imatrix", imatrix_file_name), "-ngl", "8"], cwd=gguf_dir)
             shutil.move(imatrix_output_src, imatrix_output_dst)
             print("imatrix.dat moved successfully.")
         except Exception as e:
```
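After this change, `download_model_repo()` calls `snapshot_download` only when the model folder is missing, and otherwise reuses the existing folder. The sketch below isolates that branch and leaves out the interactive prompts. `ensure_model_repo` is a hypothetical name. The downloader is passed in as a parameter, standing in for `huggingface_hub.snapshot_download`, so you can exercise the logic without network access.

```python
import os


def ensure_model_repo(model_id, models_dir, download, revision="main"):
    """Return the local folder for model_id, downloading it only when missing.

    download stands in for huggingface_hub.snapshot_download and is called
    with the same keyword arguments the script uses.
    """
    model_dir = os.path.join(models_dir, model_id.split("/")[-1])
    if os.path.exists(model_dir):
        print("Model repository already exists. Using existing repository.")
    else:
        print("Downloading model repository...")
        download(repo_id=model_id, local_dir=model_dir, revision=revision)
        print("Model repository downloaded successfully.")
    return model_dir
```

With the real library, the call would be `ensure_model_repo(model_id, models_dir, snapshot_download)` after `from huggingface_hub import snapshot_download`.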

requirements.txt

DELETED

```diff
@@ -1,4 +0,0 @@
--r ./llama.cpp/requirements/requirements-convert-legacy-llama.txt
--r ./llama.cpp/requirements/requirements-convert-hf-to-gguf.txt
--r ./llama.cpp/requirements/requirements-convert-hf-to-gguf-update.txt
--r ./llama.cpp/requirements/requirements-convert-llama-ggml-to-gguf.txt
```