Mixtral-8x7B-Instruct-v0.1-upscaled

This is a frankenmerge of mistralai/Mixtral-8x7B-Instruct-v0.1 created by interleaving layers of itself using mergekit.

Benchmark

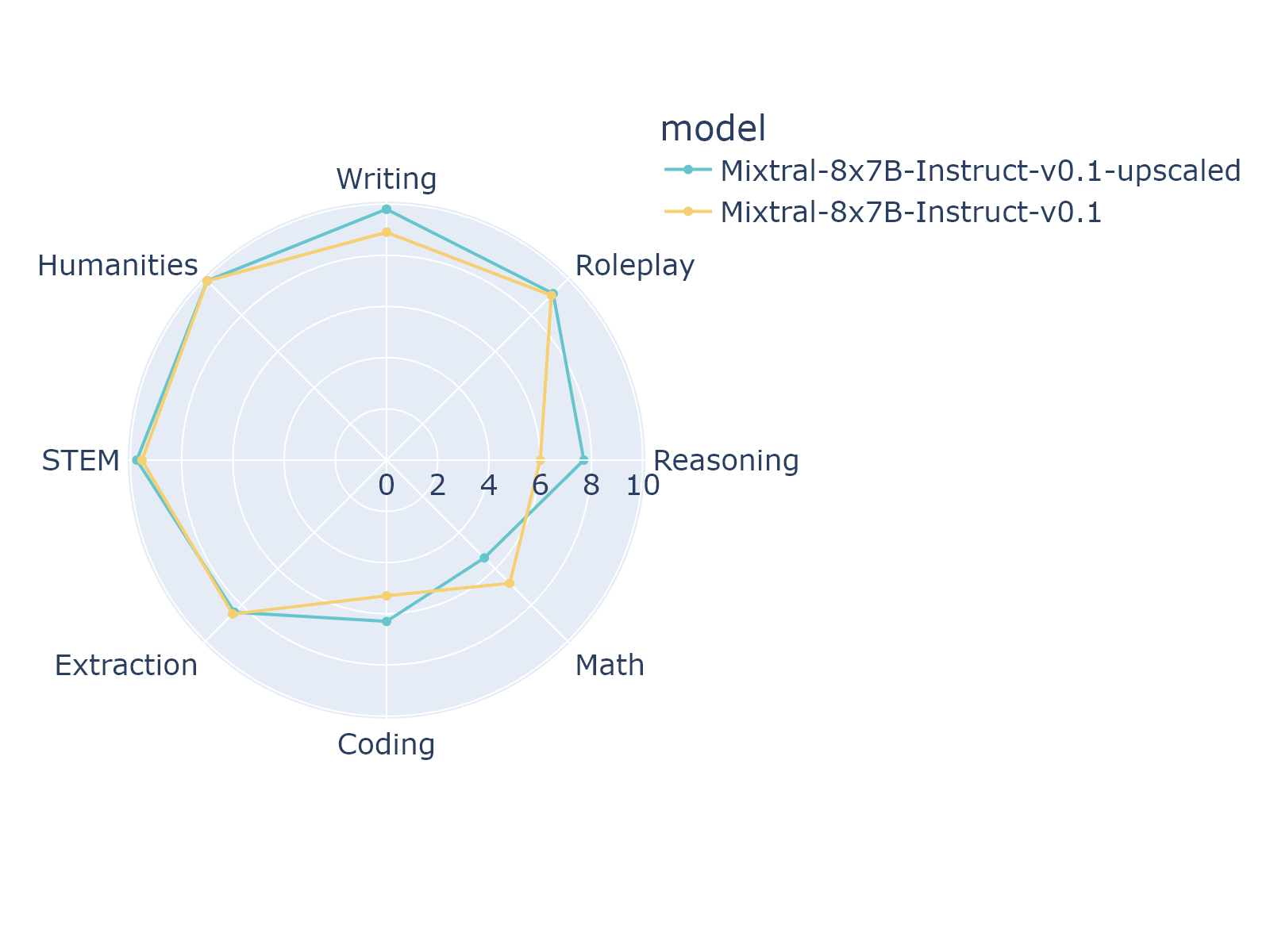

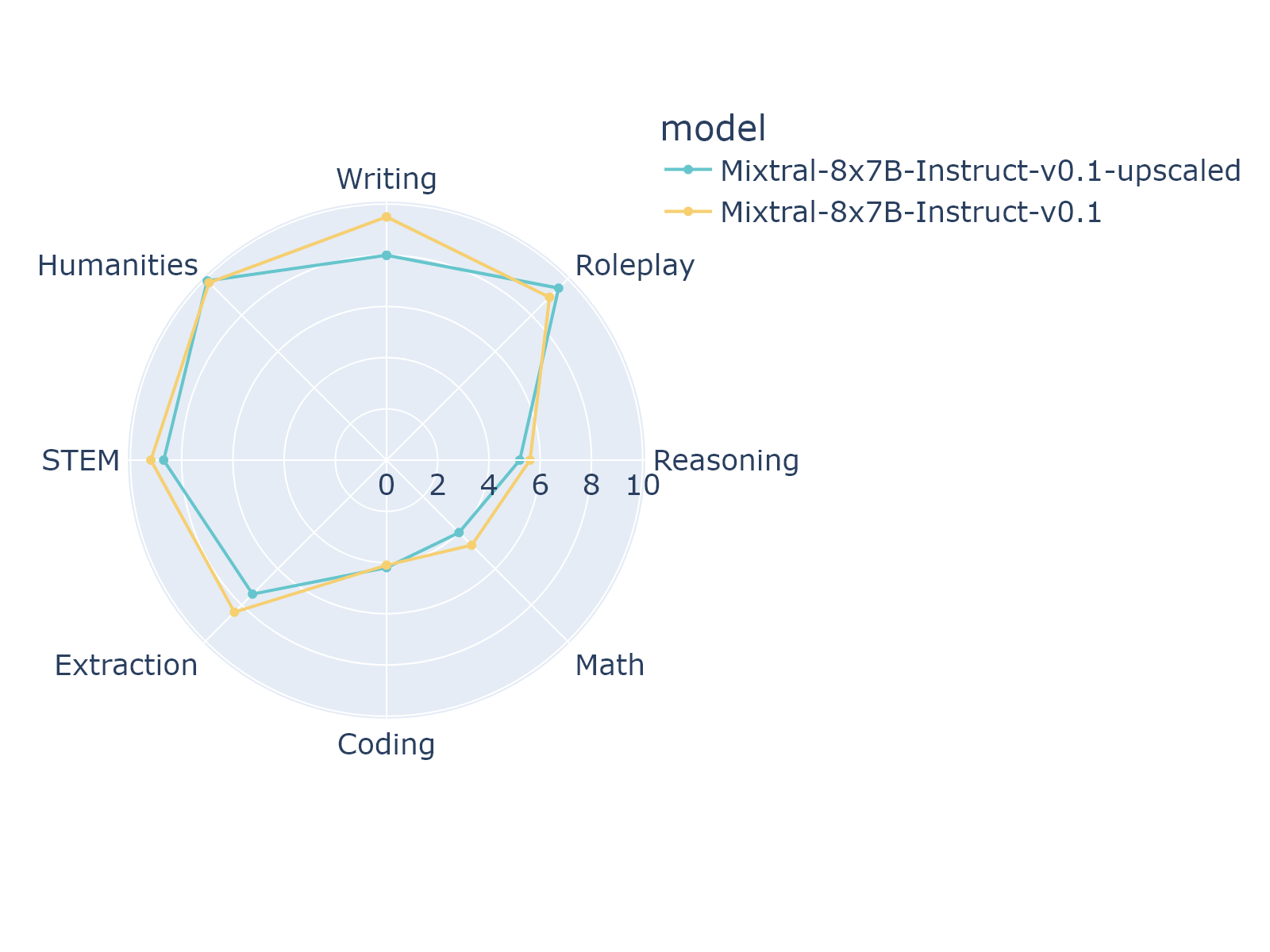

The MT-Bench scores for this model and the original model are as follows:

1-turn

| Model | Size | Coding | Extraction | Humanities | Math | Reasoning | Roleplay | STEM | Writing | avg_score |
|---|---|---|---|---|---|---|---|---|---|---|
| Mixtral-8x7B-Instruct-v0.1 | 8x7B | 5.3 | 8.5 | 9.9 | 6.8 | 6.0 | 9.1 | 9.55 | 8.9 | 8.00625 |
| This model | ~8x12B (est.) | 6.3 | 8.4 | 9.9 | 5.4 | 7.7 | 9.2 | 9.75 | 9.8 | 8.30625 |

2-turn

| Model | Size | Coding | Extraction | Humanities | Math | Reasoning | Roleplay | STEM | Writing | avg_score |
|---|---|---|---|---|---|---|---|---|---|---|
| Mixtral-8x7B-Instruct-v0.1 | 8x7B | 4.1 | 8.4 | 9.8 | 4.7 | 5.6 | 9.0 | 9.2 | 9.5 | 7.5375 |
| This model | ~8x12B (est.) | 4.2 | 7.4 | 9.9 | 4.0 | 5.2 | 9.5 | 8.7 | 8.0 | 7.1125 |
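As a quick sanity check, the avg_score column is the unweighted mean of the eight category scores. The Python sketch below recomputes it from the table values and lists the per-category change from the original model. The dictionary layout and the helper names (`avg_score`, `category_deltas`) are illustrative only and are not part of any MT-Bench tooling.

```python
# MT-Bench category scores copied from the tables above.
# Category order matches the table columns.
CATEGORIES = ["Coding", "Extraction", "Humanities", "Math",
              "Reasoning", "Roleplay", "STEM", "Writing"]

# Keyed by (model name, turn number); hypothetical layout for illustration.
SCORES = {
    ("Mixtral-8x7B-Instruct-v0.1", 1): [5.3, 8.5, 9.9, 6.8, 6.0, 9.1, 9.55, 8.9],
    ("This model", 1): [6.3, 8.4, 9.9, 5.4, 7.7, 9.2, 9.75, 9.8],
    ("Mixtral-8x7B-Instruct-v0.1", 2): [4.1, 8.4, 9.8, 4.7, 5.6, 9.0, 9.2, 9.5],
    ("This model", 2): [4.2, 7.4, 9.9, 4.0, 5.2, 9.5, 8.7, 8.0],
}


def avg_score(scores):
    """Unweighted mean of the eight category scores (the avg_score column)."""
    return sum(scores) / len(scores)


def category_deltas(turn):
    """Per-category difference (this model minus the original) for one turn."""
    base = SCORES[("Mixtral-8x7B-Instruct-v0.1", turn)]
    merged = SCORES[("This model", turn)]
    # Round to 2 decimals to hide floating-point noise such as 0.2000000000000007.
    return {c: round(m - b, 2) for c, b, m in zip(CATEGORIES, base, merged)}


if __name__ == "__main__":
    for (model, turn), scores in SCORES.items():
        print(f"turn {turn} | {model}: {avg_score(scores):.5f}")
    for turn in (1, 2):
        print(f"turn {turn} deltas:", category_deltas(turn))
```

Running it reproduces the avg_score values in both tables. It also makes the trade-off visible: the 1-turn gain comes mainly from Coding, Reasoning and Writing, while Math drops in both turns.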

Merge Details

Merge Method

This model was merged using the passthrough merge method.

Models Merged

The following models were included in the merge:

- mistralai/Mixtral-8x7B-Instruct-v0.1

Configuration

The following YAML configuration was used to produce this model:

```yaml
merge_method: passthrough
slices:
  - sources:
      - model: mistralai/Mixtral-8x7B-Instruct-v0.1
        layer_range: [0, 8]
  - sources:
      - model: mistralai/Mixtral-8x7B-Instruct-v0.1
        layer_range: [4, 12]
  - sources:
      - model: mistralai/Mixtral-8x7B-Instruct-v0.1
        layer_range: [8, 16]
  - sources:
      - model: mistralai/Mixtral-8x7B-Instruct-v0.1
        layer_range: [12, 20]
  - sources:
      - model: mistralai/Mixtral-8x7B-Instruct-v0.1
        layer_range: [16, 24]
  - sources:
      - model: mistralai/Mixtral-8x7B-Instruct-v0.1
        layer_range: [20, 28]
  - sources:
      - model: mistralai/Mixtral-8x7B-Instruct-v0.1
        layer_range: [24, 32]
dtype: bfloat16
tokenizer_source: base
```
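To see what the interleaving produces, the Python sketch below expands the slice list into the layer stack of the merged model. It assumes mergekit's `layer_range` is half-open (`[0, 8]` selects layers 0 to 7, like a Python slice) and that Mixtral-8x7B has 32 decoder layers. The "~8x12B" figure is only a crude estimate that scales the nominal "7B" linearly with depth and ignores embeddings; the helper names are hypothetical.

```python
from collections import Counter

# Slices copied from the configuration above, as half-open [start, end) ranges
# of mistralai/Mixtral-8x7B-Instruct-v0.1 decoder layers (assumed semantics).
SLICES = [(0, 8), (4, 12), (8, 16), (12, 20), (16, 24), (20, 28), (24, 32)]
ORIGINAL_LAYERS = 32  # decoder layers in Mixtral-8x7B


def expand_passthrough(slices):
    """Return the source-layer index for each layer of the merged model.

    A passthrough merge copies the selected layers unchanged (no weight
    averaging) and stacks the slices in order, so the result is simply the
    concatenation of the ranges.
    """
    layers = []
    for start, end in slices:
        layers.extend(range(start, end))
    return layers


def duplicated_layers(stacked):
    """Source layers that appear more than once in the merged stack."""
    counts = Counter(stacked)
    return sorted(i for i, n in counts.items() if n > 1)


if __name__ == "__main__":
    stacked = expand_passthrough(SLICES)
    ratio = len(stacked) / ORIGINAL_LAYERS
    print("merged depth:", len(stacked))
    print("duplicated source layers:", duplicated_layers(stacked))
    # Crude size estimate: scale the nominal per-expert "7B" by the depth ratio.
    print(f"depth ratio {ratio:.2f}, roughly 8x{7 * ratio:.1f}B")
```

Because consecutive slices overlap by 4 layers, every source layer from 4 to 27 appears twice, giving 56 layers instead of 32, or 1.75 times the original depth.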
