MixTAO-7Bx2-MoE-Instruct

MixTAO-7Bx2-MoE-Instruct is a Mixture of Experts (MoE) model.
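The card does not say how the experts are combined. The "7Bx2" name suggests two experts, but that is not confirmed here. As a rough illustration only, the sketch below shows a generic top-k gated MoE layer of the kind commonly used in Mixtral-style models: a router scores each expert, the `top_k` highest-scoring experts run, and their outputs are mixed with softmax weights. The function names, the linear router, and the default `top_k=2` are assumptions, not this model's documented configuration.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: Vector, experts: Sequence[Expert],
              gate: Sequence[Vector], top_k: int = 2) -> Vector:
    """Hypothetical top-k gated MoE layer (illustrative, not MixTAO's code).

    gate[i] is an assumed router weight row for expert i; its dot product
    with x gives the router logit for that expert.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate]
    # Keep the top_k experts with the highest router logits (ties keep input order).
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalize the gate weights over the chosen experts only.
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out
```

When `top_k` is smaller than the number of experts, only the chosen experts are evaluated for a given input, which is where an MoE layer saves compute compared with running every expert.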

💻 Usage

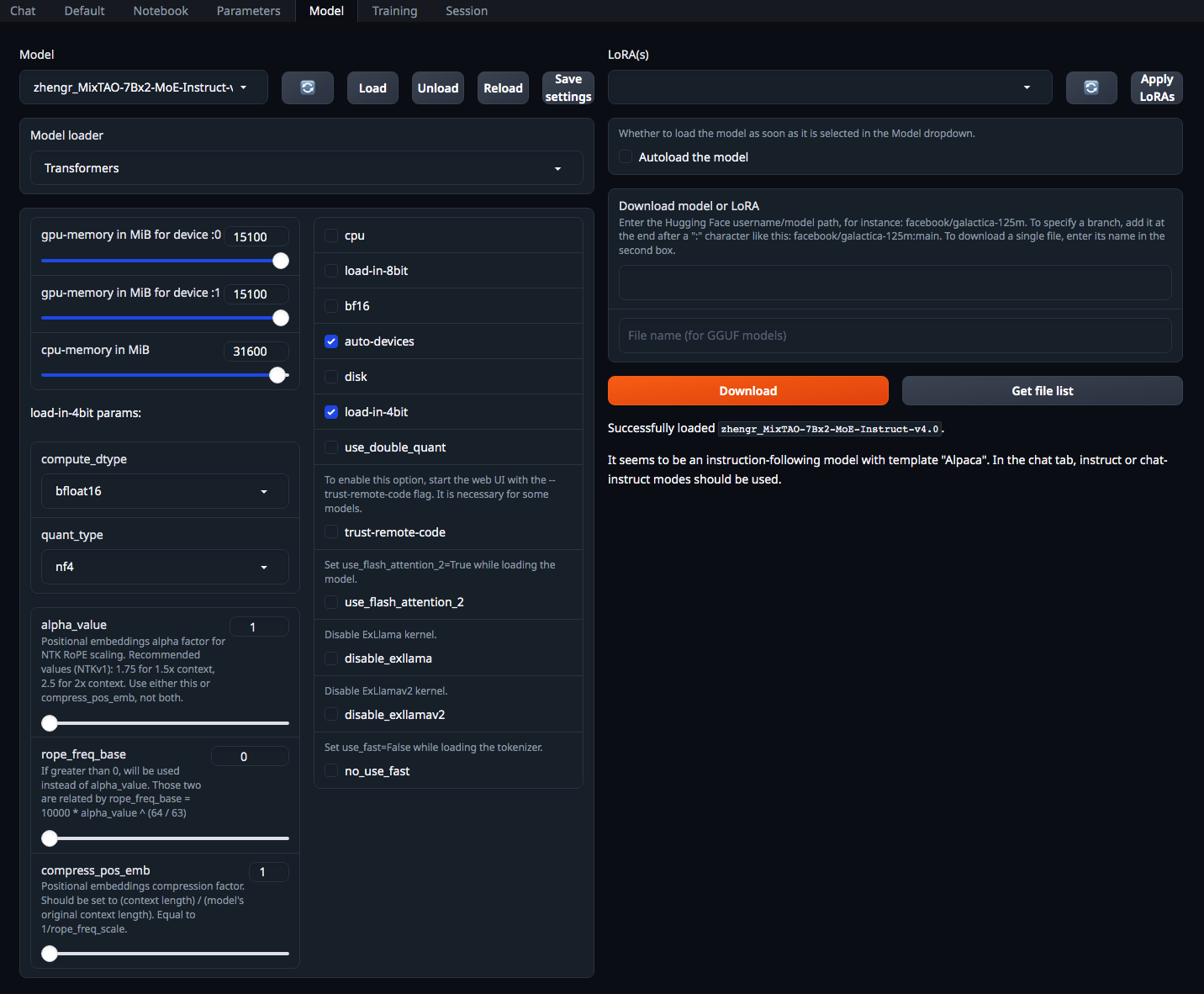

text-generation-webui - Model Tab

Chat template

```
{%- for message in messages %}
{%- if message['role'] == 'system' -%}
{{- message['content'] + '\n\n' -}}
{%- else -%}
{%- if message['role'] == 'user' -%}
{{- name1 + ': ' + message['content'] + '\n'-}}
{%- else -%}
{{- name2 + ': ' + message['content'] + '\n' -}}
{%- endif -%}
{%- endif -%}
{%- endfor -%}
```
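Because every tag in the chat template uses `-` whitespace control, the rendered prompt is a plain concatenation of the message pieces. The plain-Python sketch below reproduces that output for checking prompts by hand. The function name `render_chat` is hypothetical; `name1` and `name2` correspond to the template variables for the user and the other speaker, which text-generation-webui fills in.

```python
from typing import Dict, List


def render_chat(messages: List[Dict[str, str]], name1: str, name2: str) -> str:
    """Hypothetical re-implementation of the chat template's output."""
    parts = []
    for message in messages:
        if message["role"] == "system":
            # System text is emitted verbatim, followed by a blank line.
            parts.append(message["content"] + "\n\n")
        elif message["role"] == "user":
            parts.append(name1 + ": " + message["content"] + "\n")
        else:
            # Any non-system, non-user role is attributed to name2.
            parts.append(name2 + ": " + message["content"] + "\n")
    return "".join(parts)


print(render_chat(
    [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello!"}],
    name1="You", name2="AI",
))
```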

Instruction template: Alpaca

Change this according to the model/LoRA that you are using. Used in instruct and chat-instruct modes.

```
{%- set ns = namespace(found=false) -%}
{%- for message in messages -%}
{%- if message['role'] == 'system' -%}
{%- set ns.found = true -%}
{%- endif -%}
{%- endfor -%}
{%- if not ns.found -%}
{{- '' + 'Below is an instruction that describes a task. Write a response that appropriately completes the request.' + '\n\n' -}}
{%- endif %}
{%- for message in messages %}
{%- if message['role'] == 'system' -%}
{{- '' + message['content'] + '\n\n' -}}
{%- else -%}
{%- if message['role'] == 'user' -%}
{{-'### Instruction:\n' + message['content'] + '\n\n'-}}
{%- else -%}
{{-'### Response:\n' + message['content'] + '\n\n' -}}
{%- endif -%}
{%- endif -%}
{%- endfor -%}
{%- if add_generation_prompt -%}
{{-'### Response:\n'-}}
{%- endif -%}
```
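The Alpaca instruction template first checks whether any system message exists; if none does, it prepends the default Alpaca preamble. It then emits each message under an `### Instruction:` or `### Response:` header and, when `add_generation_prompt` is set, ends with an open `### Response:` header for the model to complete. The sketch below mirrors that logic in plain Python; `render_alpaca` and `DEFAULT_SYSTEM` are hypothetical names, and the string layout is taken directly from the template.

```python
from typing import Dict, List

# Default preamble used by the template when no system message is present.
DEFAULT_SYSTEM = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)


def render_alpaca(messages: List[Dict[str, str]], add_generation_prompt: bool = False) -> str:
    """Hypothetical re-implementation of the Alpaca instruction template."""
    parts = []
    if not any(m["role"] == "system" for m in messages):
        parts.append(DEFAULT_SYSTEM + "\n\n")
    for m in messages:
        if m["role"] == "system":
            parts.append(m["content"] + "\n\n")
        elif m["role"] == "user":
            parts.append("### Instruction:\n" + m["content"] + "\n\n")
        else:
            parts.append("### Response:\n" + m["content"] + "\n\n")
    if add_generation_prompt:
        # Leave an open response header for the model to continue.
        parts.append("### Response:\n")
    return "".join(parts)


print(render_alpaca([{"role": "user", "content": "Name three primes."}], add_generation_prompt=True))
```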

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

| Metric | Value |
|---|---|
| Avg. | 76.55 |
| AI2 Reasoning Challenge (25-Shot) | 74.23 |
| HellaSwag (10-Shot) | 89.37 |
| MMLU (5-Shot) | 64.54 |
| TruthfulQA (0-shot) | 74.26 |
| Winogrande (5-shot) | 87.77 |
| GSM8k (5-shot) | 69.14 |
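The Avg. row is consistent with a plain unweighted mean of the six benchmark scores: (74.23 + 89.37 + 64.54 + 74.26 + 87.77 + 69.14) / 6 = 459.31 / 6 ≈ 76.55. A quick check in Python, assuming the leaderboard averages the benchmarks without weighting:

```python
# Scores copied from the table; "Avg." is assumed to be their unweighted mean.
scores = {
    "AI2 Reasoning Challenge (25-Shot)": 74.23,
    "HellaSwag (10-Shot)": 89.37,
    "MMLU (5-Shot)": 64.54,
    "TruthfulQA (0-shot)": 74.26,
    "Winogrande (5-shot)": 87.77,
    "GSM8k (5-shot)": 69.14,
}

avg = sum(scores.values()) / len(scores)
print(f"Avg. = {avg:.2f}")  # prints "Avg. = 76.55"
```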


Spaces using zhengr/MixTAO-7Bx2-MoE-Instruct-v7.0: 2

Evaluation results

- normalized accuracy on AI2 Reasoning Challenge (25-Shot) test set (Open LLM Leaderboard): 74.230
- normalized accuracy on HellaSwag (10-Shot) validation set (Open LLM Leaderboard): 89.370
- accuracy on MMLU (5-Shot) test set (Open LLM Leaderboard): 64.540
- mc2 on TruthfulQA (0-shot) validation set (Open LLM Leaderboard): 74.260
- accuracy on Winogrande (5-shot) validation set (Open LLM Leaderboard): 87.770
- accuracy on GSM8k (5-shot) test set (Open LLM Leaderboard): 69.140