DAMA

Model

LLaMA model adapted to mitigate gender bias in text generation. For adaptation, we used the Debiasing Algorithm through Model Adaptation (DAMA) method described in Limisiewicz et al., 2024.

Model Description

- Developed by: Tomasz Limisiewicz, David Mareček, Tomáš Musil

- Funded by: Grant Agency of the Czech Republic

- Language(s) (NLP): English

- Adapted from model: LLaMA

Model Sizes

Model Sources

Bias, Risks, and Limitations

DAMA mitigates the gender bias of the original model. It is therefore better suited than the original model for generating and processing texts in sensitive domains, such as hiring, social services, or professional counseling. Still, we recommend caution in such use cases because the bias is not entirely erased, as is the case with any other currently available method.

Adaptation

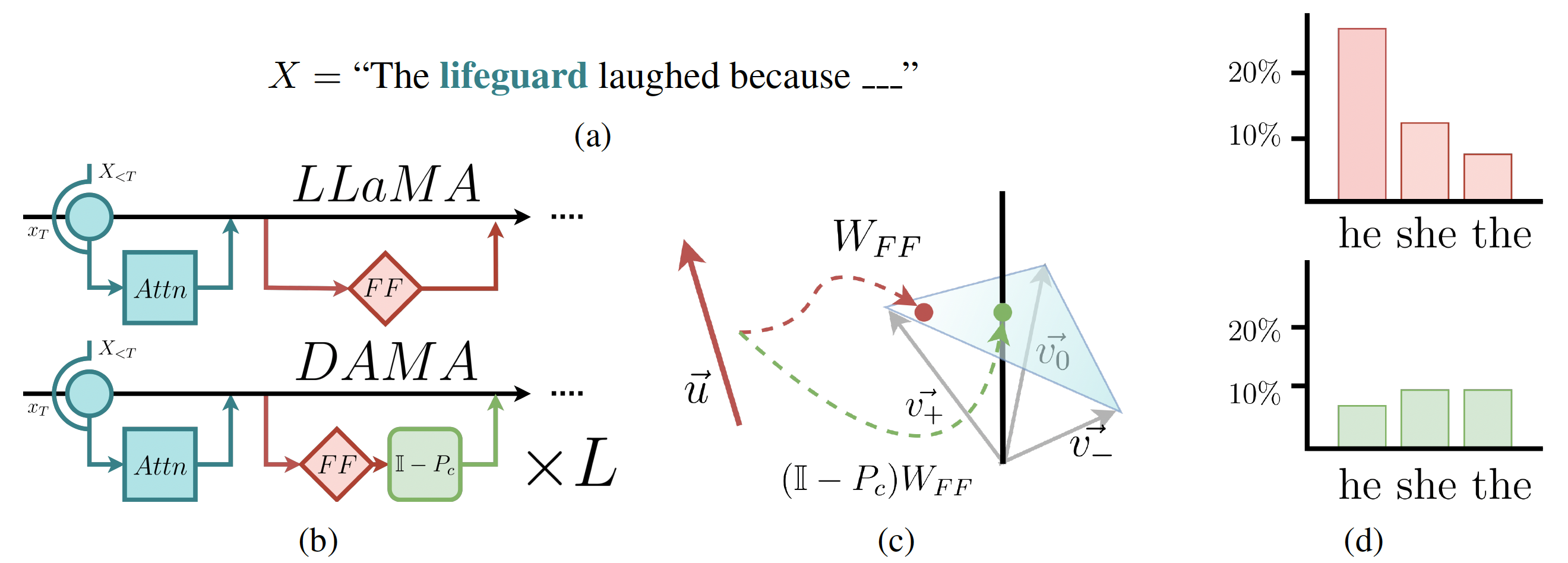

Schema (b) shows DAMA intervention in a LLaMA layer.

Even though I - P_c is depicted as a separate module, in practice, it is multiplied with the output matrix of a feed-forward layer (W_FF).

Therefore, DAMA is neutral to the model's parameter count and architecture.

Schema (a) shows the behavior of the model when presented with a stereotypical prompt.

Specifically, (c) shows the projections of the feed-forward latent vector (u) onto the output space.

With DAMA (lower arrow), we nullify the gender component of the representation.

This results in balanced probabilities of gendered tokens in the model's output, as shown in (d).

The method for obtaining P_c is based on the Partial Least Squares algorithm.

For more details, please refer to the paper.
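The folding of I - P_c into W_FF can be illustrated with a small NumPy sketch. Everything in it is an assumption made for illustration: the dimensions are toy-sized, `W_ff` is random, and the basis `B` is a random orthonormal matrix standing in for the directions that the paper derives with Partial Least Squares.

```python
import numpy as np

rng = np.random.default_rng(0)
d_ff, d_out, k = 16, 8, 2  # toy sizes, not LLaMA's real dimensions

# Hypothetical stand-ins: a random output matrix and a random k-dimensional
# "gender" subspace. In DAMA the subspace comes from PLS, not from QR.
W_ff = rng.normal(size=(d_out, d_ff))
B, _ = np.linalg.qr(rng.normal(size=(d_out, k)))  # orthonormal columns
P_c = B @ B.T                                     # orthogonal projection onto span(B)

# Fold the intervention into the existing weights: same shape, no new parameters.
W_dama = (np.eye(d_out) - P_c) @ W_ff

u = rng.normal(size=d_ff)  # feed-forward latent vector

separate_module = (np.eye(d_out) - P_c) @ (W_ff @ u)  # I - P_c as its own step
folded = W_dama @ u                                   # I - P_c merged into W_FF

same_output = np.allclose(separate_module, folded)
residual = np.abs(B.T @ folded).max()  # what is left inside the subspace

print(W_dama.shape == W_ff.shape, same_output, residual < 1e-10)
```

Because the product (I - P_c) W_FF has the same shape as W_FF, the adapted layer can replace the original one without any change to the architecture.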

Use

The following snippet shows the basic usage of DAMA for text generation.

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

DAMA_SIZE = '7B'
OUTPUT_DIR = 'output'  # folder for weights offloaded to disk

# Load the model in half precision; device_map='auto' places it on the available devices
model = AutoModelForCausalLM.from_pretrained(
    f"ufal/DAMA-{DAMA_SIZE}",
    offload_folder=OUTPUT_DIR,
    torch_dtype=torch.float16,
    low_cpu_mem_usage=True,
    device_map='auto',
)
tokenizer = AutoTokenizer.from_pretrained(f"ufal/DAMA-{DAMA_SIZE}", use_fast=True, return_token_type_ids=False)

prompt = "The lifeguard laughed because"
# Move the inputs to the device that holds the model's first layers
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

generate_ids = model.generate(inputs.input_ids, max_length=30)
print(tokenizer.batch_decode(generate_ids, skip_special_tokens=True)[0])
```
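To check how balanced the gendered tokens are after a prompt, one could compare their next-token probabilities. The helper below is a hypothetical sketch that uses only the standard library; the function name, the four-token vocabulary, and the logit values are all made up for illustration.

```python
import math

def token_probs(logits):
    """Softmax over a dict mapping token -> logit (numerically stable)."""
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Made-up next-token logits over a toy four-token vocabulary.
toy_logits = {"he": 2.0, "she": 2.0, "they": 1.0, "the": 0.5}
probs = token_probs(toy_logits)

gap = probs["he"] - probs["she"]  # 0 means both pronouns are equally likely
print(f"p(he)={probs['he']:.3f} p(she)={probs['she']:.3f} gap={gap:+.3f}")
```

With the model loaded as in the snippet above, real logits for the position after the prompt could be read from `model(**inputs).logits[0, -1]`. The token ids of the pronouns depend on the tokenizer and would need to be looked up.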

Evaluation

We evaluate the models on multiple benchmarks to assess gender bias and language understanding capabilities. DAMA models are compared with the original LLaMA models.

Bias Evaluation

We introduced a metric for evaluating gender bias in text generation.

It measures the extent to which the models' output is affected by stereotypical (a_s) and factual (a_f) gender signals.

Moreover, we provide the scores for two established bias benchmarks: WinoBias and StereoSet.
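One way to read the coefficients a_s, a_f, and b is as the fit of a linear model. The sketch below assumes a reading this card does not spell out: a per-prompt gender score y from the model is regressed on a stereotypical signal x_s and a factual signal x_f, with b as the intercept. All data is synthetic and the variable names are illustrative; see the paper for the exact definition.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200

# Synthetic per-prompt gender signals (made up, not taken from any benchmark).
x_s = rng.uniform(-1, 1, size=n)  # stereotypical signal
x_f = rng.uniform(-1, 1, size=n)  # factual signal

# Synthetic model scores generated from known coefficients, without noise.
true_a_s, true_a_f, true_b = 0.25, 0.30, 0.05
y = true_a_s * x_s + true_a_f * x_f + true_b

# Ordinary least squares for y ~ a_s * x_s + a_f * x_f + b.
X = np.column_stack([x_s, x_f, np.ones(n)])
(a_s, a_f, b), *_ = np.linalg.lstsq(X, y, rcond=None)

print(f"a_s={a_s:.3f} a_f={a_f:.3f} b={b:.3f}")
```

Under this reading, a smaller a_s indicates a weaker dependence of the output on stereotypical cues, and b captures a constant preference that is independent of both signals.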

Results

| Model | Bias in LM: a_s | Bias in LM: a_f | Bias in LM: b | WinoBias: Acc | WinoBias: Delta S | WinoBias: Delta G | StereoSet: lms | StereoSet: ss | StereoSet: ICAT |
|---|---|---|---|---|---|---|---|---|---|
| LLaMA 7B | 0.235 | 0.320 | 0.072 | 59.1% | 40.3% | 3.0% | 95.5 | 71.9 | 53.7 |
| DAMA 7B | -0.005 | 0.038 | -0.006 | 57.3% | 31.5% | 2.3% | 95.5 | 69.3 | 58.5 |
| LLaMA 13B | 0.270 | 0.351 | 0.070 | 70.5% | 35.7% | -1.5% | 95.2 | 71.4 | 54.4 |
| DAMA 13B | 0.148 | 0.222 | 0.059 | 66.4% | 31.1% | -1.1% | 94.4 | 68.6 | 59.4 |
| LLaMA 33B | 0.265 | 0.343 | 0.092 | 71.0% | 36.0% | -4.0% | 94.7 | 68.4 | 59.9 |
| DAMA 33B | 0.105 | 0.172 | 0.059 | 63.7% | 26.7% | -3.7% | 94.8 | 65.7 | 65.0 |
| LLaMA 65B | 0.249 | 0.316 | 0.095 | 73.3% | 35.7% | 1.4% | 94.9 | 69.5 | 57.9 |
| DAMA 65B | 0.185 | 0.251 | 0.100 | 71.1% | 27.2% | 0.8% | 92.8 | 67.1 | 61.1 |

Bias evaluation for the LLaMA models and their debiased instances.
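The ICAT column combines the other two StereoSet scores. As defined by the StereoSet benchmark, ICAT = lms * min(ss, 100 - ss) / 50, so the score is highest when lms is 100 and ss is 50. A small sketch with a hypothetical function name:

```python
def icat(lms: float, ss: float) -> float:
    """Idealized CAT score from the language-modeling (lms) and stereotype (ss) scores."""
    return lms * min(ss, 100.0 - ss) / 50.0

# LLaMA 7B row from the table above: lms = 95.5, ss = 71.9
print(round(icat(95.5, 71.9), 1))  # 53.7
```

Recomputing ICAT from the rounded lms and ss values in the table may differ from the reported value in the last digit.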

Performance Evaluation

To check the effect of debiasing on LM capabilities, we compute perplexity on a Wikipedia corpus. We also test performance on four language understanding end-tasks: OpenBookQA (OBQA), the AI2 Reasoning Challenge (Easy and Challenge sets, ARC-E and ARC-C), and Massive Multitask Language Understanding (MMLU).
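Perplexity is the exponential of the average negative log-likelihood per token, so lower values mean the model finds the text less surprising. A minimal sketch, using made-up token probabilities rather than model output:

```python
import math

def perplexity(token_log_probs):
    """exp of the mean negative natural-log probability per token."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

# Made-up example: every token is predicted with probability 1/4.
log_probs = [math.log(0.25)] * 10
print(perplexity(log_probs))  # approximately 4.0
```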

Results

| Model | Perplexity | ARC-C | ARC-E | OBQA | MMLU |
|---|---|---|---|---|---|
| LLaMA 7B | 26.1 | 42.2 | 69.1 | 57.2 | 30.3 |
| DAMA 7B | 28.9 | 41.8 | 68.3 | 56.2 | 30.8 |
| LLaMA 13B | 19.8 | 44.9 | 70.6 | 55.4 | 43.3 |
| DAMA 13B | 21.0 | 44.7 | 70.3 | 56.2 | 43.5 |
| LLaMA 33B | 20.5 | 47.4 | 72.9 | 59.2 | 55.7* |
| DAMA 33B | 19.6 | 45.2 | 71.6 | 58.2 | 56.1* |
| LLaMA 65B | 19.5 | 44.5 | 73.9 | 59.6 | ---* |
| DAMA 65B | 20.1 | 40.5 | 67.7 | 57.2 | ---* |

Performance evaluation for the LLaMA models and their debiased instances. (*) Due to hardware limitations, we could not run MMLU inference for the 65B models. In the evaluation of the 33B models, we excluded the 4% longest prompts.

Citation

BibTeX:

@inproceedings{

limisiewicz2024debiasing,

title={Debiasing Algorithm through Model Adaptation},

author={Tomasz Limisiewicz and David Mare{\v{c}}ek and Tom{\'a}{\v{s}} Musil},

booktitle={The Twelfth International Conference on Learning Representations},

year={2024},

url={https://openreview.net/forum?id=XIZEFyVGC9}

}

APA:

Limisiewicz, T., Mareček, D., & Musil, T. (2024). Debiasing Algorithm through Model Adaptation. The Twelfth International Conference on Learning Representations.

Model Card Author
