ASLNow!

ASLNow! is a web app designed to make learning ASL fingerspelling easy and fun! You can try it live at asl-now.vercel.app.

Demo: https://www.youtube.com/watch?v=Wi5tAxVasq8

Model

This model, trained on the isolated fingerspelling dataset, is licensed under the MIT License. It will be updated frequently as more data is collected.

Format

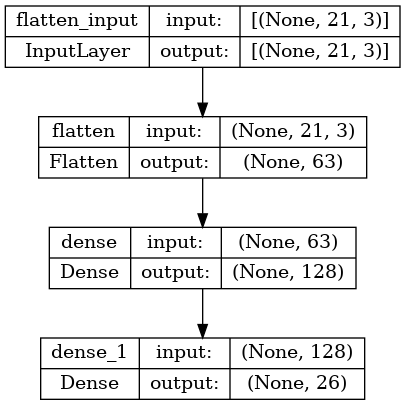

Input

21 hand landmarks, each composed of x, y and z coordinates. The x and y coordinates are normalized to [0.0, 1.0] by the image width and height, respectively. The z coordinate represents the landmark depth, with the depth at the wrist being the origin. The smaller the value, the closer the landmark is to the camera. The magnitude of z uses roughly the same scale as x.

From: https://developers.google.com/mediapipe/solutions/vision/hand_landmarker


Example:

    [
        # Landmark 1
        [x, y, z],
        # Landmark 2
        [x, y, z],
        ...
        # Landmark 20
        [x, y, z],
        # Landmark 21
        [x, y, z]
    ]
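The snippet below is a minimal sketch of building this 21 × 3 input from one detected hand. It assumes each landmark exposes `x`, `y` and `z` attributes, as MediaPipe's normalized landmarks do. The `Landmark` dataclass and `landmarks_to_input` function are hypothetical names chosen so the sketch runs without MediaPipe installed. Whether the model also expects a batch dimension or a flattened vector is not stated here, so treat that as an open assumption.

```python
from dataclasses import dataclass
from typing import List, Sequence

NUM_LANDMARKS = 21  # number of hand landmarks the model expects


@dataclass
class Landmark:
    """Hypothetical stand-in for a MediaPipe normalized landmark."""
    x: float  # normalized to [0.0, 1.0] by image width
    y: float  # normalized to [0.0, 1.0] by image height
    z: float  # depth relative to the wrist; smaller means closer to the camera


def landmarks_to_input(landmarks: Sequence[Landmark]) -> List[List[float]]:
    """Convert one hand's landmarks into the [[x, y, z], ...] layout (21 rows)."""
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    # Keep the landmark order unchanged: row i holds landmark i + 1.
    return [[lm.x, lm.y, lm.z] for lm in landmarks]


if __name__ == "__main__":
    # Dummy hand: every landmark at the image center, at wrist depth.
    sample = landmarks_to_input([Landmark(0.5, 0.5, 0.0)] * NUM_LANDMARKS)
    print(len(sample), len(sample[0]))  # 21 3
```

Validating the landmark count up front means a partially detected hand fails loudly instead of being passed to the model with the wrong shape.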

Output

A probability for each of the 26 classes (the letters A to Z), indexed as follows:

    {
        "A": 0, "B": 1, "C": 2, "D": 3, "E": 4, "F": 5, "G": 6,
        "H": 7, "I": 8, "J": 9, "K": 10, "L": 11, "M": 12, "N": 13,
        "O": 14, "P": 15, "Q": 16, "R": 17, "S": 18, "T": 19, "U": 20,
        "V": 21, "W": 22, "X": 23, "Y": 24, "Z": 25
    }
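To turn the output into a letter, take the index with the highest probability and look it up in the mapping above. This is a minimal sketch that assumes the model returns a flat sequence of 26 probabilities in that index order. `decode_prediction` is a hypothetical helper name, not part of the model's published interface.

```python
from typing import Dict, Sequence

# Class-to-index mapping from the Output section: "A" -> 0, ..., "Z" -> 25.
CLASS_TO_INDEX: Dict[str, int] = {chr(ord("A") + i): i for i in range(26)}
INDEX_TO_CLASS: Dict[int, str] = {i: c for c, i in CLASS_TO_INDEX.items()}


def decode_prediction(probs: Sequence[float]) -> str:
    """Return the letter whose class probability is highest (argmax)."""
    if len(probs) != len(CLASS_TO_INDEX):
        raise ValueError(f"expected {len(CLASS_TO_INDEX)} probabilities, got {len(probs)}")
    best = max(range(len(probs)), key=lambda i: probs[i])
    return INDEX_TO_CLASS[best]


if __name__ == "__main__":
    probs = [0.0] * 26
    probs[2] = 0.9  # most of the mass on index 2
    print(decode_prediction(probs))  # C
```

Building the mapping from `chr(ord("A") + i)` reproduces the table above exactly, since the classes are simply the alphabet in order.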
