T5-base fine-tuned on WikiSQL

Google's T5 fine-tuned on WikiSQL for English to SQL translation.

Details of T5

The T5 model was presented in "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer" by Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J. Liu. Here is the abstract:

Transfer learning, where a model is first pre-trained on a data-rich task before being fine-tuned on a downstream task, has emerged as a powerful technique in natural language processing (NLP). The effectiveness of transfer learning has given rise to a diversity of approaches, methodology, and practice. In this paper, we explore the landscape of transfer learning techniques for NLP by introducing a unified framework that converts every language problem into a text-to-text format. Our systematic study compares pre-training objectives, architectures, unlabeled datasets, transfer approaches, and other factors on dozens of language understanding tasks. By combining the insights from our exploration with scale and our new “Colossal Clean Crawled Corpus”, we achieve state-of-the-art results on many benchmarks covering summarization, question answering, text classification, and more. To facilitate future work on transfer learning for NLP, we release our dataset, pre-trained models, and code.

Details of the Dataset 📚

Dataset ID: wikisql from Huggingface/NLP

| Dataset | Split | # samples |
|---|---|---|
| wikisql | train | 56355 |
| wikisql | valid | 14436 |

How to load it from nlp

import nlp

train_dataset = nlp.load_dataset('wikisql', split=nlp.Split.TRAIN)

valid_dataset = nlp.load_dataset('wikisql', split=nlp.Split.VALIDATION)
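As a rough illustration of how a loaded record could be turned into a text-to-text pair, here is a minimal sketch. It assumes each record has a `question` string and a `sql` dictionary with a `human_readable` entry; that field layout is an assumption about the dataset schema, not something stated in this card. The helper `to_text_pair` and the sample record are hypothetical, and the prefix is the one used in the inference example in this card.

```python
from typing import Dict, Tuple

# Task prefix, copied from the inference example in this card.
PREFIX = "translate English to SQL: "


def to_text_pair(record: Dict) -> Tuple[str, str]:
    """Map one WikiSQL-style record to (source_text, target_text)."""
    source = PREFIX + record["question"].strip()
    target = record["sql"]["human_readable"].strip()
    return source, target


# Hypothetical record, shaped like the assumed dataset schema.
sample = {
    "question": "How many models were finetuned using BERT as base model?",
    "sql": {
        "human_readable": "SELECT COUNT Model fine tuned FROM table WHERE Base model = BERT"
    },
}

source, target = to_text_pair(sample)
print(source)
print(target)
```

The same function could be applied to every record of `train_dataset` and `valid_dataset` before tokenization, provided the real records match the assumed fields.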

Check out more about this dataset and others in NLP Viewer

Model fine-tuning 🏋️

The training script is a slightly modified version of this Colab Notebook created by Suraj Patil, so all credit goes to him!

Model in Action 🚀

from transformers import AutoModelWithLMHead, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("mrm8488/t5-base-finetuned-wikiSQL")

model = AutoModelWithLMHead.from_pretrained("mrm8488/t5-base-finetuned-wikiSQL")

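def get_sql(query):
    # Prepend the task prefix and encode the question as PyTorch tensors
    input_text = "translate English to SQL: %s </s>" % query
    features = tokenizer([input_text], return_tensors='pt')
    # Generate the output token ids for the encoded question
    output = model.generate(input_ids=features['input_ids'],
                            attention_mask=features['attention_mask'])
    # Decode the first (and only) generated sequence back to text
    return tokenizer.decode(output[0])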

query = "How many models were finetuned using BERT as base model?"

get_sql(query)

# output: 'SELECT COUNT Model fine tuned FROM table WHERE Base model = BERT'
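Depending on the installed `transformers` version, the string returned by `tokenizer.decode` may still contain special tokens such as `<pad>` and `</s>`. The sketch below shows one way to strip them after decoding; `clean_sql` is a hypothetical helper, and the raw string is an assumed example of such output rather than a recorded result. Passing `skip_special_tokens=True` to `tokenizer.decode` is the built-in alternative.

```python
import re

# T5 padding and end-of-sequence markers that may appear in decoded text.
SPECIAL_TOKENS = ("<pad>", "</s>")


def clean_sql(decoded: str) -> str:
    """Remove T5 special tokens and collapse repeated whitespace."""
    for token in SPECIAL_TOKENS:
        decoded = decoded.replace(token, " ")
    return re.sub(r"\s+", " ", decoded).strip()


# Assumed example of a decoded string that still carries special tokens.
raw = "<pad> SELECT COUNT Model fine tuned FROM table WHERE Base model = BERT</s>"
print(clean_sql(raw))
# SELECT COUNT Model fine tuned FROM table WHERE Base model = BERT
```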

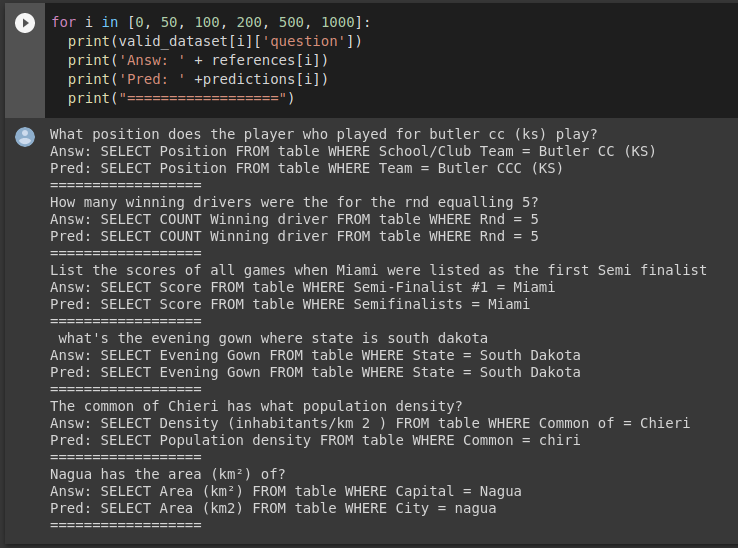

Other examples from validation dataset:

Created by Manuel Romero/@mrm8488 | LinkedIn

Made with ♥ in Spain
