Finetuning Overview:

Model Used: HuggingFaceH4/zephyr-7b-alpha

Dataset: meta-math/MetaMathQA

Dataset Insights:

The MetaMathQA dataset is a newly created dataset specifically designed for enhancing the mathematical reasoning capabilities of large language models (LLMs). It is built by bootstrapping mathematical questions and rewriting them from multiple perspectives, providing a comprehensive and challenging environment for LLMs to develop and refine their mathematical problem-solving skills.

Finetuning Details:

Using MonsterAPI's LLM finetuner, this finetuning:

- Was conducted with efficiency and cost-effectiveness in mind.

- Completed in a total duration of 10.9 hours for 0.5 epoch using an A6000 48GB GPU.

- Cost $22.01 for the entire finetuning process.
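The figures above imply an hourly rate and a rough per-epoch cost. The sketch below is only arithmetic on the reported numbers; the full-epoch values assume time and cost scale linearly with epochs, which the source does not confirm.

```python
# Reported figures from this finetuning run.
total_cost_usd = 22.01
duration_hours = 10.9
epochs_run = 0.5

# Implied hourly rate for the A6000 48GB run.
cost_per_hour = total_cost_usd / duration_hours

# Extrapolation to one full epoch (ASSUMPTION: linear scaling).
hours_per_epoch = duration_hours / epochs_run
cost_per_epoch = total_cost_usd / epochs_run

print(f"Cost per hour:  ${cost_per_hour:.2f}")
print(f"Hours / epoch:  {hours_per_epoch:.1f} (estimated)")
print(f"Cost / epoch:   ${cost_per_epoch:.2f} (estimated)")
```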

Hyperparameters & Additional Details:

- Epochs: 0.5

- Total Finetuning Cost: $22.01

- Model Path: HuggingFaceH4/zephyr-7b-alpha

- Learning Rate: 0.0001

- Data Split: 95% train, 5% validation

- Gradient Accumulation Steps: 4
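As an illustration of the 95%/5% data split, here is a minimal sketch in plain Python. How the MonsterAPI finetuner actually shuffles and splits the data is not documented here, so the function name `split_train_validation`, the shuffling step, and the seed are all assumptions.

```python
import random


def split_train_validation(examples, validation_fraction=0.05, seed=0):
    """Shuffle examples and hold out a fraction for validation.

    Hypothetical helper: the real finetuner's split logic may differ.
    """
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_validation = round(len(shuffled) * validation_fraction)
    validation = shuffled[:n_validation]
    train = shuffled[n_validation:]
    return train, validation


# Usage with placeholder records standing in for dataset rows.
train, validation = split_train_validation(range(100))
print(len(train), len(validation))
```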

Prompt Structure:

Below is an instruction that describes a task. Write a response that appropriately completes the request.

###Instruction:[query]

###Response:[response]
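The template can be filled in programmatically. The sketch below assumes the blank lines between sections are exactly as shown above and that no space follows the colons; the helper name `build_prompt` is hypothetical. Leave `response` empty at inference time so the model generates the text after `###Response:`.

```python
# Template text copied from the prompt structure above.
# ASSUMPTION: sections are separated by one blank line, with no space after the colons.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "###Instruction:{query}\n\n"
    "###Response:"
)


def build_prompt(query: str, response: str = "") -> str:
    """Return the full prompt; pass a response only when building training text."""
    return PROMPT_TEMPLATE.format(query=query) + response


# Inference-style prompt: ends right after "###Response:".
print(build_prompt("What is 12 * 7?"))
```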

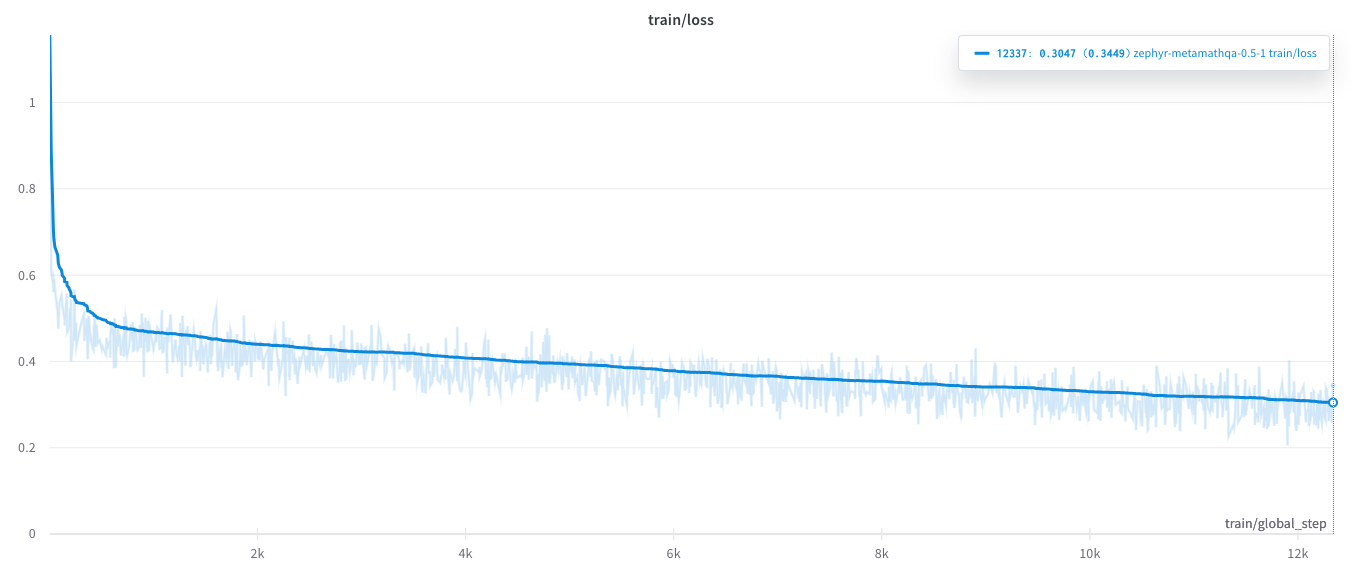

Training loss:

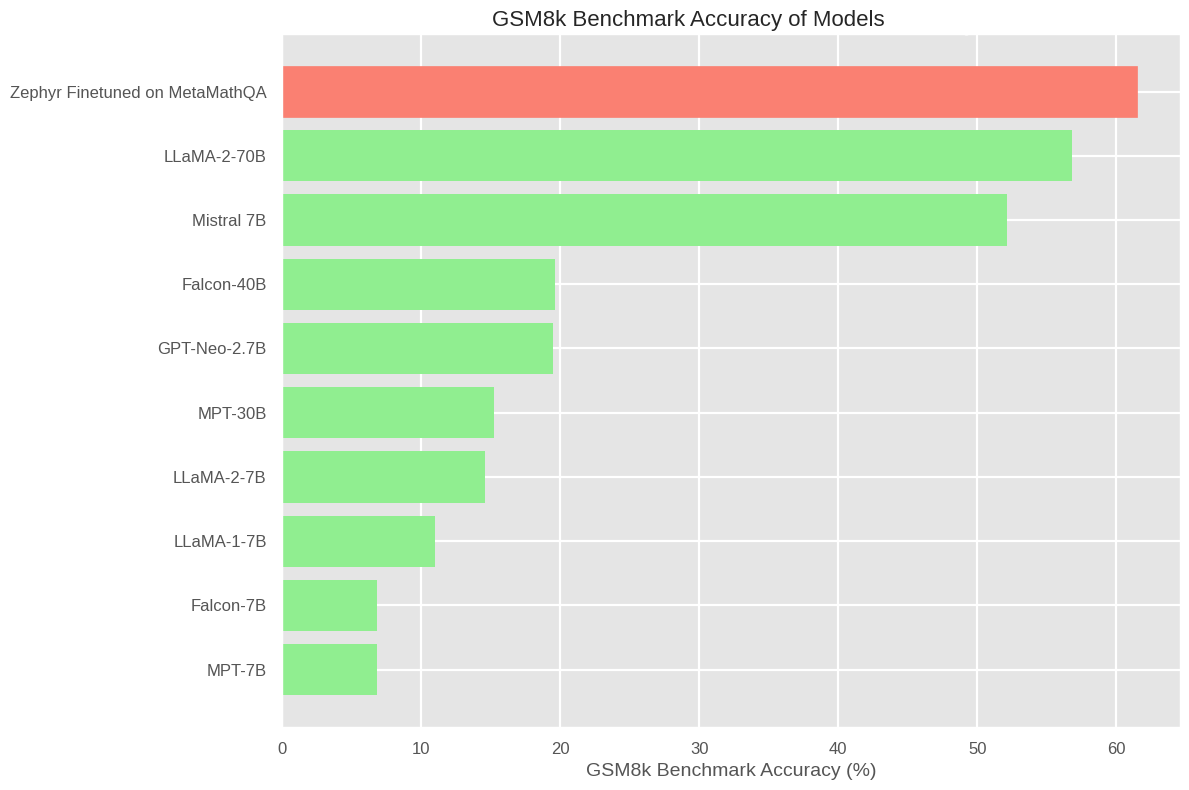

Benchmark Results:

GSM8K is a dataset of 8.5K high-quality, linguistically diverse grade school math word problems. These problems take between 2 and 8 steps to solve, and solutions primarily involve performing a sequence of elementary calculations using basic arithmetic operations (+ − × ÷) to reach the final answer. A bright middle school student should be able to solve every problem. It is a widely used industry benchmark for testing an LLM's multi-step mathematical reasoning.
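For readers who want to score generations against GSM8K themselves, a minimal sketch follows. GSM8K reference solutions end with a line of the form `#### <answer>`; taking the last number in the model's output as its prediction is a common heuristic and an assumption here, not necessarily the procedure used to produce the results for this model. All function names are hypothetical.

```python
import re

# Matches integers and decimals, optionally negative, with thousands separators.
_NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def extract_reference_answer(solution: str) -> str:
    """Return the final answer that follows '####' in a GSM8K solution."""
    return solution.split("####")[-1].strip().replace(",", "")


def extract_predicted_answer(generation: str):
    """ASSUMPTION: treat the last number in the generation as the answer."""
    matches = _NUMBER.findall(generation)
    if not matches:
        return None
    return matches[-1].replace(",", "")


def is_correct(generation: str, solution: str) -> bool:
    """Compare predicted and reference answers numerically."""
    predicted = extract_predicted_answer(generation)
    if predicted is None:
        return False
    return float(predicted) == float(extract_reference_answer(solution))


# Usage with a made-up problem in the GSM8K answer format.
reference = "She has 16 - 3 - 4 = 9 eggs left.\n9 * 2 = 18\n#### 18"
print(is_correct("She makes 9 * 2 = 18 dollars. The answer is 18.", reference))
```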

license: apache-2.0


Model tree for monsterapi/zephyr-7b-alpha_metamathqa

Base model

mistralai/Mistral-7B-v0.1