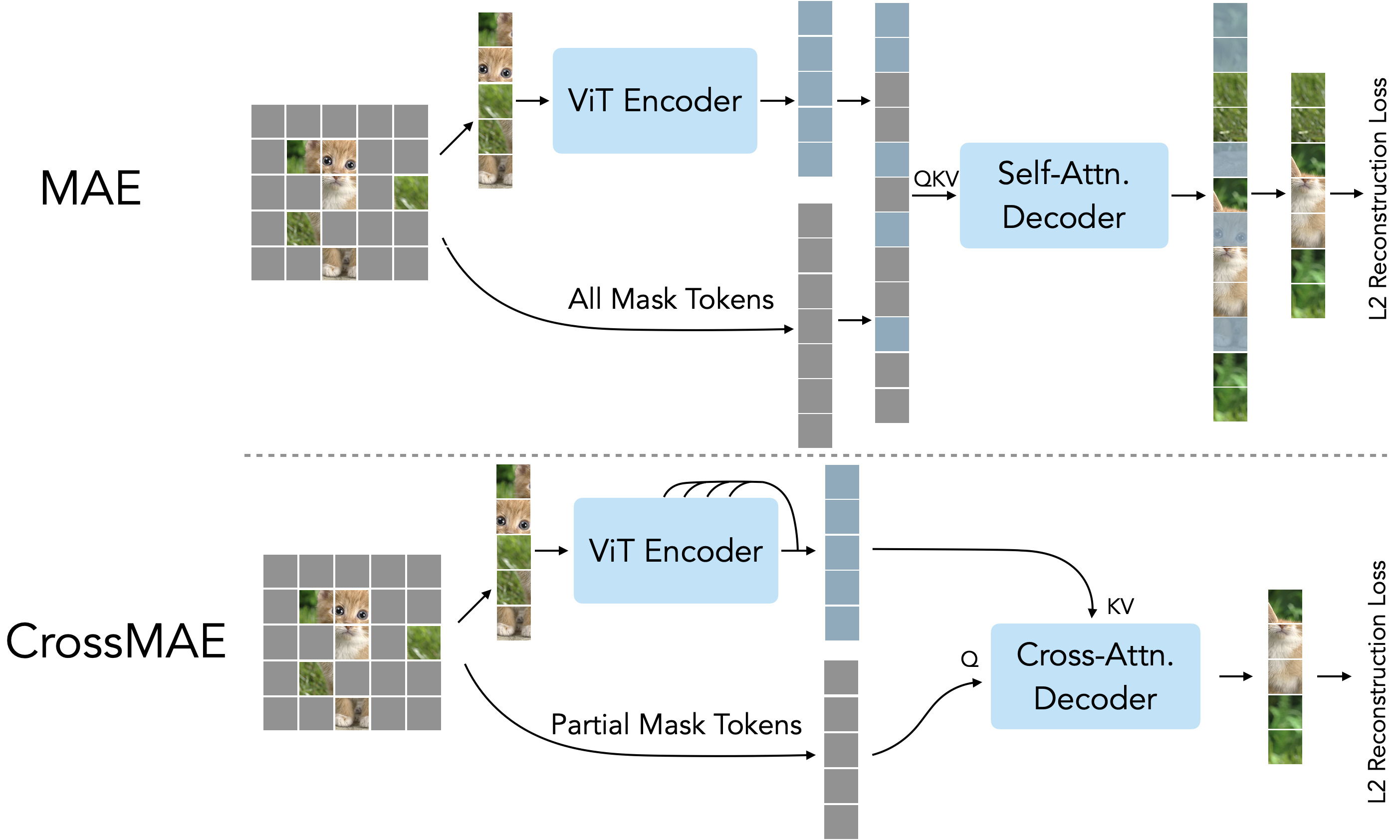

CrossMAE: Rethinking Patch Dependence for Masked Autoencoders

by Letian Fu*, Long Lian*, Renhao Wang, Baifeng Shi, Xudong Wang, Adam Yala†, Trevor Darrell†, Alexei A. Efros†, Ken Goldberg† at UC Berkeley and UCSF

[Paper] | [Project Page] | [Citation]

This repo hosts the pretrained and fine-tuned checkpoints for CrossMAE: Rethinking Patch Dependence for Masked Autoencoders.

See the GitHub repo for instructions on pretraining, fine-tuning, and evaluation with these models.

| | ViT-Small | ViT-Base | ViT-Base448 | ViT-Large | ViT-Huge |
|---|---|---|---|---|---|
| pretrained checkpoint | download | download | download | download | download |
| fine-tuned checkpoint | download | download | download | download | download |
| Reference ImageNet accuracy (ours) | 79.318 | 83.722 | 84.598 | 85.432 | 86.256 |

| MAE ImageNet accuracy (baseline) | 84.8 | 85.9 |

Citation

Please give us a star 🌟 on GitHub to support us!

If you find our work inspiring or use our code, please cite:

@article{fu2025rethinking,
  title={Rethinking Patch Dependence for Masked Autoencoders},
  author={Letian Fu and Long Lian and Renhao Wang and Baifeng Shi and XuDong Wang and Adam Yala and Trevor Darrell and Alexei A Efros and Ken Goldberg},
  journal={Transactions on Machine Learning Research},
  issn={2835-8856},
  year={2025},
  url={https://openreview.net/forum?id=JT2KMuo2BV},
  note={}
}
