File size: 4,923 Bytes

42fa14a 8f6475d 435cfc9 42fa14a 05605d8 42fa14a 8a45673 d46ec21 c3cf389 5828b76 79fd544 05605d8 79fd544 9c70605 05605d8 79fd544 5828b76 42fa14a 444c338 42fa14a a9fb1d4 42fa14a a9fb1d4 42fa14a d46ec21 020c806 9c70605 3aa8f64 42fa14a 020c806 a703759 42fa14a 1dc46a8 74a083d 8c20fb4 74a083d 42fa14a 1f6af64 42fa14a 1f6af64 42fa14a 1f6af64 42fa14a 8c20fb4 |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 |

---

datasets:

- imagenet-1k

library_name: timm

license: apache-2.0

pipeline_tag: image-classification

metrics:

- accuracy

tags:

- ResNet

- CNN

- PDE

---

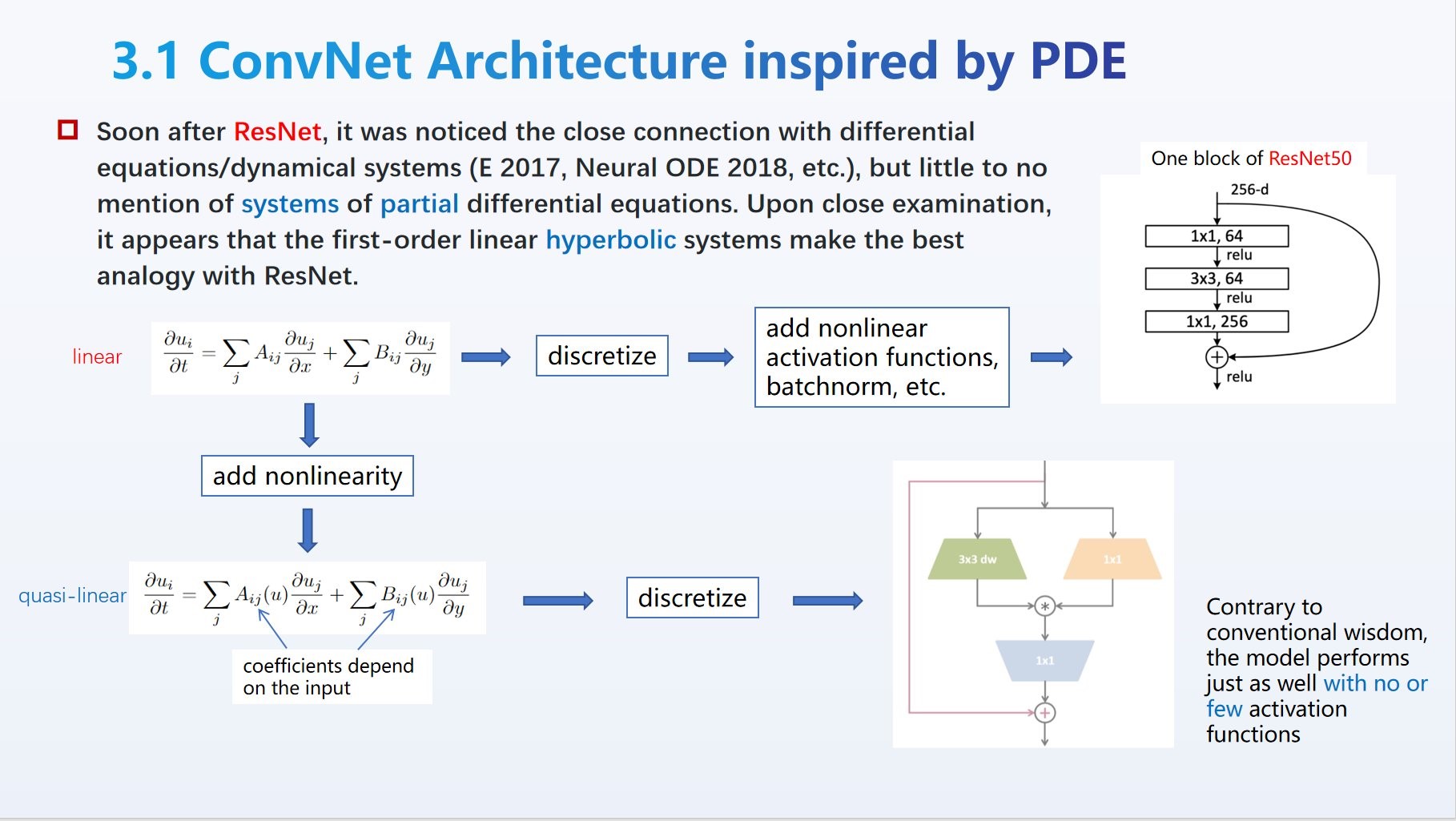

# Model Card for Model ID

Based on a class of partial differential equations called **quasi-linear hyperbolic systems** [[Liu et al, 2023](https://github.com/liuyao12/ConvNets-PDE-perspective)], the QLNet breaks into uncharted waters of ConvNet model space marked by the use of (element-wise) multiplication in lieu of ReLU as the primary nonlinearity. It achieves comparable performance as ResNet50 on ImageNet-1k (acc=**78.61**), demonstrating that it has the same level of capacity/expressivity, and deserves more analysis and study (hyper-paremeter tuning, optimizer, etc.) by the academic community.

One notable feature is that the architecture (trained or not) admits a *continuous* symmetry in its parameters. Check out the [notebook](https://colab.research.google.com/#fileId=https://huggingface.co/liuyao/QLNet/blob/main/QLNet_symmetry.ipynb) for a demo that makes a particular transformation on the weights while leaving the output *unchanged*.

FAQ (as the author imagines):

- Q: Who needs another ConvNet, when the SOTA for ImageNet-1k is now in the low 80s with models of comparable size?

- A: Aside from lack of resources to perform extensive experiments, the real answer is that the new symmetry has the potential to be exploited (e.g., symmetry-aware optimization). The non-activation nonlinearity does have more "naturalness" (coordinate independence) that is innate in many equations in mathematics and physics. Activation is but a legacy from the early days of models inspired by *biological* neural networks.

- Q: Multiplication is too simple, someone must have tried it?

- A: Perhaps. My bet is whoever tried it soon found the model fail to train with standard ReLU. Without the belief in the underlying PDE perspective, maybe it wasn't pushed to its limit.

- Q: Is it not similar to attention in Transformer?

- A: It is, indeed. It's natural to wonder if the activation functions in Transformer could be removed (or reduced) while still achieve comparable performance.

- Q: If the weight/parameter space has a symmetry (other than permutations), perhaps there's redundancy in the weights.

- A: The transformation in our demo indeed can be used to reduce the weights from the get-go. However, there are variants of the model that admit a much larger symmetry. It is also related to the phenomenon of "flat minima" found empirically in some conventional neural networks.

*This modelcard aims to be a base template for new models. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/modelcard_template.md?plain=1).*

## Model Details

### Model Description

Instead of the `bottleneck` block of ResNet50 which consists of 1x1, 3x3, 1x1 in succession, this simplest version of QLNet does a 1x1, splits into two equal halves and **multiplies** them, then applies a 3x3 (depthwise), and a 1x1, *all without activation functions* except at the end of the block, where a "radial" activation function that we call `hardball` is applied.

```python

class QLNet(nn.Module:

...

def forward(self, x):

x0 = self.skip(x)

x = self.conv1(x) # 1x1

C = x.size(1) // 2

x = x[:, :C, :, :] * x[:, C:, :, :]

x = self.conv2(x) # 3x3

x = self.conv3(x) # 1x1

x += x0

if self.act3 is not None:

x = self.act3(x)

return x

```

- **Developed by:** Yao Liu 刘杳

- **Model type:** Convolutional Neural Network (ConvNet)

- **License:** As academic work, it is free for all to use. It is a natural progression from the origianl ConvNet (of LeCun) and ResNet, with "depthwise" from MobileNet.

- **Finetuned from model:** N/A (*trained from scratch*)

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [ConvNet from the PDE perspective](https://github.com/liuyao12/ConvNets-PDE-perspective)

- **Paper:** [A Novel ConvNet Architecture with a Continuous Symmetry](https://arxiv.org/abs/2308.01621)

- **Demo:** [More Information Needed]

## How to Get Started with the Model

Use the code below to get started with the model.

```python

import torch, timm

from qlnet import QLNet

model = QLNet()

model.load_state_dict(torch.load('qlnet-10m.pth.tar'))

model.eval()

```

## Training Details

### Training and Testing Data

ImageNet-1k

[More Information Needed]

### Training Procedure

We use the training script in `timm`

```

python3 train.py ../datasets/imagenet/ --model resnet50 --num-classes 1000 --lr 0.1 --warmup-epochs 5 --epochs 240 --weight-decay 1e-4 --sched cosine --reprob 0.4 --recount 3 --remode pixel --aa rand-m7-mstd0.5-inc1 -b 192 -j 6 --amp --dist-bn reduce

```

### Results

qlnet-10m: acc=78.608 |