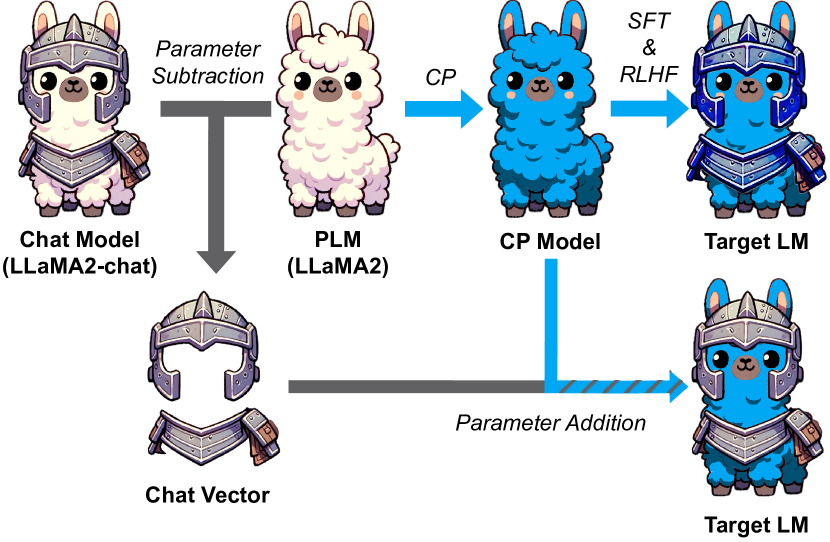

This model employs the technique described in "Chat Vector: A Simple Approach to Equip LLMs with Instruction Following and Model Alignment in New Languages".

It is based on stablelm-gamma-7b, a base model without instruction tuning that was itself built by continued pre-training of mistral-7b-v0.1.

The chat vector was extracted by subtracting the weights of mistral-7b-v0.1 from the weights of mistral-7b-instruct-v0.2, parameter by parameter.

Adding this chat vector to the weights of the non-instruction-tuned stablelm-gamma-7b gives it instruction-following behavior similar to what instruction tuning would produce.
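The weight arithmetic described above can be sketched as follows. This is a minimal illustration, not the script used to build this model: the function name `apply_chat_vector` and the `skip` parameter are hypothetical, and the toy example uses plain floats in place of tensors. With real models, the three mappings would be the results of `model.state_dict()` for the base, instruction-tuned, and target models.

```python
def apply_chat_vector(base, instruct, target, skip=()):
    """Return target + (instruct - base) for every shared parameter name.

    Each argument maps parameter names to values that support + and -
    (for example torch tensors from model.state_dict(), or plain floats).
    `skip` is a hypothetical option listing parameter names to leave untouched.
    """
    merged = {}
    for name, weight in target.items():
        if name in skip or name not in base or name not in instruct:
            merged[name] = weight  # no chat vector available or wanted: keep as-is
            continue
        chat_vector = instruct[name] - base[name]  # what instruction tuning changed
        merged[name] = weight + chat_vector        # transfer that change to the target
    return merged


# Toy example with scalar "weights" (assumed values, for illustration only).
base = {"layer.weight": 1.0, "layer.bias": 0.5}       # stands in for mistral-7b-v0.1
instruct = {"layer.weight": 1.25, "layer.bias": 0.0}  # stands in for mistral-7b-instruct-v0.2
target = {"layer.weight": 2.0, "layer.bias": 1.0}     # stands in for stablelm-gamma-7b

merged = apply_chat_vector(base, instruct, target)
print(merged)
```

Because the operation is element-wise, it only makes sense when all three models share the same architecture and parameter shapes.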

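```python
from transformers import AutoModelForCausalLM, AutoTokenizer

device = "cuda"  # the device to load the model onto

model = AutoModelForCausalLM.from_pretrained("kousw/stablelm-gamma-7b-chatvector")
tokenizer = AutoTokenizer.from_pretrained("kousw/stablelm-gamma-7b-chatvector")

# The prompts are in Japanese, the model's target language.
messages = [
    # "Please explain the meaning of the given proverb so that even an elementary school student can understand it."
    {"role": "user", "content": "与えられたことわざの意味を小学生でも分かるように教えてください。"},
    # "Sure, I will explain any proverb in an easy-to-understand way."
    {"role": "assistant", "content": "はい、どんなことわざでもわかりやすく答えます"},
    # The proverb to explain: "Kindness is not only for the benefit of others."
    {"role": "user", "content": "情けは人のためならず"},
]

# Format the conversation with the tokenizer's chat template and tokenize it.
encodeds = tokenizer.apply_chat_template(messages, return_tensors="pt")

# Move the inputs and the model to the same device.
model_inputs = encodeds.to(device)
model.to(device)

# Sample up to 256 new tokens, then decode the full sequence (prompt plus reply).
generated_ids = model.generate(model_inputs, max_new_tokens=256, do_sample=True)
decoded = tokenizer.batch_decode(generated_ids)
print(decoded[0])
```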
