Model description

This model demonstrates the Node2Vec technique on the small version of the MovieLens dataset to learn movie embeddings.

Node2Vec Technique

Node2Vec is a simple yet scalable and effective technique for learning low-dimensional embeddings for the nodes of a graph by optimising a neighbourhood-preserving objective. The aim is for nodes that are neighbours in the graph structure to receive similar embeddings. Given data items structured as a graph (items represented as nodes, relationships between items represented as edges), Node2Vec works as follows:

- Generate item sequences using (biased) random walks.

- Create positive and negative training examples from these sequences.

- Train a word2vec model (skip-gram) to learn embeddings for the items.
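The first two steps can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the code behind this model: the graph is assumed to be undirected and stored as a dict of weighted adjacency dicts, `p` and `q` are node2vec's return and in-out parameters, and the helper names (`next_step`, `random_walk`, `skipgram_pairs`) and the toy graph are hypothetical. Negatives are drawn uniformly from the vocabulary, which is one common choice rather than the only one.

```python
import random


def next_step(graph, prev, current, p, q, rng):
    """Pick the next node with node2vec's second-order (p, q) bias."""
    neighbours = list(graph[current])
    weights = []
    for nbr in neighbours:
        w = graph[current][nbr]
        if prev is None:
            weights.append(w)          # first step: plain edge-weighted choice
        elif nbr == prev:
            weights.append(w / p)      # return to the previous node
        elif nbr in graph[prev]:
            weights.append(w)          # stay at distance 1 from the previous node
        else:
            weights.append(w / q)      # move outward, away from the previous node
    return rng.choices(neighbours, weights=weights, k=1)[0]


def random_walk(graph, start, length, p, q, rng):
    """Generate one biased walk of up to `length` nodes starting at `start`."""
    walk, prev = [start], None
    while len(walk) < length and graph[walk[-1]]:
        current = walk[-1]
        walk.append(next_step(graph, prev, current, p, q, rng))
        prev = current
    return walk


def skipgram_pairs(walk, window, num_negative, vocab, rng):
    """Turn a walk into (target, context, label) triples.

    Label 1: the context appears within `window` positions of the target.
    Label 0: the context is sampled uniformly from `vocab` (it may occasionally
    be a true neighbour; this sketch does not filter those out).
    """
    examples = []
    for i, target in enumerate(walk):
        for j in range(max(0, i - window), min(len(walk), i + window + 1)):
            if j == i:
                continue
            examples.append((target, walk[j], 1))
            for _ in range(num_negative):
                examples.append((target, rng.choice(vocab), 0))
    return examples


# Hypothetical toy graph: node -> {neighbour: edge weight}.
graph = {
    "A": {"B": 1.0, "C": 2.0},
    "B": {"A": 1.0, "C": 1.0},
    "C": {"A": 2.0, "B": 1.0, "D": 1.0},
    "D": {"C": 1.0},
}
rng = random.Random(0)
walks = [random_walk(graph, node, 5, 1.0, 0.5, rng) for node in graph for _ in range(2)]
examples = [ex for w in walks for ex in skipgram_pairs(w, 2, 1, list(graph), rng)]
```

Setting `q` below 1 (as here) favours outward, DFS-like exploration, while `q` above 1 keeps walks closer to the start node; the third step then trains a skip-gram model on the `(target, context, label)` triples.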

This model applies the Node2Vec technique to the small version of the MovieLens dataset to learn movie embeddings. The dataset can be represented as a graph by treating movies as nodes and creating edges between movies that have similar ratings by the users. The learnt movie embeddings can be used for tasks such as movie recommendation or movie genre prediction.
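One way to turn ratings into such a movie graph is sketched below under an explicit assumption: the exact edge rule used for this model is not stated here, so this version connects two movies whenever the same user rated both at or above a hypothetical `min_rating` threshold, and weights each edge by how many users did so. The function name `build_movie_graph`, both thresholds, and the toy ratings are illustrative only.

```python
from collections import Counter, defaultdict
from itertools import combinations


def build_movie_graph(ratings, min_rating=4.0, min_count=1):
    """Build an undirected, weighted movie graph from (user, movie, rating) tuples.

    Assumed rule: two movies are linked when at least `min_count` users rated
    both of them at or above `min_rating`; the edge weight is that user count.
    """
    liked = defaultdict(set)  # user -> movies that user rated highly
    for user, movie, rating in ratings:
        if rating >= min_rating:
            liked[user].add(movie)

    pair_counts = Counter()  # (movie_a, movie_b) -> number of users who liked both
    for movies in liked.values():
        for a, b in combinations(sorted(movies), 2):
            pair_counts[(a, b)] += 1

    graph = defaultdict(dict)
    for (a, b), count in pair_counts.items():
        if count >= min_count:
            graph[a][b] = float(count)
            graph[b][a] = float(count)
    return dict(graph)


# Toy ratings (user_id, movie_id, rating); not real MovieLens data.
ratings = [
    (1, 10, 5.0), (1, 20, 4.5), (1, 30, 2.0),
    (2, 10, 4.0), (2, 20, 5.0), (2, 30, 4.0),
    (3, 30, 5.0), (3, 20, 1.0),
]
graph = build_movie_graph(ratings)
```

With these toy ratings, movies 10 and 20 are linked with weight 2.0 (two users liked both), and movie 30 is linked to each of them with weight 1.0. Movies that end up with no qualifying edge do not appear as nodes at all.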

For more information on Node2Vec, please refer to its host page

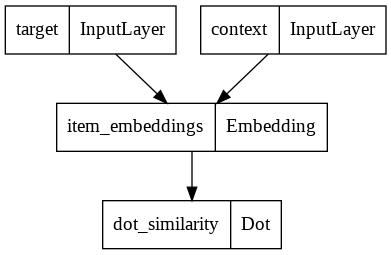

Skip-Gram Model

Our skip-gram is a simple binary classification model that works as follows:

- An embedding is looked up for the target movie.

- An embedding is looked up for the context movie.

- The dot product is computed between these two embeddings.

- The result (after a sigmoid activation) is compared to the label.

- A binary cross-entropy loss is used.
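The five steps above can be traced in a few lines of plain Python. This is a minimal sketch, not the Keras model this card describes: it assumes a single embedding table shared by target and context movies (separate tables are also common), uses hypothetical helper names (`init_embeddings`, `predict`, `bce`, `sgd_step`) and toy data, and adds a hand-written gradient step so the loss can be seen to fall. For a sigmoid followed by binary cross-entropy, the gradient of the loss with respect to the dot product is simply `prob - label`.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def init_embeddings(vocab, dim, rng):
    """One small random vector per item (shared by target and context lookups)."""
    return {item: [rng.uniform(-0.1, 0.1) for _ in range(dim)] for item in vocab}


def predict(emb, target, context):
    """Look up both embeddings, take their dot product, apply a sigmoid."""
    u, v = emb[target], emb[context]
    return sigmoid(sum(a * b for a, b in zip(u, v)))


def bce(label, prob, eps=1e-7):
    """Binary cross-entropy between a 0/1 label and a predicted probability."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def sgd_step(emb, target, context, label, lr):
    """One gradient-descent update on a single pair; returns the loss before it."""
    u, v = emb[target], emb[context]
    prob = predict(emb, target, context)
    g = prob - label  # d(loss)/d(dot product) for sigmoid + binary cross-entropy
    grad_u = [g * b for b in v]
    grad_v = [g * a for a in u]
    emb[target] = [a - lr * d for a, d in zip(u, grad_u)]
    # Read emb[context] again so a target == context pair receives both updates.
    emb[context] = [b - lr * d for b, d in zip(emb[context], grad_v)]
    return bce(label, prob)


rng = random.Random(42)
emb = init_embeddings(["A", "B", "C"], dim=8, rng=rng)
data = [("A", "B", 1), ("A", "C", 0)]  # toy (target, context, label) triples

before = sum(bce(y, predict(emb, t, c)) for t, c, y in data)
for _ in range(200):
    for t, c, y in data:
        sgd_step(emb, t, c, y, lr=0.5)
after = sum(bce(y, predict(emb, t, c)) for t, c, y in data)
```

After training, the positive pair `("A", "B")` scores above 0.5 and the negative pair `("A", "C")` below it. In a Keras version, the lookups would be an `Embedding` layer and the update would come from the optimizer rather than hand-derived gradients.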

Model Plot

Credits

Author: Khalid Salama.

Based on the Keras example "Graph representation learning with node2vec" by Khalid Salama.

Check out the demo space here
