Thesa: A Therapy Chatbot 👩🏻⚕️

Thesa is an experimental therapy chatbot. It is fine-tuned on mental health data from a GPTQ-quantized Zephyr model; the quantization reduces the otherwise high computational and storage costs.
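To give a rough sense of the storage saving from quantization, the sketch below compares the size of the weights at 16-bit and 4-bit precision. The parameter count (about 7 billion) and the 4-bit width are assumptions for illustration, not figures stated in this card. The estimate also ignores the per-group scales and zero-points that GPTQ stores alongside the weights.

```python
def weight_size_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: roughly 7 billion parameters, as the "7B" in the base model name suggests.
NUM_PARAMS = 7e9

fp16_gb = weight_size_gb(NUM_PARAMS, 16)  # half-precision weights
int4_gb = weight_size_gb(NUM_PARAMS, 4)   # assumed 4-bit quantized weights

print(f"fp16 weights:  {fp16_gb:.1f} GB")
print(f"4-bit weights: {int4_gb:.1f} GB")
```

Under these assumptions the quantized weights take about a quarter of the half-precision footprint.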

Model description

- Model type: fine-tuned from TheBloke/zephyr-7B-alpha-GPTQ on various mental health datasets

- Language(s): English

- License: MIT

Intended uses & limitations

This model is purely experimental and should not be used as a substitute for a mental health professional.

Training evaluation

Training loss:

Training procedure

Training hyperparameters

The following hyperparameters were used during training:

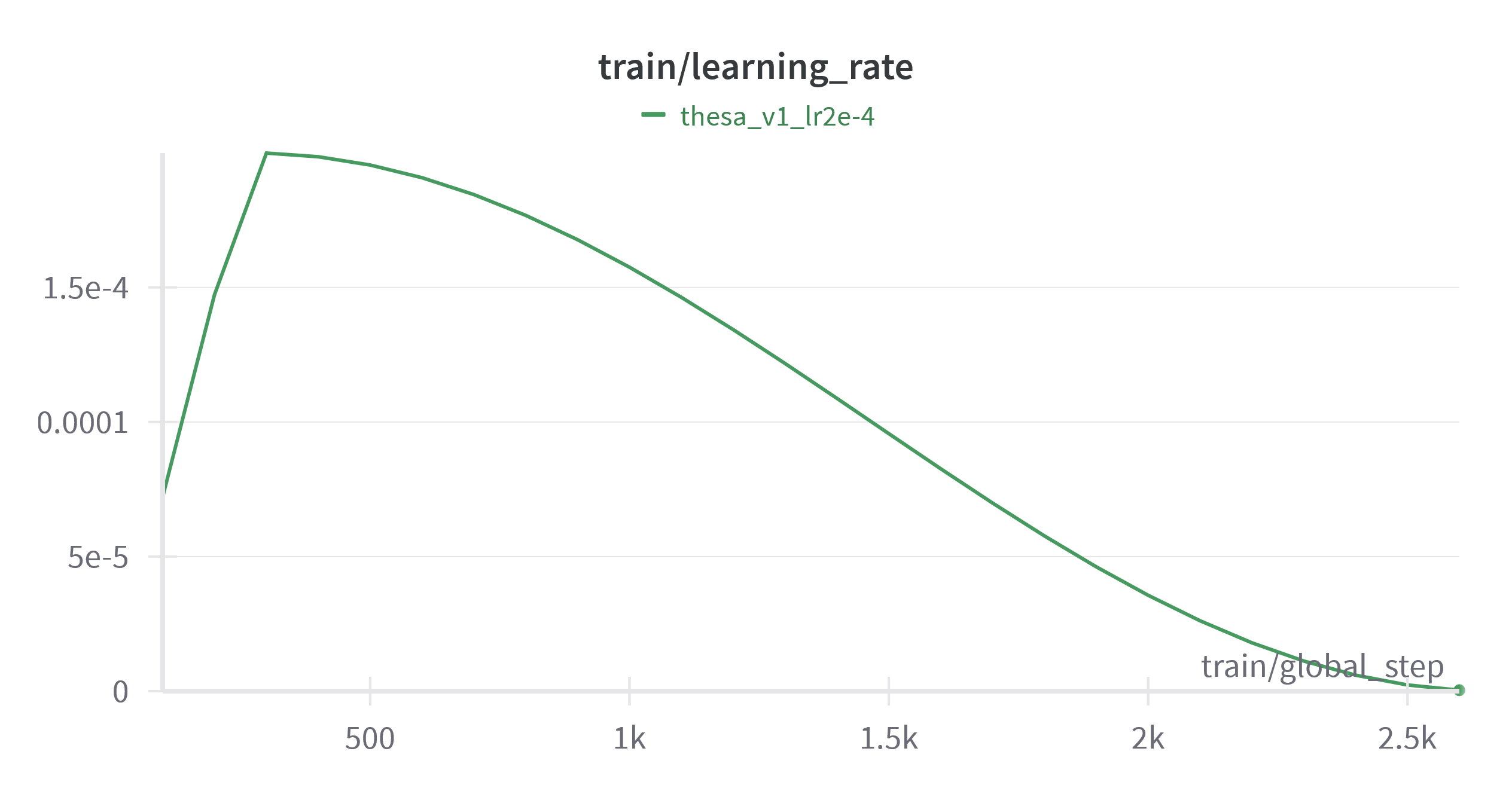

- learning_rate: 0.0002

- warmup_ratio: 0.1

- train_batch_size: 8

- eval_batch_size: 8

- gradient_accumulation_steps: 1

- seed: 35

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

- mixed_precision_training: Native AMP

- fp16: True
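To reproduce a similar setup, the values listed above can be collected as keyword arguments. This is a minimal sketch that assumes the run was configured through `transformers.TrainingArguments`; the card does not show the original training script. The mapping of `train_batch_size` and `eval_batch_size` to per-device arguments is likewise an assumption.

```python
# Hyperparameters from the list above, keyed by TrainingArguments argument names.
# Assumption: the listed batch sizes are per-device values.
training_kwargs = {
    "learning_rate": 2e-4,
    "warmup_ratio": 0.1,
    "per_device_train_batch_size": 8,
    "per_device_eval_batch_size": 8,
    "gradient_accumulation_steps": 1,
    "seed": 35,
    "adam_beta1": 0.9,
    "adam_beta2": 0.999,
    "adam_epsilon": 1e-8,
    "lr_scheduler_type": "cosine",
    "num_train_epochs": 10,
    "fp16": True,
}

# Samples contributing to each optimizer step on a single device.
effective_batch_size = (
    training_kwargs["per_device_train_batch_size"]
    * training_kwargs["gradient_accumulation_steps"]
)
print("effective batch size per device:", effective_batch_size)
```

With Transformers installed, the dictionary could be passed as `TrainingArguments(output_dir="out", **training_kwargs)`, where `"out"` is a hypothetical output path.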

Learning rate over time (a warm-up ratio was used during training):
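The shape of this curve can be approximated with a short function. It is a sketch of a linear warm-up followed by cosine decay, using the listed `learning_rate` and `warmup_ratio`. The total number of optimizer steps is not given in the card, so `TOTAL_STEPS` below is a hypothetical value chosen only for illustration.

```python
import math


def lr_at_step(step: int, total_steps: int,
               base_lr: float = 2e-4, warmup_ratio: float = 0.1) -> float:
    """Learning rate at a given step: linear warm-up, then cosine decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr over the warm-up phase.
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the post-warm-up phase that has elapsed, in [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    # Half a cosine period: base_lr at progress 0, zero at progress 1.
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


TOTAL_STEPS = 1000  # hypothetical; the real step count is not stated in the card

for step in (0, 50, 100, 550, 1000):
    print(f"step {step:4d}: lr = {lr_at_step(step, TOTAL_STEPS):.6f}")
```

The rate peaks at the end of warm-up (step 100 with these values) and then decays smoothly to zero at the final step.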

Framework versions

- Transformers 4.35.2

- PyTorch 2.1.0+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

- Accelerate 0.27.2

- PEFT 0.8.2

- Auto-GPTQ 0.6.0

- TRL 0.7.11

- Optimum 1.17.1

- Bitsandbytes 0.42.0
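To check whether a local environment matches the versions listed above, a small script such as the following could be used. It is a sketch only. The distribution names (for example `torch` for PyTorch and `auto-gptq` for Auto-GPTQ) are assumed to be the names used on PyPI, and `find_version_mismatches` is a hypothetical helper, not part of any library.

```python
from importlib import metadata

# Versions listed in this card, keyed by assumed PyPI distribution names.
EXPECTED_VERSIONS = {
    "transformers": "4.35.2",
    "torch": "2.1.0",
    "datasets": "2.16.1",
    "tokenizers": "0.15.1",
    "accelerate": "0.27.2",
    "peft": "0.8.2",
    "auto-gptq": "0.6.0",
    "trl": "0.7.11",
    "optimum": "1.17.1",
    "bitsandbytes": "0.42.0",
}


def find_version_mismatches(expected: dict) -> dict:
    """Return {package: (wanted, installed)} for missing or differing packages."""
    mismatches = {}
    for package, wanted in expected.items():
        try:
            installed = metadata.version(package)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed at all
        # Local build tags such as "+cu121" are ignored in the comparison.
        if installed is None or installed.split("+")[0] != wanted:
            mismatches[package] = (wanted, installed)
    return mismatches


for package, (wanted, installed) in find_version_mismatches(EXPECTED_VERSIONS).items():
    print(f"{package}: expected {wanted}, found {installed}")
```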


Model tree for johnhandleyd/thesa

- Base model: mistralai/Mistral-7B-v0.1

- Finetuned: HuggingFaceH4/zephyr-7b-alpha

- Quantized: TheBloke/zephyr-7B-alpha-GPTQ