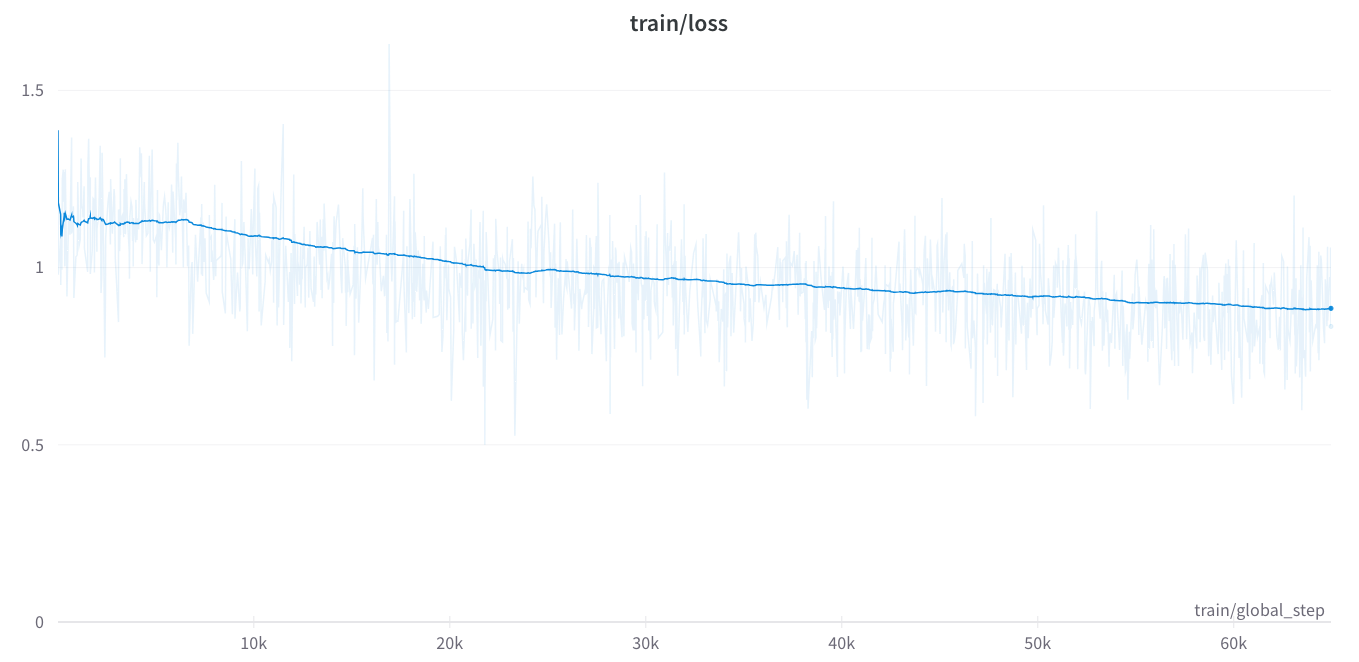

This GPT-J 6B model was finetuned on GPT-4 generations of the Alpaca prompts using MonsterAPI's no-code LLM finetuner. Training used LoRA for ~65,000 steps and was auto-optimised to run on a single A6000 GPU with no out-of-memory issues. I did not need to write any code or set up a GPU server with libraries to run this experiment; the finetuner handles all of that by itself.

Documentation on no-code LLM finetuner: https://docs.monsterapi.ai/fine-tune-a-large-language-model-llm
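
Since the model was trained on Alpaca prompts, inference prompts probably work best in the same layout. The sketch below builds a prompt using the template published with the original Stanford Alpaca project. This card does not confirm the exact template used during finetuning, so treat the wording as an assumption; the function name `build_alpaca_prompt` is hypothetical.

```python
from typing import Optional

# Assumed template: the one from the Stanford Alpaca project.
# The model card does not state which template the finetuner applied.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)


def build_alpaca_prompt(instruction: str, input_text: Optional[str] = None) -> str:
    """Format an instruction (and optional context) in Alpaca style."""
    if input_text:
        return PROMPT_WITH_INPUT.format(instruction=instruction, input=input_text)
    return PROMPT_NO_INPUT.format(instruction=instruction)


if __name__ == "__main__":
    print(build_alpaca_prompt("Summarize the text.", "LoRA trains small adapter matrices."))
```

The resulting string can be tokenized and passed to the model's generation call; the generated text after `### Response:` is the answer.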

license: apache-2.0
