text

stringlengths 2.5k

6.39M

| kind

stringclasses 3

values |

|---|---|

# SiteAlign features

We read the SiteAlign features from the respective [paper](https://onlinelibrary.wiley.com/doi/full/10.1002/prot.21858) and [SI table](https://onlinelibrary.wiley.com/action/downloadSupplement?doi=10.1002%2Fprot.21858&file=prot21858-SupplementaryTable.pdf) to verify `kissim`'s implementation of the SiteAlign definitions:

```

from kissim.definitions import SITEALIGN_FEATURES

SITEALIGN_FEATURES

```

## Size

SiteAlign's size definitions:

> Natural amino acids have been classified into three groups according to the number of heavy atoms (<4 heavy atoms: Ala, Cys, Gly, Pro, Ser, Thr, Val; 4–6 heavy atoms: Asn, Asp, Gln, Glu, His, Ile, Leu, Lys, Met; >6 heavy atoms: Arg, Phe, Trp, Tyr) and three values (“1,” “2,” “3”) are outputted according to the group to which the current residues belong to (Table I)

https://onlinelibrary.wiley.com/doi/full/10.1002/prot.21858

### Parse text from SiteAlign paper

```

size = {

1.0: "Ala, Cys, Gly, Pro, Ser, Thr, Val".split(", "),

2.0: "Asn, Asp, Gln, Glu, His, Ile, Leu, Lys, Met".split(", "),

3.0: "Arg, Phe, Trp, Tyr".split(", "),

}

```

### `kissim` definitions correct?

```

import pandas as pd

from IPython.display import display, HTML

# Format SiteAlign data

size_list = []

for value, amino_acids in size.items():

values = [(amino_acid.upper(), value) for amino_acid in amino_acids]

size_list = size_list + values

size_series = (

pd.DataFrame(size_list, columns=["amino_acid", "size"])

.sort_values("amino_acid")

.set_index("amino_acid")

.squeeze()

)

# KiSSim implementation of SiteAlign features correct?

diff = size_series == SITEALIGN_FEATURES["size"]

if not diff.all():

raise ValueError(

f"KiSSim implementation of SiteAlign features is incorrect!!!\n"

f"{display(HTML(diff.to_html()))}"

)

else:

print("KiSSim implementation of SiteAlign features is correct :)")

```

## HBA, HBD, charge, aromatic, aliphatic

### Parse table from SiteAlign SI

```

sitealign_table = """

Ala 0 0 0 1 0

Arg 3 0 +1 0 0

Asn 1 1 0 0 0

Asp 0 2 -1 0 0

Cys 1 0 0 1 0

Gly 0 0 0 0 0

Gln 1 1 0 0 0

Glu 0 2 -1 0 0

His/Hid/Hie 1 1 0 0 1

Hip 2 0 1 0 0

Ile 0 0 0 1 0

Leu 0 0 0 1 0

Lys 1 0 +1 0 0

Met 0 0 0 1 0

Phe 0 0 0 0 1

Pro 0 0 0 1 0

Ser 1 1 0 0 0

Thr 1 1 0 1 0

Trp 1 0 0 0 1

Tyr 1 1 0 0 1

Val 0 0 0 1 0

"""

sitealign_table = [i.split() for i in sitealign_table.split("\n")[1:-1]]

sitealign_dict = {i[0]: i[1:] for i in sitealign_table}

sitealign_df = pd.DataFrame.from_dict(sitealign_dict).transpose()

sitealign_df.columns = ["hbd", "hba", "charge", "aliphatic", "aromatic"]

sitealign_df = sitealign_df[["hbd", "hba", "charge", "aromatic", "aliphatic"]]

sitealign_df = sitealign_df.rename(index={"His/Hid/Hie": "His"})

sitealign_df = sitealign_df.drop("Hip", axis=0)

sitealign_df = sitealign_df.astype("float")

sitealign_df.index = [i.upper() for i in sitealign_df.index]

sitealign_df = sitealign_df.sort_index()

sitealign_df

```

### `kissim` definitions correct?

```

from IPython.display import display, HTML

diff = sitealign_df == SITEALIGN_FEATURES.drop("size", axis=1).sort_index()

if not diff.all().all():

raise ValueError(

f"KiSSim implementation of SiteAlign features is incorrect!!!\n"

f"{display(HTML(diff.to_html()))}"

)

else:

print("KiSSim implementation of SiteAlign features is correct :)")

```

## Table style

```

from Bio.Data.IUPACData import protein_letters_3to1

for feature_name in SITEALIGN_FEATURES.columns:

print(feature_name)

for name, group in SITEALIGN_FEATURES.groupby(feature_name):

amino_acids = {protein_letters_3to1[i.capitalize()] for i in group.index}

amino_acids = sorted(amino_acids)

print(f"{name:<7}{' '.join(amino_acids)}")

print()

```

|

github_jupyter

|

```

#@markdown ■■■■■■■■■■■■■■■■■■

#@markdown 初始化openpose

#@markdown ■■■■■■■■■■■■■■■■■■

#设置版本为1.x

%tensorflow_version 1.x

import tensorflow as tf

tf.__version__

! nvcc --version

! nvidia-smi

! pip install PyQt5

import time

init_start_time = time.time()

#安装 cmake

#https://drive.google.com/file/d/1lAXs5X7qMnKQE48I0JqSob4FX1t6-mED/view?usp=sharing

file_id = "1lAXs5X7qMnKQE48I0JqSob4FX1t6-mED"

file_name = "cmake-3.13.4.zip"

! cd ./ && curl -sc ./cookie "https://drive.google.com/uc?export=download&id=$file_id" > /dev/null

code = "$(awk '/_warning_/ {print $NF}' ./cookie)"

! cd ./ && curl -Lb ./cookie "https://drive.google.com/uc?export=download&confirm=$code&id=$file_id" -o "$file_name"

! cd ./ && unzip cmake-3.13.4.zip

! cd cmake-3.13.4 && ./configure && make && sudo make install

# 依赖库安装

! sudo apt install caffe-cuda

! sudo apt-get --assume-yes update

! sudo apt-get --assume-yes install build-essential

# OpenCV

! sudo apt-get --assume-yes install libopencv-dev

# General dependencies

! sudo apt-get --assume-yes install libatlas-base-dev libprotobuf-dev libleveldb-dev libsnappy-dev libhdf5-serial-dev protobuf-compiler

! sudo apt-get --assume-yes install --no-install-recommends libboost-all-dev

# Remaining dependencies, 14.04

! sudo apt-get --assume-yes install libgflags-dev libgoogle-glog-dev liblmdb-dev

# Python3 libs

! sudo apt-get --assume-yes install python3-setuptools python3-dev build-essential

! sudo apt-get --assume-yes install python3-pip

! sudo -H pip3 install --upgrade numpy protobuf opencv-python

# OpenCL Generic

! sudo apt-get --assume-yes install opencl-headers ocl-icd-opencl-dev

! sudo apt-get --assume-yes install libviennacl-dev

# Openpose安装

ver_openpose = "v1.6.0"

# Openpose の clone

! git clone --depth 1 -b "$ver_openpose" https://github.com/CMU-Perceptual-Computing-Lab/openpose.git

# ! git clone --depth 1 https://github.com/CMU-Perceptual-Computing-Lab/openpose.git

# Openpose の モデルデータDL

! cd openpose/models && ./getModels.sh

#编译Openpose

! cd openpose && rm -r build || true && mkdir build && cd build && cmake .. && make -j`nproc` # example demo usage

# 执行示例确认

! cd /content/openpose && ./build/examples/openpose/openpose.bin --video examples/media/video.avi --write_json ./output/ --display 0 --write_video ./output/openpose.avi

#@markdown ■■■■■■■■■■■■■■■■■■

#@markdown 其他软件初始化

#@markdown ■■■■■■■■■■■■■■■■■■

ver_tag = "ver1.02.01"

# FCRN-DepthPrediction-vmd clone

! git clone --depth 1 -b "$ver_tag" https://github.com/miu200521358/FCRN-DepthPrediction-vmd.git

# FCRN-DepthPrediction-vmd 识别深度模型下载

# 建立模型数据文件夹

! mkdir -p ./FCRN-DepthPrediction-vmd/tensorflow/data

# 下载模型数据并解压

! cd ./FCRN-DepthPrediction-vmd/tensorflow/data && wget -c "http://campar.in.tum.de/files/rupprecht/depthpred/NYU_FCRN-checkpoint.zip" && unzip NYU_FCRN-checkpoint.zip

# 3d-pose-baseline-vmd clone

! git clone --depth 1 -b "$ver_tag" https://github.com/miu200521358/3d-pose-baseline-vmd.git

# 3d-pose-baseline-vmd Human3.6M 模型数据DL

# 建立Human3.6M模型数据文件夹

! mkdir -p ./3d-pose-baseline-vmd/data/h36m

# 下载Human3.6M模型数据并解压

file_id = "1W5WoWpCcJvGm4CHoUhfIB0dgXBDCEHHq"

file_name = "h36m.zip"

! cd ./ && curl -sc ./cookie "https://drive.google.com/uc?export=download&id=$file_id" > /dev/null

code = "$(awk '/_warning_/ {print $NF}' ./cookie)"

! cd ./ && curl -Lb ./cookie "https://drive.google.com/uc?export=download&confirm=$code&id=$file_id" -o "$file_name"

! cd ./ && unzip h36m.zip

! mv ./h36m ./3d-pose-baseline-vmd/data/

# 3d-pose-baseline-vmd 训练数据

# 3d-pose-baseline学习数据文件夹

! mkdir -p ./3d-pose-baseline-vmd/experiments

# 下载3d-pose-baseline训练后的数据

file_id = "1v7ccpms3ZR8ExWWwVfcSpjMsGscDYH7_"

file_name = "experiments.zip"

! cd ./3d-pose-baseline-vmd && curl -sc ./cookie "https://drive.google.com/uc?export=download&id=$file_id" > /dev/null

code = "$(awk '/_warning_/ {print $NF}' ./cookie)"

! cd ./3d-pose-baseline-vmd && curl -Lb ./cookie "https://drive.google.com/uc?export=download&confirm=$code&id=$file_id" -o "$file_name"

! cd ./3d-pose-baseline-vmd && unzip experiments.zip

# VMD-3d-pose-baseline-multi clone

! git clone --depth 1 -b "$ver_tag" https://github.com/miu200521358/VMD-3d-pose-baseline-multi.git

# 安装VMD-3d-pose-baseline-multi 依赖库

! sudo apt-get install python3-pyqt5

! sudo apt-get install pyqt5-dev-tools

! sudo apt-get install qttools5-dev-tools

#安装编码器

! sudo apt-get install mkvtoolnix

init_elapsed_time = (time.time() - init_start_time) / 60

! echo "■■■■■■■■■■■■■■■■■■■■■■■■"

! echo "■■所有初始化均已完成"

! echo "■■"

! echo "■■处理时间:" "$init_elapsed_time" "分"

! echo "■■■■■■■■■■■■■■■■■■■■■■■■"

! echo "Openpose执行结果"

! ls -l /content/openpose/output

#@markdown ■■■■■■■■■■■■■■■■■■

#@markdown 执行函数初始化

#@markdown ■■■■■■■■■■■■■■■■■■

import os

import cv2

import datetime

import time

import datetime

import cv2

import shutil

import glob

from google.colab import files

static_number_people_max = 1

static_frame_first = 0

static_end_frame_no = -1

static_reverse_specific = ""

static_order_specific = ""

static_born_model_csv = "born/animasa_miku_born.csv"

static_is_ik = 1

static_heel_position = 0.0

static_center_z_scale = 1

static_smooth_times = 1

static_threshold_pos = 0.5

static_threshold_rot = 3

static_src_input_video = ""

static_input_video = ""

#执行文件夹

openpose_path = "/content/openpose"

#输出文件夹

base_path = "/content/output"

output_json = "/content/output/json"

output_openpose_avi = "/content/output/openpose.avi"

now_str = ""

depth_dir_path = ""

drive_dir_path = ""

def video_hander( input_video):

global base_path

print("视频名称: ", os.path.basename(input_video))

print("视频大小: ", os.path.getsize(input_video))

video = cv2.VideoCapture(input_video)

# 宽

W = video.get(cv2.CAP_PROP_FRAME_WIDTH)

# 高

H = video.get(cv2.CAP_PROP_FRAME_HEIGHT)

# 总帧数

count = video.get(cv2.CAP_PROP_FRAME_COUNT)

# fps

fps = video.get(cv2.CAP_PROP_FPS)

print("宽: {0}, 高: {1}, 总帧数: {2}, fps: {3}".format(W, H, count, fps))

width = 1280

height = 720

if W != 1280 or (fps != 30 and fps != 60):

print("重新编码,因为大小或fps不在范围: "+ input_video)

# 縮尺

scale = width / W

# 高さ

height = int(H * scale)

# 出力ファイルパス

out_name = 'recode_{0}.mp4'.format("{0:%Y%m%d_%H%M%S}".format(datetime.datetime.now()))

out_path = '{0}/{1}'.format(base_path, out_name)

# try:

# fourcc = cv2.VideoWriter_fourcc(*"MP4V")

# out = cv2.VideoWriter(out_path, fourcc, 30.0, (width, height), True)

# # 入力ファイル

# cap = cv2.VideoCapture(input_video)

# while(cap.isOpened()):

# # 動画から1枚キャプチャして読み込む

# flag, frame = cap.read() # Capture frame-by-frame

# # 動画が終わっていたら終了

# if flag == False:

# break

# # 縮小

# output_frame = cv2.resize(frame, (width, height))

# # 出力

# out.write(output_frame)

# # 終わったら開放

# out.release()

# except Exception as e:

# print("重新编码失败", e)

# cap.release()

# cv2.destroyAllWindows()

# ! mkvmerge --default-duration 0:30fps --fix-bitstream-timing-information 0 "$input_video" -o temp-video.mkv

# ! ffmpeg -i temp-video.mkv -c:v copy side_video.mkv

# ! ffmpeg -i side_video.mkv -vf scale=1280:720 "$out_path"

! ffmpeg -i "$input_video" -qscale 0 -r 30 -y -vf scale=1280:720 "$out_path"

print('MMD重新生成MP4文件成功', out_path)

input_video_name = out_name

# 入力動画ファイル再設定

input_video = base_path + "/"+ input_video_name

video = cv2.VideoCapture(input_video)

# 幅

W = video.get(cv2.CAP_PROP_FRAME_WIDTH)

# 高さ

H = video.get(cv2.CAP_PROP_FRAME_HEIGHT)

# 総フレーム数

count = video.get(cv2.CAP_PROP_FRAME_COUNT)

# fps

fps = video.get(cv2.CAP_PROP_FPS)

print("【重新生成】宽: {0}, 高: {1}, 总帧数: {2}, fps: {3}, 名字: {4}".format(W, H, count, fps,input_video_name))

return input_video

def run_openpose(input_video,number_people_max,frame_first):

#建立临时文件夹

! mkdir -p "$output_json"

#开始执行

! cd "$openpose_path" && ./build/examples/openpose/openpose.bin --video "$input_video" --display 0 --model_pose COCO --write_json "$output_json" --write_video "$output_openpose_avi" --frame_first "$frame_first" --number_people_max "$number_people_max"

def run_fcrn_depth(input_video,end_frame_no,reverse_specific,order_specific):

global now_str,depth_dir_path,drive_dir_path

now_str = "{0:%Y%m%d_%H%M%S}".format(datetime.datetime.now())

! cd FCRN-DepthPrediction-vmd && python tensorflow/predict_video.py --model_path tensorflow/data/NYU_FCRN.ckpt --video_path "$input_video" --json_path "$output_json" --interval 10 --reverse_specific "$reverse_specific" --order_specific "$order_specific" --verbose 1 --now "$now_str" --avi_output "yes" --number_people_max "$number_people_max" --end_frame_no "$end_frame_no"

# 深度結果コピー

depth_dir_path = output_json + "_" + now_str + "_depth"

drive_dir_path = base_path + "/" + now_str

! mkdir -p "$drive_dir_path"

if os.path.exists( depth_dir_path + "/error.txt"):

# 发生错误

! cp "$depth_dir_path"/error.txt "$drive_dir_path"

! echo "■■■■■■■■■■■■■■■■■■■■■■■■"

! echo "■■由于发生错误,处理被中断。"

! echo "■■"

! echo "■■■■■■■■■■■■■■■■■■■■■■■■"

! echo "$drive_dir_path" "请检查 error.txt 的内容。"

else:

! cp "$depth_dir_path"/*.avi "$drive_dir_path"

! cp "$depth_dir_path"/message.log "$drive_dir_path"

! cp "$depth_dir_path"/reverse_specific.txt "$drive_dir_path"

! cp "$depth_dir_path"/order_specific.txt "$drive_dir_path"

for i in range(1, number_people_max+1):

! echo ------------------------------------------

! echo 3d-pose-baseline-vmd ["$i"]

! echo ------------------------------------------

target_name = "_" + now_str + "_idx0" + str(i)

target_dir = output_json + target_name

!cd ./3d-pose-baseline-vmd && python src/openpose_3dpose_sandbox_vmd.py --camera_frame --residual --batch_norm --dropout 0.5 --max_norm --evaluateActionWise --use_sh --epochs 200 --load 4874200 --gif_fps 30 --verbose 1 --openpose "$target_dir" --person_idx 1

def run_3d_to_vmd(number_people_max,born_model_csv,is_ik,heel_position,center_z_scale,smooth_times,threshold_pos,threshold_rot):

global now_str,depth_dir_path,drive_dir_path

for i in range(1, number_people_max+1):

target_name = "_" + now_str + "_idx0" + str(i)

target_dir = output_json + target_name

for f in glob.glob(target_dir +"/*.vmd"):

! rm "$f"

! cd ./VMD-3d-pose-baseline-multi && python applications/pos2vmd_multi.py -v 2 -t "$target_dir" -b "$born_model_csv" -c 30 -z "$center_z_scale" -s "$smooth_times" -p "$threshold_pos" -r "$threshold_rot" -k "$is_ik" -e "$heel_position"

# INDEX別結果コピー

idx_dir_path = drive_dir_path + "/idx0" + str(i)

! mkdir -p "$idx_dir_path"

# 日本語対策でpythonコピー

for f in glob.glob(target_dir +"/*.vmd"):

shutil.copy(f, idx_dir_path)

print(f)

files.download(f)

! cp "$target_dir"/pos.txt "$idx_dir_path"

! cp "$target_dir"/start_frame.txt "$idx_dir_path"

def run_mmd(input_video,number_people_max,frame_first,end_frame_no,reverse_specific,order_specific,born_model_csv,is_ik,heel_position,center_z_scale,smooth_times,threshold_pos,threshold_rot):

global static_input_video,static_number_people_max ,static_frame_first ,static_end_frame_no,static_reverse_specific ,static_order_specific,static_born_model_csv

global static_is_ik,static_heel_position ,static_center_z_scale ,static_smooth_times ,static_threshold_pos ,static_threshold_rot

global base_path,static_src_input_video

start_time = time.time()

video_check= False

openpose_check = False

Fcrn_depth_check = False

pose_to_vmd_check = False

#源文件对比

if static_src_input_video != input_video:

video_check = True

openpose_check = True

Fcrn_depth_check = True

pose_to_vmd_check = True

if (static_number_people_max != number_people_max) or (static_frame_first != frame_first):

openpose_check = True

Fcrn_depth_check = True

pose_to_vmd_check = True

if (static_end_frame_no != end_frame_no) or (static_reverse_specific != reverse_specific) or (static_order_specific != order_specific):

Fcrn_depth_check = True

pose_to_vmd_check = True

if (static_born_model_csv != born_model_csv) or (static_is_ik != is_ik) or (static_heel_position != heel_position) or (static_center_z_scale != center_z_scale) or \

(static_smooth_times != smooth_times) or (static_threshold_pos != threshold_pos) or (static_threshold_rot != threshold_rot):

pose_to_vmd_check = True

#因为视频源文件重置,所以如果无修改需要重命名文件

if video_check:

! rm -rf "$base_path"

! mkdir -p "$base_path"

static_src_input_video = input_video

input_video = video_hander(input_video)

static_input_video = input_video

else:

input_video = static_input_video

if openpose_check:

run_openpose(input_video,number_people_max,frame_first)

static_number_people_max = number_people_max

static_frame_first = frame_first

if Fcrn_depth_check:

run_fcrn_depth(input_video,end_frame_no,reverse_specific,order_specific)

static_end_frame_no = end_frame_no

static_reverse_specific = reverse_specific

static_order_specific = order_specific

if pose_to_vmd_check:

run_3d_to_vmd(number_people_max,born_model_csv,is_ik,heel_position,center_z_scale,smooth_times,threshold_pos,threshold_rot)

static_born_model_csv = born_model_csv

static_is_ik = is_ik

static_heel_position = heel_position

static_center_z_scale = center_z_scale

static_smooth_times = smooth_times

static_threshold_pos = threshold_pos

static_threshold_rot = threshold_rot

elapsed_time = (time.time() - start_time) / 60

print( "■■■■■■■■■■■■■■■■■■■■■■■■")

print( "■■所有处理完成")

print( "■■")

print( "■■处理時間:" + str(elapsed_time)+ "分")

print( "■■■■■■■■■■■■■■■■■■■■■■■■")

print( "")

print( "MMD自动跟踪执行结果")

print( base_path)

! ls -l "$base_path"

#@markdown ■■■■■■■■■■■■■■■■■■

#@markdown GO GO GO GO 执行本单元格,上传视频

#@markdown ■■■■■■■■■■■■■■■■■■

from google.colab import files

#@markdown ---

#@markdown ### 输入视频名称

#@markdown 可以选择手动拖入视频到文件中(比较快),然后输入视频文件名,或者直接运行,不输入文件名直接本地上传

input_video = "" #@param {type: "string"}

if input_video == "":

uploaded = files.upload()

for fn in uploaded.keys():

print('User uploaded file "{name}" with length {length} bytes'.format(

name=fn, length=len(uploaded[fn])))

input_video = fn

input_video = "/content/" + input_video

print("本次执行的转化视频文件名为: "+input_video)

#@markdown 输入用于跟踪图像的参数并执行单元。

#@markdown ---

#@markdown ### 【O】视频中的最大人数

#@markdown 请输入您希望从视频中获得的人数。

#@markdown 请与视频中人数尽量保持一致

number_people_max = 1#@param {type: "number"}

#@markdown ---

#@markdown ### 【O】要从第几帧开始分析

#@markdown 输入帧号以开始分析。(从0开始)

#@markdown 请指定在视频中显示所有人的第一帧,默认为0即可,除非你需要跳过某些片段(例如片头)。

frame_first = 0 #@param {type: "number"}

#@markdown ---

#@markdown ### 【F】要从第几帧结束

#@markdown 请输入要从哪一帧结束

#@markdown (从0开始)在“FCRN-DepthPrediction-vmd”中调整反向或顺序时,可以完成过程并查看结果,默认为-1 表示执行到最后

end_frame_no = -1 #@param {type: "number"}

#@markdown ---

#@markdown ### 【F】反转数据表

#@markdown 指定由Openpose反转的帧号(从0开始),人员INDEX顺序和反转的内容。

#@markdown 按照Openpose在 0F 识别的顺序,将INDEX分配为0,1,...。

#@markdown 格式: [{帧号}: 用于指定反转的人INDEX, {反转内容}]

#@markdown {反转内容}: R: 整体身体反转, U:上半身反转, L: 下半身反转, N: 无反转

#@markdown 例如:[10:1,R] 整个人在第10帧中反转第一个人。在message.log中会记录以上述格式输出内容

#@markdown 因此请参考与[10:1,R][30:0,U],中一样,可以在括号中指定多个项目 ps(不要带有中文标点符号))

reverse_specific = "" #@param {type: "string"}

#@markdown ---

#@markdown ### 【F】输出颜色(仅参考,如果多人时,某个人序号跟别人交换或者错误,可以用此项修改)

#@markdown 请在多人轨迹中的交点之后指定人索引顺序。如果要跟踪一个人,可以将其留为空白。

#@markdown 按照Openpose在0F时识别的顺序分配0、1和INDEX。格式:[<帧号>:第几个人的索引,第几个人的索引,…]示例)[10:1,0]…第帧10是从左数第1人按第0个人的顺序对其进行排序。

#@markdown message.log包含以上述格式输出的顺序,因此请参考它。可以在括号中指定多个项目,例如[10:1,0] [30:0,1]。在output_XXX.avi中,按照估计顺序为人们分配了颜色。身体的右半部分为红色,左半部分为以下颜色。

#@markdown 0:绿色,1:蓝色,2:白色,3:黄色,4:桃红色,5:浅蓝色,6:深绿色,7:深蓝色,8:灰色,9:深黄色,10:深桃红色,11:深浅蓝色

order_specific = "" #@param {type: "string"}

#@markdown ---

#@markdown ### 【V】骨骼结构CSV文件

#@markdown 选择或输入跟踪目标模型的骨骼结构CSV文件的路径。请将csv文件上传到Google云端硬盘的“ autotrace”文件夹。

#@markdown 您可以选择 "Animasa-Miku" 和 "Animasa-Miku semi-standard", 也可以输入任何模型的骨骼结构CSV文件

#@markdown 如果要输入任何模型骨骼结构CSV文件, 请将csv文件上传到Google云端硬盘的 "autotrace" 文件夹下

#@markdown 然后请输入「/gdrive/My Drive/autotrace/[csv file name]」

born_model_csv = "born/\u3042\u306B\u307E\u3055\u5F0F\u30DF\u30AF\u6E96\u6A19\u6E96\u30DC\u30FC\u30F3.csv" #@param ["born/animasa_miku_born.csv", "born/animasa_miku_semi_standard_born.csv"] {allow-input: true}

#@markdown ---

#@markdown ### 【V】是否使用IK输出

#@markdown 选择以IK输出,yes或no

#@markdown 如果输入no,则以输出FK

ik_flag = "yes" #@param ['yes', 'no']

is_ik = 1 if ik_flag == "yes" else 0

#@markdown ---

#@markdown ### 【V】脚与地面位置校正

#@markdown 请输入数值的鞋跟的Y轴校正值(可以为小数)

#@markdown 输入负值会接近地面,输入正值会远离地面。

#@markdown 尽管会自动在某种程度上自动校正,但如果无法校正,请进行设置。

heel_position = 0.0 #@param {type: "number"}

#@markdown ---

#@markdown ### 【V】Z中心放大倍率

#@markdown 以将放大倍数应用到Z轴中心移动(可以是小数)

#@markdown 值越小,中心Z移动的宽度越小

#@markdown 输入0时,不进行Z轴中心移动。

center_z_scale = 2#@param {type: "number"}

#@markdown ---

#@markdown ### 【V】平滑频率

#@markdown 指定运动的平滑频率

#@markdown 请仅输入1或更大的整数

#@markdown 频率越大,频率越平滑。(行为幅度会变小)

smooth_times = 1#@param {type: "number"}

#@markdown ---

#@markdown ### 【V】移动稀疏量 (低于该阀值的运动宽度,不会进行输出,防抖动)

#@markdown 用数值(允许小数)指定用于稀疏移动(IK /中心)的移动量

#@markdown 如果在指定范围内有移动,则将稀疏。如果移动抽取量设置为0,则不执行抽取。

#@markdown 当移动稀疏量设置为0时,不进行稀疏。

threshold_pos = 0.3 #@param {type: "number"}

#@markdown ---

#@markdown ### 【V】旋转稀疏角 (低于该阀值的运动角度,则不会进行输出)

#@markdown 指定用于稀疏旋转键的角度(0到180度的十进制数)

#@markdown 如果在指定角度范围内有旋转,则稀疏旋转键。

threshold_rot = 3#@param {type: "number"}

print(" 【O】Maximum number of people in the video: "+str(number_people_max))

print(" 【O】Frame number to start analysis: "+str(frame_first))

print(" 【F】Frame number to finish analysis: "+str(end_frame_no))

print(" 【F】Reverse specification list: "+str(reverse_specific))

print(" 【F】Ordered list: "+str(order_specific))

print(" 【V】Bone structure CSV file: "+str(born_model_csv))

print(" 【V】Whether to output with IK: "+str(ik_flag))

print(" 【V】Heel position correction: "+str(heel_position))

print(" 【V】Center Z moving magnification: "+str(center_z_scale))

print(" 【V】Smoothing frequency: "+str(smooth_times))

print(" 【V】Movement key thinning amount: "+str(threshold_pos))

print(" 【V】Rotating Key Culling Angle: "+str(threshold_rot))

print("")

print("If the above is correct, please proceed to the next.")

#input_video = "/content/openpose/examples/media/video.avi"

run_mmd(input_video,number_people_max,frame_first,end_frame_no,reverse_specific,order_specific,born_model_csv,is_ik,heel_position,center_z_scale,smooth_times,threshold_pos,threshold_rot)

```

# License许可

发布和分发MMD自动跟踪的结果时,请确保检查许可证。Unity也是如此。

如果您能列出您的许可证,我将不胜感激。

[MMD运动跟踪自动化套件许可证](https://ch.nicovideo.jp/miu200521358/blomaga/ar1686913)

原作者:Twitter miu200521358

修改与优化:B站 妖风瑟瑟

|

github_jupyter

|

```

## Advanced Course in Machine Learning

## Week 4

## Exercise 2 / Probabilistic PCA

import numpy as np

import scipy

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

import matplotlib.animation as animation

from numpy import linalg as LA

sns.set_style("darkgrid")

def build_dataset(N, D, K, sigma=1):

x = np.zeros((D, N))

z = np.random.normal(0.0, 1.0, size=(K, N))

# Create a w with random values

w = np.random.normal(0.0, sigma**2, size=(D, K))

mean = np.dot(w, z)

for d in range(D):

for n in range(N):

x[d, n] = np.random.normal(mean[d, n], sigma**2)

print("True principal axes:")

print(w)

return x, mean, w, z

N = 5000 # number of data points

D = 2 # data dimensionality

K = 1 # latent dimensionality

sigma = 1.0

x, mean, w, z = build_dataset(N, D, K, sigma)

print(z)

print(w)

plt.figure(num=None, figsize=(8, 6), dpi=100, facecolor='w', edgecolor='k')

sns.scatterplot(z[0, :], 0, alpha=0.5, label='z')

origin = [0], [0] # origin point

plt.xlabel('x')

plt.ylabel('y')

plt.legend(loc='lower right')

plt.title('Probabilistic PCA, generated z')

plt.show()

plt.figure(num=None, figsize=(8, 6), dpi=100, facecolor='w', edgecolor='k')

sns.scatterplot(z[0, :], 0, alpha=0.5, label='z')

sns.scatterplot(mean[0, :], mean[1, :], color='red', alpha=0.5, label='Wz')

origin = [0], [0] # origin point

#Plot the principal axis

plt.quiver(*origin, w[0,0], w[1,0], color=['g'], scale=1, label='W')

plt.xlabel('x')

plt.ylabel('y')

plt.legend(loc='upper right')

plt.title('Probabilistic PCA, generated z')

plt.show()

print(x)

plt.figure(num=None, figsize=(8, 6), dpi=100, facecolor='w', edgecolor='k')

sns.scatterplot(x[0, :], x[1, :], color='orange', alpha=0.5)

#plt.axis([-5, 5, -5, 5])

plt.xlabel('x')

plt.ylabel('y')

#Plot the principal axis

plt.quiver(*origin, w[0,0], w[1,0], color=['g'], scale=10, label='W')

#Plot probability density contours

sns.kdeplot(x[0, :], x[1, :], n_levels=3, color='purple')

plt.title('Probabilistic PCA, generated x')

plt.show()

plt.figure(num=None, figsize=(8, 6), dpi=100, facecolor='w', edgecolor='k')

sns.scatterplot(x[0, :], x[1, :], color='orange', alpha=0.5, label='X')

sns.scatterplot(z[0, :], 0, alpha=0.5, label='z')

sns.scatterplot(mean[0, :], mean[1, :], color='red', alpha=0.5, label='Wz')

origin = [0], [0] # origin point

#Plot the principal axis

plt.quiver(*origin, w[0,0], w[1,0], color=['g'], scale=10, label='W')

plt.xlabel('x')

plt.ylabel('y')

plt.legend(loc='lower right')

plt.title('Probabilistic PCA')

plt.show()

plt.figure(num=None, figsize=(8, 6), dpi=100, facecolor='w', edgecolor='k')

sns.scatterplot(x[0, :], x[1, :], color='orange', alpha=0.5, label='X')

sns.scatterplot(z[0, :], 0, alpha=0.5, label='z')

sns.scatterplot(mean[0, :], mean[1, :], color='red', alpha=0.5, label='Wz')

origin = [0], [0] # origin point

#Plot the principal axis

plt.quiver(*origin, w[0,0], w[1,0], color=['g'], scale=10, label='W')

#Plot probability density contours

sns.kdeplot(x[0, :], x[1, :], n_levels=6, color='purple')

plt.xlabel('x')

plt.ylabel('y')

plt.legend(loc='lower right')

plt.title('Probabilistic PCA')

plt.show()

```

def main():

fig = plt.figure()

scat = plt.scatter(mean[0, :], color='red', alpha=0.5, label='Wz')

ani = animation.FuncAnimation(fig, update_plot, frames=xrange(N),

fargs=(scat))

plt.show()

def update_plot(i, scat):

scat.set_array(data[i])

return scat,

main()

|

github_jupyter

|

```

%matplotlib inline

import pandas as pd

import cv2

import numpy as np

from matplotlib import pyplot as plt

df = pd.read_csv("data/22800_SELECT_t___FROM_data_data_t.csv",header=None,index_col=0)

df = df.rename(columns={0:"no", 1: "CAPTDATA", 2: "CAPTIMAGE",3: "timestamp"})

df.info()

df.sample(5)

def alpha_to_gray(img):

alpha_channel = img[:, :, 3]

_, mask = cv2.threshold(alpha_channel, 128, 255, cv2.THRESH_BINARY) # binarize mask

color = img[:, :, :3]

img = cv2.bitwise_not(cv2.bitwise_not(color, mask=mask))

return cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

def preprocess(data):

data = bytes.fromhex(data[2:])

img = cv2.imdecode( np.asarray(bytearray(data), dtype=np.uint8), cv2.IMREAD_UNCHANGED )

img = alpha_to_gray(img)

kernel = np.ones((3, 3), np.uint8)

img = cv2.dilate(img, kernel, iterations=1)

img = cv2.medianBlur(img, 3)

kernel = np.ones((4, 4), np.uint8)

img = cv2.erode(img, kernel, iterations=1)

# plt.imshow(img)

return img

df["IMAGE"] = df["CAPTIMAGE"].apply(preprocess)

def bounding(gray):

# data = bytes.fromhex(df["CAPTIMAGE"][1][2:])

# image = cv2.imdecode( np.asarray(bytearray(data), dtype=np.uint8), cv2.IMREAD_UNCHANGED )

# alpha_channel = image[:, :, 3]

# _, mask = cv2.threshold(alpha_channel, 128, 255, cv2.THRESH_BINARY) # binarize mask

# color = image[:, :, :3]

# src = cv2.bitwise_not(cv2.bitwise_not(color, mask=mask))

ret, binary = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)

binary = cv2.bitwise_not(binary)

contours, hierachy = cv2.findContours(binary, cv2.RETR_EXTERNAL , cv2.CHAIN_APPROX_NONE)

ans = []

for h, tcnt in enumerate(contours):

x,y,w,h = cv2.boundingRect(tcnt)

if h < 25:

continue

if 40 < w < 100: # 2개가 붙어 있는 경우

ans.append([x,y,w//2,h])

ans.append([x+(w//2),y,w//2,h])

continue

if 100 <= w < 170:

ans.append([x,y,w//3,h])

ans.append([x+(w//3),y,w//3,h])

ans.append([x+(2*w//3),y,w//3,h])

# cv2.rectangle(src,(x,y),(x+w,y+h),(255,0,0),1)

ans.append([x,y,w,h])

return ans

# cv2.destroyAllWindows()

df["bounding"] = df["IMAGE"].apply(bounding)

def draw_bounding(idx):

CAPTIMAGE = df["CAPTIMAGE"][idx]

bounding = df["bounding"][idx]

data = bytes.fromhex(CAPTIMAGE[2:])

image = cv2.imdecode( np.asarray(bytearray(data), dtype=np.uint8), cv2.IMREAD_UNCHANGED )

alpha_channel = image[:, :, 3]

_, mask = cv2.threshold(alpha_channel, 128, 255, cv2.THRESH_BINARY) # binarize mask

color = image[:, :, :3]

src = cv2.bitwise_not(cv2.bitwise_not(color, mask=mask))

for x,y,w,h in bounding:

# print(x,y,w,h)

cv2.rectangle(src,(x,y),(x+w,y+h),(255,0,0),1)

return src

import random

nrows = 4

ncols = 4

fig, axes = plt.subplots(nrows=nrows, ncols=ncols)

fig.set_size_inches((16, 6))

for i in range(nrows):

for j in range(ncols):

idx = random.randrange(20,22800)

axes[i][j].set_title(str(idx))

axes[i][j].imshow(draw_bounding(idx))

fig.tight_layout()

plt.savefig('sample.png')

plt.show()

charImg = []

for idx in df.index:

IMAGE = df["IMAGE"][idx]

bounding = df["bounding"][idx]

for x,y,w,h in bounding:

newImg = IMAGE[y:y+h,x:x+w]

newImg = cv2.resize(newImg, dsize=(41, 38), interpolation=cv2.INTER_NEAREST)

charImg.append(newImg/255.0)

# cast to numpy arrays

trainingImages = np.asarray(charImg)

# reshape img array to vector

def reshape_image(img):

return np.reshape(img,len(img)*len(img[0]))

img_reshape = np.zeros((len(trainingImages),len(trainingImages[0])*len(trainingImages[0][0])))

for i in range(0,len(trainingImages)):

img_reshape[i] = reshape_image(trainingImages[i])

from sklearn.cluster import KMeans

import matplotlib.pyplot as plt

import seaborn as sns

# create model and prediction

model = KMeans(n_clusters=40,algorithm='auto')

model.fit(img_reshape)

predict = pd.DataFrame(model.predict(img_reshape))

predict.columns=['predict']

import pickle

pickle.dump(model, open("KMeans_40_22800.pkl", "wb"))

import pickle

model = pickle.load(open("KMeans_40_22800.pkl", "rb"))

predict = pd.DataFrame(model.predict(img_reshape))

predict.columns=['predict']

import random

from tqdm import tqdm

r = pd.concat([pd.DataFrame(img_reshape),predict],axis=1)

!rm -rf res_40

!mkdir res_40

nrows = 4

ncols = 10

fig, axes = plt.subplots(nrows=nrows, ncols=ncols)

fig.set_size_inches((16, 6))

for j in tqdm(range(40)):

i = 0

nSample = min(nrows * ncols,len(r[r["predict"] == j]))

for idx in r[r["predict"] == j].sample(nSample).index:

axes[i // ncols][i % ncols].set_title(str(idx))

axes[i // ncols][i % ncols].imshow(trainingImages[idx])

i+=1

fig.tight_layout()

plt.savefig('res_40/sample_' + str(j) + '.png')

```

98 95 92 222 255

|

github_jupyter

|

```

# Import and create a new SQLContext

from pyspark.sql import SQLContext

sqlContext = SQLContext(sc)

# Read the country CSV file into an RDD.

country_lines = sc.textFile('file:///home/ubuntu/work/notebooks/UCSD/big-data-3/final-project/country-list.csv')

country_lines.collect()

# Convert each line into a pair of words

country_lines.map(lambda a: a.split(",")).collect()

# Convert each pair of words into a tuple

country_tuples = country_lines.map(lambda a: (a.split(",")[0].lower(), a.split(",")[1]))

# Create the DataFrame, look at schema and contents

countryDF = sqlContext.createDataFrame(country_tuples, ["country", "code"])

countryDF.printSchema()

countryDF.take(3)

# Read tweets CSV file into RDD of lines

tweets = sc.textFile('file:///home/ubuntu/work/notebooks/UCSD/big-data-3/final-project/tweets.csv')

tweets.count()

# Clean the data: some tweets are empty. Remove the empty tweets using filter()

filtered_tweets = tweets.filter(lambda a: len(a) > 0)

filtered_tweets.count()

# Perform WordCount on the cleaned tweet texts. (note: this is several lines.)

word_counts = filtered_tweets.flatMap(lambda a: a.split(" ")) \

.map(lambda word: (word.lower(), 1)) \

.reduceByKey(lambda a, b: a + b)

from pyspark.sql import HiveContext

from pyspark.sql.types import *

# sc is an existing SparkContext.

sqlContext = HiveContext(sc)

schemaString = "word count"

fields = [StructField(field_name, StringType(), True) for field_name in schemaString.split()]

schema = StructType(fields)

# Create the DataFrame of tweet word counts

tweetsDF = sqlContext.createDataFrame(word_counts, schema)

tweetsDF.printSchema()

tweetsDF.count()

# Join the country and tweet DataFrames (on the appropriate column)

joined = countryDF.join(tweetsDF, countryDF.country == tweetsDF.word)

joined.take(5)

joined.show()

# Question 1: number of distinct countries mentioned

distinct_countries = joined.select("country").distinct()

distinct_countries.show(100)

# Question 2: number of countries mentioned in tweets.

from pyspark.sql.functions import sum

from pyspark.sql import SparkSession

from pyspark.sql import Row

countries_count = joined.groupBy("country")

joined.createOrReplaceTempView("records")

spark.sql("SELECT country, count(*) count1 FROM records group by country order by count1 desc, country asc").show(100)

# Table 1: top three countries and their counts.

from pyspark.sql.functions import desc

from pyspark.sql.functions import col

top_3 = joined.sort(col("count").desc())

top_3.show()

# Table 2: counts for Wales, Iceland, and Japan.

```

|

github_jupyter

|

# Datafaucet

Datafaucet is a productivity framework for ETL, ML application. Simplifying some of the common activities which are typical in Data pipeline such as project scaffolding, data ingesting, start schema generation, forecasting etc.

```

import datafaucet as dfc

```

## Loading and Saving Data

```

dfc.project.load()

query = """

SELECT

p.payment_date,

p.amount,

p.rental_id,

p.staff_id,

c.*

FROM payment p

INNER JOIN customer c

ON p.customer_id = c.customer_id;

"""

df = dfc.load(query, 'pagila')

```

#### Select cols

```

df.cols.find('id').columns

df.cols.find(by_type='string').columns

df.cols.find(by_func=lambda x: x.startswith('st')).columns

df.cols.find('^st').columns

```

#### Collect data, oriented by rows or cols

```

df.cols.find(by_type='numeric').rows.collect(3)

df.cols.find(by_type='string').collect(3)

df.cols.find('name', 'date').data.collect(3)

```

#### Get just one row or column

```

df.cols.find('active', 'amount', 'name').one()

df.cols.find('active', 'amount', 'name').rows.one()

```

#### Grid view

```

df.cols.find('amount', 'id', 'name').data.grid(5)

```

#### Data Exploration

```

df.cols.find('amount', 'id', 'name').data.facets()

```

#### Rename columns

```

df.cols.find(by_type='timestamp').rename('new_', '***').columns

# to do

# df.cols.rename(transform=['unidecode', 'alnum', 'alpha', 'num', 'lower', 'trim', 'squeeze', 'slice', tr("abc", "_", mode='')'])

# df.cols.rename(transform=['unidecode', 'alnum', 'lower', 'trim("_")', 'squeeze("_")'])

# as a dictionary

mapping = {

'staff_id': 'foo',

'first_name': 'bar',

'email': 'qux',

'active':'active'

}

# or as a list of 2-tuples

mapping = [

('staff_id','foo'),

('first_name','bar'),

'active'

]

dict(zip(df.columns, df.cols.rename('new_', '***', mapping).columns))

```

#### Drop multiple columns

```

df.cols.find('id').drop().rows.collect(3)

```

#### Apply to multiple columns

```

from pyspark.sql import functions as F

(df

.cols.find(by_type='string').lower()

.cols.get('email').split('@')

.cols.get('email').expand(2)

.cols.find('name', 'email')

.rows.collect(3)

)

```

### Aggregations

```

from datafaucet.spark import aggregations as A

df.cols.find('amount', '^st.*id', 'first_name').agg(A.all).cols.collect(10)

```

##### group by a set of columns

```

df.cols.find('amount').groupby('staff_id', 'store_id').agg(A.all).cols.collect(4)

```

#### Aggregate specific metrics

```

# by function

df.cols.get('amount', 'active').groupby('customer_id').agg({'count':F.count, 'sum': F.sum}).rows.collect(10)

# or by alias

df.cols.get('amount', 'active').groupby('customer_id').agg('count','sum').rows.collect(10)

# or a mix of the two

df.cols.get('amount', 'active').groupby('customer_id').agg('count',{'sum': F.sum}).rows.collect(10)

```

#### Featurize specific metrics in a single row

```

(df

.cols.get('amount', 'active')

.groupby('customer_id', 'store_id')

.featurize({'count':A.count, 'sum':A.sum, 'avg':A.avg})

.rows.collect(10)

)

# todo:

# different features per different column

```

#### Plot dataset statistics

```

df.data.summary()

from bokeh.io import output_notebook

output_notebook()

from bokeh.plotting import figure, show, output_file

p = figure(plot_width=400, plot_height=400)

p.hbar(y=[1, 2, 3], height=0.5, left=0,

right=[1.2, 2.5, 3.7], color="navy")

show(p)

import seaborn as sns

import matplotlib.pyplot as plt

sns.set(style="whitegrid")

# Initialize the matplotlib figure

f, ax = plt.subplots(figsize=(6, 6))

# Load the example car crash dataset

crashes = sns.load_dataset("car_crashes").sort_values("total", ascending=False)[:10]

# Plot the total crashes

sns.set_color_codes("pastel")

sns.barplot(x="total", y="abbrev", data=crashes,

label="Total", color="b")

# Plot the crashes where alcohol was involved

sns.set_color_codes("muted")

sns.barplot(x="alcohol", y="abbrev", data=crashes,

label="Alcohol-involved", color="b")

# Add a legend and informative axis label

ax.legend(ncol=2, loc="lower right", frameon=True)

ax.set(xlim=(0, 24), ylabel="",

xlabel="Automobile collisions per billion miles")

sns.despine(left=True, bottom=True)

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

sns.set(style="white", palette="muted", color_codes=True)

# Generate a random univariate dataset

rs = np.random.RandomState(10)

d = rs.normal(size=100)

# Plot a simple histogram with binsize determined automatically

sns.distplot(d, hist=True, kde=True, rug=True, color="b");

import seaborn as sns

sns.set(style="ticks")

df = sns.load_dataset("iris")

sns.pairplot(df, hue="species")

from IPython.display import HTML

HTML('''

<!-- Bootstrap CSS -->

<link rel="stylesheet" href="https://stackpath.bootstrapcdn.com/bootstrap/4.1.3/css/bootstrap.min.css" crossorigin="anonymous">

<div class="container-fluid">

<div class="jumbotron">

<h1 class="display-4">Hello, world!</h1>

<p class="lead">This is a simple hero unit, a simple jumbotron-style component for calling extra attention to featured content or information.</p>

<hr class="my-4">

<p>It uses utility classes for typography and spacing to space content out within the larger container.</p>

<a class="btn btn-primary btn-lg" href="#" role="button">Learn more</a>

</div>

<button type="button" class="btn btn-secondary" data-toggle="tooltip" data-placement="top" title="Tooltip on top">

Tooltip on top

</button>

<button type="button" class="btn btn-secondary" data-toggle="tooltip" data-placement="right" title="Tooltip on right">

Tooltip on right

</button>

<button type="button" class="btn btn-secondary" data-toggle="tooltip" data-placement="bottom" title="Tooltip on bottom">

Tooltip on bottom

</button>

<button type="button" class="btn btn-secondary" data-toggle="tooltip" data-placement="left" title="Tooltip on left">

Tooltip on left

</button>

<table class="table">

<thead>

<tr>

<th scope="col">#</th>

<th scope="col">First</th>

<th scope="col">Last</th>

<th scope="col">Handle</th>

</tr>

</thead>

<tbody>

<tr>

<th scope="row">1</th>

<td>Mark</td>

<td>Otto</td>

<td>@mdo</td>

</tr>

<tr>

<th scope="row">2</th>

<td>Jacob</td>

<td>Thornton</td>

<td>@fat</td>

</tr>

<tr>

<th scope="row">3</th>

<td>Larry</td>

<td>the Bird</td>

<td>@twitter</td>

</tr>

</tbody>

</table>

<span class="badge badge-primary">Primary</span>

<span class="badge badge-secondary">Secondary</span>

<span class="badge badge-success">Success</span>

<span class="badge badge-danger">Danger</span>

<span class="badge badge-warning">Warning</span>

<span class="badge badge-info">Info</span>

<span class="badge badge-light">Light</span>

<span class="badge badge-dark">Dark</span>

<table class="table table-sm" style="text-align:left">

<thead>

<tr>

<th scope="col">#</th>

<th scope="col">First</th>

<th scope="col">Last</th>

<th scope="col">Handle</th>

<th scope="col">bar</th>

</tr>

</thead>

<tbody>

<tr>

<th scope="row">1</th>

<td>Mark</td>

<td>Otto</td>

<td>@mdo</td>

<td class="text-left"><span class="badge badge-primary" style="width: 75%">Primary</span></td>

</tr>

<tr>

<th scope="row">2</th>

<td>Jacob</td>

<td>Thornton</td>

<td>@fat</td>

<td class="text-left"><span class="badge badge-secondary" style="width: 25%">Primary</span></td>

</tr>

<tr>

<th scope="row">3</th>

<td colspan="2">Larry the Bird</td>

<td>@twitter</td>

<td class="text-left"><span class="badge badge-warning" style="width: 55%">Primary</span></td>

</div>

</tr>

</tbody>

</table>

</div>''')

tbl = '''

<table class="table table-sm">

<thead>

<tr>

<th scope="col">#</th>

<th scope="col">First</th>

<th scope="col">Last</th>

<th scope="col">Handle</th>

<th scope="col">bar</th>

</tr>

</thead>

<tbody>

<tr>

<th scope="row">1</th>

<td>Mark</td>

<td>Otto</td>

<td>@mdo</td>

<td class="text-left"><span class="badge badge-primary" style="width: 75%">75%</span></td>

</tr>

<tr>

<th scope="row">2</th>

<td>Jacob</td>

<td>Thornton</td>

<td>@fat</td>

<td class="text-left"><span class="badge badge-secondary" style="width: 25%" title="Tooltip on top">25%</span></td>

</tr>

<tr>

<th scope="row">3</th>

<td colspan="2">Larry the Bird</td>

<td>@twitter</td>

<td class="text-left"><span class="badge badge-warning" style="width: 0%">0%</span></td>

</tr>

</tbody>

</table>

'''

drp = '''

<div class="dropdown">

<button class="btn btn-secondary dropdown-toggle" type="button" id="dropdownMenuButton" data-toggle="dropdown" aria-haspopup="true" aria-expanded="false">

Dropdown button

</button>

<div class="dropdown-menu" aria-labelledby="dropdownMenuButton">

<a class="dropdown-item" href="#">Action</a>

<a class="dropdown-item" href="#">Another action</a>

<a class="dropdown-item" href="#">Something else here</a>

</div>

</div>'''

tabs = f'''

<nav>

<div class="nav nav-tabs" id="nav-tab" role="tablist">

<a class="nav-item nav-link active" id="nav-home-tab" data-toggle="tab" href="#nav-home" role="tab" aria-controls="nav-home" aria-selected="true">Home</a>

<a class="nav-item nav-link" id="nav-profile-tab" data-toggle="tab" href="#nav-profile" role="tab" aria-controls="nav-profile" aria-selected="false">Profile</a>

<a class="nav-item nav-link" id="nav-contact-tab" data-toggle="tab" href="#nav-contact" role="tab" aria-controls="nav-contact" aria-selected="false">Contact</a>

</div>

</nav>

<div class="tab-content" id="nav-tabContent">

<div class="tab-pane fade show active" id="nav-home" role="tabpanel" aria-labelledby="nav-home-tab">..jjj.</div>

<div class="tab-pane fade" id="nav-profile" role="tabpanel" aria-labelledby="nav-profile-tab">..kkk.</div>

<div class="tab-pane fade" id="nav-contact" role="tabpanel" aria-labelledby="nav-contact-tab">{tbl}</div>

</div>

'''

from IPython.display import HTML

HTML(f'''

<!-- Bootstrap CSS -->

<link rel="stylesheet" href="https://stackpath.bootstrapcdn.com/bootstrap/4.1.3/css/bootstrap.min.css" crossorigin="anonymous">

<div class="container-fluid">

<div class="row">

<div class="col">

{drp}

</div>

<div class="col">

{tabs}

</div>

<div class="col">

{tbl}

</div>

</div>

</div>

<script src="https://stackpath.bootstrapcdn.com/bootstrap/4.1.3/js/bootstrap.bundle.min.js" crossorigin="anonymous" >

''')

from IPython.display import HTML

HTML(f'''

<!-- Bootstrap CSS -->

<link rel="stylesheet" href="https://stackpath.bootstrapcdn.com/bootstrap/4.1.3/css/bootstrap.min.css" crossorigin="anonymous">

<script src="https://stackpath.bootstrapcdn.com/bootstrap/4.1.3/js/bootstrap.bundle.min.js" crossorigin="anonymous" >

''')

d =df.cols.find('id', 'name').sample(10)

d.columns

tbl_head = '''

<thead>

<tr>

'''

tbl_head += '\n'.join([' <th scope="col">'+str(x)+'</th>' for x in d.columns])

tbl_head +='''

</tr>

</thead>

'''

print(tbl_head)

tbl_body = '''

<tbody>

<tr>

<th scope="row">1</th>

<td>Mark</td>

<td>Otto</td>

<td>@mdo</td>

<td class="text-left"><span class="badge badge-primary" style="width: 75%">75%</span></td>

</tr>

<tr>

<th scope="row">2</th>

<td>Jacob</td>

<td>Thornton</td>

<td>@fat</td>

<td class="text-left"><span class="badge badge-secondary" style="width: 25%" title="Tooltip on top">25%</span></td>

</tr>

<tr>

<th scope="row">3</th>

<td colspan="2">Larry the Bird</td>

<td>@twitter</td>

<td class="text-left"><span class="badge badge-warning" style="width: 0%">0%</span></td>

</tr>

</tbody>

</table>

'''

HTML(f'''

<!-- Bootstrap CSS -->

<div class="container-fluid">

<div class="row">

<div class="col">

<table class="table table-sm">

{tbl_head}

{tbl_body}

</table>

</div>

</div>

</div>

''')

# .rows.sample()

# .cols.select('name', 'id', 'amount')\

# .cols.apply(F.lower, 'name')\

# .cols.apply(F.floor, 'amount', output_prefix='_')\

# .cols.drop('^amount$')\

# .cols.rename()

# .cols.unicode()

.grid()

df = df.cols.select('name')

df = df.rows.overwrite([('Nhập mật', 'khẩu')])

df.columns

# .rows.overwrite(['Nhập mật', 'khẩu'])\

# .cols.apply(F.lower)\

# .grid()

# #withColumn('pippo', F.lower(F.col('first_name'))).grid()

import pandas as pd

df = pd.DataFrame({'lab':['A', 'B', 'C'], 'val':[10, 30, 20]})

df.plot.bar(x='lab', y='val', rot=0);

```

|

github_jupyter

|

https://www.kaggle.com/danofer/sarcasm

<div class="markdown-converter__text--rendered"><h3>Context</h3>

<p>This dataset contains 1.3 million Sarcastic comments from the Internet commentary website Reddit. The dataset was generated by scraping comments from Reddit (not by me :)) containing the <code>\s</code> ( sarcasm) tag. This tag is often used by Redditors to indicate that their comment is in jest and not meant to be taken seriously, and is generally a reliable indicator of sarcastic comment content.</p>

<h3>Content</h3>

<p>Data has balanced and imbalanced (i.e true distribution) versions. (True ratio is about 1:100). The

corpus has 1.3 million sarcastic statements, along with what they responded to as well as many non-sarcastic comments from the same source.</p>

<p>Labelled comments are in the <code>train-balanced-sarcasm.csv</code> file.</p>

<h3>Acknowledgements</h3>

<p>The data was gathered by: Mikhail Khodak and Nikunj Saunshi and Kiran Vodrahalli for their article "<a href="https://arxiv.org/abs/1704.05579" rel="nofollow">A Large Self-Annotated Corpus for Sarcasm</a>". The data is hosted <a href="http://nlp.cs.princeton.edu/SARC/0.0/" rel="nofollow">here</a>.</p>

<p>Citation:</p>

<pre><code>@unpublished{SARC,

authors={Mikhail Khodak and Nikunj Saunshi and Kiran Vodrahalli},

title={A Large Self-Annotated Corpus for Sarcasm},

url={https://arxiv.org/abs/1704.05579},

year=2017

}

</code></pre>

<p><a href="http://nlp.cs.princeton.edu/SARC/0.0/readme.txt" rel="nofollow">Annotation of files in the original dataset: readme.txt</a>.</p>

<h3>Inspiration</h3>

<ul>

<li>Predicting sarcasm and relevant NLP features (e.g. subjective determinant, racism, conditionals, sentiment heavy words, "Internet Slang" and specific phrases). </li>

<li>Sarcasm vs Sentiment</li>

<li>Unusual linguistic features such as caps, italics, or elongated words. e.g., "Yeahhh, I'm sure THAT is the right answer".</li>

<li>Topics that people tend to react to sarcastically</li>

</ul></div>

```

import os

# Install java

! apt-get update -qq

! apt-get install -y openjdk-8-jdk-headless -qq > /dev/null

os.environ["JAVA_HOME"] = "/usr/lib/jvm/java-8-openjdk-amd64"

os.environ["PATH"] = os.environ["JAVA_HOME"] + "/bin:" + os.environ["PATH"]

! java -version

# Install pyspark

! pip install --ignore-installed pyspark==2.4.4

# Install Spark NLP

! pip install --ignore-installed spark-nlp

import sys

import time

import sparknlp

from pyspark.sql import SparkSession

packages = [

'JohnSnowLabs:spark-nlp: 2.5.5'

]

spark = SparkSession \

.builder \

.appName("ML SQL session") \

.config('spark.jars.packages', ','.join(packages)) \

.config('spark.executor.instances','2') \

.config("spark.executor.memory", "2g") \

.config("spark.driver.memory","16g") \

.getOrCreate()

print("Spark NLP version: ", sparknlp.version())

print("Apache Spark version: ", spark.version)

! wget -N https://s3.amazonaws.com/auxdata.johnsnowlabs.com/public/resources/en/sarcasm/train-balanced-sarcasm.csv -P /tmp

from pyspark.sql import SQLContext

sql = SQLContext(spark)

trainBalancedSarcasmDF = spark.read.option("header", True).option("inferSchema", True).csv("/tmp/train-balanced-sarcasm.csv")

trainBalancedSarcasmDF.printSchema()

# Let's create a temp view (table) for our SQL queries

trainBalancedSarcasmDF.createOrReplaceTempView('data')

sql.sql('SELECT COUNT(*) FROM data').collect()

sql.sql('select * from data limit 20').show()

sql.sql('select label,count(*) as cnt from data group by label order by cnt desc').show()

sql.sql('select count(*) from data where comment is null').collect()

df = sql.sql('select label,concat(parent_comment,"\n",comment) as comment from data where comment is not null and parent_comment is not null limit 100000')

print(type(df))

df.printSchema()

df.show()

from sparknlp.annotator import *

from sparknlp.common import *

from sparknlp.base import *

from pyspark.ml import Pipeline

document_assembler = DocumentAssembler() \

.setInputCol("comment") \

.setOutputCol("document")

sentence_detector = SentenceDetector() \

.setInputCols(["document"]) \

.setOutputCol("sentence") \

.setUseAbbreviations(True)

tokenizer = Tokenizer() \

.setInputCols(["sentence"]) \

.setOutputCol("token")

stemmer = Stemmer() \

.setInputCols(["token"]) \

.setOutputCol("stem")

normalizer = Normalizer() \

.setInputCols(["stem"]) \

.setOutputCol("normalized")

finisher = Finisher() \

.setInputCols(["normalized"]) \

.setOutputCols(["ntokens"]) \

.setOutputAsArray(True) \

.setCleanAnnotations(True)

nlp_pipeline = Pipeline(stages=[document_assembler, sentence_detector, tokenizer, stemmer, normalizer, finisher])

nlp_model = nlp_pipeline.fit(df)

processed = nlp_model.transform(df).persist()

processed.count()

processed.show()

train, test = processed.randomSplit(weights=[0.7, 0.3], seed=123)

print(train.count())

print(test.count())

from pyspark.ml import feature as spark_ft

stopWords = spark_ft.StopWordsRemover.loadDefaultStopWords('english')

sw_remover = spark_ft.StopWordsRemover(inputCol='ntokens', outputCol='clean_tokens', stopWords=stopWords)

tf = spark_ft.CountVectorizer(vocabSize=500, inputCol='clean_tokens', outputCol='tf')

idf = spark_ft.IDF(minDocFreq=5, inputCol='tf', outputCol='idf')

feature_pipeline = Pipeline(stages=[sw_remover, tf, idf])

feature_model = feature_pipeline.fit(train)

train_featurized = feature_model.transform(train).persist()

train_featurized.count()

train_featurized.show()

train_featurized.groupBy("label").count().show()

train_featurized.printSchema()

from pyspark.ml import classification as spark_cls

rf = spark_cls. RandomForestClassifier(labelCol="label", featuresCol="idf", numTrees=100)

model = rf.fit(train_featurized)

test_featurized = feature_model.transform(test)

preds = model.transform(test_featurized)

preds.show()

pred_df = preds.select('comment', 'label', 'prediction').toPandas()

pred_df.head()

import pandas as pd

from sklearn import metrics as skmetrics

pd.DataFrame(

data=skmetrics.confusion_matrix(pred_df['label'], pred_df['prediction']),

columns=['pred ' + l for l in ['0','1']],

index=['true ' + l for l in ['0','1']]

)

print(skmetrics.classification_report(pred_df['label'], pred_df['prediction'],

target_names=['0','1']))

spark.stop()

```

|

github_jupyter

|

```

# Copyright 2020 NVIDIA Corporation. All Rights Reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# ==============================================================================

```

<img src="http://developer.download.nvidia.com/compute/machine-learning/frameworks/nvidia_logo.png" style="width: 90px; float: right;">

# Object Detection with TRTorch (SSD)

---

## Overview

In PyTorch 1.0, TorchScript was introduced as a method to separate your PyTorch model from Python, make it portable and optimizable.

TRTorch is a compiler that uses TensorRT (NVIDIA's Deep Learning Optimization SDK and Runtime) to optimize TorchScript code. It compiles standard TorchScript modules into ones that internally run with TensorRT optimizations.

TensorRT can take models from any major framework and specifically tune them to perform better on specific target hardware in the NVIDIA family, and TRTorch enables us to continue to remain in the PyTorch ecosystem whilst doing so. This allows us to leverage the great features in PyTorch, including module composability, its flexible tensor implementation, data loaders and more. TRTorch is available to use with both PyTorch and LibTorch.

To get more background information on this, we suggest the **lenet-getting-started** notebook as a primer for getting started with TRTorch.

### Learning objectives

This notebook demonstrates the steps for compiling a TorchScript module with TRTorch on a pretrained SSD network, and running it to test the speedup obtained.

## Contents

1. [Requirements](#1)

2. [SSD Overview](#2)

3. [Creating TorchScript modules](#3)

4. [Compiling with TRTorch](#4)

5. [Running Inference](#5)

6. [Measuring Speedup](#6)

7. [Conclusion](#7)

---

<a id="1"></a>

## 1. Requirements

Follow the steps in `notebooks/README` to prepare a Docker container, within which you can run this demo notebook.

In addition to that, run the following cell to obtain additional libraries specific to this demo.

```

# Known working versions

!pip install numpy==1.21.2 scipy==1.5.2 Pillow==6.2.0 scikit-image==0.17.2 matplotlib==3.3.0

```

---

<a id="2"></a>

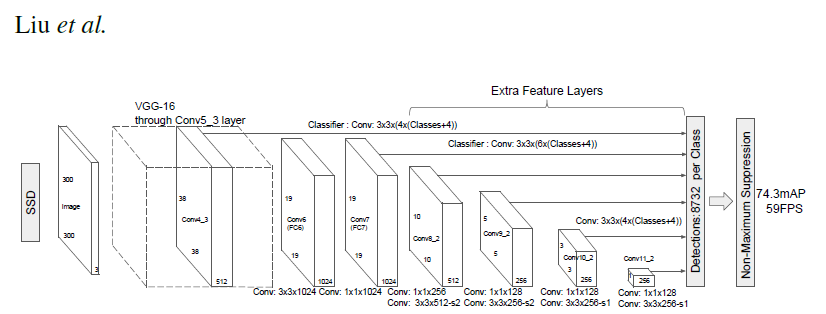

## 2. SSD

### Single Shot MultiBox Detector model for object detection

_ | _

- | -

|

PyTorch has a model repository called the PyTorch Hub, which is a source for high quality implementations of common models. We can get our SSD model pretrained on [COCO](https://cocodataset.org/#home) from there.

### Model Description

This SSD300 model is based on the

[SSD: Single Shot MultiBox Detector](https://arxiv.org/abs/1512.02325) paper, which

describes SSD as “a method for detecting objects in images using a single deep neural network".

The input size is fixed to 300x300.

The main difference between this model and the one described in the paper is in the backbone.

Specifically, the VGG model is obsolete and is replaced by the ResNet-50 model.

From the

[Speed/accuracy trade-offs for modern convolutional object detectors](https://arxiv.org/abs/1611.10012)

paper, the following enhancements were made to the backbone:

* The conv5_x, avgpool, fc and softmax layers were removed from the original classification model.

* All strides in conv4_x are set to 1x1.

The backbone is followed by 5 additional convolutional layers.

In addition to the convolutional layers, we attached 6 detection heads:

* The first detection head is attached to the last conv4_x layer.

* The other five detection heads are attached to the corresponding 5 additional layers.

Detector heads are similar to the ones referenced in the paper, however,

they are enhanced by additional BatchNorm layers after each convolution.

More information about this SSD model is available at Nvidia's "DeepLearningExamples" Github [here](https://github.com/NVIDIA/DeepLearningExamples/tree/master/PyTorch/Detection/SSD).

```

import torch

torch.hub._validate_not_a_forked_repo=lambda a,b,c: True

# List of available models in PyTorch Hub from Nvidia/DeepLearningExamples

torch.hub.list('NVIDIA/DeepLearningExamples:torchhub')

# load SSD model pretrained on COCO from Torch Hub

precision = 'fp32'

ssd300 = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_ssd', model_math=precision);

```

Setting `precision="fp16"` will load a checkpoint trained with mixed precision

into architecture enabling execution on Tensor Cores. Handling mixed precision data requires the Apex library.

### Sample Inference

We can now run inference on the model. This is demonstrated below using sample images from the COCO 2017 Validation set.

```

# Sample images from the COCO validation set

uris = [

'http://images.cocodataset.org/val2017/000000397133.jpg',

'http://images.cocodataset.org/val2017/000000037777.jpg',

'http://images.cocodataset.org/val2017/000000252219.jpg'

]

# For convenient and comprehensive formatting of input and output of the model, load a set of utility methods.

utils = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_ssd_processing_utils')

# Format images to comply with the network input

inputs = [utils.prepare_input(uri) for uri in uris]

tensor = utils.prepare_tensor(inputs, False)

# The model was trained on COCO dataset, which we need to access in order to

# translate class IDs into object names.

classes_to_labels = utils.get_coco_object_dictionary()

# Next, we run object detection

model = ssd300.eval().to("cuda")

detections_batch = model(tensor)

# By default, raw output from SSD network per input image contains 8732 boxes with

# localization and class probability distribution.

# Let’s filter this output to only get reasonable detections (confidence>40%) in a more comprehensive format.

results_per_input = utils.decode_results(detections_batch)

best_results_per_input = [utils.pick_best(results, 0.40) for results in results_per_input]

```

### Visualize results

```

from matplotlib import pyplot as plt

import matplotlib.patches as patches

# The utility plots the images and predicted bounding boxes (with confidence scores).

def plot_results(best_results):

for image_idx in range(len(best_results)):

fig, ax = plt.subplots(1)

# Show original, denormalized image...

image = inputs[image_idx] / 2 + 0.5

ax.imshow(image)

# ...with detections

bboxes, classes, confidences = best_results[image_idx]

for idx in range(len(bboxes)):

left, bot, right, top = bboxes[idx]

x, y, w, h = [val * 300 for val in [left, bot, right - left, top - bot]]

rect = patches.Rectangle((x, y), w, h, linewidth=1, edgecolor='r', facecolor='none')

ax.add_patch(rect)

ax.text(x, y, "{} {:.0f}%".format(classes_to_labels[classes[idx] - 1], confidences[idx]*100), bbox=dict(facecolor='white', alpha=0.5))

plt.show()

# Visualize results without TRTorch/TensorRT

plot_results(best_results_per_input)

```

### Benchmark utility

```

import time

import numpy as np

import torch.backends.cudnn as cudnn

cudnn.benchmark = True

# Helper function to benchmark the model

def benchmark(model, input_shape=(1024, 1, 32, 32), dtype='fp32', nwarmup=50, nruns=1000):

input_data = torch.randn(input_shape)

input_data = input_data.to("cuda")

if dtype=='fp16':

input_data = input_data.half()

print("Warm up ...")

with torch.no_grad():

for _ in range(nwarmup):

features = model(input_data)

torch.cuda.synchronize()

print("Start timing ...")

timings = []

with torch.no_grad():

for i in range(1, nruns+1):

start_time = time.time()

pred_loc, pred_label = model(input_data)

torch.cuda.synchronize()

end_time = time.time()

timings.append(end_time - start_time)

if i%10==0:

print('Iteration %d/%d, avg batch time %.2f ms'%(i, nruns, np.mean(timings)*1000))

print("Input shape:", input_data.size())

print("Output location prediction size:", pred_loc.size())

print("Output label prediction size:", pred_label.size())

print('Average batch time: %.2f ms'%(np.mean(timings)*1000))

```

We check how well the model performs **before** we use TRTorch/TensorRT

```

# Model benchmark without TRTorch/TensorRT

model = ssd300.eval().to("cuda")

benchmark(model, input_shape=(128, 3, 300, 300), nruns=100)

```

---

<a id="3"></a>

## 3. Creating TorchScript modules

To compile with TRTorch, the model must first be in **TorchScript**. TorchScript is a programming language included in PyTorch which removes the Python dependency normal PyTorch models have. This conversion is done via a JIT compiler which given a PyTorch Module will generate an equivalent TorchScript Module. There are two paths that can be used to generate TorchScript: **Tracing** and **Scripting**. <br>

- Tracing follows execution of PyTorch generating ops in TorchScript corresponding to what it sees. <br>

- Scripting does an analysis of the Python code and generates TorchScript, this allows the resulting graph to include control flow which tracing cannot do.

Tracing however due to its simplicity is more likely to compile successfully with TRTorch (though both systems are supported).

```

model = ssd300.eval().to("cuda")

traced_model = torch.jit.trace(model, [torch.randn((1,3,300,300)).to("cuda")])

```

If required, we can also save this model and use it independently of Python.

```

# This is just an example, and not required for the purposes of this demo

torch.jit.save(traced_model, "ssd_300_traced.jit.pt")

# Obtain the average time taken by a batch of input with Torchscript compiled modules

benchmark(traced_model, input_shape=(128, 3, 300, 300), nruns=100)

```

---

<a id="4"></a>

## 4. Compiling with TRTorch

TorchScript modules behave just like normal PyTorch modules and are intercompatible. From TorchScript we can now compile a TensorRT based module. This module will still be implemented in TorchScript but all the computation will be done in TensorRT.

```

import trtorch

# The compiled module will have precision as specified by "op_precision".

# Here, it will have FP16 precision.

trt_model = trtorch.compile(traced_model, {

"inputs": [trtorch.Input((3, 3, 300, 300))],

"enabled_precisions": {torch.float, torch.half}, # Run with FP16

"workspace_size": 1 << 20

})

```

---

<a id="5"></a>

## 5. Running Inference

Next, we run object detection

```

# using a TRTorch module is exactly the same as how we usually do inference in PyTorch i.e. model(inputs)

detections_batch = trt_model(tensor.to(torch.half)) # convert the input to half precision

# By default, raw output from SSD network per input image contains 8732 boxes with

# localization and class probability distribution.

# Let’s filter this output to only get reasonable detections (confidence>40%) in a more comprehensive format.

results_per_input = utils.decode_results(detections_batch)

best_results_per_input_trt = [utils.pick_best(results, 0.40) for results in results_per_input]

```

Now, let's visualize our predictions!

```

# Visualize results with TRTorch/TensorRT

plot_results(best_results_per_input_trt)

```

We get similar results as before!

---

## 6. Measuring Speedup

We can run the benchmark function again to see the speedup gained! Compare this result with the same batch-size of input in the case without TRTorch/TensorRT above.

```

batch_size = 128

# Recompiling with batch_size we use for evaluating performance

trt_model = trtorch.compile(traced_model, {

"inputs": [trtorch.Input((batch_size, 3, 300, 300))],

"enabled_precisions": {torch.float, torch.half}, # Run with FP16

"workspace_size": 1 << 20

})

benchmark(trt_model, input_shape=(batch_size, 3, 300, 300), nruns=100, dtype="fp16")

```

---

## 7. Conclusion

In this notebook, we have walked through the complete process of compiling a TorchScript SSD300 model with TRTorch, and tested the performance impact of the optimization. We find that using the TRTorch compiled model, we gain significant speedup in inference without any noticeable drop in performance!

### Details

For detailed information on model input and output,

training recipies, inference and performance visit:

[github](https://github.com/NVIDIA/DeepLearningExamples/tree/master/PyTorch/Detection/SSD)

and/or [NGC](https://ngc.nvidia.com/catalog/model-scripts/nvidia:ssd_for_pytorch)

### References

- [SSD: Single Shot MultiBox Detector](https://arxiv.org/abs/1512.02325) paper

- [Speed/accuracy trade-offs for modern convolutional object detectors](https://arxiv.org/abs/1611.10012) paper

- [SSD on NGC](https://ngc.nvidia.com/catalog/model-scripts/nvidia:ssd_for_pytorch)

- [SSD on github](https://github.com/NVIDIA/DeepLearningExamples/tree/master/PyTorch/Detection/SSD)

|

github_jupyter

|

# 3. Markov Models Example Problems

We will now look at a model that examines our state of healthiness vs. being sick. Keep in mind that this is very much like something you could do in real life. If you wanted to model a certain situation or environment, we could take some data that we have gathered, build a maximum likelihood model on it, and do things like study the properties that emerge from the model, or make predictions from the model, or generate the next most likely state.

Let's say we have 2 states: **sick** and **healthy**. We know that we spend most of our time in a healthy state, so the probability of transitioning from healthy to sick is very low:

$$p(sick \; | \; healthy) = 0.005$$

Hence, the probability of going from healthy to healthy is:

$$p(healthy \; | \; healthy) = 0.995$$

Now, on the other hand the probability of going from sick to sick is also very high. This is because if you just got sick yesterday then you are very likely to be sick tomorrow.

$$p(sick \; | \; sick) = 0.8$$

However, the probability of transitioning from sick to healthy should be higher than the reverse, because you probably won't stay sick for as long as you would stay healthy:

$$p(healthy \; | \; sick) = 0.02$$

We have now fully defined our state transition matrix, and we can now do some calculations.

## 1.1 Example Calculations

### 1.1.1

What is the probability of being healthy for 10 days in a row, given that we already start out as healthy? Well that is:

$$p(healthy \; 10 \; days \; in \; a \; row \; | \; healthy \; at \; t=0) = 0.995^9 = 95.6 \%$$

How about the probability of being healthy for 100 days in a row?

$$p(healthy \; 100 \; days \; in \; a \; row \; | \; healthy \; at \; t=0) = 0.995^{99} = 60.9 \%$$

## 2. Expected Number of Continuously Sick Days

We can now look at the expected number of days that you would remain in the same state (e.g. how many days would you expect to stay sick given the model?). This is a bit more difficult than the last problem, but completely doable, only involving the mathematics of <a href="https://en.wikipedia.org/wiki/Geometric_series">infinite sums</a>.

First, we can look at the probability of being in state $i$, and going to state $i$ in the next state. That is just $A(i,i)$:

$$p \big(s(t)=i \; | \; s(t-1)=i \big) = A(i, i)$$

Now, what is the probability distribution that we actually want to calculate? How about we calculate the probability that we stay in state $i$ for $n$ transitions, at which point we move to another state:

$$p \big(s(t) \;!=i \; | \; s(t-1)=i \big) = 1 - A(i, i)$$

So, the joint probability that we are trying to model is:

$$p\big(s(1)=i, s(2)=i,...,s(n)=i, s(n+1) \;!= i\big) = A(i,i)^{n-1}\big(1-A(i,i)\big)$$

In english this means that we are multiplying the transition probability of staying in the same state, $A(i,i)$, times the number of times we stayed in the same state, $n$, (note it is $n-1$ because we are given that we start in that state, hence there is no transition associated with it) times $1 - A(i,i)$, the probability of transitioning from that state. This leaves us with an expected value for $n$ of:

$$E(n) = \sum np(n) = \sum_{n=1..\infty} nA(i,i)^{n-1}(1-A(i,i))$$

Note, in the above equation $p(n)$ is the probability that we will see state $i$ $n-1$ times after starting from $i$ and then see a state that is not $i$. Also, we know that the expected value of $n$ should be the sum of all possible values of $n$ times $p(n)$.

### 2.1 Expected $n$

So, we can now expand this function and calculate the two sums separately.

$$E(n) = \sum_{n=1..\infty}nA(i,i)^{n-1}(1 - A(i,i)) = \sum nA(i, i)^{n-1} - \sum nA(i,i)^n$$

**First Sum**<br>

With our first sum, we can say that:

$$S = \sum na(i, i)^{n-1}$$

$$S = 1 + 2a + 3a^2 + 4a^3+ ...$$

And we can then multiply that sum, $S$, by $a$, to get:

$$aS = a + 2a^2 + 3a^3 + 4a^4+...$$

And then we can subtract $aS$ from $S$:

$$S - aS = S'= 1 + a + a^2 + a^3+...$$

This $S'$ is another infinite sum, but it is one that is much easier to solve!

$$S'= 1 + a + a^2 + a^3+...$$

And then $aS'$ is:

$$aS' = a + a^2 + a^3+ + a^4 + ...$$

Which, when we then do $S' - aS'$, we end up with:

$$S' - aS' = 1$$

$$S' = \frac{1}{1 - a}$$

And if we then substitute that value in for $S'$ above:

$$S - aS = S'= 1 + a + a^2 + a^3+... = \frac{1}{1 - a}$$

$$S - aS = \frac{1}{1 - a}$$

$$S = \frac{1}{(1 - a)^2}$$

**Second Sum**<br>

We can now look at our second sum:

$$S = \sum na(i,i)^n$$

$$S = 1a + 2a^2 + 3a^3 +...$$

$$Sa = 1a^2 + 2a^3 +...$$

$$S - aS = S' = a + a^2 + a^3 + ...$$

$$aS' = a^2 + a^3 + a^4 +...$$

$$S' - aS' = a$$

$$S' = \frac{a}{1 - a}$$

And we can plug back in $S'$ to get:

$$S - aS = \frac{a}{1 - a}$$

$$S = \frac{a}{(1 - a)^2}$$

**Combine** <br>

We can now combine these two sums as follows:

$$E(n) = \frac{1}{(1 - a)^2} - \frac{a}{(1-a)^2}$$

$$E(n) = \frac{1}{1-a}$$

**Calculate Number of Sick Days**<br>

So, how do we calculate the correct number of sick days? That is just:

$$\frac{1}{1 - 0.8} = 5$$

## 3. SEO and Bounce Rate Optimization

We are now going to look at SEO and Bounch Rate Optimization. This is a problem that every developer and website owner can relate to. You have a website and obviously you would like to increase traffic, increase conversions, and avoid a high bounce rate (which could lead to google assigning your page a low ranking). What would a good way of modeling this data be? Without even looking at any code we can look at some examples of things that we want to know, and how they relate to markov models.

### 3.1 Arrival

First and foremost, how do people arrive on your page? Is it your home page? Your landing page? Well, this is just the very first page of what is hopefully a sequence of pages. So, the markov analogy here is that this is just the initial state distribution or $\pi$. So, once we have our markov model, the $\pi$ vector will tell us which of our pages a user is most likely to start on.

### 3.2 Sequences of Pages