---

license: cc-by-nc-nd-4.0

task_categories:

- audio-classification

language:

- zh

- en

tags:

- music

- art

pretty_name: Erhu Playing Technique Dataset

size_categories:

- 1K<n<10K

viewer: false

---

# Dataset Card for Erhu Playing Technique

## Original Content

This dataset was created and has been utilized for Erhu playing technique detection by [[1]](https://arxiv.org/pdf/1910.09021), which has not undergone peer review. The original dataset comprises 1,253 Erhu audio clips, all performed by professional Erhu players. These clips were annotated according to three hierarchical levels, resulting in annotations for four, seven, and 11 categories. Part of the audio data is sourced from the CTIS dataset described earlier.

## Integration

We first clean the labels by discarding the four-category and seven-category annotations, since they do not form a strict hierarchy and some of their data are missing. This leaves only the 11-category labels. We then add a Chinese character label and a Chinese pinyin label to improve comprehensibility. The 11 labels are: Detache (分弓), Diangong (垫弓), Harmonic (泛音), Legato\slide\glissando (连弓\滑音\连音), Percussive (击弓), Pizzicato (拨弦), Ricochet (抛弓), Staccato (断弓), Tremolo (震音), Trill (颤音), and Vibrato (揉弦). After integration, the data structure contains six columns: audio (sampled at 44,100 Hz), mel spectrogram, numeric label, Italian label, Chinese character label, and Chinese pinyin label. The total number of audio clips remains 1,253, with a total duration of 25.81 minutes and an average duration of 1.24 seconds.

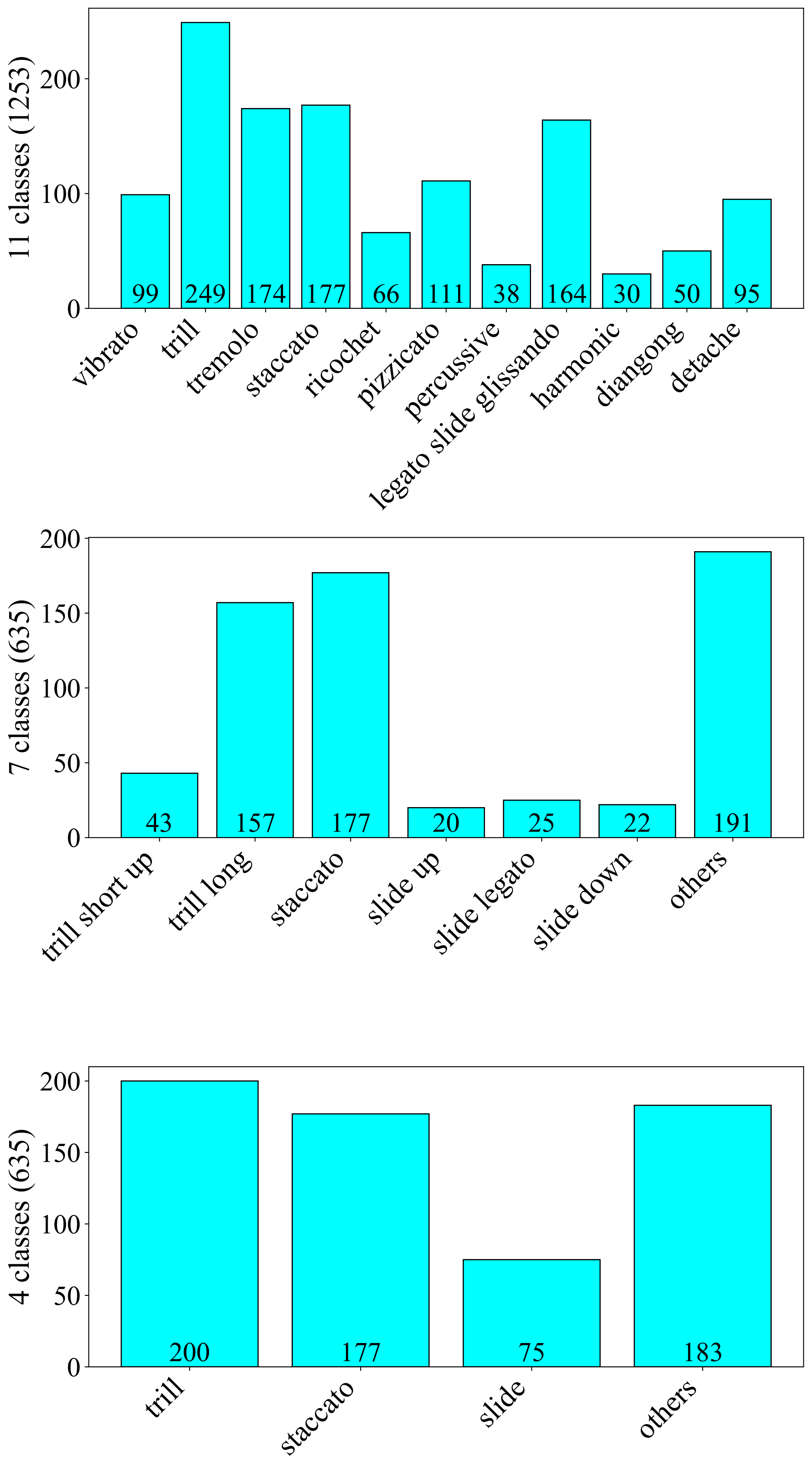

We constructed the <a href="#11-class-subset">default subset</a> of the current integrated version from the 11-class data and refined the names of the 11 categories. The data structure can be seen in the [viewer](https://www.modelscope.cn/datasets/ccmusic-database/erhu_playing_tech/dataPeview). Although the original dataset has been cited in some articles, the experiments in those articles lack reproducibility. To demonstrate the effectiveness of the default subset, we further processed the data and constructed the [eval subset](#eval-subset) to support the evaluation of this integrated version. The evaluation results can be viewed in [[2]](https://huggingface.co/ccmusic-database/erhu_playing_tech). In addition, the 4-category and 7-category labels of the original dataset were not discarded; they were built into the separate [4_class subset](#4-class-subset) and [7_class subset](#7-class-subset). These two subsets have not been evaluated and are therefore not reflected in our paper.

## Statistics

|  |  |  |  |
| :---: | :---: | :---: | :---: |
| **Fig. 1** | **Fig. 2** | **Fig. 3** | **Fig. 4** |

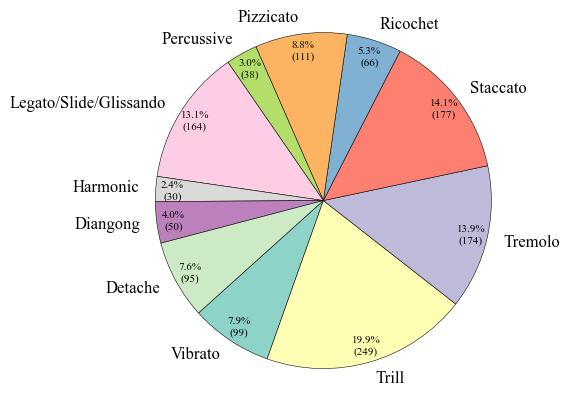

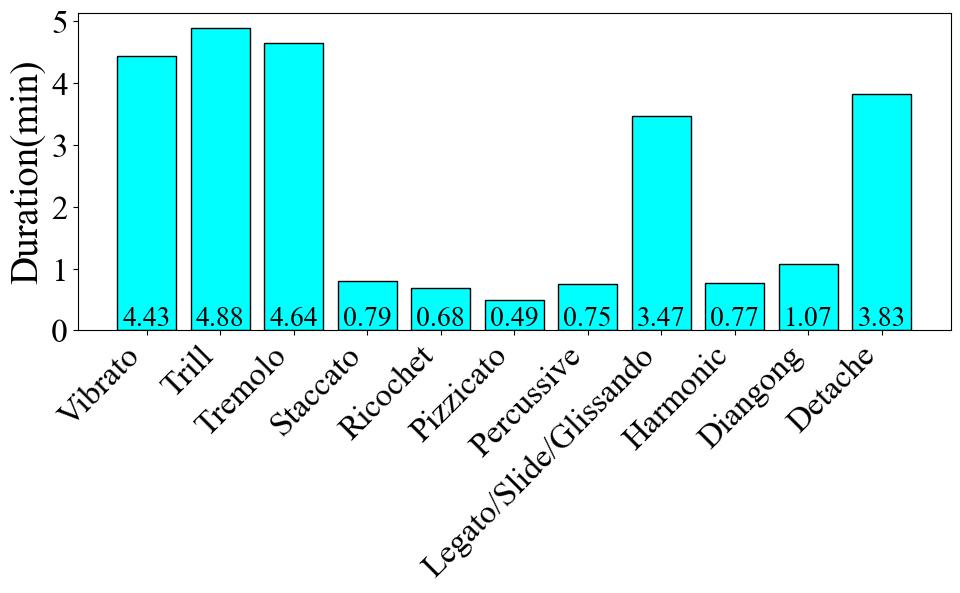

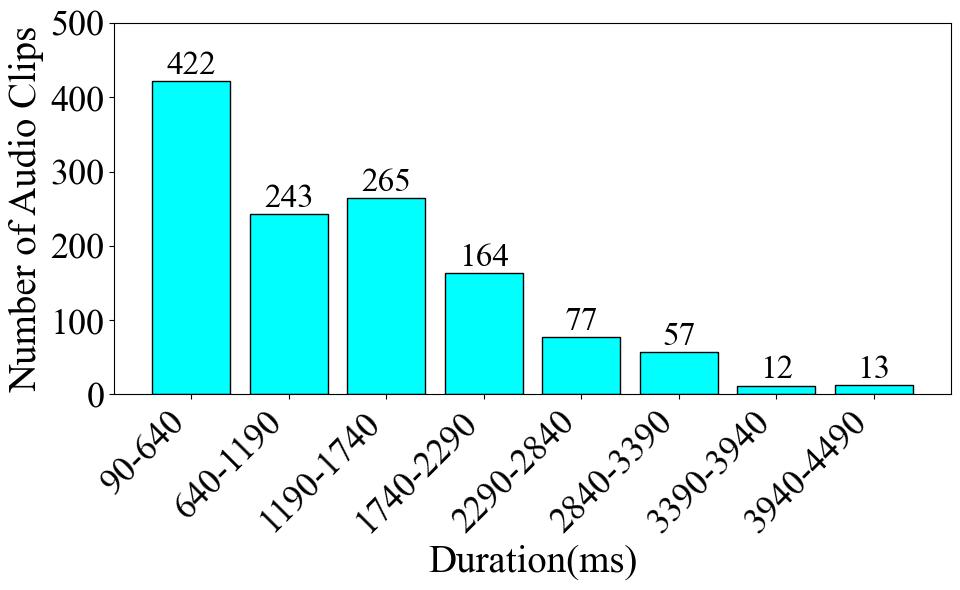

**Fig. 1** shows the number of clips per label. Trill is the largest class with 249 clips (19.9% of the dataset), while Harmonic is the smallest with only 30 clips (2.4%). **Fig. 2** shows the audio duration per category: Trill has the longest cumulative duration at 4.88 minutes, and Percussive has the shortest at 0.75 minutes. These disparities indicate a class imbalance problem within the dataset. **Fig. 3** counts the audio clips in 550-ms duration intervals. The number of clips decreases as the duration lengthens: the most populated range is 90-640 ms, with 422 clips, and the least populated range is 3390-3940 ms, with only 12 clips. **Fig. 4** shows the statistical charts for the 11_class (Default), 7_class, and 4_class subsets.
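
The shares and duration intervals quoted above can be re-derived with a few lines of Python. This is only an illustrative sketch: the clip counts and the 90 ms starting point come from the text, while the helper name `share` and the choice of eight bins (enough to cover the longest clip) are assumptions made for the example.

```python
# Figures quoted in the statistics paragraph.
TOTAL_CLIPS = 1253
TRILL_CLIPS = 249      # largest class
HARMONIC_CLIPS = 30    # smallest class


def share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


trill_share = share(TRILL_CLIPS, TOTAL_CLIPS)
harmonic_share = share(HARMONIC_CLIPS, TOTAL_CLIPS)
# Ratio between the largest and the smallest class, a simple imbalance indicator.
imbalance_ratio = round(TRILL_CLIPS / HARMONIC_CLIPS, 1)

# 550-ms duration intervals starting at 90 ms (eight bins is an assumption).
bins = [(90 + 550 * i, 90 + 550 * (i + 1)) for i in range(8)]

print(trill_share, harmonic_share, imbalance_ratio)
print(bins[0], bins[6])
```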

### Totals

| Subset | Total count | Total duration (s) |
| :-------------------------: | :---------: | :------------------: |
| Default / 11_classes / Eval | `1253` | `1548.3557823129247` |
| 7_classes / 4_classes | `635` | `719.8175736961448` |

### Range (Default subset)

| Statistical items | Values |
| :--------------------------------------------: | :------------------: |
| Mean duration (ms) | `1235.7189004891661` |
| Min duration (ms) | `91.7687074829932` |
| Max duration (ms) | `4468.934240362812` |
| Classes in the longest audio duration interval | `Vibrato, Detache` |
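
As a quick consistency check, the mean duration and the total duration in minutes follow directly from the totals of the default subset. The sketch below uses only the clip count and total seconds from the tables above; the variable names are illustrative.

```python
# Totals for the Default / 11_classes / Eval subsets, copied from the table.
TOTAL_CLIPS = 1253
TOTAL_SECONDS = 1548.3557823129247

# Total duration in minutes and mean clip duration in milliseconds.
total_minutes = TOTAL_SECONDS / 60
mean_ms = 1000 * TOTAL_SECONDS / TOTAL_CLIPS

print(f"{total_minutes:.2f} min, {mean_ms:.1f} ms")
```

Both values agree with the 25.81 minutes and the roughly 1.24-second average reported for the integrated dataset.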

## Dataset Structure

### Default Subset Structure

<style>

.erhu td {

vertical-align: middle !important;

text-align: center;

}

.erhu th {

text-align: center;

}

</style>

<table class="erhu">

<tr>

<th>audio</th>

<th>mel</th>

<th>label</th>

</tr>

<tr>

<td>.wav, 44100Hz</td>

<td>.jpg, 44100Hz</td>

<td>4/7/11-class</td>

</tr>

</table>

### Eval Subset Structure

<table class="erhu">

<tr>

<th>mel</th>

<th>cqt</th>

<th>chroma</th>

<th>label</th>

</tr>

<tr>

<td>.jpg, 44100Hz</td>

<td>.jpg, 44100Hz</td>

<td>.jpg, 44100Hz</td>

<td>11-class</td>

</tr>

</table>

### Data Instances

.zip(.wav, .jpg)

### Data Fields

```txt
+ detache 分弓 (72)
    + forte (8)
    + medium (8)
    + piano (56)
+ diangong 垫弓 (28)
+ harmonic 泛音 (18)
    + natural 自然泛音 (6)
    + artificial 人工泛音 (12)
+ legato&slide&glissando 连弓&滑音&大滑音 (114)
    + glissando_down 大滑音 下行 (4)
    + glissando_up 大滑音 上行 (4)
    + huihuayin_down 下回滑音 (18)
    + huihuayin_long_down 后下回滑音 (12)
    + legato&slide_up 向上连弓 包含滑音 (24)
        + forte (8)
        + medium (8)
        + piano (8)
    + slide_dianzhi 垫指滑音 (4)
    + slide_down 向下滑音 (16)
    + slide_legato 连线滑音 (16)
    + slide_up 向上滑音 (16)
+ percussive 打击类音效 (21)
    + dajigong 大击弓 (11)
    + horse 马嘶 (2)
    + stick 敲击弓 (8)
+ pizzicato 拨弦 (96)
    + forte (30)
    + medium (29)
    + piano (30)
    + left 左手勾弦 (6)
+ ricochet 抛弓 (36)
+ staccato 顿弓 (141)
    + forte (47)
    + medium (46)
    + piano (48)
+ tremolo 颤弓 (144)
    + forte (48)
    + medium (48)
    + piano (48)
+ trill 颤音 (202)
    + long 长颤音 (141)
        + forte (46)
        + medium (47)
        + piano (48)
    + short 短颤音 (61)
        + down 下颤音 (30)
        + up 上颤音 (31)
+ vibrato 揉弦 (56)
    + late (13)
    + press 压揉 (6)
    + roll 滚揉 (28)
    + slide 滑揉 (9)
```

### Data Splits

train, validation, test

## Dataset Description

### Dataset Summary

The label system of the raw dataset is hierarchical and contains three levels. The first level consists of four categories: _trill, staccato, slide_, and _others_; the second level comprises seven categories: _trill\short\up, trill\long, staccato, slide up, slide\legato, slide\down_, and _others_; the third level consists of 11 categories, representing the 11 playing techniques described earlier. Although the labels are organized in three levels, the higher-level labels are not fully compatible with the lower-level ones. Therefore, we cannot merge the three label levels into the same split and must treat them as three separate subsets.

### Supported Tasks and Leaderboards

Erhu Playing Technique Classification

### Languages

Chinese, English

## Usage

### Eval Subset

```python
from datasets import load_dataset

# Load the eval subset (mel, cqt, and chroma spectrograms with 11-class labels)
ds = load_dataset("ccmusic-database/erhu_playing_tech", name="eval")

# Print every item in each split
for item in ds["train"]:
    print(item)

for item in ds["validation"]:
    print(item)

for item in ds["test"]:
    print(item)
```

### 4-class Subset

```python
from datasets import load_dataset

# Load the subset annotated with the 4-category labels
ds = load_dataset("ccmusic-database/erhu_playing_tech", name="4_classes")

# Print every item in each split
for item in ds["train"]:
    print(item)

for item in ds["validation"]:
    print(item)

for item in ds["test"]:
    print(item)
```

### 7-class Subset

```python
from datasets import load_dataset

# Load the subset annotated with the 7-category labels
ds = load_dataset("ccmusic-database/erhu_playing_tech", name="7_classes")

# Print every item in each split
for item in ds["train"]:
    print(item)

for item in ds["validation"]:
    print(item)

for item in ds["test"]:
    print(item)
```

### 11-class Subset

```python
from datasets import load_dataset

# default subset
ds = load_dataset("ccmusic-database/erhu_playing_tech", name="11_classes")

# Print every item in each split
for item in ds["train"]:
    print(item)

for item in ds["validation"]:
    print(item)

for item in ds["test"]:
    print(item)
```
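
Because the classes are imbalanced, it can help to count the labels in a split before training. The following is a minimal sketch, not part of the official tooling: the helper `count_labels` is hypothetical, and it assumes each item is a dict-like record with a `label` field, as suggested by the structure tables above. The toy records stand in for a real split so that the example runs offline.

```python
from collections import Counter
from typing import Iterable, Mapping


def count_labels(split: Iterable[Mapping], key: str = "label") -> Counter:
    """Count how many items in a split carry each label value."""
    return Counter(item[key] for item in split)


# Stand-in records (assumed shape); with the real data, pass ds["train"] instead.
toy_split = [{"label": 9}, {"label": 9}, {"label": 2}]
label_counts = count_labels(toy_split)

print(label_counts.most_common())
```

The same call can be repeated for the `validation` and `test` splits to compare their label distributions.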

## Maintenance

```bash
git clone git@hf.co:datasets/ccmusic-database/erhu_playing_tech
cd erhu_playing_tech
```

## Dataset Creation

### Curation Rationale

Lack of a dataset for Erhu playing techniques

### Source Data

#### Initial Data Collection and Normalization

Zhaorui Liu, Monan Zhou

#### Who are the source language producers?

Students from CCMUSIC

### Annotations

#### Annotation process

This is an audio dataset containing 927 clips recorded by the China Conservatory of Music, each demonstrating one Erhu playing technique.

#### Who are the annotators?

Students from CCMUSIC

## Considerations for Using the Data

### Social Impact of Dataset

Advancing the Digitization Process of Traditional Chinese Instruments

### Discussion of Biases

Only for Erhu

### Other Known Limitations

Not Specific Enough in Categorization

## Additional Information

### Dataset Curators

Zijin Li

### Evaluation

[1] [Wang, Zehao et al. “Musical Instrument Playing Technique Detection Based on FCN: Using Chinese Bowed-Stringed Instrument as an Example.” ArXiv abs/1910.09021 (2019): n. pag.](https://arxiv.org/pdf/1910.09021.pdf)<br>

[2] <https://huggingface.co/ccmusic-database/erhu_playing_tech>

### Citation Information

```bibtex
@dataset{zhaorui_liu_2021_5676893,
  author    = {Monan Zhou and Shenyang Xu and Zhaorui Liu and Zhaowen Wang and Feng Yu and Wei Li and Baoqiang Han},
  title     = {CCMusic: an Open and Diverse Database for Chinese Music Information Retrieval Research},
  month     = {mar},
  year      = {2024},
  publisher = {HuggingFace},
  version   = {1.2},
  url       = {https://huggingface.co/ccmusic-database}
}
```

### Contributions

Provide a dataset for Erhu playing tech