Datasets:

Merge remote-tracking branch 'origin/main' into main

Browse files- README.md +73 -94

- naab-hist.png +3 -0

- naab-pie.png +3 -0

- naab.py +119 -0

README.md

CHANGED

|

@@ -1,8 +1,4 @@

|

|

| 1 |

---

|

| 2 |

-

annotations_creators:

|

| 3 |

-

- SLPL

|

| 4 |

-

language_creators:

|

| 5 |

-

- SLPL

|

| 6 |

language:

|

| 7 |

- fa

|

| 8 |

license:

|

|

@@ -11,8 +7,6 @@ multilinguality:

|

|

| 11 |

- monolingual

|

| 12 |

size_categories:

|

| 13 |

- 200M<n<300M

|

| 14 |

-

source_datasets:

|

| 15 |

-

- commoncrawl

|

| 16 |

task_categories:

|

| 17 |

- language-modeling

|

| 18 |

- masked-language-modeling

|

|

@@ -23,6 +17,7 @@ pretty_name: naab (A ready-to-use plug-and-play corpus in Farsi)

|

|

| 23 |

---

|

| 24 |

|

| 25 |

# naab: A ready-to-use plug-and-play corpus in Farsi

|

|

|

|

| 26 |

|

| 27 |

## Table of Contents

|

| 28 |

- [Dataset Card Creation Guide](#dataset-card-creation-guide)

|

|

@@ -39,15 +34,7 @@ pretty_name: naab (A ready-to-use plug-and-play corpus in Farsi)

|

|

| 39 |

- [Curation Rationale](#curation-rationale)

|

| 40 |

- [Source Data](#source-data)

|

| 41 |

- [Initial Data Collection and Normalization](#initial-data-collection-and-normalization)

|

| 42 |

-

- [Who are the source language producers?](#who-are-the-source-language-producers)

|

| 43 |

-

- [Annotations](#annotations)

|

| 44 |

-

- [Annotation process](#annotation-process)

|

| 45 |

-

- [Who are the annotators?](#who-are-the-annotators)

|

| 46 |

- [Personal and Sensitive Information](#personal-and-sensitive-information)

|

| 47 |

-

- [Considerations for Using the Data](#considerations-for-using-the-data)

|

| 48 |

-

- [Social Impact of Dataset](#social-impact-of-dataset)

|

| 49 |

-

- [Discussion of Biases](#discussion-of-biases)

|

| 50 |

-

- [Other Known Limitations](#other-known-limitations)

|

| 51 |

- [Additional Information](#additional-information)

|

| 52 |

- [Dataset Curators](#dataset-curators)

|

| 53 |

- [Licensing Information](#licensing-information)

|

|

@@ -57,140 +44,124 @@ pretty_name: naab (A ready-to-use plug-and-play corpus in Farsi)

|

|

| 57 |

## Dataset Description

|

| 58 |

|

| 59 |

- **Homepage:** [Sharif Speech and Language Processing Lab](https://huggingface.co/SLPL)

|

| 60 |

-

- **Repository:** [If the dataset is hosted on github or has a github homepage, add URL here]()

|

| 61 |

- **Paper:** [If the dataset was introduced by a paper or there was a paper written describing the dataset, add URL here (landing page for Arxiv paper preferred)]()

|

| 62 |

-

- **

|

| 63 |

-

- **Point of Contact:** [If known, name and email of at least one person the reader can contact for questions about the dataset.]()

|

| 64 |

|

| 65 |

### Dataset Summary

|

|

|

|

| 66 |

|

| 67 |

-

|

|

|

|

|

|

|

| 68 |

|

| 69 |

-

|

|

|

|

|

|

|

| 70 |

|

| 71 |

-

|

|

|

|

|

|

|

| 72 |

|

| 73 |

-

|

|

|

|

| 74 |

|

| 75 |

-

###

|

| 76 |

|

| 77 |

-

|

| 78 |

|

| 79 |

-

|

|

|

|

| 80 |

|

| 81 |

## Dataset Structure

|

| 82 |

|

| 83 |

-

|

| 84 |

-

|

| 85 |

-

Provide an JSON-formatted example and brief description of a typical instance in the dataset. If available, provide a link to further examples.

|

| 86 |

-

|

| 87 |

-

```

|

| 88 |

{

|

| 89 |

-

'

|

| 90 |

-

...

|

| 91 |

}

|

| 92 |

```

|

|

|

|

| 93 |

|

| 94 |

-

Provide any additional information that is not covered in the other sections about the data here. In particular describe any relationships between data points and if these relationships are made explicit.

|

| 95 |

-

|

| 96 |

-

### Data Fields

|

| 97 |

-

|

| 98 |

-

List and describe the fields present in the dataset. Mention their data type, and whether they are used as input or output in any of the tasks the dataset currently supports. If the data has span indices, describe their attributes, such as whether they are at the character level or word level, whether they are contiguous or not, etc. If the datasets contains example IDs, state whether they have an inherent meaning, such as a mapping to other datasets or pointing to relationships between data points.

|

| 99 |

-

|

| 100 |

-

- `example_field`: description of `example_field`

|

| 101 |

-

|

| 102 |

-

Note that the descriptions can be initialized with the **Show Markdown Data Fields** output of the [Datasets Tagging app](https://huggingface.co/spaces/huggingface/datasets-tagging), you will then only need to refine the generated descriptions.

|

| 103 |

|

| 104 |

### Data Splits

|

| 105 |

|

| 106 |

-

|

| 107 |

-

|

| 108 |

-

Describe any criteria for splitting the data, if used. If there are differences between the splits (e.g. if the training annotations are machine-generated and the dev and test ones are created by humans, or if different numbers of annotators contributed to each example), describe them here.

|

| 109 |

-

|

| 110 |

-

Provide the sizes of each split. As appropriate, provide any descriptive statistics for the features, such as average length. For example:

|

| 111 |

|

| 112 |

| | train | test |

|

| 113 |

|-------------------------|------:|-----:|

|

| 114 |

-

| Input Sentences |

|

| 115 |

-

| Average Sentence Length |

|

| 116 |

-

|

| 117 |

-

## Dataset Creation

|

| 118 |

-

|

| 119 |

-

### Curation Rationale

|

| 120 |

|

| 121 |

-

|

| 122 |

|

| 123 |

-

|

| 124 |

-

|

| 125 |

-

|

| 126 |

|

| 127 |

-

|

| 128 |

-

|

| 129 |

-

Describe the data collection process. Describe any criteria for data selection or filtering. List any key words or search terms used. If possible, include runtime information for the collection process.

|

| 130 |

|

| 131 |

-

|

| 132 |

|

| 133 |

-

|

| 134 |

|

| 135 |

-

|

| 136 |

|

| 137 |

-

|

| 138 |

|

| 139 |

-

|

| 140 |

|

| 141 |

-

|

|

|

|

|

|

|

| 142 |

|

| 143 |

-

|

| 144 |

|

| 145 |

-

|

| 146 |

|

| 147 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 148 |

|

| 149 |

-

####

|

|

|

|

| 150 |

|

| 151 |

-

|

|

|

|

| 152 |

|

| 153 |

-

####

|

|

|

|

| 154 |

|

| 155 |

-

|

| 156 |

|

| 157 |

-

|

| 158 |

|

| 159 |

-

|

| 160 |

|

| 161 |

-

Describe the conditions under which the data was annotated (for example, if the annotators were crowdworkers, state what platform was used, or if the data was found, what website the data was found on). If compensation was provided, include that information here.

|

| 162 |

|

| 163 |

### Personal and Sensitive Information

|

| 164 |

|

| 165 |

-

|

| 166 |

-

|

| 167 |

-

State whether the dataset contains other data that might be considered sensitive (e.g., data that reveals racial or ethnic origins, sexual orientations, religious beliefs, political opinions or union memberships, or locations; financial or health data; biometric or genetic data; forms of government identification, such as social security numbers; criminal history).

|

| 168 |

|

| 169 |

-

|

| 170 |

-

|

| 171 |

-

## Considerations for Using the Data

|

| 172 |

-

|

| 173 |

-

### Social Impact of Dataset

|

| 174 |

-

|

| 175 |

-

Farsi is a language used by millions of people, for thousands of years; therefore, there exists numerous resources for this language. However, no-one has ever published a big enough easy to use corpus of textual data. Our dataset eases the path of pre-training and fine-tuning Farsi Language Models (LMs) in self-supervised manner which can lead to better tools for retention and development of Farsi. As a matter of fact, the informal portion of naab contains various dialects including, Turkish, Luri, etc. which are under-represented languages. Although the amount of data is comparably small, but it can be helpful in training a multi-lingual Tokenizer for Farsi variations. As mentioned before, some parts of our dataset are crawled from social media which in result means it contains ethnic, religious, and gender biases.

|

| 176 |

-

|

| 177 |

-

### Discussion of Biases

|

| 178 |

-

|

| 179 |

-

During Exploratory Data Analysis (EDA), we found samples of data including biased opinions about race, religion, and gender. Based on the result we saw in our samples, only a small portion of informal data can be considered biased. Therefore, we anticipate that it won’t affect the trained language model on this data. Furthermore, we decided to keep this small part of data as it may become helpful in training models for classifying harmful and hateful texts.

|

| 180 |

-

|

| 181 |

-

### Other Known Limitations

|

| 182 |

-

|

| 183 |

-

If studies of the datasets have outlined other limitations of the dataset, such as annotation artifacts, please outline and cite them here.

|

| 184 |

|

| 185 |

## Additional Information

|

| 186 |

|

| 187 |

### Dataset Curators

|

| 188 |

|

| 189 |

-

|

|

|

|

| 190 |

|

| 191 |

### Licensing Information

|

| 192 |

|

| 193 |

-

|

| 194 |

|

| 195 |

### Citation Information

|

| 196 |

|

|

@@ -209,3 +180,11 @@ If the dataset has a [DOI](https://www.doi.org/), please provide it here.

|

|

| 209 |

### Contributions

|

| 210 |

|

| 211 |

Thanks to [@sadrasabouri](https://github.com/sadrasabouri) and [@elnazrahmati](https://github.com/elnazrahmati) for adding this dataset.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

| 2 |

language:

|

| 3 |

- fa

|

| 4 |

license:

|

|

|

|

| 7 |

- monolingual

|

| 8 |

size_categories:

|

| 9 |

- 200M<n<300M

|

|

|

|

|

|

|

| 10 |

task_categories:

|

| 11 |

- language-modeling

|

| 12 |

- masked-language-modeling

|

|

|

|

| 17 |

---

|

| 18 |

|

| 19 |

# naab: A ready-to-use plug-and-play corpus in Farsi

|

| 20 |

+

_[If you want to join our community to keep up with news, models and datasets from naab, click on [this](https://docs.google.com/forms/d/e/1FAIpQLSe8kevFl_ODCx-zapAuOIAQYr8IvkVVaVHOuhRL9Ha0RVJ6kg/viewform) link.]_

|

| 21 |

|

| 22 |

## Table of Contents

|

| 23 |

- [Dataset Card Creation Guide](#dataset-card-creation-guide)

|

|

|

|

| 34 |

- [Curation Rationale](#curation-rationale)

|

| 35 |

- [Source Data](#source-data)

|

| 36 |

- [Initial Data Collection and Normalization](#initial-data-collection-and-normalization)

|

|

|

|

|

|

|

|

|

|

|

|

|

| 37 |

- [Personal and Sensitive Information](#personal-and-sensitive-information)

|

|

|

|

|

|

|

|

|

|

|

|

|

| 38 |

- [Additional Information](#additional-information)

|

| 39 |

- [Dataset Curators](#dataset-curators)

|

| 40 |

- [Licensing Information](#licensing-information)

|

|

|

|

| 44 |

## Dataset Description

|

| 45 |

|

| 46 |

- **Homepage:** [Sharif Speech and Language Processing Lab](https://huggingface.co/SLPL)

|

|

|

|

| 47 |

- **Paper:** [If the dataset was introduced by a paper or there was a paper written describing the dataset, add URL here (landing page for Arxiv paper preferred)]()

|

| 48 |

+

- **Point of Contact:** [Sadra Sabouri](mailto:sabouri.sadra@gmail.com)

|

|

|

|

| 49 |

|

| 50 |

### Dataset Summary

|

| 51 |

+

naab is the biggest cleaned and ready-to-use open-source textual corpus in Farsi. It contains about 130GB of data, 250 million paragraphs, and 15 billion words. The project name is derived from the Farsi word ناب which means pure and high-grade. We also provide the raw version of the corpus called naab-raw and an easy-to-use pre-processor that can be employed by those who wanted to make a customized corpus.

|

| 52 |

|

| 53 |

+

You can use this corpus by the commands below:

|

| 54 |

+

```python

|

| 55 |

+

from datasets import load_dataset

|

| 56 |

|

| 57 |

+

dataset = load_dataset("SLPL/naab")

|

| 58 |

+

```

|

| 59 |

+

_Note: be sure that your machine has at least 130 GB free space, also it may take a while to download._

|

| 60 |

|

| 61 |

+

You may need to download parts/splits of this corpus too, if so use the command below (You can find more ways to use it [here](https://huggingface.co/docs/datasets/loading#slice-splits)):

|

| 62 |

+

```python

|

| 63 |

+

from datasets import load_dataset

|

| 64 |

|

| 65 |

+

dataset = load_dataset("SLPL/naab", split="train[:10%]")

|

| 66 |

+

```

|

| 67 |

|

| 68 |

+

### Supported Tasks and Leaderboards

|

| 69 |

|

| 70 |

+

This corpus can be used for training all language models which can be trained by Masked Language Modeling (MLM) or any other self-supervised objective.

|

| 71 |

|

| 72 |

+

- `language-modeling`

|

| 73 |

+

- `masked-language-modeling`

|

| 74 |

|

| 75 |

## Dataset Structure

|

| 76 |

|

| 77 |

+

Each row of the dataset will look like something like the below:

|

| 78 |

+

```json

|

|

|

|

|

|

|

|

|

|

| 79 |

{

|

| 80 |

+

'text': "این یک تست برای نمایش یک پاراگراف در پیکره متنی ناب است.",

|

|

|

|

| 81 |

}

|

| 82 |

```

|

| 83 |

+

+ `text` : the textual paragraph.

|

| 84 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 85 |

|

| 86 |

### Data Splits

|

| 87 |

|

| 88 |

+

This dataset includes two splits (`train` and `test`). We split these two by dividing the randomly permuted version of the corpus into (95%, 5%) division respected to (`train`, `test`). Since `validation` is usually occurring during training with the `train` dataset we avoid proposing another split for it.

|

|

|

|

|

|

|

|

|

|

|

|

|

| 89 |

|

| 90 |

| | train | test |

|

| 91 |

|-------------------------|------:|-----:|

|

| 92 |

+

| Input Sentences | 225892925 | 11083851 |

|

| 93 |

+

| Average Sentence Length | 61 | 25 |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 94 |

|

| 95 |

+

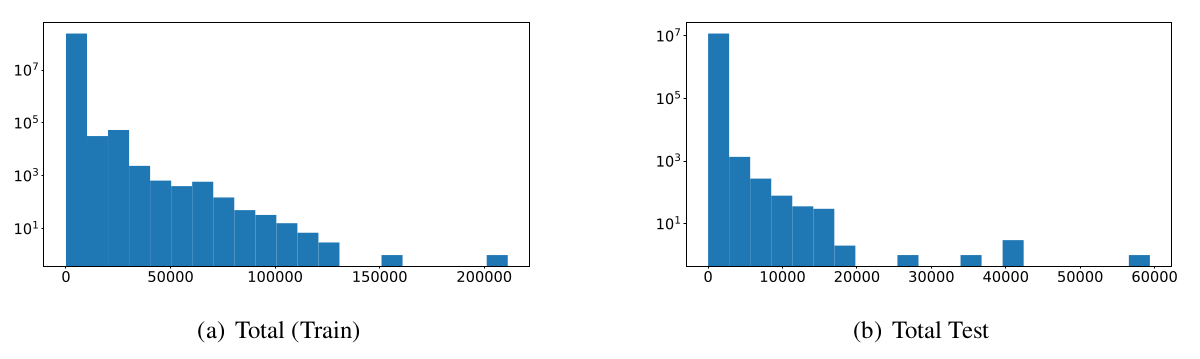

Below you can see the log-based histogram of word/paragraph over the two splits of the dataset.

|

| 96 |

|

| 97 |

+

<div align="center">

|

| 98 |

+

<img src="https://huggingface.co/datasets/SLPL/naab/resolve/main/naab-hist.png">

|

| 99 |

+

</div>

|

| 100 |

|

| 101 |

+

## Dataset Creation

|

|

|

|

|

|

|

| 102 |

|

| 103 |

+

### Curation Rationale

|

| 104 |

|

| 105 |

+

Due to the lack of a huge amount of text data in lower resource languages - like Farsi - researchers working on these languages were always finding it hard to start to fine-tune such models. This phenomenon can lead to a situation in which the golden opportunity for fine-tuning models is just in hands of a few companies or countries which contributes to the weakening the open science.

|

| 106 |

|

| 107 |

+

The last biggest cleaned merged textual corpus in Farsi is a 70GB cleaned text corpus from a compilation of 8 big data sets that have been cleaned and can be downloaded directly. Our solution to the discussed issues is called naab. It provides **126GB** (including more than **224 million** sequences and nearly **15 billion** words) as the training corpus and **2.3GB** (including nearly **11 million** sequences and nearly **300 million** words) as the test corpus.

|

| 108 |

|

| 109 |

+

### Source Data

|

| 110 |

|

| 111 |

+

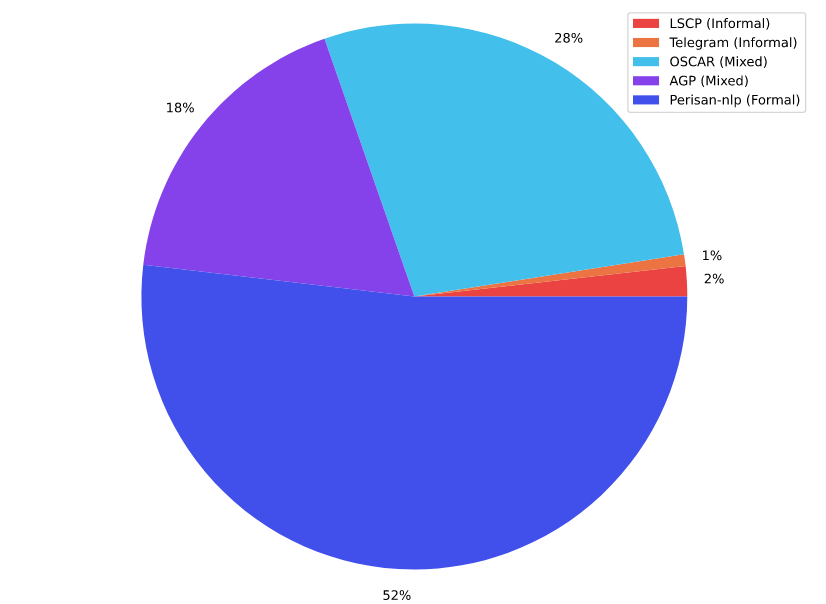

The textual corpora that we used as our source data are illustrated in the figure below. It contains 5 corpora which are linked in the coming sections.

|

| 112 |

|

| 113 |

+

<div align="center">

|

| 114 |

+

<img src="https://huggingface.co/datasets/SLPL/naab/resolve/main/naab-pie.png">

|

| 115 |

+

</div>

|

| 116 |

|

| 117 |

+

#### Persian NLP

|

| 118 |

|

| 119 |

+

[This](https://github.com/persiannlp/persian-raw-text) corpus includes eight corpora that are sorted based on their volume as below:

|

| 120 |

|

| 121 |

+

- [Common Crawl](https://commoncrawl.org/): 65GB ([link](https://storage.googleapis.com/danielk-files/farsi-text/merged_files/commoncrawl_fa_merged.txt))

|

| 122 |

+

- [MirasText](https://github.com/miras-tech/MirasText): 12G

|

| 123 |

+

- [W2C – Web to Corpus](https://lindat.mff.cuni.cz/repository/xmlui/handle/11858/00-097C-0000-0022-6133-9): 1GB ([link](https://storage.googleapis.com/danielk-files/farsi-text/merged_files/w2c_merged.txt))

|

| 124 |

+

- Persian Wikipedia (March 2020 dump): 787MB ([link](https://storage.googleapis.com/danielk-files/farsi-text/merged_files/fawiki_merged.txt))

|

| 125 |

+

- [Leipzig Corpora](https://corpora.uni-leipzig.de/): 424M ([link](https://storage.googleapis.com/danielk-files/farsi-text/merged_files/LeipzigCorpus.txt))

|

| 126 |

+

- [VOA corpus](https://jon.dehdari.org/corpora/): 66MB ([link](https://storage.googleapis.com/danielk-files/farsi-text/merged_files/voa_persian_2003_2008_cleaned.txt))

|

| 127 |

+

- [Persian poems corpus](https://github.com/amnghd/Persian_poems_corpus): 61MB ([link](https://storage.googleapis.com/danielk-files/farsi-text/merged_files/poems_merged.txt))

|

| 128 |

+

- [TEP: Tehran English-Persian parallel corpus](http://opus.nlpl.eu/TEP.php): 33MB ([link](https://storage.googleapis.com/danielk-files/farsi-text/merged_files/TEP_fa.txt))

|

| 129 |

+

|

| 130 |

+

#### AGP

|

| 131 |

+

This corpus was a formerly private corpus for ASR Gooyesh Pardaz which is now published for all users by this project. This corpus contains more than 140 million paragraphs summed up in 23GB (after cleaning). This corpus is a mixture of both formal and informal paragraphs that are crawled from different websites and/or social media.

|

| 132 |

|

| 133 |

+

#### OSCAR-fa

|

| 134 |

+

[OSCAR](https://oscar-corpus.com/) or Open Super-large Crawled ALMAnaCH coRpus is a huge multilingual corpus obtained by language classification and filtering of the Common Crawl corpus using the go classy architecture. Data is distributed by language in both original and deduplicated form. We used the unshuffled-deduplicated-fa from this corpus, after cleaning there were about 36GB remaining.

|

| 135 |

|

| 136 |

+

#### Telegram

|

| 137 |

+

Telegram, a cloud-based instant messaging service, is a widely used application in Iran. Following this hypothesis, we prepared a list of Telegram channels in Farsi covering various topics including sports, daily news, jokes, movies and entertainment, etc. The text data extracted from mentioned channels mainly contains informal data.

|

| 138 |

|

| 139 |

+

#### LSCP

|

| 140 |

+

[The Large Scale Colloquial Persian Language Understanding dataset](https://iasbs.ac.ir/~ansari/lscp/) has 120M sentences from 27M casual Persian sentences with its derivation tree, part-of-speech tags, sentiment polarity, and translations in English, German, Czech, Italian, and Hindi. However, we just used the Farsi part of it and after cleaning we had 2.3GB of it remaining. Since the dataset is casual, it may help our corpus have more informal sentences although its proportion to formal paragraphs is not comparable.

|

| 141 |

|

| 142 |

+

#### Initial Data Collection and Normalization

|

| 143 |

|

| 144 |

+

The data collection process was separated into two parts. In the first part, we searched for existing corpora. After downloading these corpora we started to crawl data from some social networks. Then thanks to [ASR Gooyesh Pardaz](https://asr-gooyesh.com/en/) we were provided with enough textual data to start the naab journey.

|

| 145 |

|

| 146 |

+

We used a preprocessor based on some stream-based Linux kernel commands so that this process can be less time/memory-consuming. The code is provided [here](https://github.com/Sharif-SLPL/t5-fa/tree/main/preprocess).

|

| 147 |

|

|

|

|

| 148 |

|

| 149 |

### Personal and Sensitive Information

|

| 150 |

|

| 151 |

+

Since this corpus is briefly a compilation of some former corpora we take no responsibility for personal information included in this corpus. If you detect any of these violations please let us know, we try our best to remove them from the corpus ASAP.

|

|

|

|

|

|

|

| 152 |

|

| 153 |

+

We tried our best to provide anonymity while keeping the crucial information. We shuffled some parts of the corpus so the information passing through possible conversations wouldn't be harmful.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 154 |

|

| 155 |

## Additional Information

|

| 156 |

|

| 157 |

### Dataset Curators

|

| 158 |

|

| 159 |

+

+ Sadra Sabouri (Sharif University of Technology)

|

| 160 |

+

+ Elnaz Rahmati (Sharif University of Technology)

|

| 161 |

|

| 162 |

### Licensing Information

|

| 163 |

|

| 164 |

+

mit?

|

| 165 |

|

| 166 |

### Citation Information

|

| 167 |

|

|

|

|

| 180 |

### Contributions

|

| 181 |

|

| 182 |

Thanks to [@sadrasabouri](https://github.com/sadrasabouri) and [@elnazrahmati](https://github.com/elnazrahmati) for adding this dataset.

|

| 183 |

+

|

| 184 |

+

### Keywords

|

| 185 |

+

+ Farsi

|

| 186 |

+

+ Persian

|

| 187 |

+

+ raw text

|

| 188 |

+

+ پیکره فارسی

|

| 189 |

+

+ پیکره متنی

|

| 190 |

+

+ آموزش مدل زبانی

|

naab-hist.png

ADDED

|

Git LFS Details

|

naab-pie.png

ADDED

|

Git LFS Details

|

naab.py

ADDED

|

@@ -0,0 +1,119 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright 2020 The HuggingFace Datasets Authors and the current dataset script contributor.

|

| 2 |

+

#

|

| 3 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 4 |

+

# you may not use this file except in compliance with the License.

|

| 5 |

+

# You may obtain a copy of the License at

|

| 6 |

+

#

|

| 7 |

+

# http://www.apache.org/licenses/LICENSE-2.0

|

| 8 |

+

#

|

| 9 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 10 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 11 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 12 |

+

# See the License for the specific language governing permissions and

|

| 13 |

+

# limitations under the License.

|

| 14 |

+

"""naab: A ready-to-use plug-and-play corpus in Farsi"""

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

import csv

|

| 18 |

+

import json

|

| 19 |

+

import os

|

| 20 |

+

|

| 21 |

+

import datasets

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

# TODO: Add BibTeX citation

|

| 25 |

+

# Find for instance the citation on arxiv or on the dataset repo/website

|

| 26 |

+

_CITATION = """\

|

| 27 |

+

"""

|

| 28 |

+

|

| 29 |

+

# You can copy an official description

|

| 30 |

+

_DESCRIPTION = """\

|

| 31 |

+

Huge corpora of textual data are always known to be a crucial need for training deep models such as transformer-based ones. This issue is emerging more in lower resource languages - like Farsi. We propose naab, the biggest cleaned and ready-to-use open-source textual corpus in Farsi. It contains about 130GB of data, 250 million paragraphs, and 15 billion words. The project name is derived from the Farsi word ناب which means pure and high-grade.

|

| 32 |

+

"""

|

| 33 |

+

|

| 34 |

+

_HOMEPAGE = "https://huggingface.co/datasets/SLPL/naab"

|

| 35 |

+

|

| 36 |

+

# TODO: ?

|

| 37 |

+

_LICENSE = "mit"

|

| 38 |

+

|

| 39 |

+

N_FILES = {

|

| 40 |

+

"train": 126,

|

| 41 |

+

"test": 3

|

| 42 |

+

}

|

| 43 |

+

_BASE_URL = "https://huggingface.co/datasets/SLPL/naab/resolve/main/data/"

|

| 44 |

+

_URLS = {

|

| 45 |

+

"train": [_BASE_URL + "train-{:05d}-of-{:05d}.txt".format(x, N_FILES["train"]) for x in range(N_FILES["train"])],

|

| 46 |

+

"test": [_BASE_URL + "test-{:05d}-of-{:05d}.txt".format(x, N_FILES["test"]) for x in range(N_FILES["test"])],

|

| 47 |

+

}

|

| 48 |

+

VERSION = datasets.Version("1.0.0")

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

class NaabConfig(datasets.BuilderConfig):

|

| 52 |

+

"""BuilderConfig for naab."""

|

| 53 |

+

|

| 54 |

+

def __init__(self, *args, **kwargs):

|

| 55 |

+

"""BuilderConfig for naab.

|

| 56 |

+

Args:

|

| 57 |

+

**kwargs: keyword arguments forwarded to super.

|

| 58 |

+

"""

|

| 59 |

+

super(NaabConfig, self).__init__(*args, **kwargs)

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

class Naab(datasets.GeneratorBasedBuilder):

|

| 63 |

+

"""naab: A ready-to-use plug-and-play corpus in Farsi."""

|

| 64 |

+

|

| 65 |

+

BUILDER_CONFIGS = [

|

| 66 |

+

NaabConfig(

|

| 67 |

+

name="all",

|

| 68 |

+

version=VERSION,

|

| 69 |

+

description=_DESCRIPTION)

|

| 70 |

+

]

|

| 71 |

+

BUILDER_CONFIG_CLASS = NaabConfig

|

| 72 |

+

|

| 73 |

+

DEFAULT_CONFIG_NAME = "all"

|

| 74 |

+

|

| 75 |

+

def _info(self):

|

| 76 |

+

features = datasets.Features({

|

| 77 |

+

"text": datasets.Value("string"),

|

| 78 |

+

})

|

| 79 |

+

return datasets.DatasetInfo(

|

| 80 |

+

description=_DESCRIPTION,

|

| 81 |

+

features=features,

|

| 82 |

+

supervised_keys=None,

|

| 83 |

+

homepage=_HOMEPAGE,

|

| 84 |

+

license=_LICENSE,

|

| 85 |

+

citation=_CITATION,

|

| 86 |

+

)

|

| 87 |

+

|

| 88 |

+

def _split_generators(self, dl_manager):

|

| 89 |

+

data_urls = {}

|

| 90 |

+

for split in ["train", "test"]:

|

| 91 |

+

data_urls[split] = _URLS[split]

|

| 92 |

+

|

| 93 |

+

train_downloaded_files = dl_manager.download(data_urls["train"])

|

| 94 |

+

test_downloaded_files = dl_manager.download(data_urls["test"])

|

| 95 |

+

return [

|

| 96 |

+

datasets.SplitGenerator(

|

| 97 |

+

name=datasets.Split.TRAIN,

|

| 98 |

+

gen_kwargs={

|

| 99 |

+

"filepath": train_downloaded_files,

|

| 100 |

+

"split": "train"

|

| 101 |

+

}

|

| 102 |

+

),

|

| 103 |

+

datasets.SplitGenerator(

|

| 104 |

+

name=datasets.Split.TEST,

|

| 105 |

+

gen_kwargs={

|

| 106 |

+

"filepath": test_downloaded_files,

|

| 107 |

+

"split": "test"

|

| 108 |

+

}

|

| 109 |

+

),

|

| 110 |

+

]

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

def _generate_examples(self, filepath, split):

|

| 114 |

+

with open(filepath, encoding="utf-8") as f:

|

| 115 |

+

for key, row in enumerate(f):

|

| 116 |

+

if row.strip():

|

| 117 |

+

yield idx, {"text": row}

|

| 118 |

+

else:

|

| 119 |

+

yield idx, {"text": ""}

|