status

stringclasses 1

value | repo_name

stringclasses 31

values | repo_url

stringclasses 31

values | issue_id

int64 1

104k

| title

stringlengths 4

233

| body

stringlengths 0

186k

⌀ | issue_url

stringlengths 38

56

| pull_url

stringlengths 37

54

| before_fix_sha

stringlengths 40

40

| after_fix_sha

stringlengths 40

40

| report_datetime

unknown | language

stringclasses 5

values | commit_datetime

unknown | updated_file

stringlengths 7

188

| chunk_content

stringlengths 1

1.03M

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | langchain/vectorstores/faiss.py | """Wrapper around FAISS vector database."""

from __future__ import annotations

import uuid

from typing import Any, Callable, Dict, Iterable, List, Optional, Tuple

import numpy as np

from langchain.docstore.base import AddableMixin, Docstore

from langchain.docstore.document import Document

from langchain.docstore.in_memory import InMemoryDocstore

from langchain.embeddings.base import Embeddings

from langchain.vectorstores.base import VectorStore

from langchain.vectorstores.utils import maximal_marginal_relevance

class FAISS(VectorStore): |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | langchain/vectorstores/faiss.py | """Wrapper around FAISS vector database.

To use, you should have the ``faiss`` python package installed.

Example:

.. code-block:: python

from langchain import FAISS

faiss = FAISS(embedding_function, index, docstore)

"""

def __init__(

self,

embedding_function: Callable,

index: Any,

docstore: Docstore,

index_to_docstore_id: Dict[int, str],

):

"""Initialize with necessary components."""

self.embedding_function = embedding_function

self.index = index

self.docstore = docstore

self.index_to_docstore_id = index_to_docstore_id

def add_texts( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | langchain/vectorstores/faiss.py | self, texts: Iterable[str], metadatas: Optional[List[dict]] = None

) -> List[str]:

"""Run more texts through the embeddings and add to the vectorstore.

Args:

texts: Iterable of strings to add to the vectorstore.

metadatas: Optional list of metadatas associated with the texts.

Returns:

List of ids from adding the texts into the vectorstore.

"""

if not isinstance(self.docstore, AddableMixin):

raise ValueError(

"If trying to add texts, the underlying docstore should support "

f"adding items, which {self.docstore} does not"

)

embeddings = [self.embedding_function(text) for text in texts]

documents = []

for i, text in enumerate(texts):

metadata = metadatas[i] if metadatas else {}

documents.append(Document(page_content=text, metadata=metadata))

starting_len = len(self.index_to_docstore_id)

self.index.add(np.array(embeddings, dtype=np.float32))

full_info = [

(starting_len + i, str(uuid.uuid4()), doc)

for i, doc in enumerate(documents) |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | langchain/vectorstores/faiss.py | ]

self.docstore.add({_id: doc for _, _id, doc in full_info})

index_to_id = {index: _id for index, _id, _ in full_info}

self.index_to_docstore_id.update(index_to_id)

return [_id for _, _id, _ in full_info]

def similarity_search_with_score(

self, query: str, k: int = 4

) -> List[Tuple[Document, float]]:

"""Return docs most similar to query.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

Returns:

List of Documents most similar to the query and score for each

"""

embedding = self.embedding_function(query)

scores, indices = self.index.search(np.array([embedding], dtype=np.float32), k)

docs = []

for j, i in enumerate(indices[0]):

if i == -1:

continue

_id = self.index_to_docstore_id[i]

doc = self.docstore.search(_id)

if not isinstance(doc, Document):

raise ValueError(f"Could not find document for id {_id}, got {doc}")

docs.append((doc, scores[0][j]))

return docs

def similarity_search( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | langchain/vectorstores/faiss.py | self, query: str, k: int = 4, **kwargs: Any

) -> List[Document]:

"""Return docs most similar to query.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

Returns:

List of Documents most similar to the query.

"""

docs_and_scores = self.similarity_search_with_score(query, k)

return [doc for doc, _ in docs_and_scores]

def max_marginal_relevance_search( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | langchain/vectorstores/faiss.py | self, query: str, k: int = 4, fetch_k: int = 20

) -> List[Document]:

"""Return docs selected using the maximal marginal relevance.

Maximal marginal relevance optimizes for similarity to query AND diversity

among selected documents.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

fetch_k: Number of Documents to fetch to pass to MMR algorithm.

Returns:

List of Documents selected by maximal marginal relevance.

"""

embedding = self.embedding_function(query)

_, indices = self.index.search(np.array([embedding], dtype=np.float32), fetch_k)

embeddings = [self.index.reconstruct(int(i)) for i in indices[0] if i != -1]

mmr_selected = maximal_marginal_relevance(embedding, embeddings, k=k)

selected_indices = [indices[0][i] for i in mmr_selected]

docs = []

for i in selected_indices:

_id = self.index_to_docstore_id[i]

doc = self.docstore.search(_id)

if not isinstance(doc, Document):

raise ValueError(f"Could not find document for id {_id}, got {doc}")

docs.append(doc)

return docs

@classmethod

def from_texts( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | langchain/vectorstores/faiss.py | cls,

texts: List[str],

embedding: Embeddings,

metadatas: Optional[List[dict]] = None,

**kwargs: Any,

) -> FAISS:

"""Construct FAISS wrapper from raw documents.

This is a user friendly interface that:

1. Embeds documents.

2. Creates an in memory docstore |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | langchain/vectorstores/faiss.py | 3. Initializes the FAISS database

This is intended to be a quick way to get started.

Example:

.. code-block:: python

from langchain import FAISS

from langchain.embeddings import OpenAIEmbeddings

embeddings = OpenAIEmbeddings()

faiss = FAISS.from_texts(texts, embeddings)

"""

try:

import faiss

except ImportError:

raise ValueError(

"Could not import faiss python package. "

"Please it install it with `pip install faiss` "

"or `pip install faiss-cpu` (depending on Python version)."

)

embeddings = embedding.embed_documents(texts)

index = faiss.IndexFlatL2(len(embeddings[0]))

index.add(np.array(embeddings, dtype=np.float32))

documents = []

for i, text in enumerate(texts):

metadata = metadatas[i] if metadatas else {}

documents.append(Document(page_content=text, metadata=metadata))

index_to_id = {i: str(uuid.uuid4()) for i in range(len(documents))}

docstore = InMemoryDocstore(

{index_to_id[i]: doc for i, doc in enumerate(documents)}

)

return cls(embedding.embed_query, index, docstore, index_to_id) |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | tests/integration_tests/vectorstores/test_faiss.py | """Test FAISS functionality."""

from typing import List

import pytest

from langchain.docstore.document import Document

from langchain.docstore.in_memory import InMemoryDocstore

from langchain.docstore.wikipedia import Wikipedia

from langchain.embeddings.base import Embeddings

from langchain.vectorstores.faiss import FAISS

class FakeEmbeddings(Embeddings):

"""Fake embeddings functionality for testing."""

def embed_documents(self, texts: List[str]) -> List[List[float]]:

"""Return simple embeddings."""

return [[i] * 10 for i in range(len(texts))]

def embed_query(self, text: str) -> List[float]:

"""Return simple embeddings."""

return [0] * 10

def test_faiss() -> None: |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | tests/integration_tests/vectorstores/test_faiss.py | """Test end to end construction and search."""

texts = ["foo", "bar", "baz"]

docsearch = FAISS.from_texts(texts, FakeEmbeddings())

index_to_id = docsearch.index_to_docstore_id

expected_docstore = InMemoryDocstore(

{

index_to_id[0]: Document(page_content="foo"),

index_to_id[1]: Document(page_content="bar"),

index_to_id[2]: Document(page_content="baz"),

}

)

assert docsearch.docstore.__dict__ == expected_docstore.__dict__

output = docsearch.similarity_search("foo", k=1)

assert output == [Document(page_content="foo")]

def test_faiss_with_metadatas() -> None:

"""Test end to end construction and search."""

texts = ["foo", "bar", "baz"]

metadatas = [{"page": i} for i in range(len(texts))]

docsearch = FAISS.from_texts(texts, FakeEmbeddings(), metadatas=metadatas)

expected_docstore = InMemoryDocstore(

{

"0": Document(page_content="foo", metadata={"page": 0}),

"1": Document(page_content="bar", metadata={"page": 1}),

"2": Document(page_content="baz", metadata={"page": 2}),

}

)

assert docsearch.docstore.__dict__ == expected_docstore.__dict__

output = docsearch.similarity_search("foo", k=1)

assert output == [Document(page_content="foo", metadata={"page": 0})]

def test_faiss_search_not_found() -> None: |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 674 | test_faiss_with_metadatas: key mismatch in assert | https://github.com/hwchase17/langchain/blob/236ae93610a8538d3d0044fc29379c481acc6789/tests/integration_tests/vectorstores/test_faiss.py#L54

This test will fail because `FAISS.from_texts` will assign uuid4s as keys in its docstore, while `expected_docstore` has string numbers as keys. | https://github.com/langchain-ai/langchain/issues/674 | https://github.com/langchain-ai/langchain/pull/676 | e45f7e40e80d9b47fb51853f0c672e747735b951 | e04b063ff40d7f70eaa91f135729071de60b219d | "2023-01-21T16:02:54" | python | "2023-01-22T00:08:14" | tests/integration_tests/vectorstores/test_faiss.py | """Test what happens when document is not found."""

texts = ["foo", "bar", "baz"]

docsearch = FAISS.from_texts(texts, FakeEmbeddings())

docsearch.docstore = InMemoryDocstore({})

with pytest.raises(ValueError):

docsearch.similarity_search("foo")

def test_faiss_add_texts() -> None:

"""Test end to end adding of texts."""

texts = ["foo", "bar", "baz"]

docsearch = FAISS.from_texts(texts, FakeEmbeddings())

docsearch.add_texts(["foo"])

output = docsearch.similarity_search("foo", k=2)

assert output == [Document(page_content="foo"), Document(page_content="foo")]

def test_faiss_add_texts_not_supported() -> None:

"""Test adding of texts to a docstore that doesn't support it."""

docsearch = FAISS(FakeEmbeddings().embed_query, None, Wikipedia(), {})

with pytest.raises(ValueError):

docsearch.add_texts(["foo"]) |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 906 | Error in Pinecone batch selection logic | Current implementation of pinecone vec db finds the batches using:

```

# set end position of batch

i_end = min(i + batch_size, len(texts))

```

[link](https://github.com/hwchase17/langchain/blob/master/langchain/vectorstores/pinecone.py#L199)

But the following lines then go on to use a mix of `[i : i + batch_size]` and `[i:i_end]` to create batches:

```python

# get batch of texts and ids

lines_batch = texts[i : i + batch_size]

# create ids if not provided

if ids:

ids_batch = ids[i : i + batch_size]

else:

ids_batch = [str(uuid.uuid4()) for n in range(i, i_end)]

```

Fortunately, there is a `zip` function a few lines down that cuts the potentially longer chunks, preventing an error from being raised — yet I don't think think `[i: i+batch_size]` should be maintained as it's confusing and not explicit

Raised a PR here #907 | https://github.com/langchain-ai/langchain/issues/906 | https://github.com/langchain-ai/langchain/pull/907 | 82c080c6e617d4959fb4ee808deeba075f361702 | 3aa53b44dd5f013e35c316d110d340a630b0abd1 | "2023-02-06T07:52:59" | python | "2023-02-06T20:45:56" | langchain/vectorstores/pinecone.py | """Wrapper around Pinecone vector database."""

from __future__ import annotations

import uuid

from typing import Any, Callable, Iterable, List, Optional, Tuple

from langchain.docstore.document import Document

from langchain.embeddings.base import Embeddings

from langchain.vectorstores.base import VectorStore

class Pinecone(VectorStore):

"""Wrapper around Pinecone vector database.

To use, you should have the ``pinecone-client`` python package installed.

Example:

.. code-block:: python

from langchain.vectorstores import Pinecone

from langchain.embeddings.openai import OpenAIEmbeddings

import pinecone

pinecone.init(api_key="***", environment="us-west1-gcp")

index = pinecone.Index("langchain-demo")

embeddings = OpenAIEmbeddings()

vectorstore = Pinecone(index, embeddings.embed_query, "text")

"""

def __init__( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 906 | Error in Pinecone batch selection logic | Current implementation of pinecone vec db finds the batches using:

```

# set end position of batch

i_end = min(i + batch_size, len(texts))

```

[link](https://github.com/hwchase17/langchain/blob/master/langchain/vectorstores/pinecone.py#L199)

But the following lines then go on to use a mix of `[i : i + batch_size]` and `[i:i_end]` to create batches:

```python

# get batch of texts and ids

lines_batch = texts[i : i + batch_size]

# create ids if not provided

if ids:

ids_batch = ids[i : i + batch_size]

else:

ids_batch = [str(uuid.uuid4()) for n in range(i, i_end)]

```

Fortunately, there is a `zip` function a few lines down that cuts the potentially longer chunks, preventing an error from being raised — yet I don't think think `[i: i+batch_size]` should be maintained as it's confusing and not explicit

Raised a PR here #907 | https://github.com/langchain-ai/langchain/issues/906 | https://github.com/langchain-ai/langchain/pull/907 | 82c080c6e617d4959fb4ee808deeba075f361702 | 3aa53b44dd5f013e35c316d110d340a630b0abd1 | "2023-02-06T07:52:59" | python | "2023-02-06T20:45:56" | langchain/vectorstores/pinecone.py | self,

index: Any,

embedding_function: Callable,

text_key: str,

):

"""Initialize with Pinecone client."""

try:

import pinecone

except ImportError:

raise ValueError(

"Could not import pinecone python package. "

"Please it install it with `pip install pinecone-client`."

)

if not isinstance(index, pinecone.index.Index):

raise ValueError(

f"client should be an instance of pinecone.index.Index, "

f"got {type(index)}"

)

self._index = index

self._embedding_function = embedding_function

self._text_key = text_key

def add_texts( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 906 | Error in Pinecone batch selection logic | Current implementation of pinecone vec db finds the batches using:

```

# set end position of batch

i_end = min(i + batch_size, len(texts))

```

[link](https://github.com/hwchase17/langchain/blob/master/langchain/vectorstores/pinecone.py#L199)

But the following lines then go on to use a mix of `[i : i + batch_size]` and `[i:i_end]` to create batches:

```python

# get batch of texts and ids

lines_batch = texts[i : i + batch_size]

# create ids if not provided

if ids:

ids_batch = ids[i : i + batch_size]

else:

ids_batch = [str(uuid.uuid4()) for n in range(i, i_end)]

```

Fortunately, there is a `zip` function a few lines down that cuts the potentially longer chunks, preventing an error from being raised — yet I don't think think `[i: i+batch_size]` should be maintained as it's confusing and not explicit

Raised a PR here #907 | https://github.com/langchain-ai/langchain/issues/906 | https://github.com/langchain-ai/langchain/pull/907 | 82c080c6e617d4959fb4ee808deeba075f361702 | 3aa53b44dd5f013e35c316d110d340a630b0abd1 | "2023-02-06T07:52:59" | python | "2023-02-06T20:45:56" | langchain/vectorstores/pinecone.py | self,

texts: Iterable[str],

metadatas: Optional[List[dict]] = None,

ids: Optional[List[str]] = None,

namespace: Optional[str] = None,

) -> List[str]:

"""Run more texts through the embeddings and add to the vectorstore.

Args:

texts: Iterable of strings to add to the vectorstore.

metadatas: Optional list of metadatas associated with the texts.

ids: Optional list of ids to associate with the texts.

namespace: Optional pinecone namespace to add the texts to.

Returns:

List of ids from adding the texts into the vectorstore.

"""

docs = []

ids = ids or [str(uuid.uuid4()) for _ in texts]

for i, text in enumerate(texts):

embedding = self._embedding_function(text)

metadata = metadatas[i] if metadatas else {}

metadata[self._text_key] = text

docs.append((ids[i], embedding, metadata))

self._index.upsert(vectors=docs, namespace=namespace)

return ids

def similarity_search_with_score( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 906 | Error in Pinecone batch selection logic | Current implementation of pinecone vec db finds the batches using:

```

# set end position of batch

i_end = min(i + batch_size, len(texts))

```

[link](https://github.com/hwchase17/langchain/blob/master/langchain/vectorstores/pinecone.py#L199)

But the following lines then go on to use a mix of `[i : i + batch_size]` and `[i:i_end]` to create batches:

```python

# get batch of texts and ids

lines_batch = texts[i : i + batch_size]

# create ids if not provided

if ids:

ids_batch = ids[i : i + batch_size]

else:

ids_batch = [str(uuid.uuid4()) for n in range(i, i_end)]

```

Fortunately, there is a `zip` function a few lines down that cuts the potentially longer chunks, preventing an error from being raised — yet I don't think think `[i: i+batch_size]` should be maintained as it's confusing and not explicit

Raised a PR here #907 | https://github.com/langchain-ai/langchain/issues/906 | https://github.com/langchain-ai/langchain/pull/907 | 82c080c6e617d4959fb4ee808deeba075f361702 | 3aa53b44dd5f013e35c316d110d340a630b0abd1 | "2023-02-06T07:52:59" | python | "2023-02-06T20:45:56" | langchain/vectorstores/pinecone.py | self,

query: str,

k: int = 5,

filter: Optional[dict] = None,

namespace: Optional[str] = None,

) -> List[Tuple[Document, float]]:

"""Return pinecone documents most similar to query, along with scores.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

filter: Dictionary of argument(s) to filter on metadata

namespace: Namespace to search in. Default will search in '' namespace.

Returns:

List of Documents most similar to the query and score for each

"""

query_obj = self._embedding_function(query)

docs = []

results = self._index.query(

[query_obj],

top_k=k,

include_metadata=True,

namespace=namespace,

filter=filter,

)

for res in results["matches"]:

metadata = res["metadata"]

text = metadata.pop(self._text_key)

docs.append((Document(page_content=text, metadata=metadata), res["score"]))

return docs

def similarity_search( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 906 | Error in Pinecone batch selection logic | Current implementation of pinecone vec db finds the batches using:

```

# set end position of batch

i_end = min(i + batch_size, len(texts))

```

[link](https://github.com/hwchase17/langchain/blob/master/langchain/vectorstores/pinecone.py#L199)

But the following lines then go on to use a mix of `[i : i + batch_size]` and `[i:i_end]` to create batches:

```python

# get batch of texts and ids

lines_batch = texts[i : i + batch_size]

# create ids if not provided

if ids:

ids_batch = ids[i : i + batch_size]

else:

ids_batch = [str(uuid.uuid4()) for n in range(i, i_end)]

```

Fortunately, there is a `zip` function a few lines down that cuts the potentially longer chunks, preventing an error from being raised — yet I don't think think `[i: i+batch_size]` should be maintained as it's confusing and not explicit

Raised a PR here #907 | https://github.com/langchain-ai/langchain/issues/906 | https://github.com/langchain-ai/langchain/pull/907 | 82c080c6e617d4959fb4ee808deeba075f361702 | 3aa53b44dd5f013e35c316d110d340a630b0abd1 | "2023-02-06T07:52:59" | python | "2023-02-06T20:45:56" | langchain/vectorstores/pinecone.py | self,

query: str,

k: int = 5,

filter: Optional[dict] = None,

namespace: Optional[str] = None,

**kwargs: Any,

) -> List[Document]:

"""Return pinecone documents most similar to query.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

filter: Dictionary of argument(s) to filter on metadata

namespace: Namespace to search in. Default will search in '' namespace.

Returns:

List of Documents most similar to the query and score for each

"""

query_obj = self._embedding_function(query)

docs = []

results = self._index.query(

[query_obj],

top_k=k,

include_metadata=True,

namespace=namespace,

filter=filter,

)

for res in results["matches"]:

metadata = res["metadata"]

text = metadata.pop(self._text_key)

docs.append(Document(page_content=text, metadata=metadata)) |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 906 | Error in Pinecone batch selection logic | Current implementation of pinecone vec db finds the batches using:

```

# set end position of batch

i_end = min(i + batch_size, len(texts))

```

[link](https://github.com/hwchase17/langchain/blob/master/langchain/vectorstores/pinecone.py#L199)

But the following lines then go on to use a mix of `[i : i + batch_size]` and `[i:i_end]` to create batches:

```python

# get batch of texts and ids

lines_batch = texts[i : i + batch_size]

# create ids if not provided

if ids:

ids_batch = ids[i : i + batch_size]

else:

ids_batch = [str(uuid.uuid4()) for n in range(i, i_end)]

```

Fortunately, there is a `zip` function a few lines down that cuts the potentially longer chunks, preventing an error from being raised — yet I don't think think `[i: i+batch_size]` should be maintained as it's confusing and not explicit

Raised a PR here #907 | https://github.com/langchain-ai/langchain/issues/906 | https://github.com/langchain-ai/langchain/pull/907 | 82c080c6e617d4959fb4ee808deeba075f361702 | 3aa53b44dd5f013e35c316d110d340a630b0abd1 | "2023-02-06T07:52:59" | python | "2023-02-06T20:45:56" | langchain/vectorstores/pinecone.py | return docs

@classmethod

def from_texts(

cls,

texts: List[str],

embedding: Embeddings,

metadatas: Optional[List[dict]] = None,

ids: Optional[List[str]] = None,

batch_size: int = 32,

text_key: str = "text",

index_name: Optional[str] = None,

namespace: Optional[str] = None,

**kwargs: Any,

) -> Pinecone:

"""Construct Pinecone wrapper from raw documents.

This is a user friendly interface that:

1. Embeds documents.

2. Adds the documents to a provided Pinecone index

This is intended to be a quick way to get started.

Example:

.. code-block:: python

from langchain import Pinecone

from langchain.embeddings import OpenAIEmbeddings

embeddings = OpenAIEmbeddings()

pinecone = Pinecone.from_texts(

texts,

embeddings,

index_name="langchain-demo"

)

""" |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 906 | Error in Pinecone batch selection logic | Current implementation of pinecone vec db finds the batches using:

```

# set end position of batch

i_end = min(i + batch_size, len(texts))

```

[link](https://github.com/hwchase17/langchain/blob/master/langchain/vectorstores/pinecone.py#L199)

But the following lines then go on to use a mix of `[i : i + batch_size]` and `[i:i_end]` to create batches:

```python

# get batch of texts and ids

lines_batch = texts[i : i + batch_size]

# create ids if not provided

if ids:

ids_batch = ids[i : i + batch_size]

else:

ids_batch = [str(uuid.uuid4()) for n in range(i, i_end)]

```

Fortunately, there is a `zip` function a few lines down that cuts the potentially longer chunks, preventing an error from being raised — yet I don't think think `[i: i+batch_size]` should be maintained as it's confusing and not explicit

Raised a PR here #907 | https://github.com/langchain-ai/langchain/issues/906 | https://github.com/langchain-ai/langchain/pull/907 | 82c080c6e617d4959fb4ee808deeba075f361702 | 3aa53b44dd5f013e35c316d110d340a630b0abd1 | "2023-02-06T07:52:59" | python | "2023-02-06T20:45:56" | langchain/vectorstores/pinecone.py | try:

import pinecone

except ImportError:

raise ValueError(

"Could not import pinecone python package. "

"Please install it with `pip install pinecone-client`."

)

_index_name = index_name or str(uuid.uuid4())

indexes = pinecone.list_indexes()

if _index_name in indexes:

index = pinecone.Index(_index_name)

else:

index = None

for i in range(0, len(texts), batch_size):

i_end = min(i + batch_size, len(texts))

lines_batch = texts[i : i + batch_size]

if ids:

ids_batch = ids[i : i + batch_size]

else:

ids_batch = [str(uuid.uuid4()) for n in range(i, i_end)]

embeds = embedding.embed_documents(lines_batch)

if metadatas:

metadata = metadatas[i : i + batch_size]

else:

metadata = [{} for _ in range(i, i_end)] |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 906 | Error in Pinecone batch selection logic | Current implementation of pinecone vec db finds the batches using:

```

# set end position of batch

i_end = min(i + batch_size, len(texts))

```

[link](https://github.com/hwchase17/langchain/blob/master/langchain/vectorstores/pinecone.py#L199)

But the following lines then go on to use a mix of `[i : i + batch_size]` and `[i:i_end]` to create batches:

```python

# get batch of texts and ids

lines_batch = texts[i : i + batch_size]

# create ids if not provided

if ids:

ids_batch = ids[i : i + batch_size]

else:

ids_batch = [str(uuid.uuid4()) for n in range(i, i_end)]

```

Fortunately, there is a `zip` function a few lines down that cuts the potentially longer chunks, preventing an error from being raised — yet I don't think think `[i: i+batch_size]` should be maintained as it's confusing and not explicit

Raised a PR here #907 | https://github.com/langchain-ai/langchain/issues/906 | https://github.com/langchain-ai/langchain/pull/907 | 82c080c6e617d4959fb4ee808deeba075f361702 | 3aa53b44dd5f013e35c316d110d340a630b0abd1 | "2023-02-06T07:52:59" | python | "2023-02-06T20:45:56" | langchain/vectorstores/pinecone.py | for j, line in enumerate(lines_batch):

metadata[j][text_key] = line

to_upsert = zip(ids_batch, embeds, metadata)

if index is None:

pinecone.create_index(_index_name, dimension=len(embeds[0]))

index = pinecone.Index(_index_name)

index.upsert(vectors=list(to_upsert), namespace=namespace)

return cls(index, embedding.embed_query, text_key)

@classmethod

def from_existing_index(

cls,

index_name: str,

embedding: Embeddings,

text_key: str = "text",

namespace: Optional[str] = None,

) -> Pinecone:

"""Load pinecone vectorstore from index name."""

try:

import pinecone

except ImportError:

raise ValueError(

"Could not import pinecone python package. "

"Please install it with `pip install pinecone-client`."

)

return cls(

pinecone.Index(index_name, namespace), embedding.embed_query, text_key

) |

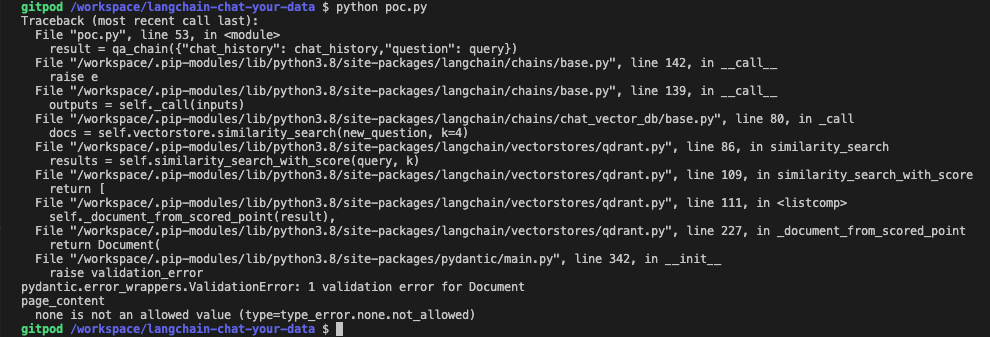

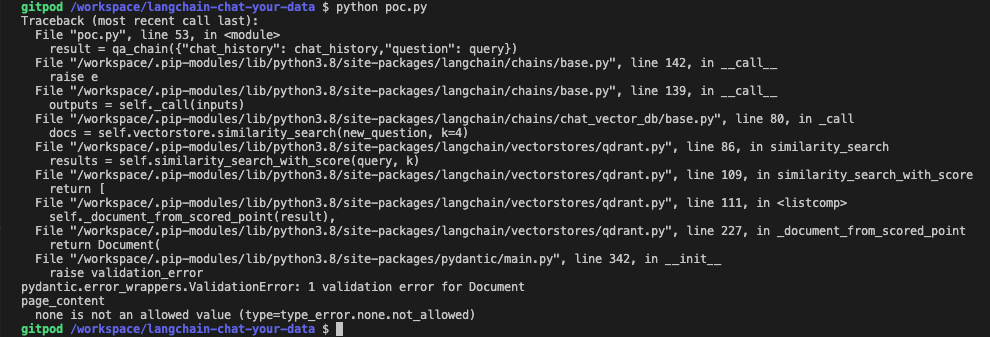

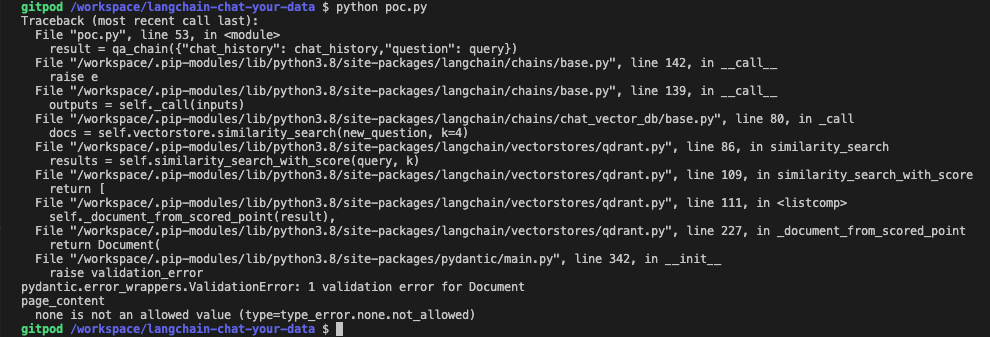

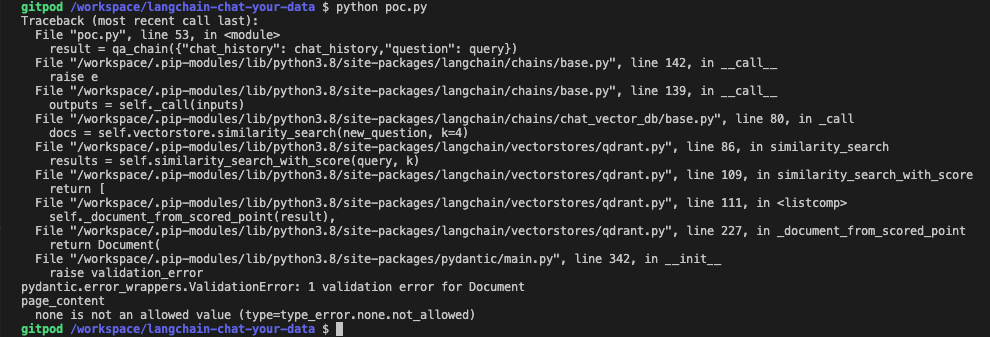

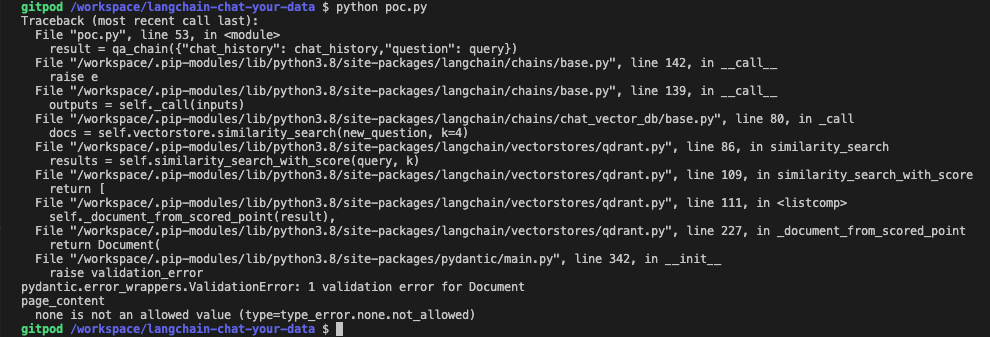

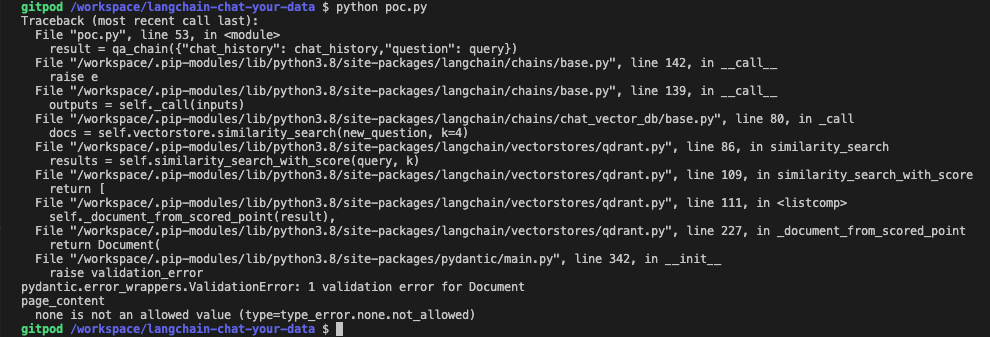

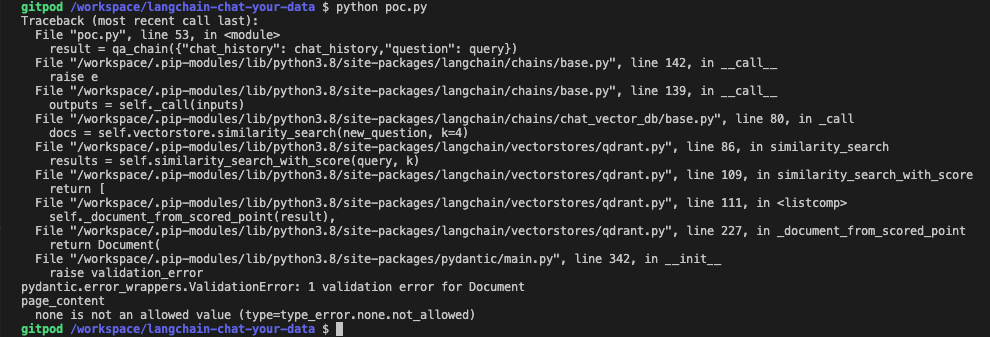

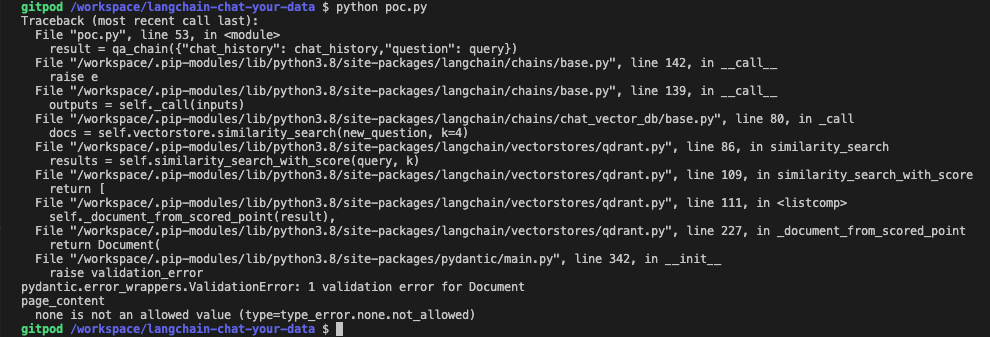

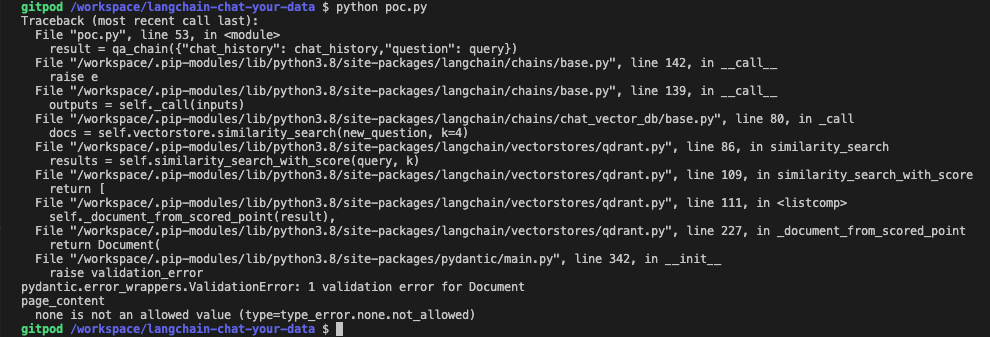

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,087 | Qdrant Wrapper issue: _document_from_score_point exposes incorrect key for content |

```

pydantic.error_wrappers.ValidationError: 1 validation error for Document

page_content

none is not an allowed value (type=type_error.none.not_allowed)

``` | https://github.com/langchain-ai/langchain/issues/1087 | https://github.com/langchain-ai/langchain/pull/1088 | 774550548242f44df9b219595cd46d9e238351e5 | 5d11e5da4077ad123bfff9f153f577fb5885af53 | "2023-02-16T13:18:41" | python | "2023-02-16T15:06:02" | langchain/vectorstores/qdrant.py | """Wrapper around Qdrant vector database."""

import uuid

from operator import itemgetter

from typing import Any, Callable, Iterable, List, Optional, Tuple

from langchain.docstore.document import Document

from langchain.embeddings.base import Embeddings

from langchain.utils import get_from_dict_or_env

from langchain.vectorstores import VectorStore

from langchain.vectorstores.utils import maximal_marginal_relevance

class Qdrant(VectorStore): |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,087 | Qdrant Wrapper issue: _document_from_score_point exposes incorrect key for content |

```

pydantic.error_wrappers.ValidationError: 1 validation error for Document

page_content

none is not an allowed value (type=type_error.none.not_allowed)

``` | https://github.com/langchain-ai/langchain/issues/1087 | https://github.com/langchain-ai/langchain/pull/1088 | 774550548242f44df9b219595cd46d9e238351e5 | 5d11e5da4077ad123bfff9f153f577fb5885af53 | "2023-02-16T13:18:41" | python | "2023-02-16T15:06:02" | langchain/vectorstores/qdrant.py | """Wrapper around Qdrant vector database.

To use you should have the ``qdrant-client`` package installed.

Example:

.. code-block:: python

from langchain import Qdrant

client = QdrantClient()

collection_name = "MyCollection"

qdrant = Qdrant(client, collection_name, embedding_function)

"""

def __init__(self, client: Any, collection_name: str, embedding_function: Callable):

"""Initialize with necessary components."""

try:

import qdrant_client

except ImportError:

raise ValueError(

"Could not import qdrant-client python package. "

"Please it install it with `pip install qdrant-client`."

)

if not isinstance(client, qdrant_client.QdrantClient):

raise ValueError(

f"client should be an instance of qdrant_client.QdrantClient, "

f"got {type(client)}"

)

self.client: qdrant_client.QdrantClient = client

self.collection_name = collection_name

self.embedding_function = embedding_function

def add_texts( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,087 | Qdrant Wrapper issue: _document_from_score_point exposes incorrect key for content |

```

pydantic.error_wrappers.ValidationError: 1 validation error for Document

page_content

none is not an allowed value (type=type_error.none.not_allowed)

``` | https://github.com/langchain-ai/langchain/issues/1087 | https://github.com/langchain-ai/langchain/pull/1088 | 774550548242f44df9b219595cd46d9e238351e5 | 5d11e5da4077ad123bfff9f153f577fb5885af53 | "2023-02-16T13:18:41" | python | "2023-02-16T15:06:02" | langchain/vectorstores/qdrant.py | self, texts: Iterable[str], metadatas: Optional[List[dict]] = None

) -> List[str]:

"""Run more texts through the embeddings and add to the vectorstore.

Args:

texts: Iterable of strings to add to the vectorstore.

metadatas: Optional list of metadatas associated with the texts.

Returns:

List of ids from adding the texts into the vectorstore.

"""

from qdrant_client.http import models as rest

ids = [uuid.uuid4().hex for _ in texts]

self.client.upsert(

collection_name=self.collection_name,

points=rest.Batch(

ids=ids,

vectors=[self.embedding_function(text) for text in texts],

payloads=self._build_payloads(texts, metadatas),

),

)

return ids

def similarity_search( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,087 | Qdrant Wrapper issue: _document_from_score_point exposes incorrect key for content |

```

pydantic.error_wrappers.ValidationError: 1 validation error for Document

page_content

none is not an allowed value (type=type_error.none.not_allowed)

``` | https://github.com/langchain-ai/langchain/issues/1087 | https://github.com/langchain-ai/langchain/pull/1088 | 774550548242f44df9b219595cd46d9e238351e5 | 5d11e5da4077ad123bfff9f153f577fb5885af53 | "2023-02-16T13:18:41" | python | "2023-02-16T15:06:02" | langchain/vectorstores/qdrant.py | self, query: str, k: int = 4, **kwargs: Any

) -> List[Document]:

"""Return docs most similar to query.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

Returns:

List of Documents most similar to the query.

"""

results = self.similarity_search_with_score(query, k)

return list(map(itemgetter(0), results))

def similarity_search_with_score( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,087 | Qdrant Wrapper issue: _document_from_score_point exposes incorrect key for content |

```

pydantic.error_wrappers.ValidationError: 1 validation error for Document

page_content

none is not an allowed value (type=type_error.none.not_allowed)

``` | https://github.com/langchain-ai/langchain/issues/1087 | https://github.com/langchain-ai/langchain/pull/1088 | 774550548242f44df9b219595cd46d9e238351e5 | 5d11e5da4077ad123bfff9f153f577fb5885af53 | "2023-02-16T13:18:41" | python | "2023-02-16T15:06:02" | langchain/vectorstores/qdrant.py | self, query: str, k: int = 4

) -> List[Tuple[Document, float]]:

"""Return docs most similar to query.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

Returns:

List of Documents most similar to the query and score for each

"""

embedding = self.embedding_function(query)

results = self.client.search(

collection_name=self.collection_name,

query_vector=embedding,

with_payload=True,

limit=k,

)

return [

(

self._document_from_scored_point(result),

result.score,

)

for result in results

]

def max_marginal_relevance_search( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,087 | Qdrant Wrapper issue: _document_from_score_point exposes incorrect key for content |

```

pydantic.error_wrappers.ValidationError: 1 validation error for Document

page_content

none is not an allowed value (type=type_error.none.not_allowed)

``` | https://github.com/langchain-ai/langchain/issues/1087 | https://github.com/langchain-ai/langchain/pull/1088 | 774550548242f44df9b219595cd46d9e238351e5 | 5d11e5da4077ad123bfff9f153f577fb5885af53 | "2023-02-16T13:18:41" | python | "2023-02-16T15:06:02" | langchain/vectorstores/qdrant.py | self, query: str, k: int = 4, fetch_k: int = 20

) -> List[Document]:

"""Return docs selected using the maximal marginal relevance.

Maximal marginal relevance optimizes for similarity to query AND diversity

among selected documents.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

fetch_k: Number of Documents to fetch to pass to MMR algorithm.

Returns:

List of Documents selected by maximal marginal relevance.

"""

embedding = self.embedding_function(query)

results = self.client.search(

collection_name=self.collection_name,

query_vector=embedding,

with_payload=True,

with_vectors=True,

limit=k,

)

embeddings = [result.vector for result in results]

mmr_selected = maximal_marginal_relevance(embedding, embeddings, k=k)

return [self._document_from_scored_point(results[i]) for i in mmr_selected]

@classmethod

def from_texts( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,087 | Qdrant Wrapper issue: _document_from_score_point exposes incorrect key for content |

```

pydantic.error_wrappers.ValidationError: 1 validation error for Document

page_content

none is not an allowed value (type=type_error.none.not_allowed)

``` | https://github.com/langchain-ai/langchain/issues/1087 | https://github.com/langchain-ai/langchain/pull/1088 | 774550548242f44df9b219595cd46d9e238351e5 | 5d11e5da4077ad123bfff9f153f577fb5885af53 | "2023-02-16T13:18:41" | python | "2023-02-16T15:06:02" | langchain/vectorstores/qdrant.py | cls,

texts: List[str],

embedding: Embeddings,

metadatas: Optional[List[dict]] = None,

**kwargs: Any,

) -> "Qdrant":

"""Construct Qdrant wrapper from raw documents.

This is a user friendly interface that:

1. Embeds documents.

2. Creates an in memory docstore

3. Initializes the Qdrant database

This is intended to be a quick way to get started.

Example:

.. code-block:: python

from langchain import Qdrant

from langchain.embeddings import OpenAIEmbeddings

embeddings = OpenAIEmbeddings()

qdrant = Qdrant.from_texts(texts, embeddings)

"""

try:

import qdrant_client

except ImportError:

raise ValueError(

"Could not import qdrant-client python package. "

"Please it install it with `pip install qdrant-client`." |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,087 | Qdrant Wrapper issue: _document_from_score_point exposes incorrect key for content |

```

pydantic.error_wrappers.ValidationError: 1 validation error for Document

page_content

none is not an allowed value (type=type_error.none.not_allowed)

``` | https://github.com/langchain-ai/langchain/issues/1087 | https://github.com/langchain-ai/langchain/pull/1088 | 774550548242f44df9b219595cd46d9e238351e5 | 5d11e5da4077ad123bfff9f153f577fb5885af53 | "2023-02-16T13:18:41" | python | "2023-02-16T15:06:02" | langchain/vectorstores/qdrant.py | )

from qdrant_client.http import models as rest

partial_embeddings = embedding.embed_documents(texts[:1])

vector_size = len(partial_embeddings[0])

qdrant_host = get_from_dict_or_env(kwargs, "host", "QDRANT_HOST")

kwargs.pop("host")

collection_name = kwargs.pop("collection_name", uuid.uuid4().hex)

distance_func = kwargs.pop("distance_func", "Cosine").upper()

client = qdrant_client.QdrantClient(host=qdrant_host, **kwargs)

client.recreate_collection(

collection_name=collection_name,

vectors_config=rest.VectorParams(

size=vector_size,

distance=rest.Distance[distance_func],

),

)

embeddings = embedding.embed_documents(texts)

client.upsert(

collection_name=collection_name,

points=rest.Batch(

ids=[uuid.uuid4().hex for _ in texts],

vectors=embeddings,

payloads=cls._build_payloads(texts, metadatas),

),

)

return cls(client, collection_name, embedding.embed_query)

@classmethod

def _build_payloads( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,087 | Qdrant Wrapper issue: _document_from_score_point exposes incorrect key for content |

```

pydantic.error_wrappers.ValidationError: 1 validation error for Document

page_content

none is not an allowed value (type=type_error.none.not_allowed)

``` | https://github.com/langchain-ai/langchain/issues/1087 | https://github.com/langchain-ai/langchain/pull/1088 | 774550548242f44df9b219595cd46d9e238351e5 | 5d11e5da4077ad123bfff9f153f577fb5885af53 | "2023-02-16T13:18:41" | python | "2023-02-16T15:06:02" | langchain/vectorstores/qdrant.py | cls, texts: Iterable[str], metadatas: Optional[List[dict]]

) -> List[dict]:

return [

{

"page_content": text,

"metadata": metadatas[i] if metadatas is not None else None,

}

for i, text in enumerate(texts)

]

@classmethod

def _document_from_scored_point(cls, scored_point: Any) -> Document:

return Document(

page_content=scored_point.payload.get("page_content"),

metadata=scored_point.payload.get("metadata") or {},

) |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,103 | SQLDatabase chain having issue running queries on the database after connecting | Langchain SQLDatabase and using SQL chain is giving me issues in the recent versions. My goal has been this:

- Connect to a sql server (say, Azure SQL server) using mssql+pyodbc driver (also tried mssql+pymssql driver)

`connection_url = URL.create(

"mssql+pyodbc",

query={"odbc_connect": conn}

)`

`sql_database = SQLDatabase.from_uri(connection_url)`

- Use this sql_database to create a SQLSequentialChain (also tried SQLChain)

`chain = SQLDatabaseSequentialChain.from_llm(

llm=self.llm,

database=sql_database,

verbose=False,

query_prompt=chain_prompt)`

- Query this chain

However, in the most recent version of langchain 0.0.88, I get this issue:

<img width="663" alt="image" src="https://user-images.githubusercontent.com/25394373/219547335-4108f02e-4721-425a-a7a3-199a70cd97f1.png">

And in the previous version 0.0.86, I was getting this:

<img width="646" alt="image" src="https://user-images.githubusercontent.com/25394373/219547750-f46f1ecb-2151-4700-8dae-e2c356f79aea.png">

A few days back, this worked - but I didn't track which version that was so I have been unable to make this work. Please help look into this. | https://github.com/langchain-ai/langchain/issues/1103 | https://github.com/langchain-ai/langchain/pull/1129 | 1ed708391e80a4de83e859b8364a32cc222df9ef | c39ef70aa457dcfcf8ddcf61f89dd69d55307744 | "2023-02-17T04:18:02" | python | "2023-02-17T21:39:44" | langchain/sql_database.py | """SQLAlchemy wrapper around a database."""

from __future__ import annotations

import ast

from typing import Any, Iterable, List, Optional

from sqlalchemy import create_engine, inspect

from sqlalchemy.engine import Engine

_TEMPLATE_PREFIX = """Table data will be described in the following format:

Table 'table name' has columns: {

column1 name: (column1 type, [list of example values for column1]),

column2 name: (column2 type, [list of example values for column2]),

...

}

These are the tables you can use, together with their column information:

"""

class SQLDatabase:

"""SQLAlchemy wrapper around a database."""

def __init__(

self,

engine: Engine,

schema: Optional[str] = None,

ignore_tables: Optional[List[str]] = None,

include_tables: Optional[List[str]] = None,

sample_rows_in_table_info: int = 3,

):

"""Create engine from database URI.""" |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,103 | SQLDatabase chain having issue running queries on the database after connecting | Langchain SQLDatabase and using SQL chain is giving me issues in the recent versions. My goal has been this:

- Connect to a sql server (say, Azure SQL server) using mssql+pyodbc driver (also tried mssql+pymssql driver)

`connection_url = URL.create(

"mssql+pyodbc",

query={"odbc_connect": conn}

)`

`sql_database = SQLDatabase.from_uri(connection_url)`

- Use this sql_database to create a SQLSequentialChain (also tried SQLChain)

`chain = SQLDatabaseSequentialChain.from_llm(

llm=self.llm,

database=sql_database,

verbose=False,

query_prompt=chain_prompt)`

- Query this chain

However, in the most recent version of langchain 0.0.88, I get this issue:

<img width="663" alt="image" src="https://user-images.githubusercontent.com/25394373/219547335-4108f02e-4721-425a-a7a3-199a70cd97f1.png">

And in the previous version 0.0.86, I was getting this:

<img width="646" alt="image" src="https://user-images.githubusercontent.com/25394373/219547750-f46f1ecb-2151-4700-8dae-e2c356f79aea.png">

A few days back, this worked - but I didn't track which version that was so I have been unable to make this work. Please help look into this. | https://github.com/langchain-ai/langchain/issues/1103 | https://github.com/langchain-ai/langchain/pull/1129 | 1ed708391e80a4de83e859b8364a32cc222df9ef | c39ef70aa457dcfcf8ddcf61f89dd69d55307744 | "2023-02-17T04:18:02" | python | "2023-02-17T21:39:44" | langchain/sql_database.py | self._engine = engine

self._schema = schema

if include_tables and ignore_tables:

raise ValueError("Cannot specify both include_tables and ignore_tables")

self._inspector = inspect(self._engine)

self._all_tables = set(self._inspector.get_table_names(schema=schema))

self._include_tables = set(include_tables) if include_tables else set()

if self._include_tables:

missing_tables = self._include_tables - self._all_tables

if missing_tables:

raise ValueError(

f"include_tables {missing_tables} not found in database"

)

self._ignore_tables = set(ignore_tables) if ignore_tables else set()

if self._ignore_tables:

missing_tables = self._ignore_tables - self._all_tables

if missing_tables:

raise ValueError(

f"ignore_tables {missing_tables} not found in database"

)

self._sample_rows_in_table_info = sample_rows_in_table_info

@classmethod

def from_uri(cls, database_uri: str, **kwargs: Any) -> SQLDatabase:

"""Construct a SQLAlchemy engine from URI."""

return cls(create_engine(database_uri), **kwargs)

@property

def dialect(self) -> str:

"""Return string representation of dialect to use."""

return self._engine.dialect.name

def get_table_names(self) -> Iterable[str]: |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,103 | SQLDatabase chain having issue running queries on the database after connecting | Langchain SQLDatabase and using SQL chain is giving me issues in the recent versions. My goal has been this:

- Connect to a sql server (say, Azure SQL server) using mssql+pyodbc driver (also tried mssql+pymssql driver)

`connection_url = URL.create(

"mssql+pyodbc",

query={"odbc_connect": conn}

)`

`sql_database = SQLDatabase.from_uri(connection_url)`

- Use this sql_database to create a SQLSequentialChain (also tried SQLChain)

`chain = SQLDatabaseSequentialChain.from_llm(

llm=self.llm,

database=sql_database,

verbose=False,

query_prompt=chain_prompt)`

- Query this chain

However, in the most recent version of langchain 0.0.88, I get this issue:

<img width="663" alt="image" src="https://user-images.githubusercontent.com/25394373/219547335-4108f02e-4721-425a-a7a3-199a70cd97f1.png">

And in the previous version 0.0.86, I was getting this:

<img width="646" alt="image" src="https://user-images.githubusercontent.com/25394373/219547750-f46f1ecb-2151-4700-8dae-e2c356f79aea.png">

A few days back, this worked - but I didn't track which version that was so I have been unable to make this work. Please help look into this. | https://github.com/langchain-ai/langchain/issues/1103 | https://github.com/langchain-ai/langchain/pull/1129 | 1ed708391e80a4de83e859b8364a32cc222df9ef | c39ef70aa457dcfcf8ddcf61f89dd69d55307744 | "2023-02-17T04:18:02" | python | "2023-02-17T21:39:44" | langchain/sql_database.py | """Get names of tables available."""

if self._include_tables:

return self._include_tables

return self._all_tables - self._ignore_tables

@property

def table_info(self) -> str:

"""Information about all tables in the database."""

return self.get_table_info()

def get_table_info(self, table_names: Optional[List[str]] = None) -> str:

"""Get information about specified tables.

Follows best practices as specified in: Rajkumar et al, 2022

(https://arxiv.org/abs/2204.00498)

If `sample_rows_in_table_info`, the specified number of sample rows will be

appended to each table description. This can increase performance as

demonstrated in the paper.

"""

all_table_names = self.get_table_names()

if table_names is not None:

missing_tables = set(table_names).difference(all_table_names)

if missing_tables:

raise ValueError(f"table_names {missing_tables} not found in database")

all_table_names = table_names

tables = []

for table_name in all_table_names:

columns = []

create_table = self.run(

(

"SELECT sql FROM sqlite_master WHERE "

f"type='table' AND name='{table_name}'"

), |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,103 | SQLDatabase chain having issue running queries on the database after connecting | Langchain SQLDatabase and using SQL chain is giving me issues in the recent versions. My goal has been this:

- Connect to a sql server (say, Azure SQL server) using mssql+pyodbc driver (also tried mssql+pymssql driver)

`connection_url = URL.create(

"mssql+pyodbc",

query={"odbc_connect": conn}

)`

`sql_database = SQLDatabase.from_uri(connection_url)`

- Use this sql_database to create a SQLSequentialChain (also tried SQLChain)

`chain = SQLDatabaseSequentialChain.from_llm(

llm=self.llm,

database=sql_database,

verbose=False,

query_prompt=chain_prompt)`

- Query this chain

However, in the most recent version of langchain 0.0.88, I get this issue:

<img width="663" alt="image" src="https://user-images.githubusercontent.com/25394373/219547335-4108f02e-4721-425a-a7a3-199a70cd97f1.png">

And in the previous version 0.0.86, I was getting this:

<img width="646" alt="image" src="https://user-images.githubusercontent.com/25394373/219547750-f46f1ecb-2151-4700-8dae-e2c356f79aea.png">

A few days back, this worked - but I didn't track which version that was so I have been unable to make this work. Please help look into this. | https://github.com/langchain-ai/langchain/issues/1103 | https://github.com/langchain-ai/langchain/pull/1129 | 1ed708391e80a4de83e859b8364a32cc222df9ef | c39ef70aa457dcfcf8ddcf61f89dd69d55307744 | "2023-02-17T04:18:02" | python | "2023-02-17T21:39:44" | langchain/sql_database.py | fetch="one",

)

for column in self._inspector.get_columns(table_name, schema=self._schema):

columns.append(column["name"])

if self._sample_rows_in_table_info:

select_star = (

f"SELECT * FROM '{table_name}' LIMIT "

f"{self._sample_rows_in_table_info}"

)

sample_rows = self.run(select_star)

sample_rows_ls = ast.literal_eval(sample_rows)

sample_rows_ls = list(

map(lambda ls: [str(i)[:100] for i in ls], sample_rows_ls)

)

columns_str = " ".join(columns)

sample_rows_str = "\n".join([" ".join(row) for row in sample_rows_ls])

tables.append(

create_table

+ "\n\n"

+ select_star

+ "\n"

+ columns_str

+ "\n"

+ sample_rows_str

)

else:

tables.append(create_table)

final_str = "\n\n\n".join(tables)

return final_str

def run(self, command: str, fetch: str = "all") -> str: |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,103 | SQLDatabase chain having issue running queries on the database after connecting | Langchain SQLDatabase and using SQL chain is giving me issues in the recent versions. My goal has been this:

- Connect to a sql server (say, Azure SQL server) using mssql+pyodbc driver (also tried mssql+pymssql driver)

`connection_url = URL.create(

"mssql+pyodbc",

query={"odbc_connect": conn}

)`

`sql_database = SQLDatabase.from_uri(connection_url)`

- Use this sql_database to create a SQLSequentialChain (also tried SQLChain)

`chain = SQLDatabaseSequentialChain.from_llm(

llm=self.llm,

database=sql_database,

verbose=False,

query_prompt=chain_prompt)`

- Query this chain

However, in the most recent version of langchain 0.0.88, I get this issue:

<img width="663" alt="image" src="https://user-images.githubusercontent.com/25394373/219547335-4108f02e-4721-425a-a7a3-199a70cd97f1.png">

And in the previous version 0.0.86, I was getting this:

<img width="646" alt="image" src="https://user-images.githubusercontent.com/25394373/219547750-f46f1ecb-2151-4700-8dae-e2c356f79aea.png">

A few days back, this worked - but I didn't track which version that was so I have been unable to make this work. Please help look into this. | https://github.com/langchain-ai/langchain/issues/1103 | https://github.com/langchain-ai/langchain/pull/1129 | 1ed708391e80a4de83e859b8364a32cc222df9ef | c39ef70aa457dcfcf8ddcf61f89dd69d55307744 | "2023-02-17T04:18:02" | python | "2023-02-17T21:39:44" | langchain/sql_database.py | """Execute a SQL command and return a string representing the results.

If the statement returns rows, a string of the results is returned.

If the statement returns no rows, an empty string is returned.

"""

with self._engine.begin() as connection:

if self._schema is not None:

connection.exec_driver_sql(f"SET search_path TO {self._schema}")

cursor = connection.exec_driver_sql(command)

if cursor.returns_rows:

if fetch == "all":

result = cursor.fetchall()

elif fetch == "one":

result = cursor.fetchone()[0]

else:

raise ValueError("Fetch parameter must be either 'one' or 'all'")

return str(result)

return "" |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,186 | max_marginal_relevance_search_by_vector with k > doc size | #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | https://github.com/langchain-ai/langchain/issues/1186 | https://github.com/langchain-ai/langchain/pull/1187 | 159c560c95ed9e11cc740040cc6ee07abb871ded | c5015d77e23b24b3b65d803271f1fa9018d53a05 | "2023-02-20T19:19:29" | python | "2023-02-21T00:39:13" | langchain/vectorstores/faiss.py | """Wrapper around FAISS vector database."""

from __future__ import annotations

import pickle

import uuid

from pathlib import Path

from typing import Any, Callable, Dict, Iterable, List, Optional, Tuple

import numpy as np

from langchain.docstore.base import AddableMixin, Docstore

from langchain.docstore.document import Document

from langchain.docstore.in_memory import InMemoryDocstore

from langchain.embeddings.base import Embeddings

from langchain.vectorstores.base import VectorStore

from langchain.vectorstores.utils import maximal_marginal_relevance

def dependable_faiss_import() -> Any:

"""Import faiss if available, otherwise raise error."""

try:

import faiss

except ImportError:

raise ValueError(

"Could not import faiss python package. "

"Please it install it with `pip install faiss` "

"or `pip install faiss-cpu` (depending on Python version)."

)

return faiss

class FAISS(VectorStore): |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,186 | max_marginal_relevance_search_by_vector with k > doc size | #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | https://github.com/langchain-ai/langchain/issues/1186 | https://github.com/langchain-ai/langchain/pull/1187 | 159c560c95ed9e11cc740040cc6ee07abb871ded | c5015d77e23b24b3b65d803271f1fa9018d53a05 | "2023-02-20T19:19:29" | python | "2023-02-21T00:39:13" | langchain/vectorstores/faiss.py | """Wrapper around FAISS vector database.

To use, you should have the ``faiss`` python package installed.

Example:

.. code-block:: python

from langchain import FAISS

faiss = FAISS(embedding_function, index, docstore)

"""

def __init__(

self,

embedding_function: Callable,

index: Any,

docstore: Docstore,

index_to_docstore_id: Dict[int, str],

):

"""Initialize with necessary components."""

self.embedding_function = embedding_function

self.index = index

self.docstore = docstore

self.index_to_docstore_id = index_to_docstore_id

def add_texts( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,186 | max_marginal_relevance_search_by_vector with k > doc size | #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | https://github.com/langchain-ai/langchain/issues/1186 | https://github.com/langchain-ai/langchain/pull/1187 | 159c560c95ed9e11cc740040cc6ee07abb871ded | c5015d77e23b24b3b65d803271f1fa9018d53a05 | "2023-02-20T19:19:29" | python | "2023-02-21T00:39:13" | langchain/vectorstores/faiss.py | self, texts: Iterable[str], metadatas: Optional[List[dict]] = None

) -> List[str]:

"""Run more texts through the embeddings and add to the vectorstore.

Args:

texts: Iterable of strings to add to the vectorstore.

metadatas: Optional list of metadatas associated with the texts.

Returns:

List of ids from adding the texts into the vectorstore.

"""

if not isinstance(self.docstore, AddableMixin):

raise ValueError(

"If trying to add texts, the underlying docstore should support "

f"adding items, which {self.docstore} does not"

)

embeddings = [self.embedding_function(text) for text in texts]

documents = []

for i, text in enumerate(texts):

metadata = metadatas[i] if metadatas else {}

documents.append(Document(page_content=text, metadata=metadata))

starting_len = len(self.index_to_docstore_id)

self.index.add(np.array(embeddings, dtype=np.float32))

full_info = [

(starting_len + i, str(uuid.uuid4()), doc) |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,186 | max_marginal_relevance_search_by_vector with k > doc size | #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | https://github.com/langchain-ai/langchain/issues/1186 | https://github.com/langchain-ai/langchain/pull/1187 | 159c560c95ed9e11cc740040cc6ee07abb871ded | c5015d77e23b24b3b65d803271f1fa9018d53a05 | "2023-02-20T19:19:29" | python | "2023-02-21T00:39:13" | langchain/vectorstores/faiss.py | for i, doc in enumerate(documents)

]

self.docstore.add({_id: doc for _, _id, doc in full_info})

index_to_id = {index: _id for index, _id, _ in full_info}

self.index_to_docstore_id.update(index_to_id)

return [_id for _, _id, _ in full_info]

def similarity_search_with_score_by_vector(

self, embedding: List[float], k: int = 4

) -> List[Tuple[Document, float]]:

"""Return docs most similar to query.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

Returns:

List of Documents most similar to the query and score for each

"""

scores, indices = self.index.search(np.array([embedding], dtype=np.float32), k)

docs = []

for j, i in enumerate(indices[0]):

if i == -1:

continue

_id = self.index_to_docstore_id[i]

doc = self.docstore.search(_id)

if not isinstance(doc, Document):

raise ValueError(f"Could not find document for id {_id}, got {doc}")

docs.append((doc, scores[0][j]))

return docs

def similarity_search_with_score( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,186 | max_marginal_relevance_search_by_vector with k > doc size | #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | https://github.com/langchain-ai/langchain/issues/1186 | https://github.com/langchain-ai/langchain/pull/1187 | 159c560c95ed9e11cc740040cc6ee07abb871ded | c5015d77e23b24b3b65d803271f1fa9018d53a05 | "2023-02-20T19:19:29" | python | "2023-02-21T00:39:13" | langchain/vectorstores/faiss.py | self, query: str, k: int = 4

) -> List[Tuple[Document, float]]:

"""Return docs most similar to query.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

Returns:

List of Documents most similar to the query and score for each

"""

embedding = self.embedding_function(query)

docs = self.similarity_search_with_score_by_vector(embedding, k)

return docs

def similarity_search_by_vector( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,186 | max_marginal_relevance_search_by_vector with k > doc size | #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | https://github.com/langchain-ai/langchain/issues/1186 | https://github.com/langchain-ai/langchain/pull/1187 | 159c560c95ed9e11cc740040cc6ee07abb871ded | c5015d77e23b24b3b65d803271f1fa9018d53a05 | "2023-02-20T19:19:29" | python | "2023-02-21T00:39:13" | langchain/vectorstores/faiss.py | self, embedding: List[float], k: int = 4, **kwargs: Any

) -> List[Document]:

"""Return docs most similar to embedding vector.

Args:

embedding: Embedding to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

Returns:

List of Documents most similar to the embedding.

"""

docs_and_scores = self.similarity_search_with_score_by_vector(embedding, k)

return [doc for doc, _ in docs_and_scores]

def similarity_search(

self, query: str, k: int = 4, **kwargs: Any

) -> List[Document]:

"""Return docs most similar to query.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

Returns:

List of Documents most similar to the query.

"""

docs_and_scores = self.similarity_search_with_score(query, k)

return [doc for doc, _ in docs_and_scores]

def max_marginal_relevance_search_by_vector( |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,186 | max_marginal_relevance_search_by_vector with k > doc size | #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | https://github.com/langchain-ai/langchain/issues/1186 | https://github.com/langchain-ai/langchain/pull/1187 | 159c560c95ed9e11cc740040cc6ee07abb871ded | c5015d77e23b24b3b65d803271f1fa9018d53a05 | "2023-02-20T19:19:29" | python | "2023-02-21T00:39:13" | langchain/vectorstores/faiss.py | self, embedding: List[float], k: int = 4, fetch_k: int = 20

) -> List[Document]:

"""Return docs selected using the maximal marginal relevance.

Maximal marginal relevance optimizes for similarity to query AND diversity

among selected documents.

Args:

embedding: Embedding to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

fetch_k: Number of Documents to fetch to pass to MMR algorithm.

Returns:

List of Documents selected by maximal marginal relevance.

"""

_, indices = self.index.search(np.array([embedding], dtype=np.float32), fetch_k)

embeddings = [self.index.reconstruct(int(i)) for i in indices[0] if i != -1]

mmr_selected = maximal_marginal_relevance(

np.array([embedding], dtype=np.float32), embeddings, k=k

)

selected_indices = [indices[0][i] for i in mmr_selected]

docs = []

for i in selected_indices: |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,186 | max_marginal_relevance_search_by_vector with k > doc size | #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | https://github.com/langchain-ai/langchain/issues/1186 | https://github.com/langchain-ai/langchain/pull/1187 | 159c560c95ed9e11cc740040cc6ee07abb871ded | c5015d77e23b24b3b65d803271f1fa9018d53a05 | "2023-02-20T19:19:29" | python | "2023-02-21T00:39:13" | langchain/vectorstores/faiss.py | _id = self.index_to_docstore_id[i]

if _id == -1:

continue

doc = self.docstore.search(_id)

if not isinstance(doc, Document):

raise ValueError(f"Could not find document for id {_id}, got {doc}")

docs.append(doc)

return docs

def max_marginal_relevance_search(

self, query: str, k: int = 4, fetch_k: int = 20

) -> List[Document]:

"""Return docs selected using the maximal marginal relevance.

Maximal marginal relevance optimizes for similarity to query AND diversity

among selected documents.

Args:

query: Text to look up documents similar to.

k: Number of Documents to return. Defaults to 4.

fetch_k: Number of Documents to fetch to pass to MMR algorithm.

Returns:

List of Documents selected by maximal marginal relevance.

"""

embedding = self.embedding_function(query)

docs = self.max_marginal_relevance_search_by_vector(embedding, k, fetch_k)

return docs

@classmethod

def from_texts(

cls,

texts: List[str],

embedding: Embeddings, |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,186 | max_marginal_relevance_search_by_vector with k > doc size | #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | https://github.com/langchain-ai/langchain/issues/1186 | https://github.com/langchain-ai/langchain/pull/1187 | 159c560c95ed9e11cc740040cc6ee07abb871ded | c5015d77e23b24b3b65d803271f1fa9018d53a05 | "2023-02-20T19:19:29" | python | "2023-02-21T00:39:13" | langchain/vectorstores/faiss.py | metadatas: Optional[List[dict]] = None,

**kwargs: Any,

) -> FAISS:

"""Construct FAISS wrapper from raw documents.

This is a user friendly interface that:

1. Embeds documents.

2. Creates an in memory docstore

3. Initializes the FAISS database

This is intended to be a quick way to get started.

Example:

.. code-block:: python

from langchain import FAISS

from langchain.embeddings import OpenAIEmbeddings

embeddings = OpenAIEmbeddings()

faiss = FAISS.from_texts(texts, embeddings)

"""

faiss = dependable_faiss_import()

embeddings = embedding.embed_documents(texts)

index = faiss.IndexFlatL2(len(embeddings[0]))

index.add(np.array(embeddings, dtype=np.float32))

documents = []

for i, text in enumerate(texts):

metadata = metadatas[i] if metadatas else {}

documents.append(Document(page_content=text, metadata=metadata))

index_to_id = {i: str(uuid.uuid4()) for i in range(len(documents))}

docstore = InMemoryDocstore(

{index_to_id[i]: doc for i, doc in enumerate(documents)}

)

return cls(embedding.embed_query, index, docstore, index_to_id)

def save_local(self, folder_path: str) -> None: |

closed | langchain-ai/langchain | https://github.com/langchain-ai/langchain | 1,186 | max_marginal_relevance_search_by_vector with k > doc size | #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | https://github.com/langchain-ai/langchain/issues/1186 | https://github.com/langchain-ai/langchain/pull/1187 | 159c560c95ed9e11cc740040cc6ee07abb871ded | c5015d77e23b24b3b65d803271f1fa9018d53a05 | "2023-02-20T19:19:29" | python | "2023-02-21T00:39:13" | langchain/vectorstores/faiss.py | """Save FAISS index, docstore, and index_to_docstore_id to disk.

Args:

folder_path: folder path to save index, docstore,

and index_to_docstore_id to.

"""

path = Path(folder_path)

path.mkdir(exist_ok=True, parents=True)

faiss = dependable_faiss_import()

faiss.write_index(self.index, str(path / "index.faiss"))

with open(path / "index.pkl", "wb") as f:

pickle.dump((self.docstore, self.index_to_docstore_id), f)

@classmethod

def load_local(cls, folder_path: str, embeddings: Embeddings) -> FAISS:

"""Load FAISS index, docstore, and index_to_docstore_id to disk.

Args:

folder_path: folder path to load index, docstore,

and index_to_docstore_id from.

embeddings: Embeddings to use when generating queries

"""

path = Path(folder_path)