

You need to first download and complete the End User Licence Agreement (EULA) form, and return the signed EULA by email to ccdb@cs.cardiff.ac.uk.


Dataset Card for Cardiff Conversation Database (CCDb)

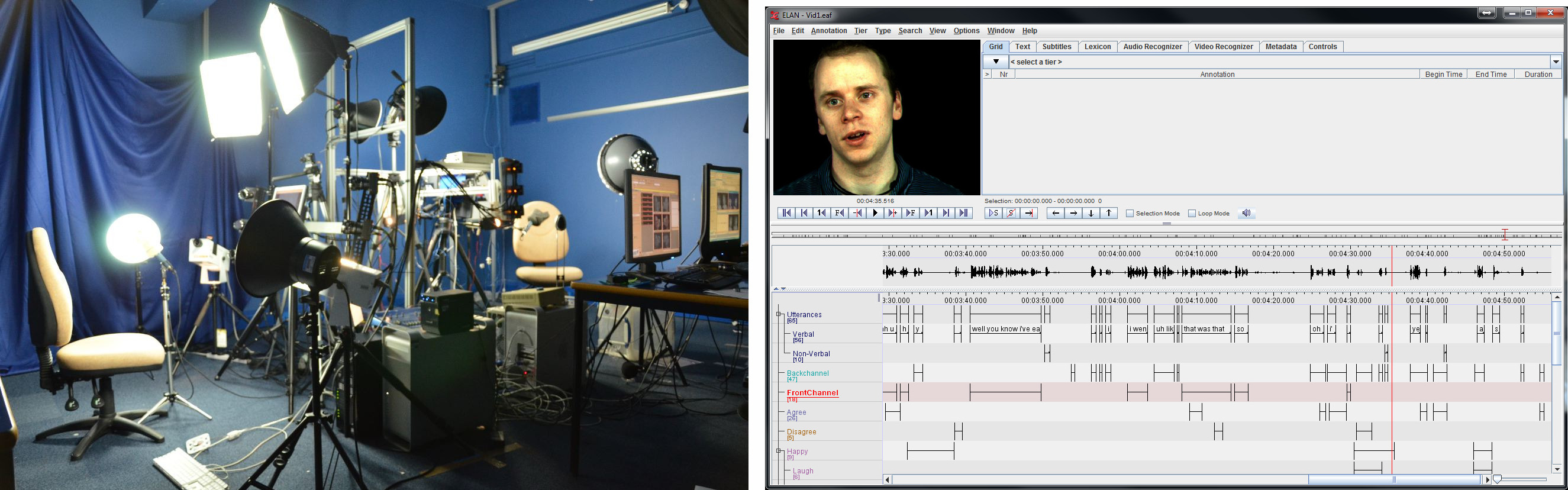

The Cardiff Conversation Database (CCDb) is an audio-visual database of natural, unscripted conversations in which neither participant's role (i.e. speaker or listener) is predefined. It is intended as a resource for the computer vision, affective computing and cognitive science communities, both for analysing natural dyadic conversational data and for developing conversation models.

Although the project captured both 2D and 3D data, only the 2D data is provided here.

Gaining Access to the Dataset

To gain access, download and complete the End User Licence Agreement (EULA) form, and return the signed EULA by email to ccdb@cs.cardiff.ac.uk.

Dataset Structure

CCDb-2013: Data captured and annotated in 2013; used in Aubrey et al. (2013).

CCDb-2015: Data captured and annotated in 2015; used in Vandeventer et al. (2015).

CCDb-2024: Data captured in 2013; annotations will be released in a few months.

The dataset consists of MP4 video files and ELAN annotation files (.eaf). File names identify the participants: for example, P1_P2_0304 denotes a conversation between the speakers with anonymised IDs 1 and 2. Separate recordings and annotations were made for the two halves of the conversation, distinguished by a camera suffix: P1_P2_0304_C1 contains data from camera 1, and P1_P2_0304_C2 contains data from camera 2.
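The naming convention can be decoded programmatically, for instance to pair the two camera views of a conversation. The sketch below is illustrative only: it assumes each full file name is the stem shown above plus an `.mp4` or `.eaf` extension (e.g. `P1_P2_0304_C1.mp4`), and it keeps the four-digit code as an opaque string because its meaning is not documented in this card. `parse_ccdb_name`, `group_by_conversation` and `CCDbFile` are hypothetical names.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional

# Assumed pattern: P<id>_P<id>_<code>[_C<camera>].<mp4|eaf>
_NAME_RE = re.compile(
    r"^P(?P<a>\d+)_P(?P<b>\d+)_(?P<code>\d+)(?:_C(?P<cam>\d+))?\.(?P<ext>mp4|eaf)$"
)


@dataclass(frozen=True)
class CCDbFile:
    speaker_a: int         # first anonymised speaker ID
    speaker_b: int         # second anonymised speaker ID
    code: str              # conversation code, kept as an opaque string
    camera: Optional[int]  # camera number from the _C suffix, if present
    ext: str               # "mp4" (video) or "eaf" (ELAN annotations)

    @property
    def conversation(self) -> str:
        """Stem shared by both camera views of one conversation."""
        return f"P{self.speaker_a}_P{self.speaker_b}_{self.code}"


def parse_ccdb_name(filename: str) -> CCDbFile:
    """Split a CCDb file name into its components (hypothetical helper)."""
    m = _NAME_RE.match(filename)
    if m is None:
        raise ValueError(f"unexpected CCDb file name: {filename!r}")
    cam = m.group("cam")
    return CCDbFile(
        speaker_a=int(m.group("a")),
        speaker_b=int(m.group("b")),
        code=m.group("code"),
        camera=int(cam) if cam is not None else None,
        ext=m.group("ext"),
    )


def group_by_conversation(filenames: Iterable[str]) -> Dict[str, List[CCDbFile]]:
    """Collect all files (both cameras, video and annotations) per conversation."""
    groups: Dict[str, List[CCDbFile]] = defaultdict(list)
    for name in filenames:
        parsed = parse_ccdb_name(name)
        groups[parsed.conversation].append(parsed)
    return dict(groups)


info = parse_ccdb_name("P1_P2_0304_C1.mp4")
print(info.conversation, info.camera, info.ext)  # P1_P2_0304 1 mp4
```

Raising on unrecognised names, rather than skipping them silently, makes it obvious if a download contains files that do not follow the assumed pattern.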

Dataset Creation

The database currently consists of 44 conversations between pairs of speakers, each lasting approximately five minutes. There were 16 speakers in total (12 male and 4 female), between the ages of 5 and 56. Because 44 conversations involve 88 speaker slots but only 16 people took part, some speakers were paired multiple times with different conversational partners.

Some conversations have annotations for facial expressions, verbal and non-verbal utterances, and transcribed speech.
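ELAN `.eaf` files are XML, so these annotations can be read with the Python standard library. The sketch below assumes the usual EAF layout (a `TIME_ORDER` of `TIME_SLOT` elements with millisecond `TIME_VALUE`s, and `TIER` elements containing `ALIGNABLE_ANNOTATION`s); the tier names used in CCDb are not listed in this card, so inspect the returned keys. Symbolic `REF_ANNOTATION`s, which inherit their times from a parent tier, are skipped for brevity. `eaf_segments` and `read_eaf` are hypothetical helpers.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

Segment = Tuple[int, int, str]  # (start_ms, end_ms, annotation value)


def eaf_segments(root: ET.Element) -> Dict[str, List[Segment]]:
    """Time-aligned annotations of a parsed EAF document, keyed by tier ID."""
    # Map time-slot IDs to milliseconds; unaligned slots have no TIME_VALUE.
    slots: Dict[str, int] = {}
    for slot in root.iter("TIME_SLOT"):
        value = slot.get("TIME_VALUE")
        if value is not None:
            slots[slot.get("TIME_SLOT_ID")] = int(value)

    tiers: Dict[str, List[Segment]] = {}
    for tier in root.iter("TIER"):
        segments: List[Segment] = []
        for ann in tier.iter("ALIGNABLE_ANNOTATION"):
            start = slots.get(ann.get("TIME_SLOT_REF1"))
            end = slots.get(ann.get("TIME_SLOT_REF2"))
            if start is None or end is None:
                continue  # boundary is not time-aligned, so skip it
            text = ann.findtext("ANNOTATION_VALUE", default="")
            segments.append((start, end, text.strip()))
        tiers[tier.get("TIER_ID")] = sorted(segments)
    return tiers


def read_eaf(path: str) -> Dict[str, List[Segment]]:
    """Parse an .eaf file from disk and return its time-aligned segments."""
    return eaf_segments(ET.parse(path).getroot())


# Usage (hypothetical local path):
# tiers = read_eaf("P1_P2_0304_C1.eaf")
```

Keeping times in integer milliseconds, as ELAN stores them, avoids rounding until the segments are mapped onto video frames or audio samples.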

Data acquisition

Two 3dMD dynamic scanners captured 3D video, a Basler A312fc FireWire CCD camera captured 2D colour video at a standard video frame rate, and a microphone placed in front of the participant, out of view of the camera, captured audio at 44.1 kHz.
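Because audio is sampled at 44.1 kHz while the video frame rate is not stated in this card, relating the two streams means converting between frame indices and sample indices through time. A minimal sketch, assuming both streams already share a common time origin (for example after the buzzer/LED synchronisation described below); `fps` is left as a parameter and should be read from the video container, and the function names are hypothetical.

```python
import math

AUDIO_RATE_HZ = 44_100  # CCDb microphone sampling rate (44.1 kHz)


def frame_to_sample(frame_index: int, fps: float, audio_rate: int = AUDIO_RATE_HZ) -> int:
    """Audio sample index nearest to the start time of a video frame."""
    return round(frame_index * audio_rate / fps)


def sample_to_frame(sample_index: int, fps: float, audio_rate: int = AUDIO_RATE_HZ) -> int:
    """Index of the video frame whose time span contains an audio sample."""
    return math.floor(sample_index * fps / audio_rate)


# Example only: 25 fps is an illustrative value, not a documented CCDb setting.
print(AUDIO_RATE_HZ / 25)  # 1764.0 audio samples per frame
```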

To ensure that all audio and video could be reliably synchronised, each speaker had a handheld device with a buzzer and an LED (light-emitting diode), used to mark the beginning of each recording session. A single button activated the buzzer and the LED simultaneously. No equipment was altered between recording sessions, except for the height of the chair, which was adjusted so that the speaker's head was clearly visible to the cameras.
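Since one button fires the buzzer and the LED together, the offset between the audio and video streams can be estimated by locating each marker and subtracting their times. The sketch below illustrates that idea and is not the procedure used by the CCDb authors: it assumes the audio has been loaded as a list of float samples and that the video has been reduced to a hypothetical per-frame mean brightness of a region containing the LED, and it uses simple fixed thresholds that would need tuning on real recordings.

```python
from typing import Optional, Sequence


def first_crossing(values: Sequence[float], threshold: float) -> Optional[int]:
    """Index of the first value whose magnitude exceeds threshold, or None."""
    for i, v in enumerate(values):
        if abs(v) > threshold:
            return i
    return None


def sync_offset_seconds(
    audio: Sequence[float],
    audio_rate: int,
    led_brightness: Sequence[float],
    fps: float,
    audio_threshold: float,
    led_threshold: float,
) -> float:
    """Buzzer time in the audio minus LED time in the video, in seconds.

    A positive result means the marker appears later in the audio track,
    so audio timestamps should be reduced by this amount to align them.
    """
    buzz = first_crossing(audio, audio_threshold)
    led = first_crossing(led_brightness, led_threshold)
    if buzz is None or led is None:
        raise ValueError("sync marker not found in one of the streams")
    return buzz / audio_rate - led / fps


# Synthetic demo: buzzer starts at 1.0 s; LED lights at frame 20 (0.8 s at an assumed 25 fps).
audio = [0.0] * 44_100 + [0.9] * 100
led = [10.0] * 20 + [200.0] * 5
offset = sync_offset_seconds(audio, 44_100, led, 25.0, audio_threshold=0.5, led_threshold=100.0)
print(f"{offset:.3f}")  # 0.200
```

A threshold on the first loud sample is the simplest possible onset detector; on noisy recordings an energy envelope or a matched filter for the buzzer tone would likely be more robust.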

Dataset Funding

CCDb was created as part of the research project 'Actions and Events in Images and Videos' at the Welsh Government-funded Research Institute of Visual Computing (RIVIC), which brought together the research programmes of the computer science departments at Aberystwyth, Bangor, Cardiff and Swansea Universities.

Citation

BibTeX:

@inproceedings{aubrey2013cardiff,
  title={Cardiff conversation database (CCDb): A database of natural dyadic conversations},
  author={Aubrey, Andrew J and Marshall, David and Rosin, Paul L and Vandeventer, Jason and Cunningham, Douglas W and Wallraven, Christian},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops},
  pages={277--282},
  year={2013}
}

@inproceedings{VandeventerARM15,
  title={4D Cardiff Conversation Database (4D CCDb): A 4D database of natural, dyadic conversations},
  author={Vandeventer, Jason and Aubrey, Andrew J and Rosin, Paul L and Marshall, David},
  booktitle={Joint Conf. on Facial Analysis, Animation and Auditory-Visual Speech Processing},
  pages={157--162},
  year={2015}
}

Additional Annotations of CCDb

CCDb Head Gesture (CCDb-HG)

- https://zenodo.org/records/13927536

- CCDb-HG provides frame-level annotations of six head gestures: Nod, Shake, Tilt, Turn, Up/Down and Waggle.

- P. Vuillecard, A. Farkhondeh, M. Villamizar and J.-M. Odobez, "CCDb-HG: Novel Annotations and Gaze-Aware Representations for Head Gesture Recognition", International Conference on Automatic Face and Gesture Recognition (FG), pp. 1-9, 2024.
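Because CCDb-HG labels every frame, it is often convenient to collapse consecutive identical labels into gesture segments. The sketch below assumes the per-frame labels have already been loaded, in frame order, into a list of strings whose gesture names match the six listed above, with any other value (here a hypothetical "None") meaning no gesture; the layout of the Zenodo files is not described in this card. `frames_to_segments` is a hypothetical helper.

```python
from itertools import groupby
from typing import List, Sequence, Tuple

# Gesture names as listed for CCDb-HG; exact label strings in the files are an assumption.
GESTURES = {"Nod", "Shake", "Tilt", "Turn", "Up/Down", "Waggle"}


def frames_to_segments(labels: Sequence[str]) -> List[Tuple[int, int, str]]:
    """Collapse per-frame labels into (start_frame, end_frame_exclusive, label)
    runs, keeping only runs labelled with one of the six gestures."""
    segments: List[Tuple[int, int, str]] = []
    start = 0
    for label, run in groupby(labels):
        length = sum(1 for _ in run)
        if label in GESTURES:
            segments.append((start, start + length, label))
        start += length
    return segments


labels = ["None", "Nod", "Nod", "Nod", "None", "Shake", "Shake"]
print(frames_to_segments(labels))  # [(1, 4, 'Nod'), (5, 7, 'Shake')]
```

Exclusive end indices make segment lengths a simple subtraction and keep adjacent segments from overlapping.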

Interaction Behavior Database

- https://zenodo.org/records/3820510

- Contains annotated segments of the smiles and laughs produced by interlocutors in conversational contexts, together with their intensities, as well as segments marking each interlocutor's role (speaker, listener or none) during the conversation.

- El Haddad, Kevin, Sandeep Nallan Chakravarthula, and James Kennedy, "Smile and Laugh Dynamics in Naturalistic Dyadic Interactions: Intensity Levels, Sequences and Roles", International Conference on Multimodal Interaction, 2019.
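Since this database provides both smile/laugh segments and role segments, one natural analysis is to measure how much of each expression occurs while a person is speaking versus listening. A minimal interval-overlap sketch, assuming both annotation types have been loaded as `(start, end, label)` tuples in seconds for the same interlocutor; the tuple layout and the function `overlap_by_role` are hypothetical, not part of the released files.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Interval = Tuple[float, float, str]  # (start_s, end_s, label)


def overlap_by_role(
    expressions: Iterable[Interval], roles: Iterable[Interval]
) -> Dict[Tuple[str, str], float]:
    """Total seconds of overlap for each (expression label, role label) pair."""
    role_list = list(roles)
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    for e_start, e_end, e_label in expressions:
        for r_start, r_end, r_label in role_list:
            overlap = min(e_end, r_end) - max(e_start, r_start)
            if overlap > 0:  # touching or disjoint intervals contribute nothing
                totals[(e_label, r_label)] += overlap
    return dict(totals)


smiles = [(2.0, 5.0, "smile"), (9.0, 10.0, "laugh")]
roles = [(0.0, 4.0, "speaker"), (4.0, 12.0, "listener")]
print(overlap_by_role(smiles, roles))
# {('smile', 'speaker'): 2.0, ('smile', 'listener'): 1.0, ('laugh', 'listener'): 1.0}
```

The nested loop is quadratic in the number of segments, which is acceptable for five-minute conversations; a sweep over sorted intervals would scale better for longer recordings.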

Dataset Card Contact

Paul Rosin

Cardiff University

rosinpl@cardiff.ac.uk
