Core ML Converted Model:

- This model was converted to Core ML for use on Apple Silicon devices. Conversion instructions can be found here.

- Provide the model to an app such as Mochi Diffusion (GitHub / Discord) to generate images.

- `split_einsum` version is compatible with all compute unit options including Neural Engine.

- `original` version is only compatible with `CPU & GPU` option.

- Custom resolution versions are tagged accordingly.

- The `vae-ft-mse-840000-ema-pruned.ckpt` VAE is embedded into the model.

- This model was converted with a `vae-encoder` for use with `image2image`.

- This model is `fp16`.

- Descriptions are posted as-is from original model source.

- Not all features and/or results may be available in `CoreML` format.

- This model does not have the unet split into chunks.

- This model does not include a `safety checker` (for NSFW content).

- This model can be used with ControlNet.
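The compute-unit notes above can be sketched as a small lookup, which may help when scripting which variant to download. This is only an illustration: the unit names are plain strings chosen to mirror the `ComputeUnit` names used by `coremltools` (`CPU_ONLY`, `CPU_AND_GPU`, `CPU_AND_NE`, `ALL`), and the helper `is_compatible` is hypothetical, not part of any app or library.

```python
# Minimal sketch of the compatibility notes above.
# Assumption: unit names are plain strings mirroring coremltools' ComputeUnit
# names; the apps mentioned above may label these options differently.
ALL_UNITS = {"CPU_ONLY", "CPU_AND_GPU", "CPU_AND_NE", "ALL"}

COMPATIBLE_UNITS = {
    # split_einsum: compatible with all compute unit options, including Neural Engine
    "split_einsum": set(ALL_UNITS),
    # original: only compatible with the CPU & GPU option
    "original": {"CPU_AND_GPU"},
}


def is_compatible(variant: str, compute_unit: str) -> bool:
    """Return True if the model variant can run with the given compute unit."""
    if variant not in COMPATIBLE_UNITS:
        raise ValueError(f"unknown variant: {variant!r}")
    if compute_unit not in ALL_UNITS:
        raise ValueError(f"unknown compute unit: {compute_unit!r}")
    return compute_unit in COMPATIBLE_UNITS[variant]


if __name__ == "__main__":
    for variant in ("split_einsum", "original"):
        usable = sorted(u for u in ALL_UNITS if is_compatible(variant, u))
        print(variant, "->", usable)
```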

inkpunkDiffusion-v2_cn:

Source(s): CivitAI / Hugging Face

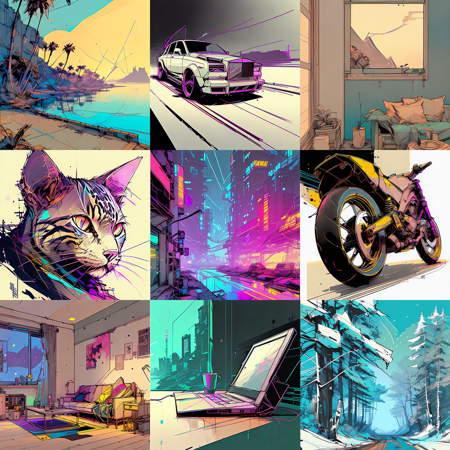

Inkpunk Diffusion v2

Finetuned Stable Diffusion model trained with Dreambooth.

Vaguely inspired by Gorillaz, FLCL, and Yoji Shinkawa

Use nvinkpunk in your prompts.
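If prompts are built in a script, a small helper can make sure the `nvinkpunk` token is always present. The sketch below is an assumption-laden illustration: the function name `with_trigger` is hypothetical, and placing the token at the start of the prompt is a choice made here, since the source only says to include it somewhere in the prompt.

```python
import re

TRIGGER = "nvinkpunk"  # trigger token named in the model description


def with_trigger(prompt: str, trigger: str = TRIGGER) -> str:
    """Return the prompt unchanged if it already contains the trigger token,
    otherwise prepend the token (prepending is an assumption, not a requirement)."""
    if re.search(rf"\b{re.escape(trigger)}\b", prompt, flags=re.IGNORECASE):
        return prompt
    return f"{trigger} {prompt.strip()}"


if __name__ == "__main__":
    print(with_trigger("portrait of an old sailor, stormy sea"))
    print(with_trigger("city street at night, nvinkpunk"))
```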

About Version 2

Improvements:

Exorcised the woman that seemed to be haunting the model and appearing in basically every prompt. (She still pops in here and there, I honestly don't know where she comes from.)

Faces are better overall, and characters are more responsive to descriptive language in prompts. Try playing around with emotions/nationality/age/poses to get the best results.

Hair anarchy has been toned down slightly, and more diverse hairstyles were added to the dataset to avoid everyone having tumbleweed hair. Again, descriptive language in prompts helps.

Full body poses are also more diverse, though keep in mind that wide angle shots tend to lead to distorted faces that may need to be touched up with img2img/inpainting.
