model #8 by bzxlZhou, opened

- README.md +5 -44
- configuration_baichuan.py +1 -1
- handler.py +0 -23
- tokenization_baichuan.py +5 -7

README.md

CHANGED

|

@@ -19,7 +19,6 @@ tasks:

|

|

| 19 |

<a href="https://github.com/baichuan-inc/Baichuan2" target="_blank">🦉GitHub</a> | <a href="https://github.com/baichuan-inc/Baichuan-7B/blob/main/media/wechat.jpeg?raw=true" target="_blank">💬WeChat</a>

|

| 20 |

</div>

|

| 21 |

<div align="center">

|

| 22 |

-

百川API支持搜索增强和192K长窗口,新增百川搜索增强知识库、限时免费!<br>

|

| 23 |

🚀 <a href="https://www.baichuan-ai.com/" target="_blank">百川大模型在线对话平台</a> 已正式向公众开放 🎉

|

| 24 |

</div>

|

| 25 |

|

|

@@ -28,13 +27,8 @@ tasks:

|

|

| 28 |

- [📖 模型介绍/Introduction](#Introduction)

|

| 29 |

- [⚙️ 快速开始/Quick Start](#Start)

|

| 30 |

- [📊 Benchmark评估/Benchmark Evaluation](#Benchmark)

|

| 31 |

-

- [👥 社区与生态/Community](#Community)

|

| 32 |

- [📜 声明与协议/Terms and Conditions](#Terms)

|

| 33 |

|

| 34 |

-

# 更新/Update

|

| 35 |

-

[2023.12.29] 🎉🎉🎉 我们发布了 **[Baichuan2-13B-Chat](https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat) v2** 版本。其中:

|

| 36 |

-

- 大幅提升了模型的综合能力,特别是数学和逻辑推理、复杂指令跟随能力。

|

| 37 |

-

- 使用时需指定revision=v2.0,详细方法参考[快速开始](#Start)

|

| 38 |

|

| 39 |

# <span id="Introduction">模型介绍/Introduction</span>

|

| 40 |

|

|

@@ -64,16 +58,9 @@ In the Baichuan 2 series models, we have utilized the new feature `F.scaled_dot_

|

|

| 64 |

import torch

|

| 65 |

from transformers import AutoModelForCausalLM, AutoTokenizer

|

| 66 |

from transformers.generation.utils import GenerationConfig

|

| 67 |

-

tokenizer = AutoTokenizer.from_pretrained("baichuan-inc/Baichuan2-13B-Chat",

|

| 68 |

-

|

| 69 |

-

|

| 70 |

-

trust_remote_code=True)

|

| 71 |

-

model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-13B-Chat",

|

| 72 |

-

revision="v2.0",

|

| 73 |

-

device_map="auto",

|

| 74 |

-

torch_dtype=torch.bfloat16,

|

| 75 |

-

trust_remote_code=True)

|

| 76 |

-

model.generation_config = GenerationConfig.from_pretrained("baichuan-inc/Baichuan2-13B-Chat", revision="v2.0")

|

| 77 |

messages = []

|

| 78 |

messages.append({"role": "user", "content": "解释一下“温故而知新”"})

|

| 79 |

response = model.chat(tokenizer, messages)

|

|

@@ -82,7 +69,6 @@ print(response)

|

|

| 82 |

|

| 83 |

这句话鼓励我们在学习和生活中不断地回顾和反思过去的经验,从而获得新的启示和成长。通过重温旧的知识和经历,我们可以发现新的观点和理解,从而更好地应对不断变化的世界和挑战。

|

| 84 |

```

|

| 85 |

-

**注意:如需使用老版本,需手动指定revision参数,设置revision=v1.0**

|

| 86 |

|

| 87 |

# <span id="Benchmark">Benchmark 结果/Benchmark Evaluation</span>

|

| 88 |

|

|

@@ -129,16 +115,6 @@ In addition to the [Baichuan2-7B-Base](https://huggingface.co/baichuan-inc/Baich

|

|

| 129 |

|

| 130 |

|

| 131 |

|

| 132 |

-

# <span id="Community">社区与生态/Community</span>

|

| 133 |

-

|

| 134 |

-

## Intel 酷睿 Ultra 平台运行百川大模型

|

| 135 |

-

|

| 136 |

-

使用酷睿™/至强® 可扩展处理器或配合锐炫™ GPU等进行部署[Baichuan2-7B-Chat],[Baichuan2-13B-Chat]模型,推荐使用 BigDL-LLM([CPU], [GPU])以发挥更好推理性能。

|

| 137 |

-

|

| 138 |

-

详细支持信息可参考[中文操作手册](https://github.com/intel-analytics/bigdl-llm-tutorial/tree/main/Chinese_Version),包括用notebook支持,[加载,优化,保存方法](https://github.com/intel-analytics/bigdl-llm-tutorial/blob/main/Chinese_Version/ch_3_AppDev_Basic/3_BasicApp.ipynb)等。

|

| 139 |

-

|

| 140 |

-

When deploy on Core™/Xeon® Scalable Processors or with Arc™ GPU, BigDL-LLM ([CPU], [GPU]) is recommended to take full advantage of better inference performance.

|

| 141 |

-

|

| 142 |

# <span id="Terms">声明与协议/Terms and Conditions</span>

|

| 143 |

|

| 144 |

## 声明

|

|

@@ -156,21 +132,9 @@ We have done our best to ensure the compliance of the data used in the model tra

|

|

| 156 |

|

| 157 |

## 协议

|

| 158 |

|

| 159 |

-

|

| 160 |

-

1. 您或您的关联方的服务或产品的日均用户活跃量(DAU)低于100万。

|

| 161 |

-

2. 您或您的关联方不是软件服务提供商、云服务提供商。

|

| 162 |

-

3. 您或您的关联方不存在将授予您的商用许可,未经百川许可二次授权给其他第三方的可能。

|

| 163 |

-

|

| 164 |

-

在符合以上条件的前提下,您需要通过以下联系邮箱 opensource@baichuan-inc.com ,提交《Baichuan 2 模型社区许可协议》要求的申请材料。审核通过后,百川将特此授予您一个非排他性、全球性、不可转让、不可再许可、可撤销的商用版权许可。

|

| 165 |

-

|

| 166 |

-

The community usage of Baichuan 2 model requires adherence to [Apache 2.0](https://github.com/baichuan-inc/Baichuan2/blob/main/LICENSE) and [Community License for Baichuan2 Model](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base/resolve/main/Baichuan%202%E6%A8%A1%E5%9E%8B%E7%A4%BE%E5%8C%BA%E8%AE%B8%E5%8F%AF%E5%8D%8F%E8%AE%AE.pdf). The Baichuan 2 model supports commercial use. If you plan to use the Baichuan 2 model or its derivatives for commercial purposes, please ensure that your entity meets the following conditions:

|

| 167 |

-

|

| 168 |

-

1. The Daily Active Users (DAU) of your or your affiliate's service or product is less than 1 million.

|

| 169 |

-

2. Neither you nor your affiliates are software service providers or cloud service providers.

|

| 170 |

-

3. There is no possibility for you or your affiliates to grant the commercial license given to you, to reauthorize it to other third parties without Baichuan's permission.

|

| 171 |

-

|

| 172 |

-

Upon meeting the above conditions, you need to submit the application materials required by the Baichuan 2 Model Community License Agreement via the following contact email: opensource@baichuan-inc.com. Once approved, Baichuan will hereby grant you a non-exclusive, global, non-transferable, non-sublicensable, revocable commercial copyright license.

|

| 173 |

|

|

|

|

| 174 |

|

| 175 |

[GitHub]:https://github.com/baichuan-inc/Baichuan2

|

| 176 |

[Baichuan2]:https://github.com/baichuan-inc/Baichuan2

|

|

@@ -198,6 +162,3 @@ Upon meeting the above conditions, you need to submit the application materials

|

|

| 198 |

[opensource@baichuan-inc.com]: mailto:opensource@baichuan-inc.com

|

| 199 |

[训练过程heckpoint下载]: https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints

|

| 200 |

[百川智能]: https://www.baichuan-ai.com

|

| 201 |

-

|

| 202 |

-

[CPU]: https://github.com/intel-analytics/BigDL/tree/main/python/llm/example/CPU/HF-Transformers-AutoModels/Model/baichuan2

|

| 203 |

-

[GPU]: https://github.com/intel-analytics/BigDL/tree/main/python/llm/example/GPU/HF-Transformers-AutoModels/Model/baichuan2

|

|

|

|

| 19 |

<a href="https://github.com/baichuan-inc/Baichuan2" target="_blank">🦉GitHub</a> | <a href="https://github.com/baichuan-inc/Baichuan-7B/blob/main/media/wechat.jpeg?raw=true" target="_blank">💬WeChat</a>

|

| 20 |

</div>

|

| 21 |

<div align="center">

|

|

|

|

| 22 |

🚀 <a href="https://www.baichuan-ai.com/" target="_blank">百川大模型在线对话平台</a> 已正式向公众开放 🎉

|

| 23 |

</div>

|

| 24 |

|

|

|

|

| 27 |

- [📖 模型介绍/Introduction](#Introduction)

|

| 28 |

- [⚙️ 快速开始/Quick Start](#Start)

|

| 29 |

- [📊 Benchmark评估/Benchmark Evaluation](#Benchmark)

|

|

|

|

| 30 |

- [📜 声明与协议/Terms and Conditions](#Terms)

|

| 31 |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 32 |

|

| 33 |

# <span id="Introduction">模型介绍/Introduction</span>

|

| 34 |

|

|

|

|

| 58 |

import torch

|

| 59 |

from transformers import AutoModelForCausalLM, AutoTokenizer

|

| 60 |

from transformers.generation.utils import GenerationConfig

|

| 61 |

+

tokenizer = AutoTokenizer.from_pretrained("baichuan-inc/Baichuan2-13B-Chat", use_fast=False, trust_remote_code=True)

|

| 62 |

+

model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-13B-Chat", device_map="auto", torch_dtype=torch.bfloat16, trust_remote_code=True)

|

| 63 |

+

model.generation_config = GenerationConfig.from_pretrained("baichuan-inc/Baichuan2-13B-Chat")

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 64 |

messages = []

|

| 65 |

messages.append({"role": "user", "content": "解释一下“温故而知新”"})

|

| 66 |

response = model.chat(tokenizer, messages)

|

|

|

|

| 69 |

|

| 70 |

这句话鼓励我们在学习和生活中不断地回顾和反思过去的经验,从而获得新的启示和成长。通过重温旧的知识和经历,我们可以发现新的观点和理解,从而更好地应对不断变化的世界和挑战。

|

| 71 |

```

|

|

|

|

| 72 |

|

| 73 |

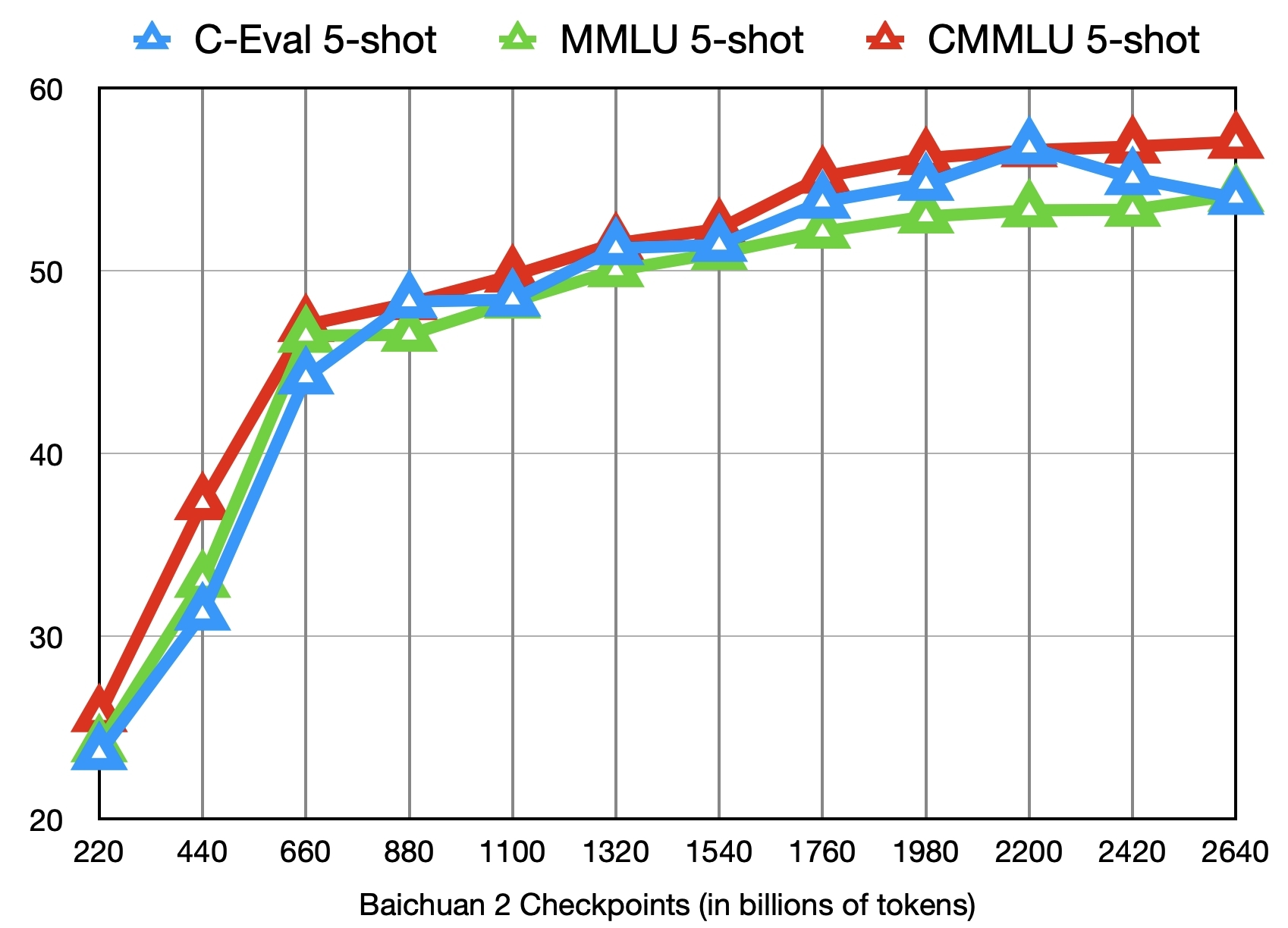

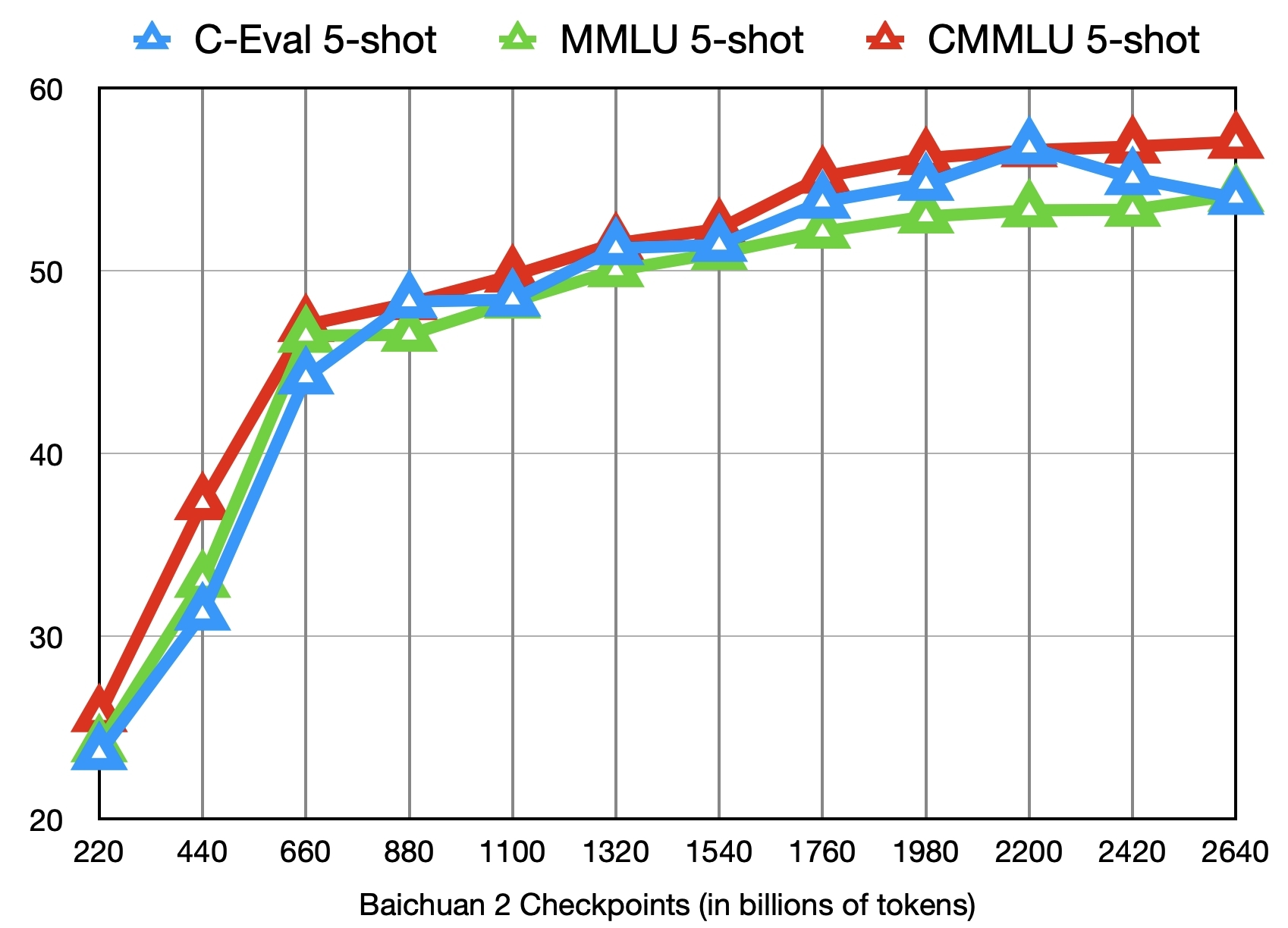

# <span id="Benchmark">Benchmark 结果/Benchmark Evaluation</span>

|

| 74 |

|

|

|

|

| 115 |

|

| 116 |

|

| 117 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 118 |

# <span id="Terms">声明与协议/Terms and Conditions</span>

|

| 119 |

|

| 120 |

## 声明

|

|

|

|

| 132 |

|

| 133 |

## 协议

|

| 134 |

|

| 135 |

+

Baichuan 2 模型的社区使用需遵循[《Baichuan 2 模型社区许可协议》]。Baichuan 2 支持商用。如果将 Baichuan 2 模型或其衍生品用作商业用途,请您按照如下方式联系许可方,以进行登记并向许可方申请书面授权:联系邮箱 [opensource@baichuan-inc.com]。

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 136 |

|

| 137 |

+

The use of the source code in this repository follows the open-source license Apache 2.0. Community use of the Baichuan 2 model must adhere to the [Community License for Baichuan 2 Model](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base/blob/main/Baichuan%202%E6%A8%A1%E5%9E%8B%E7%A4%BE%E5%8C%BA%E8%AE%B8%E5%8F%AF%E5%8D%8F%E8%AE%AE.pdf). Baichuan 2 supports commercial use. If you are using the Baichuan 2 models or their derivatives for commercial purposes, please contact the licensor in the following manner for registration and to apply for written authorization: Email opensource@baichuan-inc.com.

|

| 138 |

|

| 139 |

[GitHub]:https://github.com/baichuan-inc/Baichuan2

|

| 140 |

[Baichuan2]:https://github.com/baichuan-inc/Baichuan2

|

|

|

|

| 162 |

[opensource@baichuan-inc.com]: mailto:opensource@baichuan-inc.com

|

| 163 |

[训练过程heckpoint下载]: https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints

|

| 164 |

[百川智能]: https://www.baichuan-ai.com

|

|

|

|

|

|

|

|

|
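The Quick Start hunk removes the `revision="v2.0"` argument from the loading calls, so the README example now follows the repository's default branch. As a minimal sketch of how a caller could still pin a revision explicitly, the helper below only builds the keyword arguments; `build_load_kwargs` is a hypothetical name that does not exist in this repository, and no model is loaded here.

```python
from typing import Any, Dict, Optional


def build_load_kwargs(revision: Optional[str] = None) -> Dict[str, Any]:
    """Return keyword arguments for a `from_pretrained` call.

    Hypothetical helper for illustration only. `revision` and
    `trust_remote_code` are standard `from_pretrained` parameters.
    """
    kwargs: Dict[str, Any] = {"trust_remote_code": True}
    if revision is not None:
        # Pin an explicit branch, tag, or commit instead of the default branch.
        kwargs["revision"] = revision
    return kwargs


# Default branch, as in the README after this change.
print(build_load_kwargs())
# Pinned revision, as in the README before this change.
print(build_load_kwargs("v2.0"))
```

The resulting dictionary could then be unpacked into the call, for example `AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-13B-Chat", **build_load_kwargs("v2.0"))`.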

configuration_baichuan.py

CHANGED

@@ -9,7 +9,7 @@ class BaichuanConfig(PretrainedConfig):
 
     def __init__(
         self,
-        vocab_size=
+        vocab_size=64000,
         hidden_size=5120,
         intermediate_size=13696,
         num_hidden_layers=40,
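The default `vocab_size` matters whenever the value is not supplied by the checkpoint's own config, because it sets the number of rows in the token embedding table. A rough sketch of the arithmetic, assuming an input embedding of shape `(vocab_size, hidden_size)` (the usual layout, not confirmed by this diff) and using the hypothetical helper `embedding_params`:

```python
def embedding_params(vocab_size: int, hidden_size: int) -> int:
    """Parameter count of a (vocab_size x hidden_size) embedding table."""
    return vocab_size * hidden_size


# Defaults shown on the new side of the diff.
print(embedding_params(64000, 5120))  # 327680000
```

A checkpoint trained with a different vocabulary size would have an embedding table of a different shape, so the default and the stored weights need to agree.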

handler.py

DELETED

@@ -1,23 +0,0 @@
-import torch
-from typing import Dict, List, Any
-from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline
-from transformers.generation.utils import GenerationConfig
-
-# get dtype
-dtype = torch.bfloat16 if torch.cuda.get_device_capability()[0] == 8 else torch.float16
-
-class EndpointHandler:
-    def __init__(self, path=""):
-        # load the model
-        self.model = AutoModelForCausalLM.from_pretrained(path, device_map="auto", torch_dtype=dtype, trust_remote_code=True)
-        self.model.generation_config = GenerationConfig.from_pretrained(path)
-        self.tokenizer = AutoTokenizer.from_pretrained(path, use_fast=False, trust_remote_code=True)
-
-    def __call__(self, data: Any) -> List[List[Dict[str, float]]]:
-        inputs = data.pop("inputs", data)
-        # ignoring parameters! Default to configs in generation_config.json.
-        messages = [{"role": "user", "content": inputs}]
-        response = self.model.chat(self.tokenizer, messages)
-        if torch.backends.mps.is_available():
-            torch.mps.empty_cache()
-        return [{'generated_text': response}]
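The request handling in the deleted `EndpointHandler.__call__` can be illustrated without loading the model. The sketch below keeps the same `inputs` extraction and response shape but swaps in `FakeChatModel`, a hypothetical stand-in that simply echoes the prompt; `SketchHandler` is likewise an illustrative name, not code from the repository.

```python
from typing import Any, Dict, List


class FakeChatModel:
    """Hypothetical stand-in for the real model; echoes the last user message."""

    def chat(self, tokenizer: Any, messages: List[Dict[str, str]]) -> str:
        return "echo: " + messages[-1]["content"]


class SketchHandler:
    """Mirrors the request handling of the deleted EndpointHandler.__call__."""

    def __init__(self) -> None:
        self.model = FakeChatModel()
        self.tokenizer = None  # not needed by the stub

    def __call__(self, data: Dict[str, Any]) -> List[Dict[str, str]]:
        # Same extraction as the handler: take "inputs" if present,
        # otherwise fall back to the whole payload.
        inputs = data.pop("inputs", data)
        # Any "parameters" entry is ignored, as the original comment notes.
        messages = [{"role": "user", "content": inputs}]
        response = self.model.chat(self.tokenizer, messages)
        return [{"generated_text": response}]


handler = SketchHandler()
print(handler({"inputs": "hello", "parameters": {"max_new_tokens": 8}}))
```

Note that the value actually returned is a list of dictionaries with string values, which is what the sketch annotates; the deleted handler declared `List[List[Dict[str, float]]]` instead.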

tokenization_baichuan.py

CHANGED

@@ -68,13 +68,6 @@ class BaichuanTokenizer(PreTrainedTokenizer):
             if isinstance(pad_token, str)
             else pad_token
         )
-
-        self.vocab_file = vocab_file
-        self.add_bos_token = add_bos_token
-        self.add_eos_token = add_eos_token
-        self.sp_model = spm.SentencePieceProcessor(**self.sp_model_kwargs)
-        self.sp_model.Load(vocab_file)
-
         super().__init__(
             bos_token=bos_token,
             eos_token=eos_token,
@@ -86,6 +79,11 @@ class BaichuanTokenizer(PreTrainedTokenizer):
             clean_up_tokenization_spaces=clean_up_tokenization_spaces,
             **kwargs,
         )
+        self.vocab_file = vocab_file
+        self.add_bos_token = add_bos_token
+        self.add_eos_token = add_eos_token
+        self.sp_model = spm.SentencePieceProcessor(**self.sp_model_kwargs)
+        self.sp_model.Load(vocab_file)
 
     def __getstate__(self):
         state = self.__dict__.copy()
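This hunk moves the attribute assignments and the SentencePiece load from before `super().__init__()` to after it. The order matters if the base constructor calls methods that depend on those attributes. Whether `PreTrainedTokenizer.__init__` does so depends on the installed transformers version; the sketch below assumes it does and uses only hypothetical classes (`Base`, `AssignBeforeSuper`, `AssignAfterSuper`) to show the effect.

```python
class Base:
    """Hypothetical base class whose constructor calls a subclass hook."""

    def __init__(self) -> None:
        # Runs before any subclass code placed after super().__init__().
        self.size_at_init = self.vocab_size()

    def vocab_size(self) -> int:
        raise NotImplementedError


class AssignBeforeSuper(Base):
    def __init__(self, pieces):
        self.pieces = pieces  # state is ready before the base constructor runs
        super().__init__()

    def vocab_size(self) -> int:
        return len(self.pieces)


class AssignAfterSuper(Base):
    def __init__(self, pieces):
        super().__init__()  # base calls vocab_size() before pieces exists
        self.pieces = pieces

    def vocab_size(self) -> int:
        return len(self.pieces)


print(AssignBeforeSuper(["a", "b"]).size_at_init)

try:
    AssignAfterSuper(["a", "b"])
except AttributeError as exc:
    print("fails:", exc)
```

If the base constructor never touches the hook, both orderings behave the same, which is why the safe ordering depends on the library version in use.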