SARITA

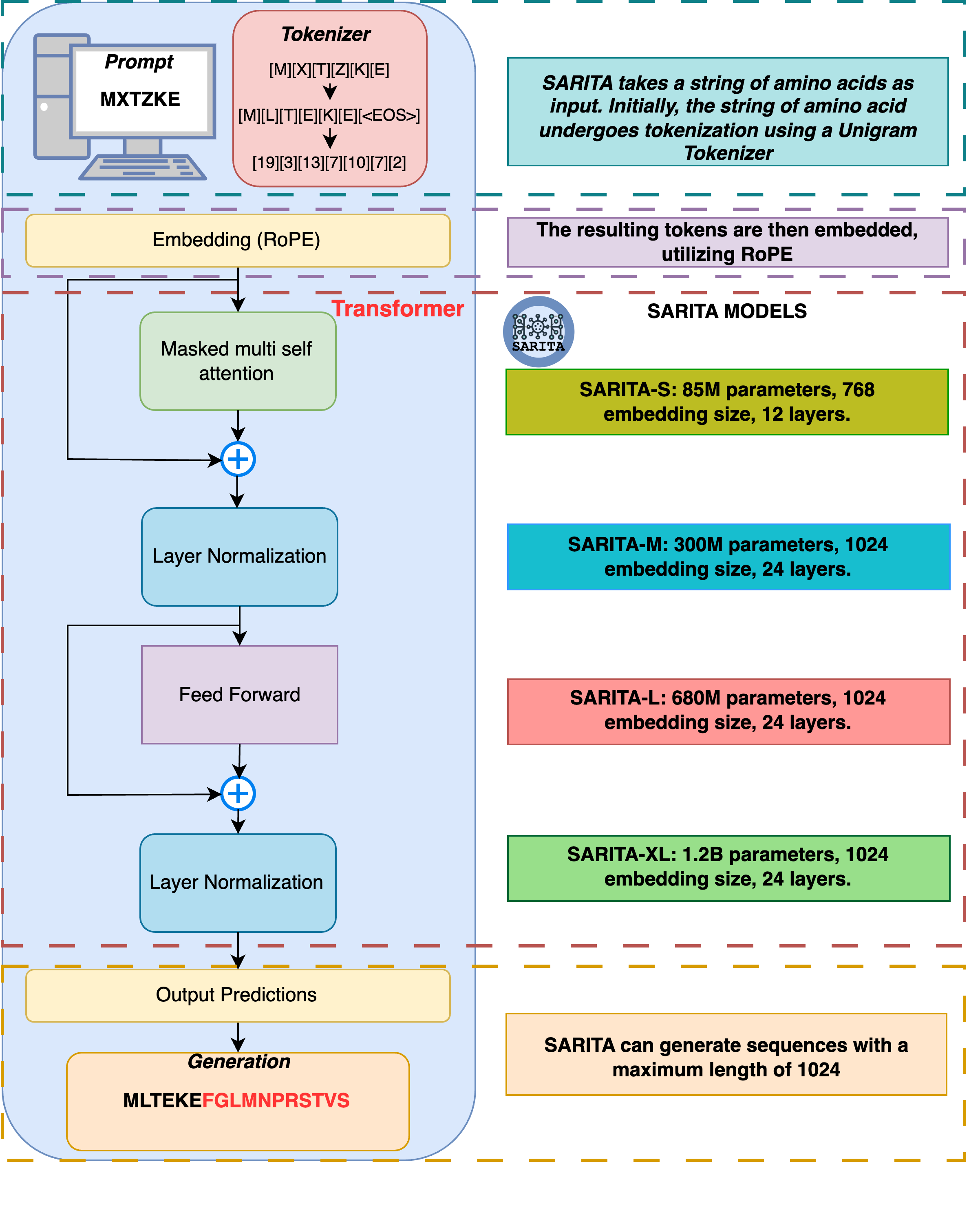

SARITA (SARS-CoV-2 RITA) is an LLM designed to generate new, synthetic, high-quality, and highly realistic SARS-CoV-2 S1 subunits. SARITA builds on RITA, a state-of-the-art autoregressive language model for general protein sequence generation with up to 1.2 billion parameters, through continual learning. To capture the unique biological features of the Spike protein and obtain a specialized model, we continue pre-training RITA on high-quality SARS-CoV-2 S1 sequences from GISAID. To accommodate different computational budgets, SARITA comes in four sizes: the smallest model has 85 million parameters, while the largest has 1.2 billion. SARITA generates new S1 sequences using as input the 14-amino-acid sequence that precedes the S1 subunit. The results of SARITA are reported in the following preprint: https://www.biorxiv.org/content/10.1101/2024.12.10.627777v1. The code to train and evaluate the model is available on GitHub.
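As a rough illustration of the prompting scheme described above, the sketch below validates a 14-residue prompt and passes it to a causal language model through the Hugging Face `transformers` API. Several details are assumptions rather than facts from this card: the default `model_id` and `tokenizer_id` (taken from the repository names that appear on this page), the use of the first 14 residues of the reference Spike protein as the prompt, and the sampling settings, which are purely illustrative. The helper names `validate_prompt` and `generate_s1` are hypothetical.

```python
# Assumption: the first 14 residues of the reference Spike protein are used
# as the prompt that precedes the S1 subunit.
REFERENCE_PROMPT = "MFVFLVLLPLVSSQ"
VALID_RESIDUES = set("ACDEFGHIKLMNPQRSTVWY")  # the 20 standard amino acids


def validate_prompt(prompt, expected_length=14):
    """Normalize a prompt and check its length and alphabet."""
    cleaned = prompt.strip().upper()
    if len(cleaned) != expected_length:
        raise ValueError(
            f"expected {expected_length} residues, got {len(cleaned)}"
        )
    unknown = set(cleaned) - VALID_RESIDUES
    if unknown:
        raise ValueError(f"non-standard residues in prompt: {sorted(unknown)}")
    return cleaned


def generate_s1(
    prompt=REFERENCE_PROMPT,
    model_id="SimoRancati/SARITA",    # assumption: repository name from this page
    tokenizer_id="lightonai/RITA_l",  # assumption: tokenizer of the base model
    num_sequences=2,
    max_length=1024,                  # the card states a 1024-token limit
    top_k=50,                         # illustrative sampling setting
):
    """Sample synthetic sequences that continue the given prompt."""
    # Imported lazily so the validation helper works without transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(tokenizer_id)
    # RITA-style checkpoints ship custom model code, hence trust_remote_code.
    model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True)

    inputs = tokenizer(validate_prompt(prompt), return_tensors="pt")
    outputs = model.generate(
        inputs["input_ids"],
        do_sample=True,
        top_k=top_k,
        max_length=max_length,
        num_return_sequences=num_sequences,
    )
    # Remove any whitespace the tokenizer may insert between residues.
    return [
        tokenizer.decode(ids, skip_special_tokens=True).replace(" ", "")
        for ids in outputs
    ]
```

Calling `generate_s1()` downloads the weights, so it is left out of the block. Before relying on the output, check how the tokenizer handles special tokens and whether each size-specific checkpoint lives under a different repository name.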

SARITA models trained with high-quality SARS-CoV-2 S1 sequences from December 2019 - March 2021 (click on the model's name)

| Model | #Params | d_model | layers |
|---|---|---|---|
| Small | 85M | 768 | 12 |
| Medium | 300M | 1024 | 24 |
| Large | 680M | 1536 | 24 |
| XLarge | 1.2B | 2048 | 24 |

SARITA models trained with high-quality SARS-CoV-2 S1 sequences from December 2019 - August 2024 (click on the model's name)

| Model | #Params | d_model | layers |
|---|---|---|---|
| Small | 85M | 768 | 12 |
| Medium | 300M | 1024 | 24 |
| Large | 680M | 1536 | 24 |
| XLarge | 1.2B | 2048 | 24 |
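The parameter counts in the tables can be roughly reproduced from `d_model` and `layers` alone. The sketch below uses the common rule of thumb for GPT-style decoders, about 12 × layers × d_model² weights, which assumes four d_model × d_model attention projections plus a feed-forward block with a 4× hidden expansion, and ignores embeddings, biases, and normalization weights. The 4× expansion is an assumption; this card does not state the feed-forward width.

```python
# (d_model, layers) for each size, copied from the tables above.
CONFIGS = {
    "Small": (768, 12),
    "Medium": (1024, 24),
    "Large": (1536, 24),
    "XLarge": (2048, 24),
}


def approx_params(d_model, n_layers):
    """Estimate decoder weights: 4*d^2 (attention) + 8*d^2 (MLP) per layer.

    Assumes a 4x feed-forward expansion; embeddings and biases are ignored.
    """
    return 12 * n_layers * d_model ** 2


for name, (d_model, n_layers) in CONFIGS.items():
    print(f"{name}: {approx_params(d_model, n_layers) / 1e6:.0f}M")
# Small: 85M
# Medium: 302M
# Large: 679M
# XLarge: 1208M
```

The estimates land within about 1% of the listed sizes, which suggests the listed counts are dominated by the transformer layers rather than by the small amino acid vocabulary.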

Architecture

The SARITA architecture is a stack of decoder-only transformer layers, inspired by GPT-3. It employs Rotary Positional Embeddings (RoPE) to improve the model's ability to capture positional relationships within the input. SARITA is available in four configurations: SARITA-S, with 85 million parameters, an embedding size of 768, and 12 transformer layers; SARITA-M, with 300 million parameters, an embedding size of 1024, and 24 layers; SARITA-L, with 680 million parameters, an embedding size of 1536, and 24 layers; and SARITA-XL, with 1.2 billion parameters, an embedding size of 2048, and 24 layers. All SARITA models can generate sequences up to 1024 tokens long.

SARITA uses the Unigram model for tokenization, where each amino acid is represented as a single token, reflecting its unique role in protein structure and function. The tokenizer also includes special tokens, such as a padding token for shorter sequences and an end-of-sequence token for marking sequence ends, ensuring consistency across datasets. This process reduces variability and enhances the model's ability to learn meaningful patterns from protein sequences. Finally, each token is transformed into a numerical representation using a look-up table.
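The tokenization steps described above (one token per amino acid, an end-of-sequence marker, padding, and a look-up table) can be sketched with a toy tokenizer. This is not the Unigram tokenizer shipped with the model: the token spellings `<pad>` and `<eos>`, the id assignments, and the function names `encode` and `decode` are all hypothetical and chosen only for illustration.

```python
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard amino acids
PAD, EOS = "<pad>", "<eos>"           # hypothetical special-token spellings

# Look-up table from token to integer id (ids are illustrative).
VOCAB = {PAD: 0, EOS: 1}
VOCAB.update({aa: i + 2 for i, aa in enumerate(AMINO_ACIDS)})
ID_TO_TOKEN = {i: tok for tok, i in VOCAB.items()}


def encode(sequence, max_length):
    """Map each residue to its id, append EOS, then right-pad to max_length."""
    ids = [VOCAB[aa] for aa in sequence] + [VOCAB[EOS]]
    if len(ids) > max_length:
        raise ValueError("sequence plus EOS exceeds max_length")
    return ids + [VOCAB[PAD]] * (max_length - len(ids))


def decode(ids):
    """Invert encode: stop at EOS and skip padding."""
    residues = []
    for token_id in ids:
        token = ID_TO_TOKEN[token_id]
        if token == EOS:
            break
        if token != PAD:
            residues.append(token)
    return "".join(residues)


print(encode("MFV", max_length=6))          # [12, 6, 19, 1, 0, 0]
print(decode(encode("MFV", max_length=6)))  # MFV
```

Padding every sequence in a batch to the same length is what lets sequences of different sizes be stacked into a single tensor, while the end-of-sequence id tells the model where a protein stops.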

Model description

SARITA is an LLM with up to 1.2B parameters, based on the GPT-3 architecture, designed to generate high-quality synthetic SARS-CoV-2 Spike sequences. SARITA is trained via continual learning on the pre-existing protein model RITA.

Intended uses & limitations

This model can be used to generate synthetic Spike protein sequences of the SARS-CoV-2 virus.

Framework versions

- Transformers 4.20.1

- PyTorch 1.9.0+cu111

- Datasets 2.18.0

- Tokenizers 0.12.1

Model tree for SimoRancati/SARITA

Base model

lightonai/RITA_l