Eurstoria-106B

A decoder-only Llama-3 model made by combining two finetuned Llama-3 70B models into one.

Merge Details

Merge Method

This model was merged using the passthrough merge method.

Models Merged

The following models were included in the merge:

- Steelskull/L3-MS-Astoria-70b
- Sao10K/L3-70B-Euryale-v2.1

Configuration

The following YAML configuration was used to produce this model:

slices:
  - sources:
      - model: Steelskull/L3-MS-Astoria-70b
        layer_range: [0, 16]
  - sources:
      - model: Sao10K/L3-70B-Euryale-v2.1
        layer_range: [8, 24]
  - sources:
      - model: Steelskull/L3-MS-Astoria-70b
        layer_range: [25, 40]
  - sources:
      - model: Sao10K/L3-70B-Euryale-v2.1
        layer_range: [33, 48]
  - sources:
      - model: Steelskull/L3-MS-Astoria-70b
        layer_range: [41, 56]
  - sources:
      - model: Sao10K/L3-70B-Euryale-v2.1
        layer_range: [49, 64]
  - sources:
      - model: Steelskull/L3-MS-Astoria-70b
        layer_range: [57, 72]
  - sources:
      - model: Sao10K/L3-70B-Euryale-v2.1
        layer_range: [65, 80]
merge_method: passthrough
dtype: float16
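As a rough sanity check on the "106B" in the name, the sketch below counts the layers stacked by the slices in the configuration above and estimates the resulting parameter count. It assumes each `layer_range` is end-exclusive, and the architecture constants are the commonly published Llama-3 70B values, which this card does not state. All function names are illustrative only.

```python
# Slices copied from the configuration above: (model, start, end).
# Assumption: each range is end-exclusive, so [0, 16] covers 16 layers.
SLICES = [
    ("Steelskull/L3-MS-Astoria-70b", 0, 16),
    ("Sao10K/L3-70B-Euryale-v2.1", 8, 24),
    ("Steelskull/L3-MS-Astoria-70b", 25, 40),
    ("Sao10K/L3-70B-Euryale-v2.1", 33, 48),
    ("Steelskull/L3-MS-Astoria-70b", 41, 56),
    ("Sao10K/L3-70B-Euryale-v2.1", 49, 64),
    ("Steelskull/L3-MS-Astoria-70b", 57, 72),
    ("Sao10K/L3-70B-Euryale-v2.1", 65, 80),
]

# Assumed Llama-3 70B architecture values (not taken from this card).
HIDDEN = 8192
INTERMEDIATE = 28672
NUM_HEADS = 64
NUM_KV_HEADS = 8
VOCAB = 128256


def merged_layer_count(slices):
    """Total number of transformer layers after stacking every slice."""
    return sum(end - start for _, start, end in slices)


def params_per_layer():
    """Weights in one decoder layer: attention, MLP, and two RMSNorms."""
    head_dim = HIDDEN // NUM_HEADS
    kv_dim = NUM_KV_HEADS * head_dim
    attention = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * kv_dim  # q, o and k, v
    mlp = 3 * HIDDEN * INTERMEDIATE  # gate, up, and down projections
    norms = 2 * HIDDEN
    return attention + mlp + norms


def estimated_params(num_layers):
    """Decoder layers plus input embeddings, untied output head, final norm."""
    embeddings = 2 * VOCAB * HIDDEN
    final_norm = HIDDEN
    return num_layers * params_per_layer() + embeddings + final_norm


layers = merged_layer_count(SLICES)
print(layers, round(estimated_params(layers) / 1e9, 1))
```

Under these assumptions the script prints `122 106.5`: 122 stacked layers and roughly 106.5B parameters, consistent with the model name.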

Prompting Format

Both source models are based on Llama 3 Instruct, so the default Llama 3 Instruct prompt format should work fine.
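For reference, here is a minimal sketch of how a Llama 3 Instruct prompt is laid out. The helper name `build_llama3_prompt` is hypothetical. In practice the tokenizer's bundled chat template would normally do this for you, assuming the merged repository ships one.

```python
def build_llama3_prompt(messages):
    """Render a list of {"role", "content"} dicts in Llama 3 Instruct layout.

    Hypothetical helper for illustration only; it mirrors the standard
    Llama 3 Instruct special tokens and ends with an open assistant turn.
    """
    prompt = "<|begin_of_text|>"
    for message in messages:
        prompt += (
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    # Leave the assistant header open so the model writes the reply.
    prompt += "<|start_header_id|>assistant<|end_header_id|>\n\n"
    return prompt


example = build_llama3_prompt(
    [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello!"},
    ]
)
print(example)
```

Generation should stop when the model emits `<|eot_id|>`.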

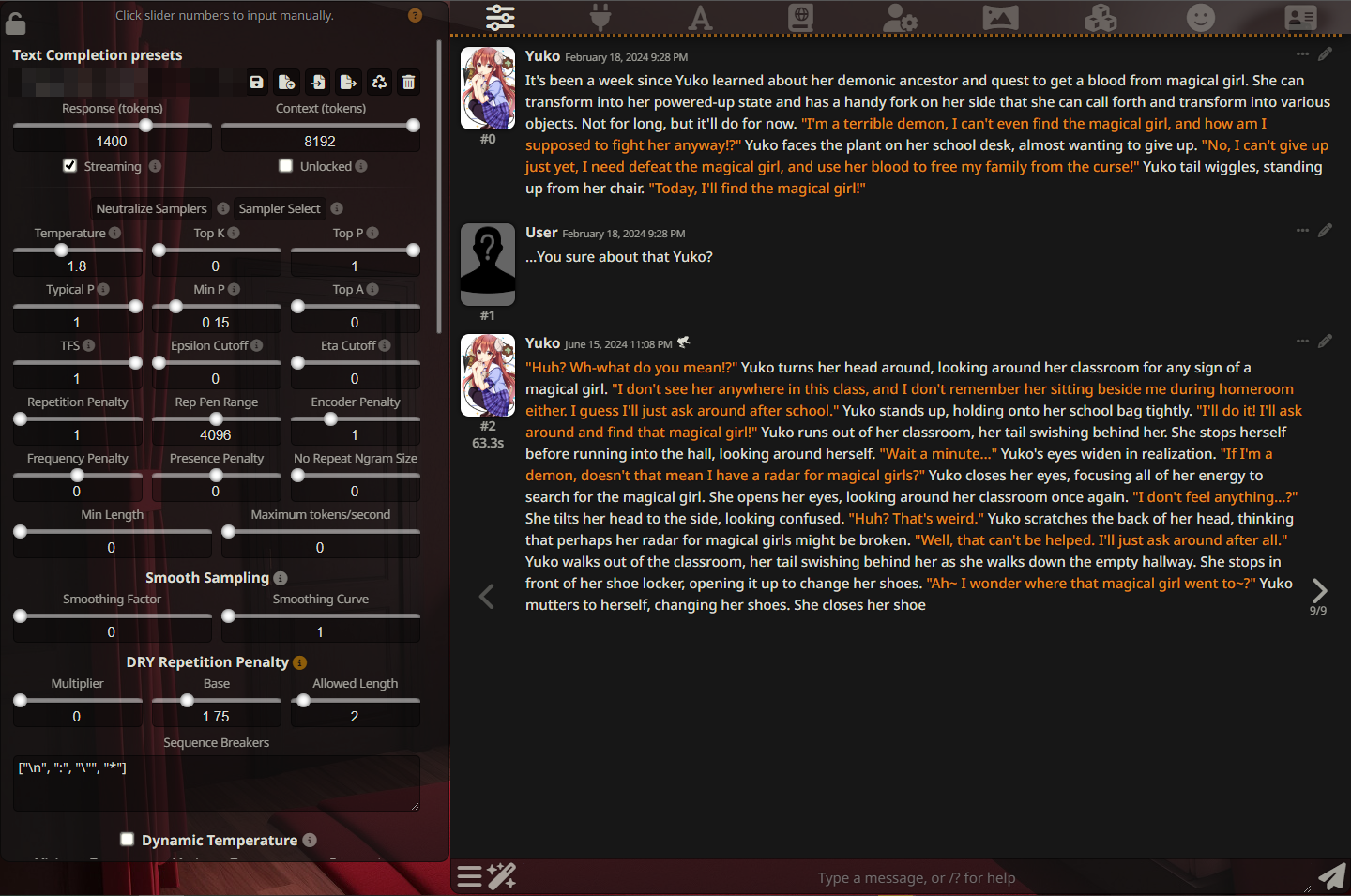

Screenshots

A screenshot to confirm that "it works".

Benchmarks

No one is willing to bench a 106B... right?

Acknowledgements

The original ratios come from Goliath and are used as is. Thanks to both Alpindale and @Undi95 for their initial work.

Credit goes to @chargoddard for developing mergekit, the framework used to merge the model.
