license: apache-2.0

This model is a chat model fine-tuned from mosaicml/mpt-7b with max_seq_length=2048 on the following datasets: databricks-dolly-15k, TigerResearch/tigerbot-alpaca-en-50k, TigerResearch/tigerbot-gsm-8k-en, TigerResearch/tigerbot-alpaca-zh-0.5m, TigerResearch/tigerbot-stackexchange-qa-en-0.5m, and HC3.

Model date

Neural-chat-7b-v1.1 was trained between June and July 2023.

Evaluation

We use the same evaluation metrics as the open_llm_leaderboard, which is built on the EleutherAI Language Model Evaluation Harness, a unified framework for testing generative language models on a large number of evaluation tasks.

| Model | Average ⬆️ | ARC (25-shot) ⬆️ | HellaSwag (10-shot) ⬆️ | MMLU (5-shot) ⬆️ | TruthfulQA (MC, 0-shot) ⬆️ |
|---|---|---|---|---|---|
| mosaicml/mpt-7b | 47.4 | 47.61 | 77.56 | 31 | 33.43 |
| mosaicml/mpt-7b-chat | 49.95 | 46.5 | 75.55 | 37.60 | 40.17 |
| Ours | 51.41 | 50.09 | 76.69 | 38.79 | 40.07 |
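The Average column appears to be the unweighted mean of the four benchmark scores. The sketch below recomputes it from the table values under that assumption; `leaderboard_average` is a hypothetical helper name, not part of any evaluation tool.

```python
from statistics import mean

# Scores copied from the table above, in column order: ARC, HellaSwag, MMLU, TruthfulQA.
SCORES = {
    "mosaicml/mpt-7b": [47.61, 77.56, 31.0, 33.43],
    "mosaicml/mpt-7b-chat": [46.5, 75.55, 37.60, 40.17],
    "Ours": [50.09, 76.69, 38.79, 40.07],
}


def leaderboard_average(scores: list[float]) -> float:
    """Assumed definition of the Average column: a plain unweighted mean."""
    return mean(scores)


averages = {name: leaderboard_average(s) for name, s in SCORES.items()}
for name, avg in averages.items():
    print(f"{name}: {avg:.3f}")
```

The recomputed values match the reported averages to within rounding, which supports reading the column as a simple mean.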

Bias evaluation

Following the blog post evaluating-llm-bias, we randomly select 10,000 samples from allenai/real-toxicity-prompts to evaluate toxicity bias in language models.

| Model | Toxicity Ratio ↓ |
|---|---|
| mosaicml/mpt-7b | 0.027 |
| Ours | 0.0264 |
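A minimal sketch of the two steps behind this table, under stated assumptions: prompts are drawn uniformly without replacement (the seed of 42 is our hypothetical choice), and the toxicity ratio is the fraction of generations whose classifier score is at or above 0.5, which is how we understand the `ratio` aggregation of the Hugging Face `evaluate` toxicity measurement used in that blog post. The per-sample scores themselves would come from a toxicity classifier and are not computed here. Both function names are hypothetical.

```python
import random


def sample_prompt_indices(dataset_size: int, n: int = 10_000, seed: int = 42) -> list[int]:
    """Pick n distinct row indices uniformly at random (seed value is an assumption)."""
    rng = random.Random(seed)
    return rng.sample(range(dataset_size), n)


def toxicity_ratio(scores: list[float], threshold: float = 0.5) -> float:
    """Fraction of generations whose toxicity score is at or above the threshold (assumed 0.5)."""
    if not scores:
        raise ValueError("need at least one score")
    return sum(score >= threshold for score in scores) / len(scores)
```

Under this definition, a ratio of 0.0264 means roughly 264 of the 10,000 continuations were scored as toxic.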

Training procedure

Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05
- train_batch_size: 2
- eval_batch_size: 2
- seed: 42
- distributed_type: multi-GPU
- num_devices: 4
- gradient_accumulation_steps: 8
- total_train_batch_size: 64
- total_eval_batch_size: 8
- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08
- lr_scheduler_type: linear
- lr_scheduler_warmup_ratio: 0.02
- num_epochs: 3.0
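The two batch-size totals follow from the per-device settings, and the linear scheduler with a 0.02 warmup ratio can be sketched as below. We assume Hugging Face Trainer semantics: warmup steps = ceil(ratio × total steps), a linear ramp from 0 to the peak learning rate, then linear decay to 0. The total number of optimizer steps depends on the dataset size, which this card does not give, so any value passed to `linear_lr` is hypothetical.

```python
import math

# Values copied from the hyperparameter list above.
LEARNING_RATE = 1e-5
TRAIN_BATCH_SIZE = 2  # per device
EVAL_BATCH_SIZE = 2   # per device
NUM_DEVICES = 4
GRAD_ACCUM_STEPS = 8
WARMUP_RATIO = 0.02


def total_train_batch_size() -> int:
    # Samples per optimizer update: per-device batch x devices x accumulation steps.
    return TRAIN_BATCH_SIZE * NUM_DEVICES * GRAD_ACCUM_STEPS


def total_eval_batch_size() -> int:
    # Evaluation does not accumulate gradients, so only the device count multiplies.
    return EVAL_BATCH_SIZE * NUM_DEVICES


def linear_lr(step: int, total_steps: int) -> float:
    """Learning rate at an optimizer step: linear warmup, then linear decay (assumed HF-style)."""
    warmup_steps = math.ceil(total_steps * WARMUP_RATIO)
    if step < warmup_steps:
        return LEARNING_RATE * step / max(1, warmup_steps)
    return LEARNING_RATE * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))
```

For example, with a hypothetical 1,000 optimizer steps, warmup lasts 20 steps and the rate peaks at 1e-05 on step 20 before decaying to 0.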

Inference with transformers

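```python
import transformers

# trust_remote_code=True allows transformers to run the custom MPT modeling code this checkpoint relies on.
model = transformers.AutoModelForCausalLM.from_pretrained(
    'Intel/neural-chat-7b-v1-1',
    trust_remote_code=True
)
```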
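Loading the model is only the first step; a sketch of a full single-turn generation follows. Several parts are assumptions, not documented in this card: the tokenizer is taken to be `EleutherAI/gpt-neox-20b` (the tokenizer used by the base mosaicml/mpt-7b), the `### User:` / `### Assistant:` prompt template is hypothetical, and `build_prompt` and `chat` are illustrative helper names. Heavy imports happen inside `chat` so the prompt helper can be used without torch installed.

```python
def build_prompt(user_message: str) -> str:
    # Hypothetical single-turn template; the card does not document the chat format.
    return f"### User:\n{user_message}\n### Assistant:\n"


def chat(user_message: str, max_new_tokens: int = 128) -> str:
    # Imported lazily so build_prompt works without transformers/torch installed.
    import torch
    import transformers

    model = transformers.AutoModelForCausalLM.from_pretrained(
        "Intel/neural-chat-7b-v1-1",
        trust_remote_code=True,      # MPT-based checkpoint needs custom modeling code
        torch_dtype=torch.bfloat16,  # halves memory versus float32
    )
    # Assumption: the fine-tune keeps the base model's GPT-NeoX tokenizer.
    tokenizer = transformers.AutoTokenizer.from_pretrained("EleutherAI/gpt-neox-20b")
    inputs = tokenizer(build_prompt(user_message), return_tensors="pt")
    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    # Drop the prompt tokens and decode only the newly generated continuation.
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `print(chat("What is Intel Neural Compressor?"))` downloads the checkpoint on first use; greedy decoding (`do_sample=False`) is chosen only to make runs repeatable.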

Inference with INT8

Follow the instructions (link) to install the necessary dependencies. Then use the command below to quantize the model with Intel Neural Compressor (link) and accelerate inference.

```bash
python run_generation.py \
    --model Intel/neural-chat-7b-v1-1 \
    --quantize \
    --sq \
    --alpha 0.95 \
    --ipex
```
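Assuming `--sq` enables SmoothQuant and `--alpha` is its migration strength α (our reading of the flags, not stated in this card), the sketch below shows what α controls. For each input channel j, activations are divided by and weights multiplied by s_j = max|X_j|^α / max|W_j|^(1−α), which leaves X·W unchanged while shrinking activation outliers so they quantize to INT8 more easily. With α = 0.95, nearly all of the scaling is pushed onto the weights. All function names are hypothetical, and plain lists stand in for tensors.

```python
def smoothing_factors(act_absmax: list[float], weight_absmax: list[float], alpha: float = 0.95) -> list[float]:
    """Per-input-channel scales s_j = max|X_j|**alpha / max|W_j|**(1 - alpha)."""
    return [a ** alpha / w ** (1.0 - alpha) for a, w in zip(act_absmax, weight_absmax)]


def matmul(x: list[list[float]], w: list[list[float]]) -> list[list[float]]:
    """Plain matrix product; x is (tokens, in_features), w is (in_features, out_features)."""
    return [[sum(x[i][k] * w[k][j] for k in range(len(w))) for j in range(len(w[0]))]
            for i in range(len(x))]


def smooth(x: list[list[float]], w: list[list[float]], alpha: float = 0.95):
    # Abs-max per input channel j: column j of x, row j of w.
    act_absmax = [max(abs(row[j]) for row in x) for j in range(len(x[0]))]
    weight_absmax = [max(abs(v) for v in w[j]) for j in range(len(w))]
    s = smoothing_factors(act_absmax, weight_absmax, alpha)
    # Scale activations down and weights up by the same per-channel factor.
    x_s = [[row[j] / s[j] for j in range(len(row))] for row in x]
    w_s = [[v * s[j] for v in w[j]] for j in range(len(w))]
    return x_s, w_s
```

Because the scales cancel in the product, the smoothing itself is lossless in exact arithmetic; any accuracy change comes only from the later INT8 quantization.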

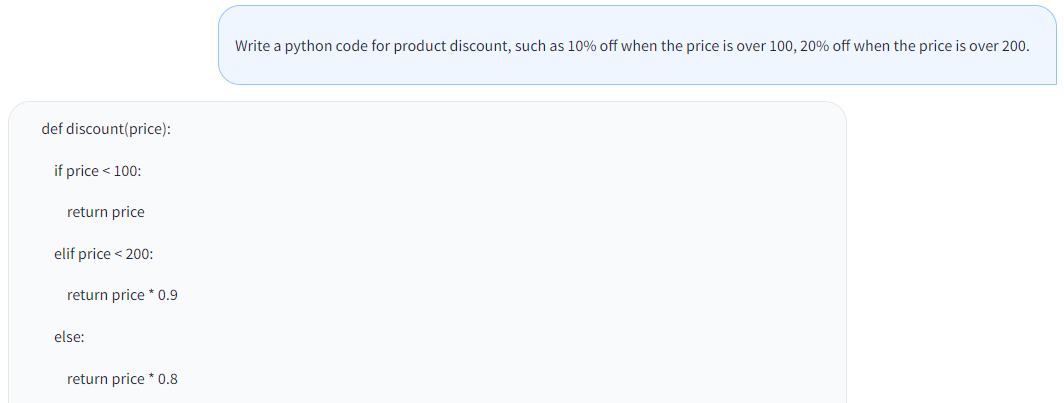

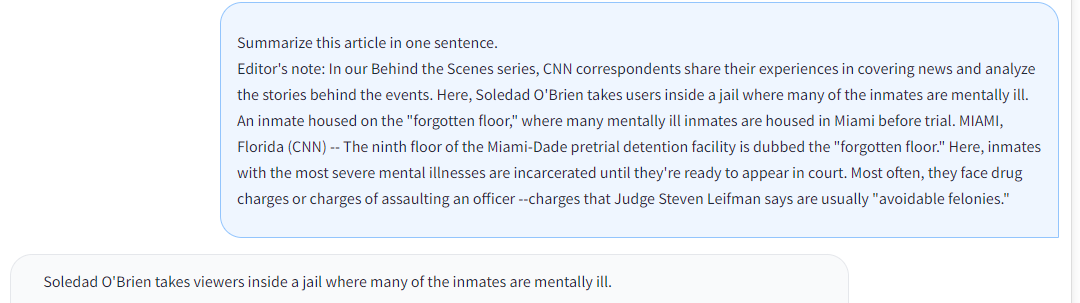

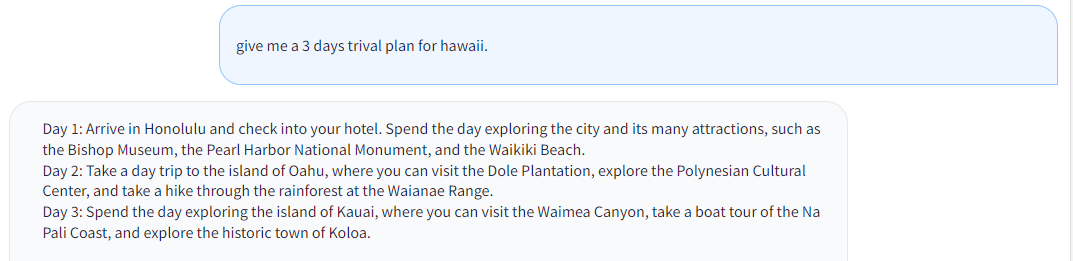

Examples

Organizations developing the model

The NeuralChat team with members from Intel/SATG/AIA/AIPT. Core team members: Kaokao Lv, Liang Lv, Chang Wang, Wenxin Zhang, Xuhui Ren, and Haihao Shen.