Index-1.9B-32K

Model Overview

Index-1.9B-32K is a language model with only 1.9 billion parameters, yet it supports a context length of 32K tokens, which means this very small model can read a document of more than 35,000 words in a single pass. The model has undergone continued pre-training and supervised fine-tuning (SFT) specifically for texts longer than 32K tokens, using carefully curated long-text training data and self-built long-text instruction sets. The model is now open source on both Hugging Face and ModelScope.

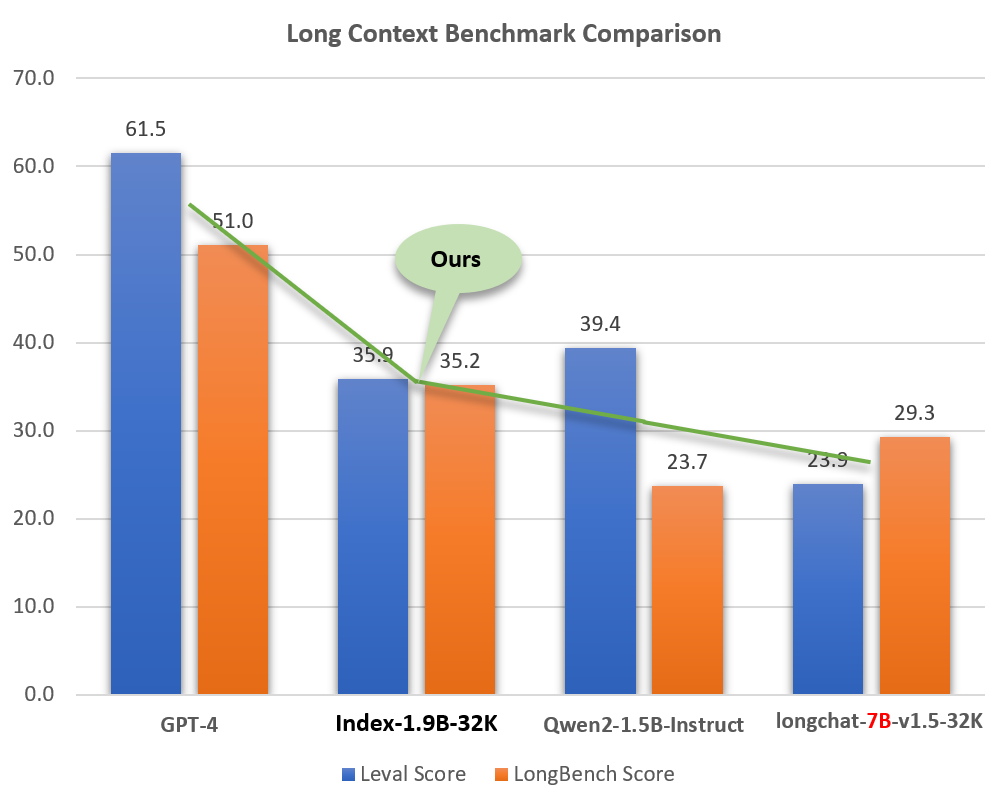

Despite its small size (about 2% of the size of models like GPT-4), Index-1.9B-32K demonstrates excellent long-text processing capabilities. As shown in the figure below, the score of our 1.9B-sized model even surpasses that of the 7B-sized model. The comparison with GPT-4, Qwen2, and other models follows:

Comparison of Index-1.9B-32K with GPT-4, Qwen2, and other models in Long Context capability

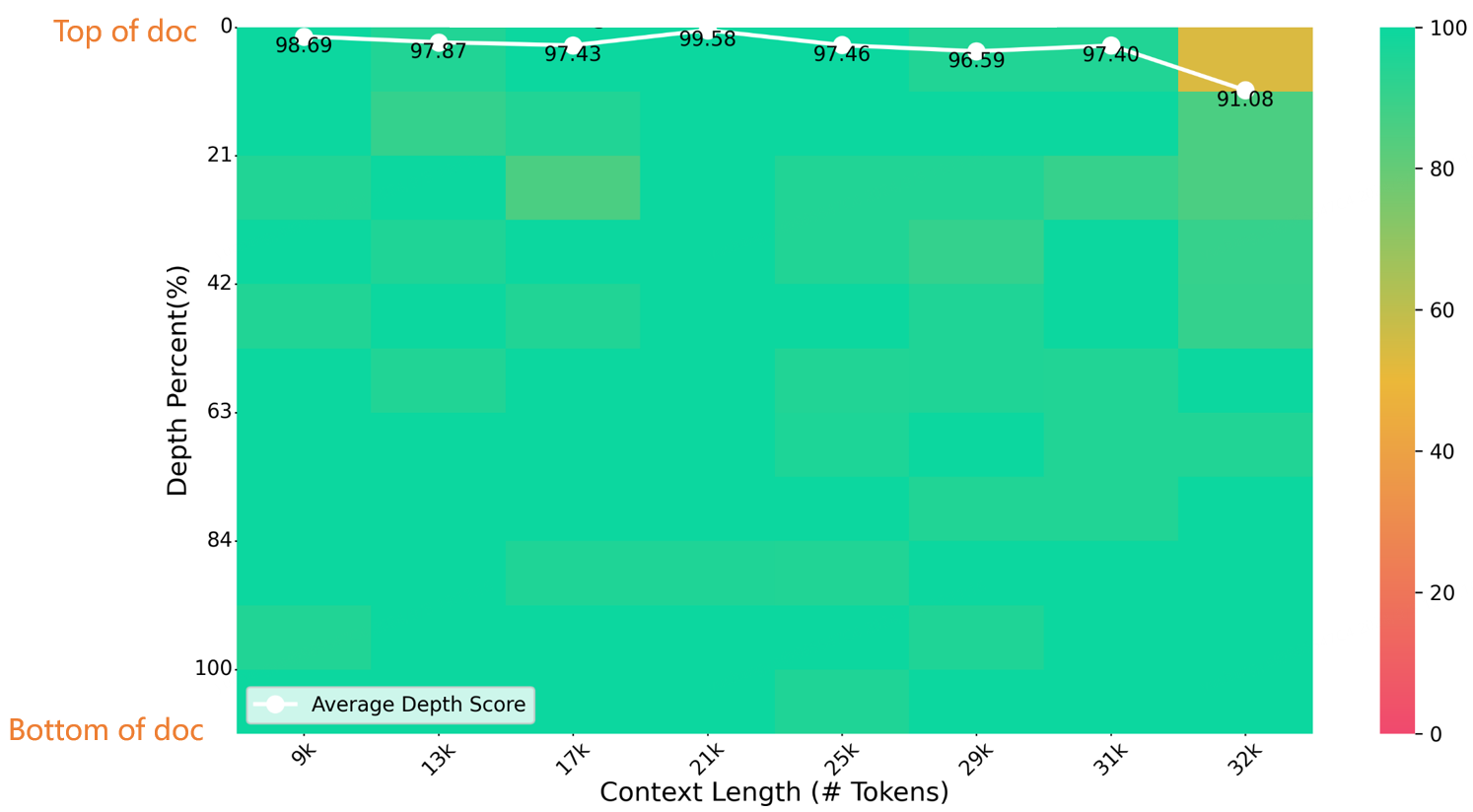

In a 32K-length needle-in-a-haystack test, Index-1.9B-32K achieved excellent results, as shown in the figure below. The only exception was a small yellow spot (91.08 points) at the (32K length, 10% depth) position; all other regions scored well and appear mostly green.

NeedleBench Evaluation
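For readers unfamiliar with the setup, a needle-in-a-haystack test plants a short fact (the needle) at a chosen relative depth inside filler text of a chosen length, then asks the model to retrieve it. The sketch below illustrates only that construction and is not the NeedleBench implementation. The function name `build_haystack`, the filler and needle strings, and the use of whitespace-separated words as a stand-in for tokens are all assumptions made for illustration.

```python
def build_haystack(filler_words, needle, total_words, depth_percent):
    """Return about `total_words` words of filler with `needle` inserted
    at `depth_percent` (0 = start, 100 = end) of the filler text."""
    if not filler_words:
        raise ValueError("filler_words must not be empty")
    if not 0 <= depth_percent <= 100:
        raise ValueError("depth_percent must be between 0 and 100")
    # Repeat the filler until it is long enough, then trim to the target size.
    repeats = total_words // len(filler_words) + 1
    haystack = (filler_words * repeats)[:total_words]
    # Convert the relative depth into an insertion index.
    insert_at = round(len(haystack) * depth_percent / 100)
    words = haystack[:insert_at] + needle.split() + haystack[insert_at:]
    return " ".join(words)


# Hypothetical filler and needle, chosen only for illustration.
filler = "the quick brown fox jumps over the lazy dog".split()
needle = "The secret passphrase is 'index-32k'."
prompt = build_haystack(filler, needle, total_words=1000, depth_percent=10)
question = "What is the secret passphrase?"
```

A real evaluation would measure length in tokens with the model's tokenizer and repeat this over a grid of lengths and depths, producing one score per cell such as the (32K length, 10% depth) cell mentioned above.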

Index-1.9B-32K Model Download, Usage, and Technical Report:

For details on downloading, usage, and the technical report for Index-1.9B-32K, see:

Usage: Long Text Translation and Summary (Index-1.9B-32K)

- Clone the code repository for model execution and evaluation:

git clone https://github.com/bilibili/Index-1.9B

cd Index-1.9B

- Download the model files to your local machine.

- Use pip to install the required dependencies:

pip install -r requirements.txt
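The download step above could be scripted with the `huggingface_hub` package, assuming it is installed in your environment. This is a minimal sketch rather than the project's documented procedure: the repository ID `IndexTeam/Index-1.9B-32K`, the local directory name, and the helper `download_model` are assumptions, so check the model page for the actual ID before running it.

```python
from pathlib import Path

# Assumed repository ID; verify it on the Hugging Face model page.
DEFAULT_REPO_ID = "IndexTeam/Index-1.9B-32K"


def download_model(repo_id=DEFAULT_REPO_ID, local_dir="./Index-1.9B-32K"):
    """Download all files of `repo_id` into `local_dir` and return the path."""
    # Imported here so this module still loads if huggingface_hub is missing.
    from huggingface_hub import snapshot_download

    target = Path(local_dir).expanduser().resolve()
    target.mkdir(parents=True, exist_ok=True)
    snapshot_download(repo_id=repo_id, local_dir=str(target))
    return target


# Example (requires network access):
# model_path = download_model()
# print(f"Model files saved to {model_path}")
```

The returned path is what you would pass as `--model_path` to the demo script.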

- Run the interactive tool for long text: demo/cli_long_text_demo.py

- The model will, by default, read this file: data/user_long_text.txt and summarize the text in Chinese.

- You can open a new window and modify the file content in real-time, and the model will read the updated file and summarize it.

cd demo/

CUDA_VISIBLE_DEVICES=0 python cli_long_text_demo.py --model_path '/path/to/model/' --input_file_path data/user_long_text.txt
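The reload-on-edit behavior described above can be approximated by polling the input file's modification time. The sketch below is not the code in `cli_long_text_demo.py`; `watch_file`, `on_change`, `print_length`, and the polling parameters are hypothetical names, and the callback stands in for whatever summarization call the demo actually makes.

```python
import os
import time


def watch_file(path, on_change, poll_interval=1.0, max_polls=None):
    """Call `on_change(text)` once at start and again whenever `path` is modified.

    `max_polls` bounds the loop (None means run until interrupted).
    """
    last_mtime = None
    polls = 0
    while max_polls is None or polls < max_polls:
        mtime = os.stat(path).st_mtime_ns
        if mtime != last_mtime:
            last_mtime = mtime
            with open(path, encoding="utf-8") as f:
                on_change(f.read())
        polls += 1
        # Sleep between polls, but not after the final one.
        if max_polls is None or polls < max_polls:
            time.sleep(poll_interval)


def print_length(text):
    # Placeholder handler: a real handler would send `text` to the model.
    print(f"Read {len(text)} characters")


# Example (runs until interrupted with Ctrl+C):
# watch_file("data/user_long_text.txt", print_length)
```

Polling keeps the example dependency-free; a file-system notification library would react faster but adds an extra requirement.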

- Run and interaction example (translation and summary of Bilibili's English-language financial report released on 2024.8.22; original English report here):

Translation and Summary (Bilibili financial report released on 2024.8.22)

Limitations and Disclaimer

Index-1.9B-32K may generate inaccurate, biased, or otherwise objectionable content in some cases. The model cannot understand or express personal opinions or value judgments when generating content, and its output does not represent the views or stance of the model developers. Therefore, please use the generated content with caution. Users are responsible for evaluating and verifying the generated content and should refrain from spreading harmful content. Before deploying any related applications, developers should conduct safety tests and fine-tune the model based on specific use cases.

We strongly caution against using these models to create or spread harmful information or engage in any activities that could harm the public, national, or social security or violate regulations. Additionally, these models should not be used in internet services without proper security review and registration. We have done our best to ensure the compliance of the training data, but due to the complexity of the models and data, unforeseen issues may still arise. We accept no liability for any problems arising from the use of these models, whether data security issues, public opinion risks, or risks and issues caused by misunderstanding, misuse, or non-compliant use of the models.

Model Open Source License

The source code in this repository is licensed under the Apache-2.0 open-source license. The Index-1.9B-32K model weights are subject to the Model License Agreement.

The Index-1.9B-32K model weights are fully open for academic research and support free commercial use.

Citation

If you find our work helpful, feel free to cite it!

@article{Index-1.9B-32K,

title={Index-1.9B-32K Long Context Technical Report},

year={2024},

url={https://github.com/bilibili/Index-1.9B/blob/main/Index-1.9B-32K_Long_Context_Technical_Report.md},

author={Changye Yu and Tianjiao Li and Lusheng Zhang and IndexTeam}

}
