Update README.md

README.md

CHANGED

|

@@ -22,20 +22,36 @@ widget:

|

|

| 22 |

- text: "Question:How should covid-19 be prevented? Answer:"

|

| 23 |

example_title: "test Question2"

|

| 24 |

---

|

| 25 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 26 |

**YuyuanQA-GPT2-3.5B** is fine-tuned with 10000 medical QA pairs based on **Yuyuan-3.5B** model.

|

| 27 |

|

| 28 |

**Question answering(QA)** is an important subject related to natural language processing and information retrieval. There are many application scenarios in the actual industry. **Traditional methods are often complex**, and their core algorithms involve **machine learning**, **deep learning** and **knowledge graph** related knowledge.

|

| 29 |

|

| 30 |

-

We hope to explore a **simpler** and more **effective** way to use the powerful memory and understanding ability of the large model to directly realize question and answering. Yuyuanqa-GPT2-3.5b model is an attempt and **performs well under subjective test**. At the same time, we also tested 100 QA pairs with

|

| 31 |

|

| 32 |

| gram | 1-gram | 2-gram | 3-gram | 4-gram |

|

| 33 |

| ----------- | ----------- |------|------|------|

|

| 34 |

-

|

|

|

|

|

|

|

|

| 35 |

|

| 36 |

-

|

| 37 |

|

| 38 |

-

### load model

|

| 39 |

```python

|

| 40 |

from transformers import GPT2Tokenizer,GPT2LMHeadModel

|

| 41 |

|

|

@@ -44,7 +60,9 @@ hf_model_path = 'model_path or model name'

|

|

| 44 |

tokenizer = GPT2Tokenizer.from_pretrained(hf_model_path)

|

| 45 |

model = GPT2LMHeadModel.from_pretrained(hf_model_path)

|

| 46 |

```

|

| 47 |

-

|

|

|

|

|

|

|

| 48 |

```python

|

| 49 |

fquestion = "What should gout patients pay attention to in diet?"

|

| 50 |

inputs = tokenizer(f'Question:{question} answer:',return_tensors='pt')

|

|

@@ -66,19 +84,36 @@ for idx,sentence in enumerate(generation_output.sequences):

|

|

| 66 |

print('*'*40)

|

| 67 |

|

| 68 |

```

|

| 69 |

-

## example

|

| 70 |

|

| 71 |

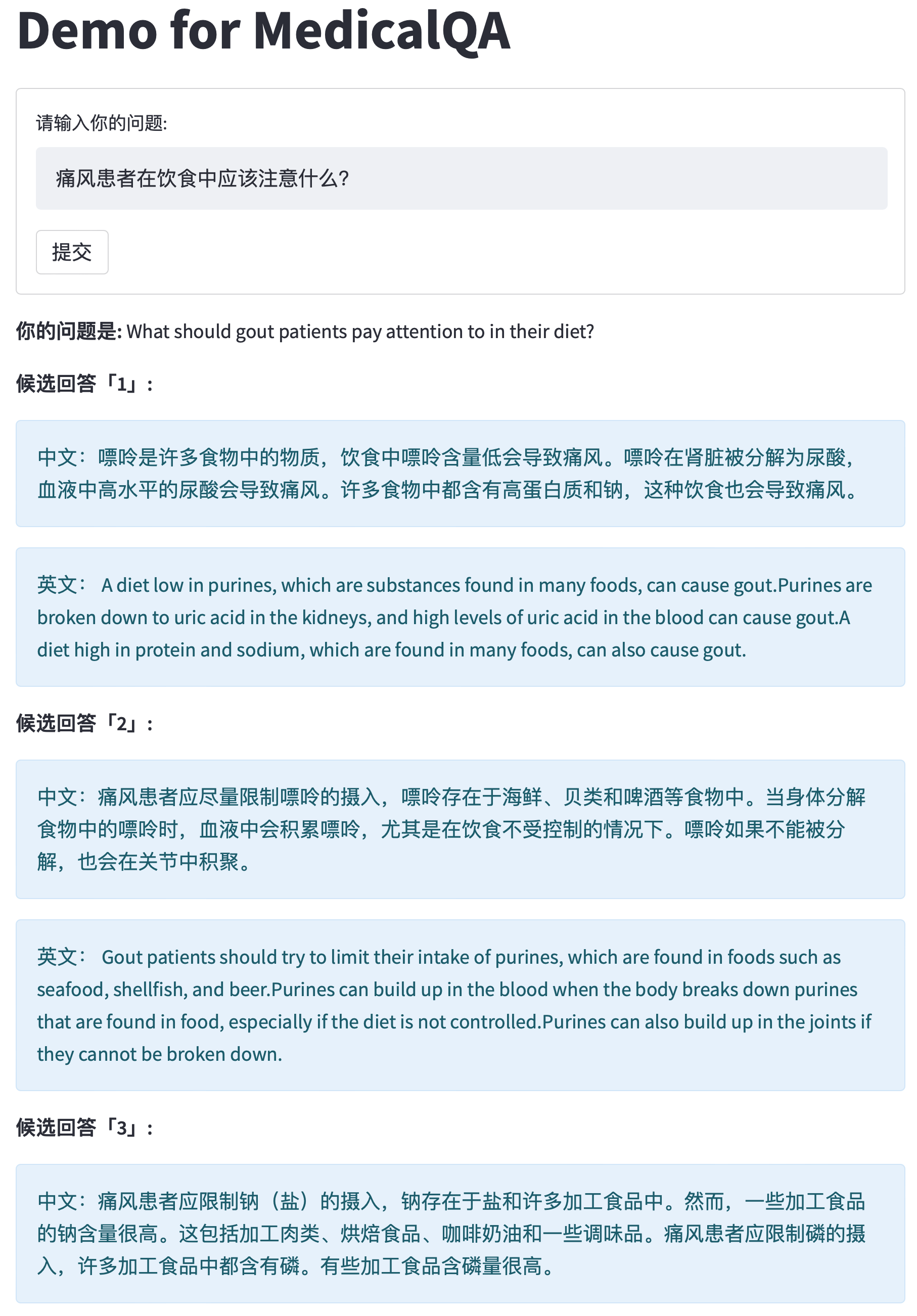

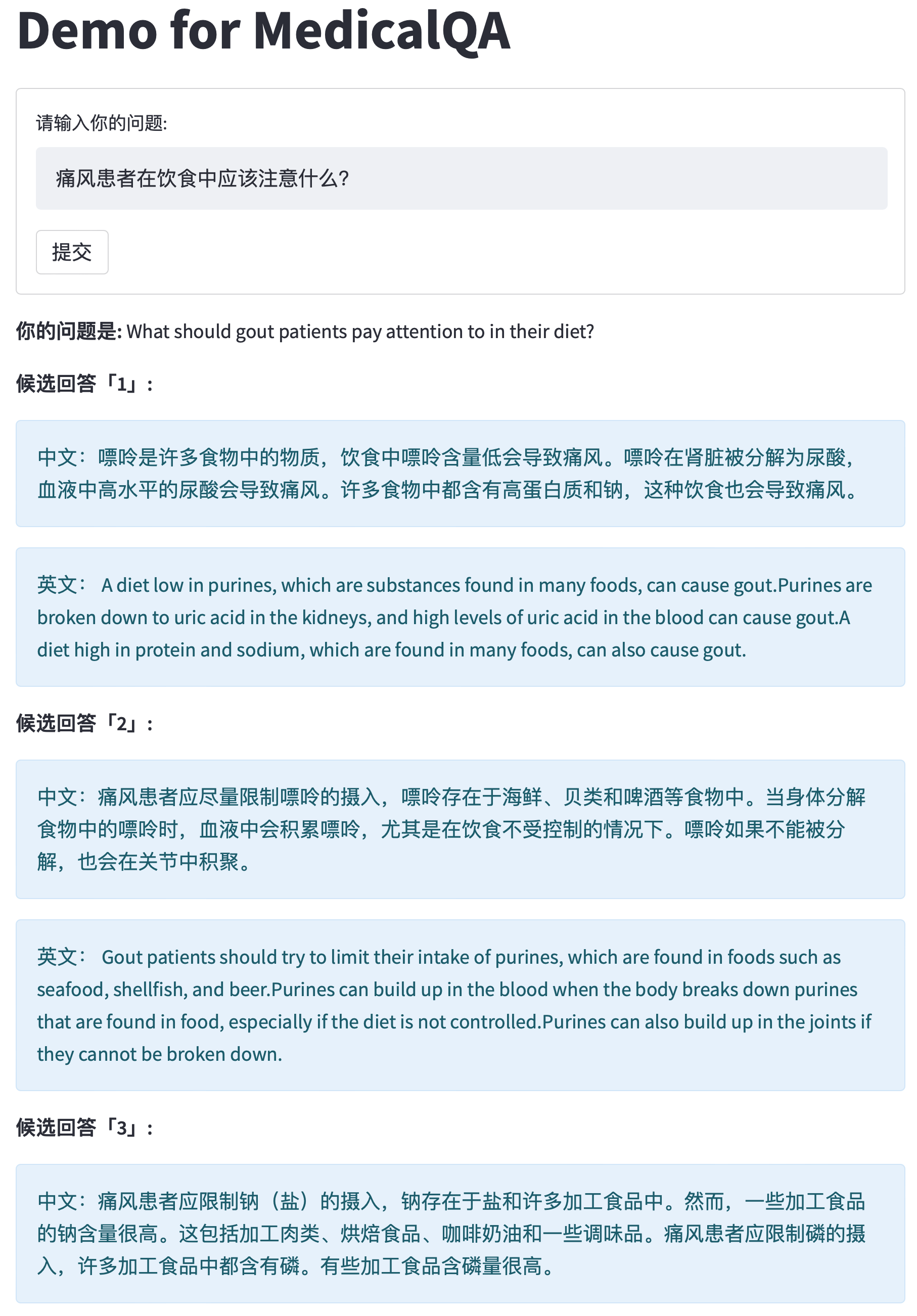

We made a demo of medical Q & A with YuyuanQA-GPT2-3.5B model. In the future, we will make this product into a wechat app to meet you. Please look forward to it.

|

| 72 |

|

| 73 |

|

| 74 |

|

| 75 |

-

## Citation

|

| 76 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 77 |

```

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 78 |

@misc{Fengshenbang-LM,

|

| 79 |

title={Fengshenbang-LM},

|

| 80 |

author={IDEA-CCNL},

|

| 81 |

-

year={

|

| 82 |

howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},

|

| 83 |

}

|

| 84 |

```

|

|

|

|

- text: "Question:How should covid-19 be prevented? Answer:"
  example_title: "test Question2"
---

# YuyuanQA-GPT2-3.5B

- GitHub: [Fengshenbang-LM](https://github.com/IDEA-CCNL/Fengshenbang-LM)
- Docs: [Fengshenbang-Docs](https://fengshenbang-doc.readthedocs.io/)

## Model Taxonomy

| Demand | Task | Series | Model | Parameter | Extra |
| :----: | :----: | :----: | :----: | :----: | :----: |
| Special | Domain | Yuyuan | GPT2 | 3.5B | QA |

## Model Information

**YuyuanQA-GPT2-3.5B** is fine-tuned from the **Yuyuan-3.5B** model on 10,000 medical QA pairs.

**Question answering (QA)** is an important topic in natural language processing and information retrieval, with many real-world industrial applications. **Traditional methods are often complex**: their core algorithms draw on **machine learning**, **deep learning**, and **knowledge graphs**.

We aim to explore a **simpler** and more **effective** approach: using the strong memorization and comprehension abilities of a large model to answer questions directly. YuyuanQA-GPT2-3.5B is one attempt at this and **performs well in subjective evaluations**. We also evaluated it on 100 QA pairs using **BLEU**:

| Metric | 1-gram | 2-gram | 3-gram | 4-gram |
| ----------- | ----------- | ------ | ------ | ------ |
| BLEU score | 0.357727 | 0.2713 | 0.22304 | 0.19099 |
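
The card does not say how these BLEU scores were computed. As a rough illustration only, the sketch below computes sentence-level cumulative BLEU-n (clipped 1..n-gram precisions combined by a geometric mean with uniform weights, times a brevity penalty, no smoothing) for one generated answer against one reference answer. Reading the table's columns as cumulative BLEU-1 to BLEU-4, using character-level tokens for Chinese text, and the way the 100 pairs were aggregated are all assumptions; `ngrams`, `modified_precision`, and `bleu` are hypothetical helper names.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams (as tuples) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """Clipped n-gram precision: each candidate n-gram counts at most as often as it appears in the reference."""
    cand_counts = ngrams(candidate, n)
    ref_counts = ngrams(reference, n)
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    clipped = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
    return clipped / total


def bleu(candidate, reference, max_n=4):
    """Sentence-level cumulative BLEU-max_n: uniform weights, brevity penalty, no smoothing."""
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        # log(0) is undefined; without smoothing the score is zero.
        return 0.0
    c, r = len(candidate), len(reference)
    # Brevity penalty: penalize candidates that are not longer than the reference.
    bp = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the clipped precisions.
    return bp * math.exp(sum(math.log(p) for p in precisions) / max_n)


# Hypothetical example pair, tokenized at the character level (an assumption).
hypothesis = list("痛风患者应减少高嘌呤食物")
reference = list("痛风患者应少吃高嘌呤食物")
for n in range(1, 5):
    print(f"BLEU-{n}: {bleu(hypothesis, reference, n):.4f}")
```

Library implementations such as NLTK's `sentence_bleu` and `corpus_bleu` also offer smoothing functions, which change scores for short answers where some higher-order n-gram precision is zero.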

## Usage

### Loading Models

```python
from transformers import GPT2Tokenizer, GPT2LMHeadModel

# A local directory or a Hugging Face Hub model name
hf_model_path = 'model_path or model name'
tokenizer = GPT2Tokenizer.from_pretrained(hf_model_path)
model = GPT2LMHeadModel.from_pretrained(hf_model_path)
```
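
### Usage Examples

```python
question = "What should gout patients pay attention to in diet?"
# Prompt format used in this example: "Question:<question> answer:"
inputs = tokenizer(f'Question:{question} answer:', return_tensors='pt')
# ... (unchanged lines not shown in this diff; they produce `generation_output`)
for idx, sentence in enumerate(generation_output.sequences):
    # ... (loop body not shown in this diff)
    print('*'*40)
```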
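
The usage example prompts the model with `Question:{question} answer:` and then decodes each returned sequence; the decoding lines fall in the part of the file this diff does not show. A minimal sketch of the string handling around the model might look like the following, assuming each decoded sequence begins with the prompt and that GPT-2's usual `<|endoftext|>` marker ends an answer (neither is confirmed for this model). `build_prompt`, `extract_answer`, and `EOS_MARKER` are hypothetical names, not part of `transformers` or the model release.

```python
# Hypothetical helpers: not part of transformers or the YuyuanQA release.

# Assumption: GPT-2's standard end-of-text token string marks the end of an answer.
EOS_MARKER = "<|endoftext|>"


def build_prompt(question: str) -> str:
    """Format a question the way the usage example does: 'Question:<question> answer:'."""
    return f"Question:{question} answer:"


def extract_answer(decoded: str, question: str) -> str:
    """Drop the echoed prompt from a decoded sequence and cut it at the first end-of-text marker."""
    prompt = build_prompt(question)
    # Fall back to the full text if decoding did not reproduce the prompt exactly.
    text = decoded[len(prompt):] if decoded.startswith(prompt) else decoded
    return text.split(EOS_MARKER, 1)[0].strip()


question = "What should gout patients pay attention to in diet?"
decoded = build_prompt(question) + " An example answer.<|endoftext|>Question:"
print(extract_answer(decoded, question))  # prints: An example answer.
```

Inside the loop of the usage example, a call such as `extract_answer(tokenizer.decode(sentence), question)` would print only the answer text for each sampled sequence, under the assumptions above.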

|

|

|

|

| 87 |

|

| 88 |

We made a demo of medical Q & A with YuyuanQA-GPT2-3.5B model. In the future, we will make this product into a wechat app to meet you. Please look forward to it.


## Citation

If you use this resource in your work, please cite our [paper](https://arxiv.org/abs/2209.02970):

```text
@article{fengshenbang,
  author    = {Junjie Wang and Yuxiang Zhang and Lin Zhang and Ping Yang and Xinyu Gao and Ziwei Wu and Xiaoqun Dong and Junqing He and Jianheng Zhuo and Qi Yang and Yongfeng Huang and Xiayu Li and Yanghan Wu and Junyu Lu and Xinyu Zhu and Weifeng Chen and Ting Han and Kunhao Pan and Rui Wang and Hao Wang and Xiaojun Wu and Zhongshen Zeng and Chongpei Chen and Ruyi Gan and Jiaxing Zhang},
  title     = {Fengshenbang 1.0: Being the Foundation of Chinese Cognitive Intelligence},
  journal   = {CoRR},
  volume    = {abs/2209.02970},
  year      = {2022}
}
```

You can also cite our [website](https://github.com/IDEA-CCNL/Fengshenbang-LM/):

```text
@misc{Fengshenbang-LM,
  title={Fengshenbang-LM},
  author={IDEA-CCNL},
  year={2021},
  howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},
}
```