Update README.md

Browse files

README.md

CHANGED

|

@@ -1,84 +1,84 @@

|

|

| 1 |

-

---

|

| 2 |

-

language:

|

| 3 |

-

- en

|

| 4 |

-

|

| 5 |

-

inference:

|

| 6 |

-

parameters:

|

| 7 |

-

temperature: 0.7

|

| 8 |

-

top_p: 0.6

|

| 9 |

-

max_new_tokens: 64

|

| 10 |

-

num_return_sequences: 3

|

| 11 |

-

do_sample: true

|

| 12 |

-

|

| 13 |

-

license: apache-2.0

|

| 14 |

-

tags:

|

| 15 |

-

- QA

|

| 16 |

-

- medical

|

| 17 |

-

- gpt2

|

| 18 |

-

|

| 19 |

-

widget:

|

| 20 |

-

- text: "Question:What should gout patients pay attention to in diet? Answer:"

|

| 21 |

-

example_title: "test Question1"

|

| 22 |

-

- text: "Question:How should covid-19 be prevented? Answer:"

|

| 23 |

-

example_title: "test Question2"

|

| 24 |

-

---

|

| 25 |

-

# YuyuanQA-GPT2-3.5B model (Medical),one model of [Fengshenbang-LM](https://github.com/IDEA-CCNL/Fengshenbang-LM).

|

| 26 |

-

**YuyuanQA-GPT2-3.5B** is fine-tuned with 10000 medical QA pairs based on **Yuyuan-3.5B** model.

|

| 27 |

-

|

| 28 |

-

**Question answering(QA)** is an important subject related to natural language processing and information retrieval. There are many application scenarios in the actual industry. **Traditional methods are often complex**, and their core algorithms involve **machine learning**, **deep learning** and **knowledge graph** related knowledge.

|

| 29 |

-

|

| 30 |

-

We hope to explore a **simpler** and more **effective** way to use the powerful memory and understanding ability of the large model to directly realize question and answering. Yuyuanqa-GPT2-3.5b model is an attempt and **performs well under subjective test**. At the same time, we also tested 100 QA pairs with ***

|

| 31 |

-

|

| 32 |

-

| gram | 1-gram | 2-gram | 3-gram | 4-gram |

|

| 33 |

-

| ----------- | ----------- |------|------|------|

|

| 34 |

-

| **blue_score** | 0.357727 | 0.2713 | 0.22304 | 0.19099 |

|

| 35 |

-

|

| 36 |

-

## Usage

|

| 37 |

-

|

| 38 |

-

### load model

|

| 39 |

-

```python

|

| 40 |

-

from transformers import GPT2Tokenizer,GPT2LMHeadModel

|

| 41 |

-

|

| 42 |

-

hf_model_path = 'model_path or model name'

|

| 43 |

-

|

| 44 |

-

tokenizer = GPT2Tokenizer.from_pretrained(hf_model_path)

|

| 45 |

-

model = GPT2LMHeadModel.from_pretrained(hf_model_path)

|

| 46 |

-

```

|

| 47 |

-

### generation

|

| 48 |

-

```python

|

| 49 |

-

fquestion = "What should gout patients pay attention to in diet?"

|

| 50 |

-

inputs = tokenizer(f'Question:{question} answer:',return_tensors='pt')

|

| 51 |

-

|

| 52 |

-

generation_output = model.generate(**inputs,

|

| 53 |

-

return_dict_in_generate=True,

|

| 54 |

-

output_scores=True,

|

| 55 |

-

max_length=150,

|

| 56 |

-

# max_new_tokens=80,

|

| 57 |

-

do_sample=True,

|

| 58 |

-

top_p = 0.6,

|

| 59 |

-

eos_token_id=50256,

|

| 60 |

-

pad_token_id=0,

|

| 61 |

-

num_return_sequences = 5)

|

| 62 |

-

|

| 63 |

-

for idx,sentence in enumerate(generation_output.sequences):

|

| 64 |

-

print('next sentence %d:\n'%idx,

|

| 65 |

-

tokenizer.decode(sentence).split('<|endoftext|>')[0])

|

| 66 |

-

print('*'*40)

|

| 67 |

-

|

| 68 |

-

```

|

| 69 |

-

## example

|

| 70 |

-

|

| 71 |

-

We made a demo of medical Q & A with YuyuanQA-GPT2-3.5B model. In the future, we will make this product into a wechat app to meet you. Please look forward to it.

|

| 72 |

-

|

| 73 |

-

|

| 74 |

-

|

| 75 |

-

## Citation

|

| 76 |

-

If you find the resource is useful, please cite the following website in your paper.

|

| 77 |

-

```

|

| 78 |

-

@misc{Fengshenbang-LM,

|

| 79 |

-

title={Fengshenbang-LM},

|

| 80 |

-

author={IDEA-CCNL},

|

| 81 |

-

year={2022},

|

| 82 |

-

howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},

|

| 83 |

-

}

|

| 84 |

-

```

|

|

|

|

| 1 |

+

---

|

| 2 |

+

language:

|

| 3 |

+

- en

|

| 4 |

+

|

| 5 |

+

inference:

|

| 6 |

+

parameters:

|

| 7 |

+

temperature: 0.7

|

| 8 |

+

top_p: 0.6

|

| 9 |

+

max_new_tokens: 64

|

| 10 |

+

num_return_sequences: 3

|

| 11 |

+

do_sample: true

|

| 12 |

+

|

| 13 |

+

license: apache-2.0

|

| 14 |

+

tags:

|

| 15 |

+

- QA

|

| 16 |

+

- medical

|

| 17 |

+

- gpt2

|

| 18 |

+

|

| 19 |

+

widget:

|

| 20 |

+

- text: "Question:What should gout patients pay attention to in diet? Answer:"

|

| 21 |

+

example_title: "test Question1"

|

| 22 |

+

- text: "Question:How should covid-19 be prevented? Answer:"

|

| 23 |

+

example_title: "test Question2"

|

| 24 |

+

---

|

| 25 |

+

# YuyuanQA-GPT2-3.5B model (Medical),one model of [Fengshenbang-LM](https://github.com/IDEA-CCNL/Fengshenbang-LM).

|

| 26 |

+

**YuyuanQA-GPT2-3.5B** is fine-tuned with 10000 medical QA pairs based on **Yuyuan-3.5B** model.

|

| 27 |

+

|

| 28 |

+

**Question answering(QA)** is an important subject related to natural language processing and information retrieval. There are many application scenarios in the actual industry. **Traditional methods are often complex**, and their core algorithms involve **machine learning**, **deep learning** and **knowledge graph** related knowledge.

|

| 29 |

+

|

| 30 |

+

We hope to explore a **simpler** and more **effective** way to use the powerful memory and understanding ability of the large model to directly realize question and answering. Yuyuanqa-GPT2-3.5b model is an attempt and **performs well under subjective test**. At the same time, we also tested 100 QA pairs with ***BLEU***:

|

| 31 |

+

|

| 32 |

+

| gram | 1-gram | 2-gram | 3-gram | 4-gram |

|

| 33 |

+

| ----------- | ----------- |------|------|------|

|

| 34 |

+

| **blue_score** | 0.357727 | 0.2713 | 0.22304 | 0.19099 |

|

| 35 |

+

|

| 36 |

+

## Usage

|

| 37 |

+

|

| 38 |

+

### load model

|

| 39 |

+

```python

|

| 40 |

+

from transformers import GPT2Tokenizer,GPT2LMHeadModel

|

| 41 |

+

|

| 42 |

+

hf_model_path = 'model_path or model name'

|

| 43 |

+

|

| 44 |

+

tokenizer = GPT2Tokenizer.from_pretrained(hf_model_path)

|

| 45 |

+

model = GPT2LMHeadModel.from_pretrained(hf_model_path)

|

| 46 |

+

```

|

| 47 |

+

### generation

|

| 48 |

+

```python

|

| 49 |

+

fquestion = "What should gout patients pay attention to in diet?"

|

| 50 |

+

inputs = tokenizer(f'Question:{question} answer:',return_tensors='pt')

|

| 51 |

+

|

| 52 |

+

generation_output = model.generate(**inputs,

|

| 53 |

+

return_dict_in_generate=True,

|

| 54 |

+

output_scores=True,

|

| 55 |

+

max_length=150,

|

| 56 |

+

# max_new_tokens=80,

|

| 57 |

+

do_sample=True,

|

| 58 |

+

top_p = 0.6,

|

| 59 |

+

eos_token_id=50256,

|

| 60 |

+

pad_token_id=0,

|

| 61 |

+

num_return_sequences = 5)

|

| 62 |

+

|

| 63 |

+

for idx,sentence in enumerate(generation_output.sequences):

|

| 64 |

+

print('next sentence %d:\n'%idx,

|

| 65 |

+

tokenizer.decode(sentence).split('<|endoftext|>')[0])

|

| 66 |

+

print('*'*40)

|

| 67 |

+

|

| 68 |

+

```

|

| 69 |

+

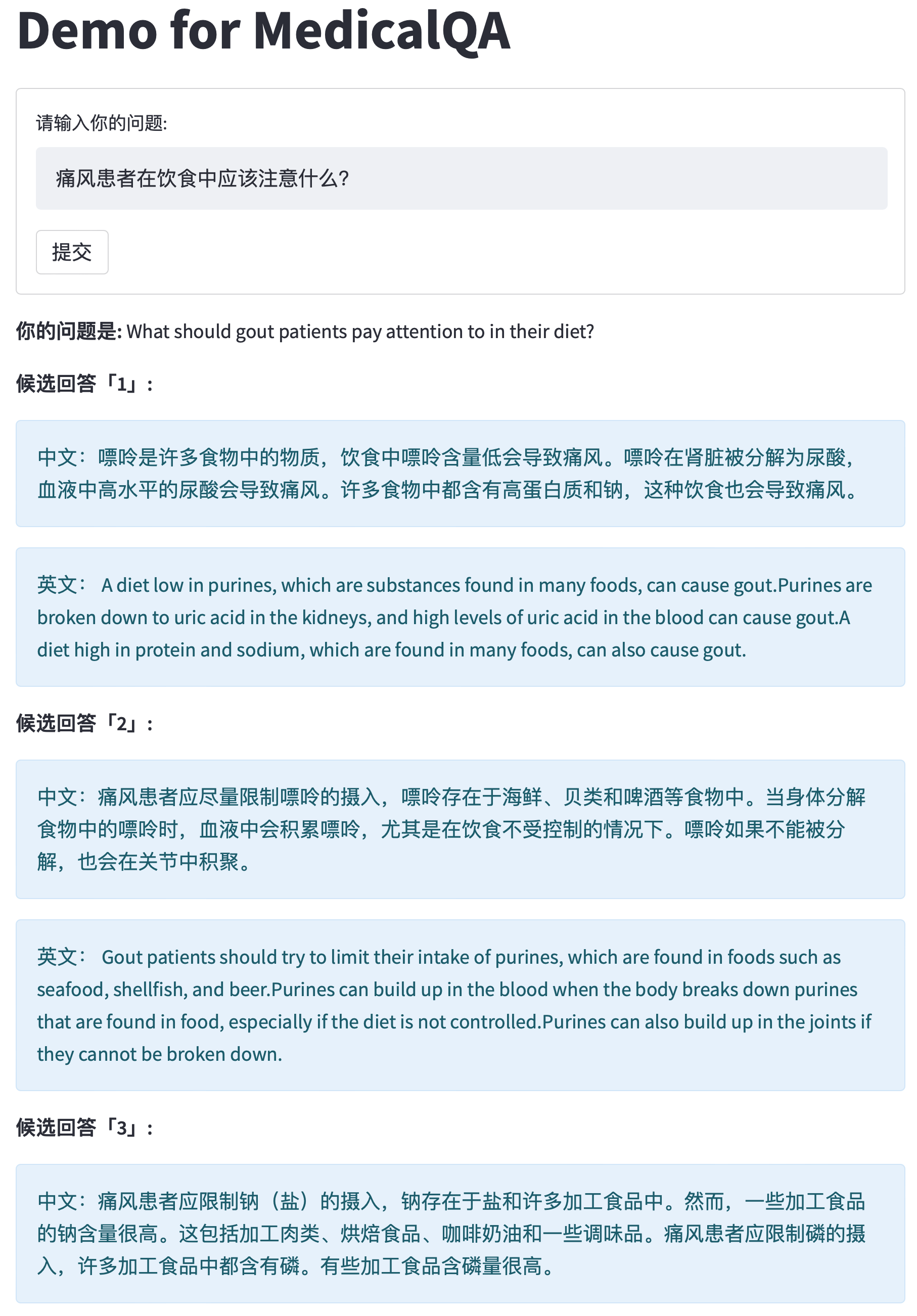

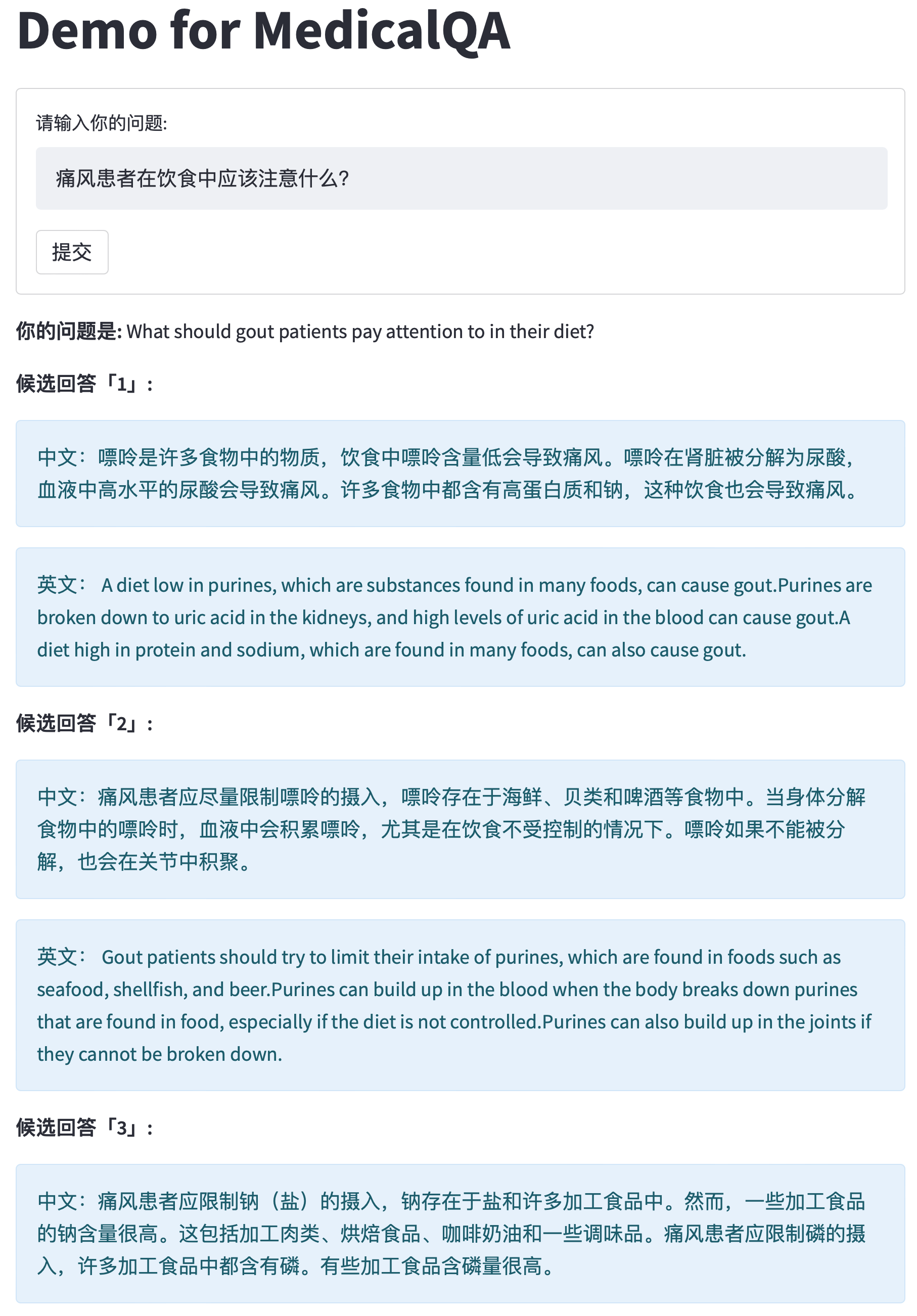

## example

|

| 70 |

+

|

| 71 |

+

We made a demo of medical Q & A with YuyuanQA-GPT2-3.5B model. In the future, we will make this product into a wechat app to meet you. Please look forward to it.

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

## Citation

|

| 76 |

+

If you find the resource is useful, please cite the following website in your paper.

|

| 77 |

+

```

|

| 78 |

+

@misc{Fengshenbang-LM,

|

| 79 |

+

title={Fengshenbang-LM},

|

| 80 |

+

author={IDEA-CCNL},

|

| 81 |

+

year={2022},

|

| 82 |

+

howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},

|

| 83 |

+

}

|

| 84 |

+

```

|