State-of-the-art compact LLMs for on-device applications: 1.7B, 360M, 135M

Hugging Face Smol Models Research


AI & ML interests

Exploring smol models (for text, vision, and video) and high-quality web and synthetic datasets.



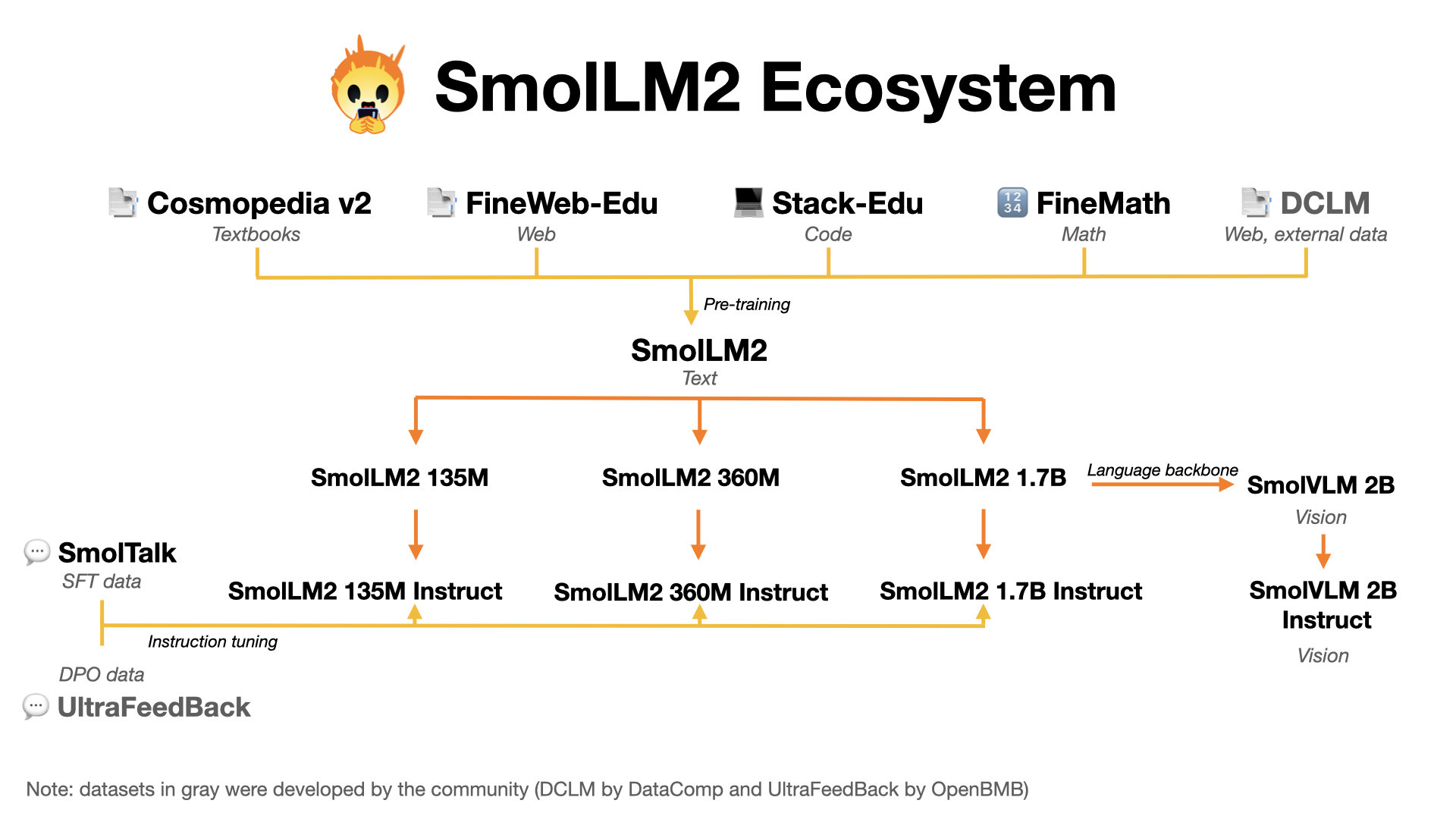

This is the home for smol models (SmolLM and SmolVLM) and high-quality pre-training datasets. We released:

- FineWeb-Edu: a version of the FineWeb dataset filtered for educational content. Paper available here.

- Cosmopedia: the largest open synthetic dataset, with 25B tokens and 30M samples. It contains synthetic textbooks, blog posts, stories, and posts generated by Mixtral. Blog post available here.

- SmolLM-Corpus: the pre-training corpus of SmolLM, made up of Cosmopedia v0.2, FineWeb-Edu (deduplicated), and Python-Edu. Blog post available here.

- FineMath: the best public math pre-training dataset, with 50B tokens of mathematical and problem-solving data.

- Stack-Edu: the best open code pre-training dataset, with educational code in 15 programming languages.

- SmolLM2 models: a series of strong small models in three sizes: 135M, 360M, and 1.7B.

- SmolVLM2: a family of small video and vision models in three sizes: 2.2B, 500M, and 256M. Blog post available here.
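For on-device use, the headline sizes above matter mostly through how much memory the weights take. The snippet below is a rough sketch, not taken from the model cards: it multiplies the nominal parameter count (read off the size name; real counts differ slightly) by an assumed number of bytes per parameter for each precision. The names `NOMINAL_PARAMS`, `weight_memory_gb`, and `largest_fitting` are hypothetical helpers for illustration, and the estimate deliberately ignores activations, the KV cache, image buffers, and runtime overhead.

```python
from typing import Optional

# Assumed storage cost per parameter for each precision (weights only).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}

# Nominal sizes as advertised on this page; exact parameter counts differ slightly.
NOMINAL_PARAMS = {
    "SmolLM2-135M": 135e6,
    "SmolLM2-360M": 360e6,
    "SmolLM2-1.7B": 1.7e9,
    "SmolVLM2-256M": 256e6,
    "SmolVLM2-500M": 500e6,
    "SmolVLM2-2.2B": 2.2e9,
}


def weight_memory_gb(params: float, precision: str = "bf16") -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Ignores activations, KV cache and runtime overhead, so real usage is higher.
    """
    return params * BYTES_PER_PARAM[precision] / 1e9


def largest_fitting(budget_gb: float, family: str, precision: str = "bf16") -> Optional[str]:
    """Return the largest model of `family` whose weights fit in `budget_gb`, or None."""
    candidates = [
        (params, name)
        for name, params in NOMINAL_PARAMS.items()
        if name.startswith(family + "-")
        and weight_memory_gb(params, precision) <= budget_gb
    ]
    # max() on (params, name) tuples picks the candidate with the most parameters.
    return max(candidates)[1] if candidates else None


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS.items():
        print(f"{name:>14}: {weight_memory_gb(params):.2f} GB in bf16")
    print(largest_fitting(1.0, "SmolLM2"))  # -> SmolLM2-360M
```

Under these assumptions, the 1.7B model needs about 3.4 GB for bf16 weights, while the 360M and 135M models need roughly 0.72 GB and 0.27 GB, which illustrates why the smaller sizes are attractive for memory-constrained devices.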

News 🗞️

- HuggingSnap: turn your iPhone into a visual assistant using SmolVLM2. App Store - Source code

- Stack-Edu: 125B tokens of educational code in 15 programming languages. Dataset

Collections (13)

Spaces (13)

- SmolLM2 1.7B Instruct WebGPU 🚀 (Running, 28 likes): A blazingly fast and powerful AI chatbot that runs in the browser.
- SmolVLM 256M Instruct WebGPU 🐨 (Running, 48 likes): Generate descriptions for images using WebGPU.
- Smolvlm Web Benchmarking 🌖 (Running, 4 likes)
- SmolVLM2 IPhone Waitlist ⏰ (Running, 17 likes): Sign in to receive news about the iPhone app.
- SmolVLM2 XSPFGenerator (VLC prototype) 🎞 (Sleeping, 25 likes): Generate video highlights and playlists.
- SmolVLM2 HighlightGenerator 🐨 (Runtime error, 53 likes): Generate video highlights from an uploaded video.

Models (74)

- HuggingFaceTB/SmolLM2-360M-Instruct: Text Generation, 845k downloads, 116 likes
- HuggingFaceTB/SmolLM2-135M-Instruct: Text Generation, 367k downloads, 188 likes
- HuggingFaceTB/SmolLM2-1.7B-Instruct: Text Generation, 81.1k downloads, 611 likes
- HuggingFaceTB/SmolVLM2-2.2B-Base: Image-Text-to-Text, 181 downloads, 3 likes
- HuggingFaceTB/SmolVLM-256M-Instruct: Image-Text-to-Text, 486k downloads, 221 likes
- HuggingFaceTB/SmolVLM-Instruct: Image-Text-to-Text, 74.6k downloads, 436 likes
- HuggingFaceTB/SmolVLM-500M-Instruct: Image-Text-to-Text, 32k downloads, 119 likes
- HuggingFaceTB/SmolVLM2-256M-Video-Instruct: Image-Text-to-Text, 27.4k downloads, 55 likes
- HuggingFaceTB/SmolVLM2-500M-Video-Instruct: Image-Text-to-Text, 18.6k downloads, 57 likes
- HuggingFaceTB/SmolVLM2-2.2B-Instruct: Image-Text-to-Text, 82.4k downloads, 170 likes

Datasets (39)

- HuggingFaceTB/stack-edu: 167M rows, 1.96k downloads, 33 likes
- HuggingFaceTB/issues-kaggle-notebooks: 16.1M rows, 917 downloads, 8 likes
- HuggingFaceTB/dclm-edu: 1B rows, 14.6k downloads, 26 likes
- HuggingFaceTB/SmolLM2-intermediate-evals: 582 downloads, 58 likes
- HuggingFaceTB/smoltalk: 2.2M rows, 7.3k downloads, 333 likes
- HuggingFaceTB/smol-smoltalk: 485k rows, 2.79k downloads, 41 likes
- HuggingFaceTB/finemath: 48.3M rows, 16.6k downloads, 308 likes
- HuggingFaceTB/everyday-conversations-llama3.1-2k: 2.38k rows, 567 downloads, 99 likes
- HuggingFaceTB/MagPie-Pro-300k-MT: 300k rows, 69 downloads, 2 likes
- HuggingFaceTB/finemath_contamination_report: 5.33k rows, 104 downloads, 1 like