MiniMA Family

Collection

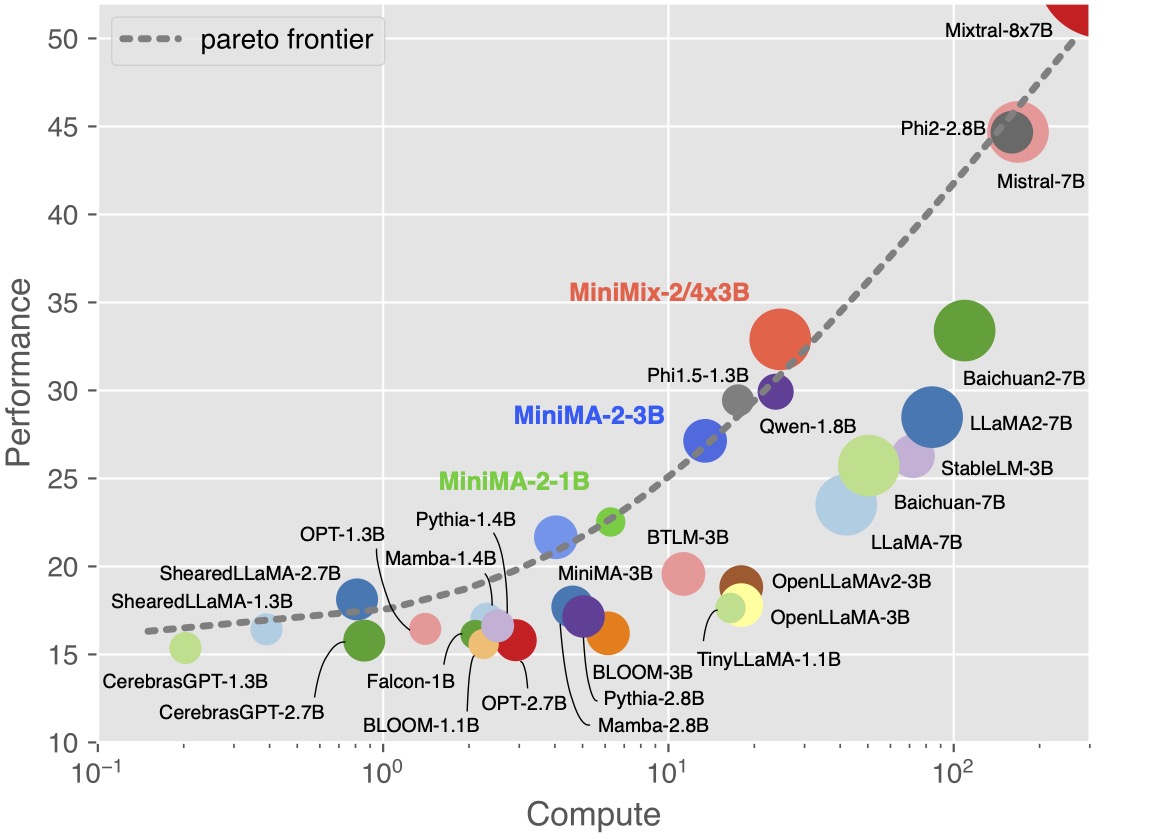

The model family derived from MiniMA


📑 arXiv | 👻 GitHub | 🤗 HuggingFace-MiniMA-3B | 🤗 HuggingFace-MiniChat-3B | 🤖 ModelScope-MiniMA-3B | 🤖 ModelScope-MiniChat-3B | 🤗 HuggingFace-MiniChat-1.5-3B | 🤗 HuggingFace-MiniMA-2-3B | 🤗 HuggingFace-MiniChat-2-3B | 🤗 HuggingFace-MiniMA-2-1B | 🤗 HuggingFace-MiniLoong-3B | 🤗 HuggingFace-MiniMix-2/4x3B

❗ These models are derived from LLaMA-2, so their use must comply with the LLaMA-2 LICENSE.

Standard Benchmarks

| Method | TFLOPs | MMLU (5-shot) | CEval (5-shot) | DROP (3-shot) | HumanEval (0-shot) | BBH (3-shot) | GSM8K (8-shot) |
|---|---|---|---|---|---|---|---|
| Mamba-2.8B | 4.6E9 | 25.58 | 24.74 | 15.72 | 7.32 | 29.37 | 3.49 |
| ShearedLLaMA-2.7B | 0.8E9 | 26.97 | 22.88 | 19.98 | 4.88 | 30.48 | 3.56 |
| BTLM-3B | 11.3E9 | 27.20 | 26.00 | 17.84 | 10.98 | 30.87 | 4.55 |
| StableLM-3B | 72.0E9 | 44.75 | 31.05 | 22.35 | 15.85 | 32.59 | 10.99 |
| Qwen-1.8B | 23.8E9 | 44.05 | 54.75 | 12.97 | 14.02 | 30.80 | 22.97 |
| Phi-2-2.8B | 159.9E9 | 56.74 | 34.03 | 30.74 | 46.95 | 44.13 | 55.42 |
| LLaMA-2-7B | 84.0E9 | 46.00 | 34.40 | 31.57 | 12.80 | 32.02 | 14.10 |
| MiniMA-3B | 4.0E9 | 28.51 | 28.23 | 22.50 | 10.98 | 31.61 | 8.11 |
| MiniMA-2-1B | 6.3E9 | 31.34 | 34.92 | 20.08 | 10.37 | 31.16 | 7.28 |
| MiniMA-2-3B | 13.4E9 | 40.14 | 44.65 | 23.10 | 14.63 | 31.43 | 8.87 |
| MiniMix-2/4x3B | 25.4E9 | 44.35 | 45.77 | 33.78 | 18.29 | 33.60 | 21.61 |
| MiniChat-3B | 4.0E9 | 38.40 | 36.48 | 22.58 | 18.29 | 31.36 | 29.72 |
| MiniChat-2-3B | 13.4E9 | 46.17 | 43.91 | 30.26 | 22.56 | 34.95 | 38.13 |
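
The TFLOPs column is not explained on this page. Several baseline rows happen to match the common 6·N·D rule of thumb for training compute (N = parameters, D = training tokens), so the sketch below treats that as a working assumption, not as the authors' stated method. The parameter counts are the nominal sizes in the model names, and the token budgets (2T for LLaMA-2-7B, 4T for StableLM-3B, 627B for BTLM-3B, 2.2T for Qwen-1.8B) are publicly reported pretraining figures that do not appear on this page. The helper name `training_tflops` is hypothetical.

```python
# Sketch under an assumption: the TFLOPs column is approximate training
# compute from the 6 * N * D rule of thumb. Parameter counts are nominal
# sizes from the model names; token counts are publicly reported
# pretraining budgets, not values taken from this page.

def training_tflops(n_params: float, n_tokens: float) -> float:
    """Hypothetical helper: approximate training compute in TFLOPs via 6 * N * D."""
    flops = 6 * n_params * n_tokens
    return flops / 1e12  # 1 TFLOP = 1e12 floating-point operations


# name -> (nominal parameters, pretraining tokens, value reported in the table)
MODELS = {
    "LLaMA-2-7B": (7e9, 2.0e12, 84.0e9),
    "StableLM-3B": (3e9, 4.0e12, 72.0e9),
    "BTLM-3B": (3e9, 627e9, 11.3e9),
    "Qwen-1.8B": (1.8e9, 2.2e12, 23.8e9),
}

if __name__ == "__main__":
    for name, (n, d, reported) in MODELS.items():
        estimate = training_tflops(n, d)
        # Print the estimate next to the table entry for comparison.
        print(f"{name}: estimated {estimate:.1E} TFLOPs, reported {reported:.1E}")
```

The rule of thumb does not reproduce every row (for example, Phi-2-2.8B or the distilled MiniMA models), so read the column as a rough compute budget rather than an exact figure.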

@article{zhang2023law,
  title={Towards the Law of Capacity Gap in Distilling Language Models},
  author={Zhang, Chen and Song, Dawei and Ye, Zheyu and Gao, Yan},
  year={2023},
  url={https://arxiv.org/abs/2311.07052}
}