Local project

- Light_Novel_Generation.ipynb +1 -0

- Loss_during_training.png +0 -0

- app.py +17 -0

Light_Novel_Generation.ipynb

ADDED

@@ -0,0 +1 @@

+

{"cells":[{"cell_type":"code","execution_count":1,"metadata":{"execution":{"iopub.execute_input":"2024-02-16T13:27:26.463479Z","iopub.status.busy":"2024-02-16T13:27:26.463242Z","iopub.status.idle":"2024-02-16T13:28:31.851782Z","shell.execute_reply":"2024-02-16T13:28:31.850621Z","shell.execute_reply.started":"2024-02-16T13:27:26.463457Z"},"trusted":true},"outputs":[],"source":["!pip install -q bitsandbytes\n","!pip install -q transformers\n","!pip install -q accelerate\n","!pip install -q datasets\n","!pip install -q peft"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["from datasets import load_dataset\n","train_dataset = load_dataset(\"text\", data_files=\"/kaggle/input/light-novel/Light Novels main.txt\", split=\"train[:2000]\")\n","test_dataset = load_dataset(\"text\", data_files=\"/kaggle/input/light-novel/Light Novels main.txt\", split=\"train[2001:2501]\")\n","print(train_dataset)\n","print(test_dataset)\n","#train_small_dataset = train_dataset['train'][:100]\n","#train_small_dataset = train_dataset['train'][:100]\n","#small_dataset"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["from accelerate import FullyShardedDataParallelPlugin, Accelerator\n","from torch.distributed.fsdp.fully_sharded_data_parallel import FullOptimStateDictConfig, FullStateDictConfig\n","\n","fsdp_plugin = FullyShardedDataParallelPlugin(\n"," state_dict_config=FullStateDictConfig(offload_to_cpu=True, rank0_only=False),\n"," optim_state_dict_config=FullOptimStateDictConfig(offload_to_cpu=True, rank0_only=False),\n",")\n","\n","accelerator = Accelerator(fsdp_plugin=fsdp_plugin)"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["import torch\n","from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["\n","\n","base_model_id = 
\"mistralai/Mistral-7B-v0.1\"\n","bnb_config = BitsAndBytesConfig(\n","    load_in_4bit=True,\n","    bnb_4bit_use_double_quant=True,\n","    bnb_4bit_quant_type=\"nf4\",\n","    bnb_4bit_compute_dtype=torch.bfloat16\n",")\n","\n","model = AutoModelForCausalLM.from_pretrained(base_model_id, quantization_config=bnb_config)"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["def formatting_func(example):\n","    # Each dataset row is a dict, so format its text field rather than the dict repr\n","    text = f\"### {example['text']}\"\n","    return text"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["tokenizer = AutoTokenizer.from_pretrained(\n","    base_model_id,\n","    model_max_length=512,\n","    padding_side=\"left\",\n","    add_eos_token=True)\n","\n","tokenizer.pad_token = tokenizer.eos_token"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["max_length = 512 # This was an appropriate max length for my dataset\n","\n","def generate_and_tokenize_prompt2(prompt):\n","    result = tokenizer(\n","        formatting_func(prompt),\n","        truncation=True,\n","        max_length=max_length,\n","        padding=\"max_length\",\n","    )\n","    result[\"labels\"] = result[\"input_ids\"].copy()\n","    return result"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["tokenized_train_dataset = train_dataset.map(generate_and_tokenize_prompt2)\n","tokenized_test_dataset = test_dataset.map(generate_and_tokenize_prompt2)"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["eval_prompt = \"Mathis was a skater boy, she said 'see you later, boy'\""]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["# Init an eval tokenizer that doesn't add padding or eos token\n","eval_tokenizer = AutoTokenizer.from_pretrained(\n","    base_model_id,\n","    add_bos_token=True,\n",")\n","\n","model_input = eval_tokenizer(eval_prompt, 
return_tensors=\"pt\").to(\"cuda\")\n","\n","model.eval()\n","with torch.no_grad():\n","    print(eval_tokenizer.decode(model.generate(**model_input, max_new_tokens=256, repetition_penalty=1.15)[0], skip_special_tokens=True))"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["from peft import prepare_model_for_kbit_training\n","\n","model.gradient_checkpointing_enable()\n","model = prepare_model_for_kbit_training(model)"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["def print_trainable_parameters(model):\n","    \"\"\"\n","    Prints the number of trainable parameters in the model.\n","    \"\"\"\n","    trainable_params = 0\n","    all_param = 0\n","    for _, param in model.named_parameters():\n","        all_param += param.numel()\n","        if param.requires_grad:\n","            trainable_params += param.numel()\n","    print(\n","        f\"trainable params: {trainable_params} || all params: {all_param} || trainable%: {100 * trainable_params / all_param}\"\n","    )"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["print(model)"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["from peft import LoraConfig, get_peft_model\n","\n","config = LoraConfig(\n","    r=32,\n","    lora_alpha=64,\n","    target_modules=[\n","        \"q_proj\",\n","        \"k_proj\",\n","        \"v_proj\",\n","        \"o_proj\",\n","        \"gate_proj\",\n","        \"up_proj\",\n","        \"down_proj\",\n","        \"lm_head\",\n","    ],\n","    bias=\"none\",\n","    lora_dropout=0.05, # Conventional\n","    task_type=\"CAUSAL_LM\",\n",")\n","\n","model = get_peft_model(model, config)\n","print_trainable_parameters(model)"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["if torch.cuda.device_count() > 1: # If more than 1 GPU\n","    model.is_parallelizable = True\n","    model.model_parallel = True\n","\n","model = 
accelerator.prepare_model(model)"]},{"cell_type":"code","execution_count":null,"metadata":{"trusted":true},"outputs":[],"source":["import transformers\n","from datetime import datetime\n","\n","project = \"flo-finetune\"\n","base_model_name = \"mistral\"\n","run_name = base_model_name + \"-\" + project\n","output_dir = \"./\" + run_name\n","\n","trainer = transformers.Trainer(\n","    model=model,\n","    train_dataset=tokenized_train_dataset,\n","    eval_dataset=tokenized_test_dataset,\n","    args=transformers.TrainingArguments(\n","        output_dir=output_dir,\n","        warmup_steps=1,\n","        per_device_train_batch_size=2,\n","        gradient_accumulation_steps=1,\n","        gradient_checkpointing=True,\n","        max_steps=500,\n","        learning_rate=2.5e-5,\n","        optim=\"paged_adamw_8bit\",\n","        logging_steps=25, # Report the training loss every 25 steps\n","        logging_dir=\"./logs\", # Directory for storing logs\n","        save_strategy=\"steps\", # Save checkpoints on a step schedule\n","        save_steps=25, # Save a checkpoint every 25 steps\n","        evaluation_strategy=\"steps\", # Evaluate on a step schedule\n","        eval_steps=25, # Evaluate every 25 steps\n","        do_eval=True, # Run evaluation on the eval dataset\n","        #report_to=\"wandb\", # Uncomment to report to Weights & Biases\n","        run_name=f\"{run_name}-{datetime.now().strftime('%Y-%m-%d-%H-%M')}\" # Name of the W&B run (optional)\n","    ),\n","    data_collator=transformers.DataCollatorForLanguageModeling(tokenizer, mlm=False),\n",")\n","\n","model.config.use_cache = False # silence the warnings. 
Please re-enable for inference!\n","trainer.train()"]},{"cell_type":"markdown","metadata":{},"source":["## Local test of our model"]},{"cell_type":"code","execution_count":null,"metadata":{"execution":{"iopub.execute_input":"2024-02-16T15:06:56.126561Z","iopub.status.busy":"2024-02-16T15:06:56.125786Z"},"trusted":true},"outputs":[],"source":["!pip install -q bitsandbytes\n","!pip install -q transformers\n","!pip install -q accelerate\n","!pip install -q datasets\n","!pip install -q peft"]},{"cell_type":"code","execution_count":1,"metadata":{"execution":{"iopub.execute_input":"2024-02-16T15:06:44.955244Z","iopub.status.busy":"2024-02-16T15:06:44.954466Z","iopub.status.idle":"2024-02-16T15:06:53.409665Z","shell.execute_reply":"2024-02-16T15:06:53.408076Z","shell.execute_reply.started":"2024-02-16T15:06:44.955205Z"},"trusted":true},"outputs":[{"name":"stderr","output_type":"stream","text":["2024-02-16 15:06:50.797593: E external/local_xla/xla/stream_executor/cuda/cuda_dnn.cc:9261] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered\n","2024-02-16 15:06:50.797725: E external/local_xla/xla/stream_executor/cuda/cuda_fft.cc:607] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered\n","2024-02-16 15:06:50.934033: E external/local_xla/xla/stream_executor/cuda/cuda_blas.cc:1515] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered\n"]},{"ename":"KeyboardInterrupt","evalue":"","output_type":"error","traceback":["\u001b[0;31m---------------------------------------------------------------------------\u001b[0m","\u001b[0;31mKeyboardInterrupt\u001b[0m Traceback (most recent call last)","Cell \u001b[0;32mIn[1], line 2\u001b[0m\n\u001b[1;32m 1\u001b[0m \u001b[38;5;28;01mimport\u001b[39;00m \u001b[38;5;21;01mtorch\u001b[39;00m\n\u001b[0;32m----> 2\u001b[0m 
\u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtransformers\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig, pipeline, AutoModel\n","File \u001b[0;32m<frozen importlib._bootstrap>:1075\u001b[0m, in \u001b[0;36m_handle_fromlist\u001b[0;34m(module, fromlist, import_, recursive)\u001b[0m\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/transformers/utils/import_utils.py:1354\u001b[0m, in \u001b[0;36m_LazyModule.__getattr__\u001b[0;34m(self, name)\u001b[0m\n\u001b[1;32m 1352\u001b[0m value \u001b[38;5;241m=\u001b[39m \u001b[38;5;28mself\u001b[39m\u001b[38;5;241m.\u001b[39m_get_module(name)\n\u001b[1;32m 1353\u001b[0m \u001b[38;5;28;01melif\u001b[39;00m name \u001b[38;5;129;01min\u001b[39;00m \u001b[38;5;28mself\u001b[39m\u001b[38;5;241m.\u001b[39m_class_to_module\u001b[38;5;241m.\u001b[39mkeys():\n\u001b[0;32m-> 1354\u001b[0m module \u001b[38;5;241m=\u001b[39m \u001b[38;5;28;43mself\u001b[39;49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43m_get_module\u001b[49m\u001b[43m(\u001b[49m\u001b[38;5;28;43mself\u001b[39;49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43m_class_to_module\u001b[49m\u001b[43m[\u001b[49m\u001b[43mname\u001b[49m\u001b[43m]\u001b[49m\u001b[43m)\u001b[49m\n\u001b[1;32m 1355\u001b[0m value \u001b[38;5;241m=\u001b[39m \u001b[38;5;28mgetattr\u001b[39m(module, name)\n\u001b[1;32m 1356\u001b[0m \u001b[38;5;28;01melse\u001b[39;00m:\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/transformers/utils/import_utils.py:1364\u001b[0m, in \u001b[0;36m_LazyModule._get_module\u001b[0;34m(self, module_name)\u001b[0m\n\u001b[1;32m 1362\u001b[0m \u001b[38;5;28;01mdef\u001b[39;00m \u001b[38;5;21m_get_module\u001b[39m(\u001b[38;5;28mself\u001b[39m, module_name: \u001b[38;5;28mstr\u001b[39m):\n\u001b[1;32m 1363\u001b[0m \u001b[38;5;28;01mtry\u001b[39;00m:\n\u001b[0;32m-> 1364\u001b[0m \u001b[38;5;28;01mreturn\u001b[39;00m 
\u001b[43mimportlib\u001b[49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43mimport_module\u001b[49m\u001b[43m(\u001b[49m\u001b[38;5;124;43m\"\u001b[39;49m\u001b[38;5;124;43m.\u001b[39;49m\u001b[38;5;124;43m\"\u001b[39;49m\u001b[43m \u001b[49m\u001b[38;5;241;43m+\u001b[39;49m\u001b[43m \u001b[49m\u001b[43mmodule_name\u001b[49m\u001b[43m,\u001b[49m\u001b[43m \u001b[49m\u001b[38;5;28;43mself\u001b[39;49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[38;5;18;43m__name__\u001b[39;49m\u001b[43m)\u001b[49m\n\u001b[1;32m 1365\u001b[0m \u001b[38;5;28;01mexcept\u001b[39;00m \u001b[38;5;167;01mException\u001b[39;00m \u001b[38;5;28;01mas\u001b[39;00m e:\n\u001b[1;32m 1366\u001b[0m \u001b[38;5;28;01mraise\u001b[39;00m \u001b[38;5;167;01mRuntimeError\u001b[39;00m(\n\u001b[1;32m 1367\u001b[0m \u001b[38;5;124mf\u001b[39m\u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mFailed to import \u001b[39m\u001b[38;5;132;01m{\u001b[39;00m\u001b[38;5;28mself\u001b[39m\u001b[38;5;241m.\u001b[39m\u001b[38;5;18m__name__\u001b[39m\u001b[38;5;132;01m}\u001b[39;00m\u001b[38;5;124m.\u001b[39m\u001b[38;5;132;01m{\u001b[39;00mmodule_name\u001b[38;5;132;01m}\u001b[39;00m\u001b[38;5;124m because of the following error (look up to see its\u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 1368\u001b[0m \u001b[38;5;124mf\u001b[39m\u001b[38;5;124m\"\u001b[39m\u001b[38;5;124m traceback):\u001b[39m\u001b[38;5;130;01m\\n\u001b[39;00m\u001b[38;5;132;01m{\u001b[39;00me\u001b[38;5;132;01m}\u001b[39;00m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 1369\u001b[0m ) \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01me\u001b[39;00m\n","File \u001b[0;32m/opt/conda/lib/python3.10/importlib/__init__.py:126\u001b[0m, in \u001b[0;36mimport_module\u001b[0;34m(name, package)\u001b[0m\n\u001b[1;32m 124\u001b[0m \u001b[38;5;28;01mbreak\u001b[39;00m\n\u001b[1;32m 125\u001b[0m level \u001b[38;5;241m+\u001b[39m\u001b[38;5;241m=\u001b[39m \u001b[38;5;241m1\u001b[39m\n\u001b[0;32m--> 126\u001b[0m \u001b[38;5;28;01mreturn\u001b[39;00m 
\u001b[43m_bootstrap\u001b[49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43m_gcd_import\u001b[49m\u001b[43m(\u001b[49m\u001b[43mname\u001b[49m\u001b[43m[\u001b[49m\u001b[43mlevel\u001b[49m\u001b[43m:\u001b[49m\u001b[43m]\u001b[49m\u001b[43m,\u001b[49m\u001b[43m \u001b[49m\u001b[43mpackage\u001b[49m\u001b[43m,\u001b[49m\u001b[43m \u001b[49m\u001b[43mlevel\u001b[49m\u001b[43m)\u001b[49m\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/transformers/pipelines/__init__.py:28\u001b[0m\n\u001b[1;32m 26\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mdynamic_module_utils\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m get_class_from_dynamic_module\n\u001b[1;32m 27\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mfeature_extraction_utils\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m PreTrainedFeatureExtractor\n\u001b[0;32m---> 28\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mimage_processing_utils\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m BaseImageProcessor\n\u001b[1;32m 29\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mmodels\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mauto\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mconfiguration_auto\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m AutoConfig\n\u001b[1;32m 30\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mmodels\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mauto\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mfeature_extraction_auto\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m FEATURE_EXTRACTOR_MAPPING, AutoFeatureExtractor\n","File 
\u001b[0;32m/opt/conda/lib/python3.10/site-packages/transformers/image_processing_utils.py:28\u001b[0m\n\u001b[1;32m 26\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mdynamic_module_utils\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m custom_object_save\n\u001b[1;32m 27\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mfeature_extraction_utils\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m BatchFeature \u001b[38;5;28;01mas\u001b[39;00m BaseBatchFeature\n\u001b[0;32m---> 28\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mimage_transforms\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m center_crop, normalize, rescale\n\u001b[1;32m 29\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mimage_utils\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m ChannelDimension\n\u001b[1;32m 30\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mutils\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m (\n\u001b[1;32m 31\u001b[0m IMAGE_PROCESSOR_NAME,\n\u001b[1;32m 32\u001b[0m PushToHubMixin,\n\u001b[0;32m (...)\u001b[0m\n\u001b[1;32m 40\u001b[0m logging,\n\u001b[1;32m 41\u001b[0m )\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/transformers/image_transforms.py:47\u001b[0m\n\u001b[1;32m 44\u001b[0m \u001b[38;5;28;01mimport\u001b[39;00m \u001b[38;5;21;01mtorch\u001b[39;00m\n\u001b[1;32m 46\u001b[0m \u001b[38;5;28;01mif\u001b[39;00m is_tf_available():\n\u001b[0;32m---> 47\u001b[0m \u001b[38;5;28;01mimport\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m \u001b[38;5;28;01mas\u001b[39;00m \u001b[38;5;21;01mtf\u001b[39;00m\n\u001b[1;32m 49\u001b[0m \u001b[38;5;28;01mif\u001b[39;00m is_flax_available():\n\u001b[1;32m 50\u001b[0m \u001b[38;5;28;01mimport\u001b[39;00m 
\u001b[38;5;21;01mjax\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mnumpy\u001b[39;00m \u001b[38;5;28;01mas\u001b[39;00m \u001b[38;5;21;01mjnp\u001b[39;00m\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/tensorflow/__init__.py:48\u001b[0m\n\u001b[1;32m 45\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m tf2 \u001b[38;5;28;01mas\u001b[39;00m _tf2\n\u001b[1;32m 46\u001b[0m _tf2\u001b[38;5;241m.\u001b[39menable()\n\u001b[0;32m---> 48\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m_api\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mv2\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m __internal__\n\u001b[1;32m 49\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m_api\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mv2\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m __operators__\n\u001b[1;32m 50\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m_api\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mv2\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m audio\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/tensorflow/_api/v2/__internal__/__init__.py:8\u001b[0m\n\u001b[1;32m 3\u001b[0m \u001b[38;5;124;03m\"\"\"Public API for tf._api.v2.__internal__ namespace\u001b[39;00m\n\u001b[1;32m 4\u001b[0m \u001b[38;5;124;03m\"\"\"\u001b[39;00m\n\u001b[1;32m 6\u001b[0m \u001b[38;5;28;01mimport\u001b[39;00m \u001b[38;5;21;01msys\u001b[39;00m \u001b[38;5;28;01mas\u001b[39;00m \u001b[38;5;21;01m_sys\u001b[39;00m\n\u001b[0;32m----> 8\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m 
\u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m_api\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mv2\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m__internal__\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m autograph\n\u001b[1;32m 9\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m_api\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mv2\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m__internal__\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m decorator\n\u001b[1;32m 10\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m_api\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mv2\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01m__internal__\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m dispatch\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/tensorflow/_api/v2/__internal__/autograph/__init__.py:8\u001b[0m\n\u001b[1;32m 3\u001b[0m \u001b[38;5;124;03m\"\"\"Public API for tf._api.v2.__internal__.autograph namespace\u001b[39;00m\n\u001b[1;32m 4\u001b[0m \u001b[38;5;124;03m\"\"\"\u001b[39;00m\n\u001b[1;32m 6\u001b[0m \u001b[38;5;28;01mimport\u001b[39;00m \u001b[38;5;21;01msys\u001b[39;00m \u001b[38;5;28;01mas\u001b[39;00m \u001b[38;5;21;01m_sys\u001b[39;00m\n\u001b[0;32m----> 8\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mautograph\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mcore\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mag_ctx\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m control_status_ctx \u001b[38;5;66;03m# line: 34\u001b[39;00m\n\u001b[1;32m 
9\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mautograph\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mimpl\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mapi\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m tf_convert \u001b[38;5;66;03m# line: 493\u001b[39;00m\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/tensorflow/python/autograph/core/ag_ctx.py:21\u001b[0m\n\u001b[1;32m 18\u001b[0m \u001b[38;5;28;01mimport\u001b[39;00m \u001b[38;5;21;01minspect\u001b[39;00m\n\u001b[1;32m 19\u001b[0m \u001b[38;5;28;01mimport\u001b[39;00m \u001b[38;5;21;01mthreading\u001b[39;00m\n\u001b[0;32m---> 21\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mautograph\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mutils\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m ag_logging\n\u001b[1;32m 22\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mutil\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mtf_export\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m tf_export\n\u001b[1;32m 25\u001b[0m stacks \u001b[38;5;241m=\u001b[39m threading\u001b[38;5;241m.\u001b[39mlocal()\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/tensorflow/python/autograph/utils/__init__.py:17\u001b[0m\n\u001b[1;32m 1\u001b[0m \u001b[38;5;66;03m# Copyright 2016 The TensorFlow Authors. 
All Rights Reserved.\u001b[39;00m\n\u001b[1;32m 2\u001b[0m \u001b[38;5;66;03m#\u001b[39;00m\n\u001b[1;32m 3\u001b[0m \u001b[38;5;66;03m# Licensed under the Apache License, Version 2.0 (the \"License\");\u001b[39;00m\n\u001b[0;32m (...)\u001b[0m\n\u001b[1;32m 13\u001b[0m \u001b[38;5;66;03m# limitations under the License.\u001b[39;00m\n\u001b[1;32m 14\u001b[0m \u001b[38;5;66;03m# ==============================================================================\u001b[39;00m\n\u001b[1;32m 15\u001b[0m \u001b[38;5;124;03m\"\"\"Utility module that contains APIs usable in the generated code.\"\"\"\u001b[39;00m\n\u001b[0;32m---> 17\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mautograph\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mutils\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mcontext_managers\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m control_dependency_on_returns\n\u001b[1;32m 18\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mautograph\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mutils\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mmisc\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m alias_tensors\n\u001b[1;32m 19\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mautograph\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mutils\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mtensor_list\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m dynamic_list_append\n","File 
\u001b[0;32m/opt/conda/lib/python3.10/site-packages/tensorflow/python/autograph/utils/context_managers.py:19\u001b[0m\n\u001b[1;32m 15\u001b[0m \u001b[38;5;124;03m\"\"\"Various context managers.\"\"\"\u001b[39;00m\n\u001b[1;32m 17\u001b[0m \u001b[38;5;28;01mimport\u001b[39;00m \u001b[38;5;21;01mcontextlib\u001b[39;00m\n\u001b[0;32m---> 19\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mframework\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m ops\n\u001b[1;32m 20\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mops\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m tensor_array_ops\n\u001b[1;32m 23\u001b[0m \u001b[38;5;28;01mdef\u001b[39;00m \u001b[38;5;21mcontrol_dependency_on_returns\u001b[39m(return_value):\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/tensorflow/python/framework/ops.py:40\u001b[0m\n\u001b[1;32m 36\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mcore\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mprotobuf\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m config_pb2\n\u001b[1;32m 37\u001b[0m \u001b[38;5;66;03m# pywrap_tensorflow must be imported first to avoid protobuf issues.\u001b[39;00m\n\u001b[1;32m 38\u001b[0m \u001b[38;5;66;03m# (b/143110113)\u001b[39;00m\n\u001b[1;32m 39\u001b[0m \u001b[38;5;66;03m# pylint: disable=invalid-import-order,g-bad-import-order,unused-import\u001b[39;00m\n\u001b[0;32m---> 40\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m 
pywrap_tensorflow\n\u001b[1;32m 41\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m pywrap_tfe\n\u001b[1;32m 42\u001b[0m \u001b[38;5;66;03m# pylint: enable=invalid-import-order,g-bad-import-order,unused-import\u001b[39;00m\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/tensorflow/python/pywrap_tensorflow.py:34\u001b[0m\n\u001b[1;32m 29\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mplatform\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m self_check\n\u001b[1;32m 31\u001b[0m \u001b[38;5;66;03m# TODO(mdan): Cleanup antipattern: import for side effects.\u001b[39;00m\n\u001b[1;32m 32\u001b[0m \n\u001b[1;32m 33\u001b[0m \u001b[38;5;66;03m# Perform pre-load sanity checks in order to produce a more actionable error.\u001b[39;00m\n\u001b[0;32m---> 34\u001b[0m \u001b[43mself_check\u001b[49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43mpreload_check\u001b[49m\u001b[43m(\u001b[49m\u001b[43m)\u001b[49m\n\u001b[1;32m 36\u001b[0m \u001b[38;5;66;03m# pylint: disable=wildcard-import,g-import-not-at-top,unused-import,line-too-long\u001b[39;00m\n\u001b[1;32m 38\u001b[0m \u001b[38;5;28;01mtry\u001b[39;00m:\n\u001b[1;32m 39\u001b[0m \u001b[38;5;66;03m# This import is expected to fail if there is an explicit shared object\u001b[39;00m\n\u001b[1;32m 40\u001b[0m \u001b[38;5;66;03m# dependency (with_framework_lib=true), since we do not need RTLD_GLOBAL.\u001b[39;00m\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/tensorflow/python/platform/self_check.py:63\u001b[0m, in \u001b[0;36mpreload_check\u001b[0;34m()\u001b[0m\n\u001b[1;32m 50\u001b[0m \u001b[38;5;28;01mraise\u001b[39;00m \u001b[38;5;167;01mImportError\u001b[39;00m(\n\u001b[1;32m 51\u001b[0m 
\u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mCould not find the DLL(s) \u001b[39m\u001b[38;5;132;01m%r\u001b[39;00m\u001b[38;5;124m. TensorFlow requires that these DLLs \u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 52\u001b[0m \u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mbe installed in a directory that is named in your \u001b[39m\u001b[38;5;132;01m%%\u001b[39;00m\u001b[38;5;124mPATH\u001b[39m\u001b[38;5;132;01m%%\u001b[39;00m\u001b[38;5;124m \u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[0;32m (...)\u001b[0m\n\u001b[1;32m 56\u001b[0m \u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mhttps://support.microsoft.com/help/2977003/the-latest-supported-visual-c-downloads\u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 57\u001b[0m \u001b[38;5;241m%\u001b[39m \u001b[38;5;124m\"\u001b[39m\u001b[38;5;124m or \u001b[39m\u001b[38;5;124m\"\u001b[39m\u001b[38;5;241m.\u001b[39mjoin(missing))\n\u001b[1;32m 58\u001b[0m \u001b[38;5;28;01melse\u001b[39;00m:\n\u001b[1;32m 59\u001b[0m \u001b[38;5;66;03m# Load a library that performs CPU feature guard checking. 
Doing this here\u001b[39;00m\n\u001b[1;32m 60\u001b[0m \u001b[38;5;66;03m# as a preload check makes it more likely that we detect any CPU feature\u001b[39;00m\n\u001b[1;32m 61\u001b[0m \u001b[38;5;66;03m# incompatibilities before we trigger them (which would typically result in\u001b[39;00m\n\u001b[1;32m 62\u001b[0m \u001b[38;5;66;03m# SIGILL).\u001b[39;00m\n\u001b[0;32m---> 63\u001b[0m \u001b[38;5;28;01mfrom\u001b[39;00m \u001b[38;5;21;01mtensorflow\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mpython\u001b[39;00m\u001b[38;5;21;01m.\u001b[39;00m\u001b[38;5;21;01mplatform\u001b[39;00m \u001b[38;5;28;01mimport\u001b[39;00m _pywrap_cpu_feature_guard\n\u001b[1;32m 64\u001b[0m _pywrap_cpu_feature_guard\u001b[38;5;241m.\u001b[39mInfoAboutUnusedCPUFeatures()\n","\u001b[0;31mKeyboardInterrupt\u001b[0m: "]}],"source":["import torch\n","from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig, pipeline, AutoModel"]},{"cell_type":"code","execution_count":9,"metadata":{"execution":{"iopub.execute_input":"2024-02-16T15:05:04.590002Z","iopub.status.busy":"2024-02-16T15:05:04.589519Z","iopub.status.idle":"2024-02-16T15:05:05.697199Z","shell.execute_reply":"2024-02-16T15:05:05.695859Z","shell.execute_reply.started":"2024-02-16T15:05:04.589969Z"},"trusted":true},"outputs":[{"data":{"application/vnd.jupyter.widget-view+json":{"model_id":"b1f6468fd3e84d43be51d5aad88d5a67","version_major":2,"version_minor":0},"text/plain":["config.json: 0%| | 0.00/571 [00:00<?, ?B/s]"]},"metadata":{},"output_type":"display_data"},{"ename":"ImportError","evalue":"Using `load_in_8bit=True` requires Accelerate: `pip install accelerate` and the latest version of bitsandbytes `pip install -i https://test.pypi.org/simple/ bitsandbytes` or `pip install bitsandbytes`.","output_type":"error","traceback":["\u001b[0;31m---------------------------------------------------------------------------\u001b[0m","\u001b[0;31mImportError\u001b[0m Traceback (most recent call 
last)","Cell \u001b[0;32mIn[9], line 10\u001b[0m\n\u001b[1;32m 2\u001b[0m \u001b[38;5;66;03m#base_model_id = \"FloVolo/mistral-flo-finetune-2-T4\"\u001b[39;00m\n\u001b[1;32m 3\u001b[0m bnb_config \u001b[38;5;241m=\u001b[39m BitsAndBytesConfig(\n\u001b[1;32m 4\u001b[0m load_in_4bit\u001b[38;5;241m=\u001b[39m\u001b[38;5;28;01mTrue\u001b[39;00m,\n\u001b[1;32m 5\u001b[0m bnb_4bit_use_double_quant\u001b[38;5;241m=\u001b[39m\u001b[38;5;28;01mTrue\u001b[39;00m,\n\u001b[1;32m 6\u001b[0m bnb_4bit_quant_type\u001b[38;5;241m=\u001b[39m\u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mnf4\u001b[39m\u001b[38;5;124m\"\u001b[39m,\n\u001b[1;32m 7\u001b[0m bnb_4bit_compute_dtype\u001b[38;5;241m=\u001b[39mtorch\u001b[38;5;241m.\u001b[39mbfloat16\n\u001b[1;32m 8\u001b[0m )\n\u001b[0;32m---> 10\u001b[0m model \u001b[38;5;241m=\u001b[39m \u001b[43mAutoModelForCausalLM\u001b[49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43mfrom_pretrained\u001b[49m\u001b[43m(\u001b[49m\u001b[43mbase_model_id\u001b[49m\u001b[43m,\u001b[49m\u001b[43m \u001b[49m\u001b[43mquantization_config\u001b[49m\u001b[38;5;241;43m=\u001b[39;49m\u001b[43mbnb_config\u001b[49m\u001b[43m)\u001b[49m\n\u001b[1;32m 12\u001b[0m tokenizer \u001b[38;5;241m=\u001b[39m AutoTokenizer\u001b[38;5;241m.\u001b[39mfrom_pretrained(\n\u001b[1;32m 13\u001b[0m base_model_id,\n\u001b[1;32m 14\u001b[0m add_bos_token\u001b[38;5;241m=\u001b[39m\u001b[38;5;28;01mTrue\u001b[39;00m,\n\u001b[1;32m 15\u001b[0m )\n\u001b[1;32m 17\u001b[0m tokenizer\u001b[38;5;241m.\u001b[39mpad_token \u001b[38;5;241m=\u001b[39m tokenizer\u001b[38;5;241m.\u001b[39meos_token\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/transformers/models/auto/auto_factory.py:566\u001b[0m, in \u001b[0;36m_BaseAutoModelClass.from_pretrained\u001b[0;34m(cls, pretrained_model_name_or_path, *model_args, **kwargs)\u001b[0m\n\u001b[1;32m 564\u001b[0m \u001b[38;5;28;01melif\u001b[39;00m \u001b[38;5;28mtype\u001b[39m(config) \u001b[38;5;129;01min\u001b[39;00m 
\u001b[38;5;28mcls\u001b[39m\u001b[38;5;241m.\u001b[39m_model_mapping\u001b[38;5;241m.\u001b[39mkeys():\n\u001b[1;32m 565\u001b[0m model_class \u001b[38;5;241m=\u001b[39m _get_model_class(config, \u001b[38;5;28mcls\u001b[39m\u001b[38;5;241m.\u001b[39m_model_mapping)\n\u001b[0;32m--> 566\u001b[0m \u001b[38;5;28;01mreturn\u001b[39;00m \u001b[43mmodel_class\u001b[49m\u001b[38;5;241;43m.\u001b[39;49m\u001b[43mfrom_pretrained\u001b[49m\u001b[43m(\u001b[49m\n\u001b[1;32m 567\u001b[0m \u001b[43m \u001b[49m\u001b[43mpretrained_model_name_or_path\u001b[49m\u001b[43m,\u001b[49m\u001b[43m \u001b[49m\u001b[38;5;241;43m*\u001b[39;49m\u001b[43mmodel_args\u001b[49m\u001b[43m,\u001b[49m\u001b[43m \u001b[49m\u001b[43mconfig\u001b[49m\u001b[38;5;241;43m=\u001b[39;49m\u001b[43mconfig\u001b[49m\u001b[43m,\u001b[49m\u001b[43m \u001b[49m\u001b[38;5;241;43m*\u001b[39;49m\u001b[38;5;241;43m*\u001b[39;49m\u001b[43mhub_kwargs\u001b[49m\u001b[43m,\u001b[49m\u001b[43m \u001b[49m\u001b[38;5;241;43m*\u001b[39;49m\u001b[38;5;241;43m*\u001b[39;49m\u001b[43mkwargs\u001b[49m\n\u001b[1;32m 568\u001b[0m \u001b[43m \u001b[49m\u001b[43m)\u001b[49m\n\u001b[1;32m 569\u001b[0m \u001b[38;5;28;01mraise\u001b[39;00m \u001b[38;5;167;01mValueError\u001b[39;00m(\n\u001b[1;32m 570\u001b[0m \u001b[38;5;124mf\u001b[39m\u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mUnrecognized configuration class \u001b[39m\u001b[38;5;132;01m{\u001b[39;00mconfig\u001b[38;5;241m.\u001b[39m\u001b[38;5;18m__class__\u001b[39m\u001b[38;5;132;01m}\u001b[39;00m\u001b[38;5;124m for this kind of AutoModel: \u001b[39m\u001b[38;5;132;01m{\u001b[39;00m\u001b[38;5;28mcls\u001b[39m\u001b[38;5;241m.\u001b[39m\u001b[38;5;18m__name__\u001b[39m\u001b[38;5;132;01m}\u001b[39;00m\u001b[38;5;124m.\u001b[39m\u001b[38;5;130;01m\\n\u001b[39;00m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 571\u001b[0m \u001b[38;5;124mf\u001b[39m\u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mModel type should be one of 
\u001b[39m\u001b[38;5;132;01m{\u001b[39;00m\u001b[38;5;124m'\u001b[39m\u001b[38;5;124m, \u001b[39m\u001b[38;5;124m'\u001b[39m\u001b[38;5;241m.\u001b[39mjoin(c\u001b[38;5;241m.\u001b[39m\u001b[38;5;18m__name__\u001b[39m\u001b[38;5;250m \u001b[39m\u001b[38;5;28;01mfor\u001b[39;00m\u001b[38;5;250m \u001b[39mc\u001b[38;5;250m \u001b[39m\u001b[38;5;129;01min\u001b[39;00m\u001b[38;5;250m \u001b[39m\u001b[38;5;28mcls\u001b[39m\u001b[38;5;241m.\u001b[39m_model_mapping\u001b[38;5;241m.\u001b[39mkeys())\u001b[38;5;132;01m}\u001b[39;00m\u001b[38;5;124m.\u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 572\u001b[0m )\n","File \u001b[0;32m/opt/conda/lib/python3.10/site-packages/transformers/modeling_utils.py:3034\u001b[0m, in \u001b[0;36mPreTrainedModel.from_pretrained\u001b[0;34m(cls, pretrained_model_name_or_path, config, cache_dir, ignore_mismatched_sizes, force_download, local_files_only, token, revision, use_safetensors, *model_args, **kwargs)\u001b[0m\n\u001b[1;32m 3032\u001b[0m \u001b[38;5;28;01mraise\u001b[39;00m \u001b[38;5;167;01mRuntimeError\u001b[39;00m(\u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mNo GPU found. 
A GPU is needed for quantization.\u001b[39m\u001b[38;5;124m\"\u001b[39m)\n\u001b[1;32m 3033\u001b[0m \u001b[38;5;28;01mif\u001b[39;00m \u001b[38;5;129;01mnot\u001b[39;00m (is_accelerate_available() \u001b[38;5;129;01mand\u001b[39;00m is_bitsandbytes_available()):\n\u001b[0;32m-> 3034\u001b[0m \u001b[38;5;28;01mraise\u001b[39;00m \u001b[38;5;167;01mImportError\u001b[39;00m(\n\u001b[1;32m 3035\u001b[0m \u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mUsing `load_in_8bit=True` requires Accelerate: `pip install accelerate` and the latest version of\u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 3036\u001b[0m \u001b[38;5;124m\"\u001b[39m\u001b[38;5;124m bitsandbytes `pip install -i https://test.pypi.org/simple/ bitsandbytes` or\u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 3037\u001b[0m \u001b[38;5;124m\"\u001b[39m\u001b[38;5;124m `pip install bitsandbytes`.\u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 3038\u001b[0m )\n\u001b[1;32m 3040\u001b[0m \u001b[38;5;28;01mif\u001b[39;00m torch_dtype \u001b[38;5;129;01mis\u001b[39;00m \u001b[38;5;28;01mNone\u001b[39;00m:\n\u001b[1;32m 3041\u001b[0m \u001b[38;5;66;03m# We force the `dtype` to be float16, this is a requirement from `bitsandbytes`\u001b[39;00m\n\u001b[1;32m 3042\u001b[0m logger\u001b[38;5;241m.\u001b[39minfo(\n\u001b[1;32m 3043\u001b[0m \u001b[38;5;124mf\u001b[39m\u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mOverriding torch_dtype=\u001b[39m\u001b[38;5;132;01m{\u001b[39;00mtorch_dtype\u001b[38;5;132;01m}\u001b[39;00m\u001b[38;5;124m with `torch_dtype=torch.float16` due to \u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 3044\u001b[0m \u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mrequirements of `bitsandbytes` to enable model loading in 8-bit or 4-bit. 
\u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 3045\u001b[0m \u001b[38;5;124m\"\u001b[39m\u001b[38;5;124mPass your own torch_dtype to specify the dtype of the remaining non-linear layers or pass\u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 3046\u001b[0m \u001b[38;5;124m\"\u001b[39m\u001b[38;5;124m torch_dtype=torch.float16 to remove this warning.\u001b[39m\u001b[38;5;124m\"\u001b[39m\n\u001b[1;32m 3047\u001b[0m )\n","\u001b[0;31mImportError\u001b[0m: Using `load_in_8bit=True` requires Accelerate: `pip install accelerate` and the latest version of bitsandbytes `pip install -i https://test.pypi.org/simple/ bitsandbytes` or `pip install bitsandbytes`."]}],"source":["base_model_id = \"mistralai/Mistral-7B-v0.1\"\n","#base_model_id = \"FloVolo/mistral-flo-finetune-2-T4\"\n","bnb_config = BitsAndBytesConfig(\n"," load_in_4bit=True,\n"," bnb_4bit_use_double_quant=True,\n"," bnb_4bit_quant_type=\"nf4\",\n"," bnb_4bit_compute_dtype=torch.bfloat16\n",")\n","\n","model = AutoModelForCausalLM.from_pretrained(base_model_id, quantization_config=bnb_config)\n","\n","tokenizer = AutoTokenizer.from_pretrained(\n"," base_model_id,\n"," add_bos_token=True,\n",")\n","\n","tokenizer.pad_token = tokenizer.eos_token"]},{"cell_type":"code","execution_count":13,"metadata":{"execution":{"iopub.execute_input":"2024-02-16T14:39:54.197400Z","iopub.status.busy":"2024-02-16T14:39:54.196607Z","iopub.status.idle":"2024-02-16T14:40:28.867369Z","shell.execute_reply":"2024-02-16T14:40:28.866351Z","shell.execute_reply.started":"2024-02-16T14:39:54.197361Z"},"trusted":true},"outputs":[{"name":"stderr","output_type":"stream","text":["Setting `pad_token_id` to `eos_token_id`:2 for open-end generation.\n","A decoder-only architecture is being used, but right-padding was detected! For correct generation results, please set `padding_side='left'` when initializing the tokenizer.\n"]},{"name":"stdout","output_type":"stream","text":["Here's the list of why I'm a huge dumbass :\n","\n","1. 
I didn't know that you can't use a 3rd party app to get your phone number from Facebook, so I used one and got my account banned for 2 weeks.\n","\n","2. I was trying to find out how to make an app on Android Studio, but I couldn't figure it out because I don't have any idea about coding or anything like that.\n","\n","3. I tried to download a game called \"Pokemon Go\" but I couldn't find it in the Play Store, so I went to the website and downloaded it there. It turned out to be a virus.\n","\n","4. I wanted to watch some videos on YouTube, but I couldn't find them because they were blocked by my school's firewall. So I decided to go to another site where I could watch them without being blocked. But when I clicked on the link, it took me to a page with ads all over it! And then it said \"You must be logged into Google Chrome before continuing.\" So now I'm stuck here forever...\n","\n","5. I had this really cool idea for a new app called \"The Best App Ever!\" but I forgot what it was called so\n"]}],"source":["device = torch.device('cuda')\n","\n","eval_prompt = \"Here's the list of why I'm a huge dumbass :\"\n","\n","model_input = tokenizer(eval_prompt, return_tensors=\"pt\").to(device)\n","\n","model.eval()\n","with torch.no_grad():\n"," print(tokenizer.decode(model.generate(**model_input, max_new_tokens=256, repetition_penalty=1.15)[0], skip_special_tokens=True))\n"]},{"cell_type":"code","execution_count":14,"metadata":{"execution":{"iopub.execute_input":"2024-02-16T14:41:24.323504Z","iopub.status.busy":"2024-02-16T14:41:24.322852Z","iopub.status.idle":"2024-02-16T14:41:24.344105Z","shell.execute_reply":"2024-02-16T14:41:24.343201Z","shell.execute_reply.started":"2024-02-16T14:41:24.323471Z"},"trusted":true},"outputs":[{"data":{"application/vnd.jupyter.widget-view+json":{"model_id":"53123bc60df54693b6a38e79729413d3","version_major":2,"version_minor":0},"text/plain":["VBox(children=(HTML(value='<center> 
<img\\nsrc=https://huggingface.co/front/assets/huggingface_logo-noborder.sv…"]},"metadata":{},"output_type":"display_data"}],"source":["from huggingface_hub import notebook_login\n","notebook_login()"]},{"cell_type":"code","execution_count":15,"metadata":{"execution":{"iopub.execute_input":"2024-02-16T14:41:52.798583Z","iopub.status.busy":"2024-02-16T14:41:52.797614Z","iopub.status.idle":"2024-02-16T14:42:46.024533Z","shell.execute_reply":"2024-02-16T14:42:46.023544Z","shell.execute_reply.started":"2024-02-16T14:41:52.798540Z"},"trusted":true},"outputs":[{"data":{"application/vnd.jupyter.widget-view+json":{"model_id":"75bc17262bd34c9da33d064a084d626c","version_major":2,"version_minor":0},"text/plain":["README.md: 0%| | 0.00/1.23k [00:00<?, ?B/s]"]},"metadata":{},"output_type":"display_data"},{"name":"stderr","output_type":"stream","text":["/opt/conda/lib/python3.10/site-packages/transformers/integrations/peft.py:391: FutureWarning: The `active_adapter` method is deprecated and will be removed in a future version.\n"," warnings.warn(\n","/opt/conda/lib/python3.10/site-packages/peft/utils/save_and_load.py:134: UserWarning: Setting `save_embedding_layers` to `True` as embedding layers found in `target_modules`.\n"," warnings.warn(\"Setting `save_embedding_layers` to `True` as embedding layers found in `target_modules`.\")\n"]},{"data":{"application/vnd.jupyter.widget-view+json":{"model_id":"e3d71a0010454a67a271f19ac9a98067","version_major":2,"version_minor":0},"text/plain":["adapter_model.safetensors: 0%| | 0.00/600M [00:00<?, ?B/s]"]},"metadata":{},"output_type":"display_data"},{"data":{"text/plain":["CommitInfo(commit_url='https://huggingface.co/FloVolo/mistral-flo-finetune-2-T4/commit/39736c38b46ed3ca5873b35c54934424de4a443a', commit_message='Upload MistralForCausalLM', commit_description='', oid='39736c38b46ed3ca5873b35c54934424de4a443a', pr_url=None, pr_revision=None, 
pr_num=None)"]},"execution_count":15,"metadata":{},"output_type":"execute_result"}],"source":["model.push_to_hub('FloVolo/mistral-flo-finetune-2-T4')"]},{"cell_type":"code","execution_count":null,"metadata":{},"outputs":[],"source":[]}],"metadata":{"kaggle":{"accelerator":"gpu","dataSources":[{"datasetId":4446164,"sourceId":7630808,"sourceType":"datasetVersion"}],"dockerImageVersionId":30648,"isGpuEnabled":true,"isInternetEnabled":true,"language":"python","sourceType":"notebook"},"kernelspec":{"display_name":"Python 3","language":"python","name":"python3"},"language_info":{"codemirror_mode":{"name":"ipython","version":3},"file_extension":".py","mimetype":"text/x-python","name":"python","nbconvert_exporter":"python","pygments_lexer":"ipython3","version":"3.10.13"}},"nbformat":4,"nbformat_minor":4}


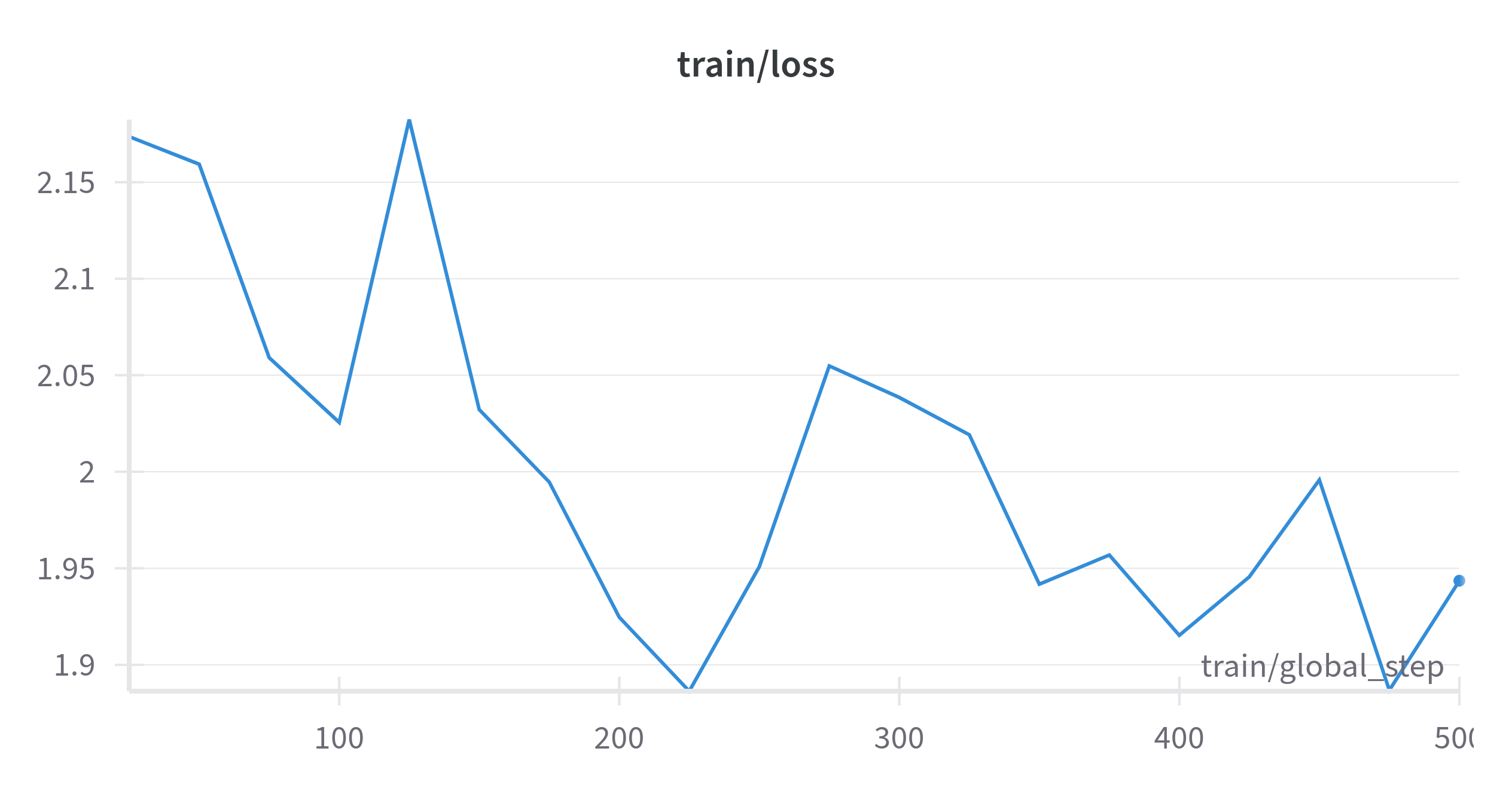

Loss_during_training.png

ADDED


app.py

ADDED


@@ -0,0 +1,17 @@


+import gradio as gr
+from transformers import pipeline
+
+#Calling the pipeline for text generation
+generator = pipeline('text-generation', model='FloVolo/mistral-flo-finetune-2-T4')
+
+def generation_text(text):
+    return generator(text, max_length=250, do_sample=False)[0]['generated_text']
+
+
+demo = gr.Interface(
+    fn=generation_text,
+    inputs=["text"],
+    outputs=["text"],
+)
+
+demo.launch()
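
One detail in `generation_text` may be worth a second look: in transformers, `max_length` counts the prompt tokens together with the generated ones, while `max_new_tokens` bounds only the continuation. The sketch below is not part of this commit. It wraps any pipeline-like callable so that the budget applies to new tokens only. `make_generation_fn` and `fake_generator` are hypothetical names, and the stand-in generator exists only so the example runs without downloading the model.

```python
from typing import Callable, Dict, List


def make_generation_fn(
    generator: Callable[..., List[Dict[str, str]]],
    max_new_tokens: int = 250,
) -> Callable[[str], str]:
    """Wrap a text-generation callable so the token budget excludes the prompt."""

    def generation_text(text: str) -> str:
        # max_new_tokens limits only the continuation, so a long prompt
        # does not use up the whole generation budget.
        outputs = generator(text, max_new_tokens=max_new_tokens, do_sample=False)
        return outputs[0]["generated_text"]

    return generation_text


def fake_generator(text: str, **kwargs) -> List[Dict[str, str]]:
    # Hypothetical stand-in that mimics the return shape of a
    # transformers text-generation pipeline: a list of dicts.
    return [{"generated_text": text + " ..."}]


generation_text = make_generation_fn(fake_generator, max_new_tokens=32)
print(generation_text("Once upon a time"))
```

With the real model, the object returned by `pipeline('text-generation', model='FloVolo/mistral-flo-finetune-2-T4')` could be passed to `make_generation_fn`, and the resulting function used as `fn` in `gr.Interface`.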