gte-multilingual-base

The gte-multilingual-base model is the latest in the GTE (General Text Embedding) family of models, featuring several key attributes:

- High Performance: Achieves state-of-the-art (SOTA) results in multilingual retrieval tasks and multi-task representation model evaluations when compared to models of similar size.

- Training Architecture: Built on an encoder-only transformer architecture, resulting in a smaller model size. Unlike previous models based on decoder-only LLM architectures (e.g., gte-qwen2-1.5b-instruct), this model has lower hardware requirements for inference and offers a 10x increase in inference speed.

- Long Context: Supports text lengths up to 8192 tokens.

- Multilingual Capability: Supports over 70 languages.

- Elastic Dense Embedding: Supports elastic (variable-dimension) dense output representations while maintaining effectiveness on downstream tasks, which significantly reduces storage costs and improves execution efficiency.

- Sparse Vectors: In addition to dense representations, it can also generate sparse vectors.
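The elastic dense embedding idea can be illustrated with a minimal sketch, assuming a shorter representation is obtained by keeping the leading components of the full vector and re-normalizing to unit length. The function name `truncate_and_normalize` and the toy 8-dimensional vector are hypothetical and chosen only for illustration; real outputs of this model have 768 dimensions.

```python
import math

def truncate_and_normalize(vector, dimension):
    """Keep the first `dimension` components and rescale to unit L2 norm."""
    if not 1 <= dimension <= len(vector):
        raise ValueError("dimension must be between 1 and len(vector)")
    head = vector[:dimension]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return list(head)  # an all-zero vector cannot be normalized
    return [x / norm for x in head]

# Toy 8-dimensional "embedding" (hypothetical values).
full = [0.5, -0.5, 0.5, 0.5, 0.1, -0.2, 0.3, 0.0]
short = truncate_and_normalize(full, 4)
print(short)  # [0.5, -0.5, 0.5, 0.5]

# Storage for float32 vectors scales linearly with the dimension.
bytes_full = 768 * 4   # 3072 bytes per vector
bytes_small = 128 * 4  # 512 bytes per vector
print(bytes_full // bytes_small)  # 6
```

Under these assumptions, storing 128-dimensional vectors instead of 768-dimensional ones takes one sixth of the space; the effect on retrieval quality has to be measured on the task at hand.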

Model Information

- Model Size: 305M

- Embedding Dimension: 768

- Max Input Tokens: 8192
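These figures allow a rough back-of-the-envelope estimate of memory and storage needs. The sketch below assumes that "305M" is a parameter count and that weights and vectors are stored as plain float32 or float16 values; it ignores activations, framework overhead, and index structures, so the results are lower bounds rather than measurements.

```python
PARAMS = 305_000_000  # "Model Size: 305M", read here as a parameter count (assumption)
EMBED_DIM = 768       # "Embedding Dimension: 768"

def weights_megabytes(num_params, bytes_per_param):
    """Approximate size of the raw weights in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1e6

def index_gigabytes(num_vectors, dim, bytes_per_value=4):
    """Approximate size of a flat vector store in gigabytes (10**9 bytes)."""
    return num_vectors * dim * bytes_per_value / 1e9

print(weights_megabytes(PARAMS, 4))           # 1220.0 (float32)
print(weights_megabytes(PARAMS, 2))           # 610.0 (float16)
print(index_gigabytes(1_000_000, EMBED_DIM))  # 3.072 (one million float32 vectors)
```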

Usage

- It is recommended to install xformers and enable unpadding for acceleration; see enable-unpadding-and-xformers.

- How to use it offline: new-impl/discussions/2

- How to use with TEI: refs/pr/7

Get Dense Embeddings with Transformers

# Requires transformers>=4.36.0

import torch.nn.functional as F

from transformers import AutoModel, AutoTokenizer

input_texts = [

"what is the capital of China?",

"how to implement quick sort in python?",

"北京",

"快排算法介绍"

]

model_name_or_path = 'Alibaba-NLP/gte-multilingual-base'

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path)

model = AutoModel.from_pretrained(model_name_or_path, trust_remote_code=True)

# Tokenize the input texts

batch_dict = tokenizer(input_texts, max_length=8192, padding=True, truncation=True, return_tensors='pt')

outputs = model(**batch_dict)

dimension = 768 # Output embedding dimension; should be in [128, 768]

# Take the [CLS] vector of every text and keep its first `dimension` features

embeddings = outputs.last_hidden_state[:, 0, :dimension]

embeddings = F.normalize(embeddings, p=2, dim=1)

# Cosine similarity of the first text against the others (embeddings are L2-normalized)

scores = embeddings[:1] @ embeddings[1:].T

print(scores.tolist())

# [[0.3016996383666992, 0.7503870129585266, 0.3203084468841553]]
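The post-processing steps in this example (reduce the feature dimension, L2-normalize, score by dot product) can be sketched without any model, using nested lists as a stand-in for a (batch, hidden) tensor. The helper names and the toy values are hypothetical. The point to note is that dimension reduction applies to the features of each text, not to the batch axis.

```python
import math

def truncate_rows(batch, dimension):
    """Keep the first `dimension` features of every row (tensor analogue: x[:, :dimension])."""
    return [row[:dimension] for row in batch]

def l2_normalize_rows(batch):
    """Scale every row to unit L2 norm; all-zero rows are left unchanged."""
    normalized = []
    for row in batch:
        norm = math.sqrt(sum(v * v for v in row)) or 1.0
        normalized.append([v / norm for v in row])
    return normalized

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Toy batch: 3 texts, 4 features each (stand-in for the [CLS] vectors).
batch = [
    [3.0, 4.0, 1.0, 2.0],   # query
    [6.0, 8.0, 0.0, 5.0],   # document A
    [4.0, -3.0, 7.0, 1.0],  # document B
]

reduced = l2_normalize_rows(truncate_rows(batch, 2))
scores = [dot(reduced[0], doc) for doc in reduced[1:]]

print(len(reduced), len(reduced[0]))   # 3 2: all texts kept, features reduced
print([round(s, 6) for s in scores])   # [1.0, 0.0]
print(len(batch[:2]))                  # 2: slicing the batch axis drops a text instead
```

Because the rows are unit length, the dot product equals the cosine similarity, so the scores fall in [-1, 1].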

Use with sentence-transformers

# Requires sentence-transformers>=3.0.0

from sentence_transformers import SentenceTransformer

input_texts = [

"what is the capital of China?",

"how to implement quick sort in python?",

"北京",

"快排算法介绍"

]

model_name_or_path="Alibaba-NLP/gte-multilingual-base"

model = SentenceTransformer(model_name_or_path, trust_remote_code=True)

embeddings = model.encode(input_texts, normalize_embeddings=True) # embeddings.shape (4, 768)

# sim scores

scores = model.similarity(embeddings[:1], embeddings[1:])

print(scores.tolist())

# [[0.301699697971344, 0.7503870129585266, 0.32030850648880005]]

Use with infinity

Run the model with Infinity (MIT licensed) via Docker:

docker run --gpus all -v $PWD/data:/app/.cache -p "7997":"7997" \

michaelf34/infinity:0.0.69 \

v2 --model-id Alibaba-NLP/gte-multilingual-base --revision "main" --dtype float16 --batch-size 32 --device cuda --engine torch --port 7997
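Once the container is running, embeddings can be requested over HTTP. The following is a minimal client sketch using only the standard library. The port comes from the docker command above; the `/embeddings` route and the OpenAI-style request and response shapes are assumptions that should be checked against the documentation of the Infinity version in use. The helper names are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://localhost:7997"  # port taken from the docker command
ENDPOINT = "/embeddings"            # assumed OpenAI-style route; verify for your server version

def build_embedding_request(texts, model="Alibaba-NLP/gte-multilingual-base"):
    """Build (but do not send) a JSON POST request for a list of texts."""
    payload = json.dumps({"model": model, "input": texts}).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + ENDPOINT,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def fetch_embeddings(texts):
    """Send the request; requires the container to be up and reachable."""
    with urllib.request.urlopen(build_embedding_request(texts), timeout=30) as resp:
        body = json.load(resp)
    # Assumed response shape: {"data": [{"embedding": [...]}, ...]}
    return [item["embedding"] for item in body["data"]]

request = build_embedding_request(["what is the capital of China?", "北京"])
print(request.full_url)      # http://localhost:7997/embeddings
print(request.get_method())  # POST
```

Calling `fetch_embeddings([...])` performs the actual network round trip; building the request separately makes the payload easy to inspect without a running server.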

Use with custom code to get dense embeddings and sparse token weights

# You can find the script gte_embedding.py in https://huggingface.co/Alibaba-NLP/gte-multilingual-base/blob/main/scripts/gte_embedding.py

from gte_embedding import GTEEmbeddidng

model_name_or_path = 'Alibaba-NLP/gte-multilingual-base'

model = GTEEmbeddidng(model_name_or_path)

query = "中国的首都在哪儿"

docs = [

"what is the capital of China?",

"how to implement quick sort in python?",

"北京",

"快排算法介绍"

]

embs = model.encode(docs, return_dense=True, return_sparse=True)

print('dense_embeddings vecs', embs['dense_embeddings'])

print('token_weights', embs['token_weights'])

pairs = [(query, doc) for doc in docs]

dense_scores = model.compute_scores(pairs, dense_weight=1.0, sparse_weight=0.0)

sparse_scores = model.compute_scores(pairs, dense_weight=0.0, sparse_weight=1.0)

hybrid_scores = model.compute_scores(pairs, dense_weight=1.0, sparse_weight=0.3)

print('dense_scores', dense_scores)

print('sparse_scores', sparse_scores)

print('hybrid_scores', hybrid_scores)

# dense_scores [0.85302734375, 0.257568359375, 0.76953125, 0.325439453125]

# sparse_scores [0.0, 0.0, 4.600879669189453, 1.570279598236084]

# hybrid_scores [0.85302734375, 0.257568359375, 2.1497951507568356, 0.7965233325958252]
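The printed hybrid scores are consistent with a weighted sum of the dense and sparse scores. The sketch below recomputes them from the printed values and compares the resulting rankings. `combine` and `ranking` are hypothetical helpers, not part of gte_embedding.py, and the weighted-sum form is an assumption inferred from the numbers rather than taken from the script's source.

```python
# Scores copied from the example output.
dense_scores = [0.85302734375, 0.257568359375, 0.76953125, 0.325439453125]
sparse_scores = [0.0, 0.0, 4.600879669189453, 1.570279598236084]
reported_hybrid = [0.85302734375, 0.257568359375, 2.1497951507568356, 0.7965233325958252]

def combine(dense, sparse, dense_weight=1.0, sparse_weight=0.3):
    """Hypothetical helper: weighted sum of per-document dense and sparse scores."""
    return [dense_weight * d + sparse_weight * s for d, s in zip(dense, sparse)]

def ranking(scores):
    """Document indices sorted from highest to lowest score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

hybrid = combine(dense_scores, sparse_scores)
matches = all(abs(h - r) < 1e-6 for h, r in zip(hybrid, reported_hybrid))

print(matches)                # True
print(ranking(dense_scores))  # [0, 2, 3, 1]
print(ranking(hybrid))        # [2, 0, 3, 1]
```

With the sparse term included, the document "北京" (index 2) moves ahead of the English question (index 0), which shows how the sparse weight can change the final ordering.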

Evaluation

We validated the performance of the gte-multilingual-base model on multiple downstream tasks, including multilingual retrieval, cross-lingual retrieval, long text retrieval, and general text representation evaluation on the MTEB Leaderboard, among others.

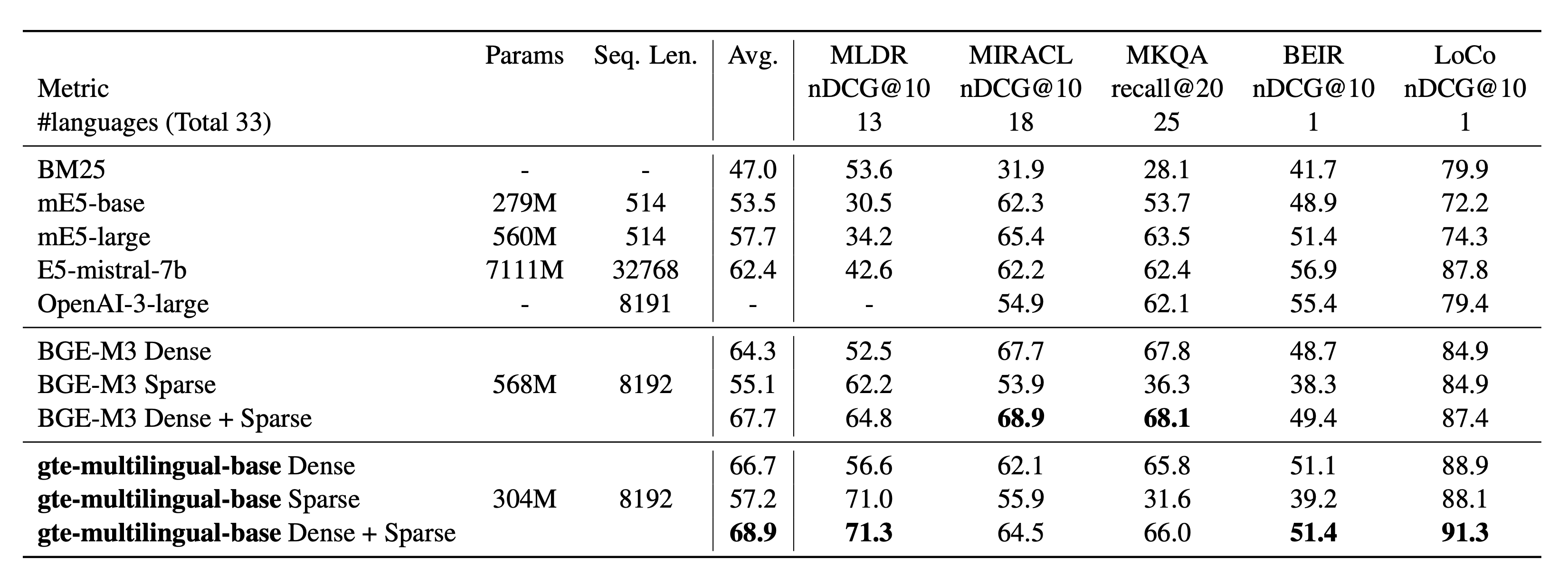

Retrieval Task

Retrieval results on MIRACL and MLDR (multilingual), MKQA (crosslingual), BEIR and LoCo (English).

- Detailed results on MLDR

- Detailed results on LoCo

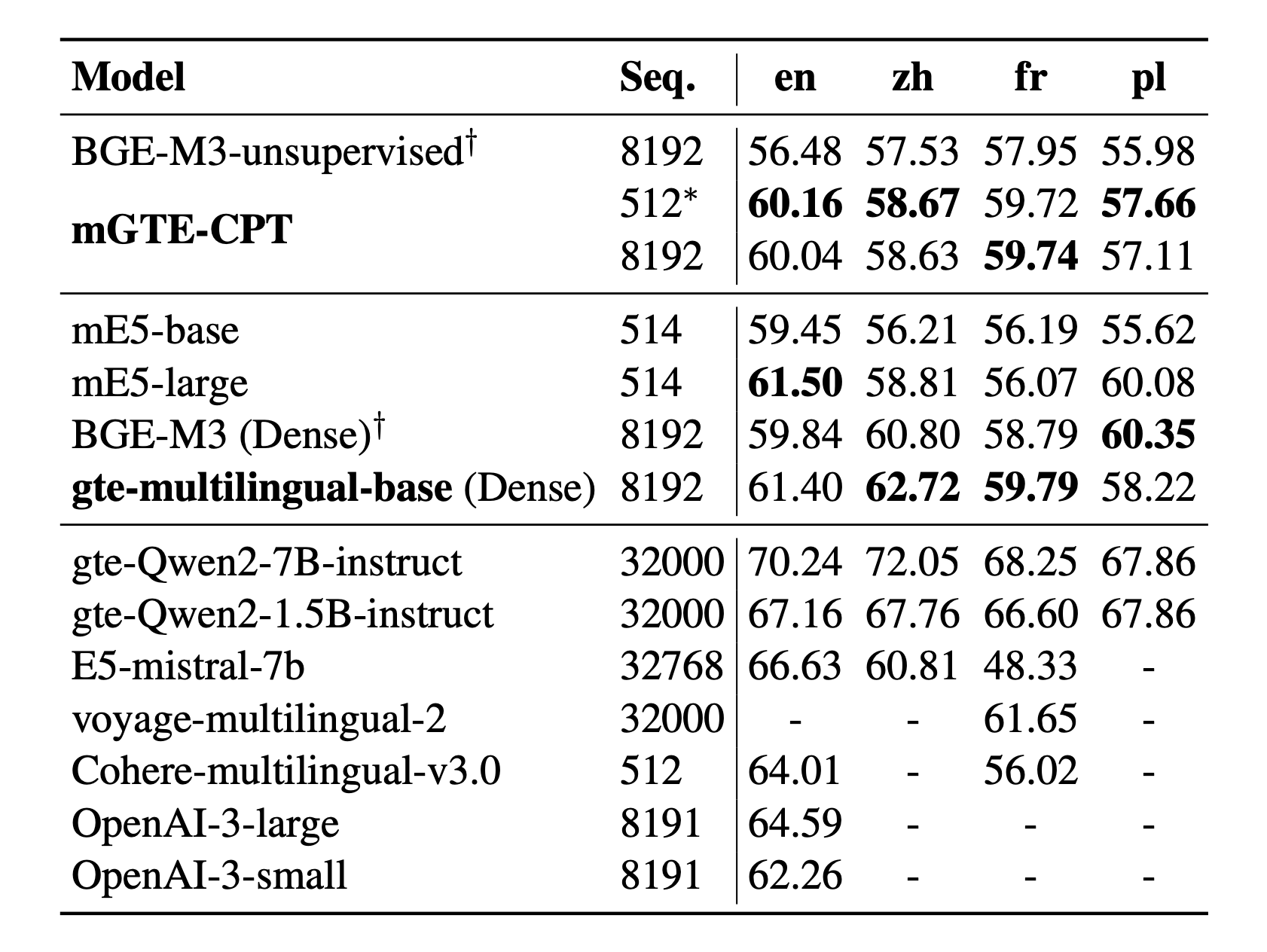

MTEB

Results on MTEB English, Chinese, French, and Polish.

More detailed experimental results can be found in the paper.

Cloud API Services

In addition to the open-source GTE series models, GTE series models are also available as commercial API services on Alibaba Cloud.

- Embedding Models: Three versions of the text embedding models are available: text-embedding-v1/v2/v3, with v3 being the latest API service.

- ReRank Models: The gte-rerank model service is available.

Note that the models behind the commercial APIs are not entirely identical to the open-source models.

Citation

If you find our paper or models helpful, please consider citing:

@misc{zhang2024mgte,

title={mGTE: Generalized Long-Context Text Representation and Reranking Models for Multilingual Text Retrieval},

author={Xin Zhang and Yanzhao Zhang and Dingkun Long and Wen Xie and Ziqi Dai and Jialong Tang and Huan Lin and Baosong Yang and Pengjun Xie and Fei Huang and Meishan Zhang and Wenjie Li and Min Zhang},

year={2024},

eprint={2407.19669},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2407.19669},

}

Evaluation results

Self-reported results on MTEB:

- 8TagsClustering (test set), v_measure: 33.667

- AFQMC (validation set), cos_sim_spearman: 43.548

- ATEC (test set), cos_sim_spearman: 48.912

- AllegroReviews (test set), accuracy: 41.690

- AlloProfClusteringP2P (test set), v_measure: 54.202

- AlloProfClusteringS2S (test set), v_measure: 44.341

- AlloprofReranking (test set), map: 64.915

- AlloprofRetrieval (test set), ndcg_at_10: 53.638

- AmazonCounterfactualClassification (en) (test set), accuracy: 75.955

- AmazonPolarityClassification (test set), accuracy: 80.718
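For readers unfamiliar with the ndcg_at_10 metric listed for the retrieval task, the following is a minimal sketch of the standard nDCG@k formula for a single query. It is a simplified illustration, not the MTEB implementation: the ideal ordering is computed only from the relevance labels of the ranked list passed in, and the assumption is that reported values are this quantity averaged over queries and scaled to 0-100.

```python
import math

def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain over the top-k results (ranks start at 1)."""
    return sum(rel / math.log2(rank + 1)
               for rank, rel in enumerate(relevances[:k], start=1))

def ndcg_at_k(relevances, k=10):
    """DCG divided by the DCG of the best possible ordering of the same labels."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0

# Relevance labels of retrieved documents, in ranked order (hypothetical query).
ranked_relevance = [1, 0, 1]
print(round(ndcg_at_k(ranked_relevance), 4))  # 0.9197
print(ndcg_at_k([1, 1, 0]))                   # 1.0 (already in ideal order)
```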